Difference between revisions of "Timeline of Center for Security and Emerging Technology"


| Line 81: | Line 102: | ||

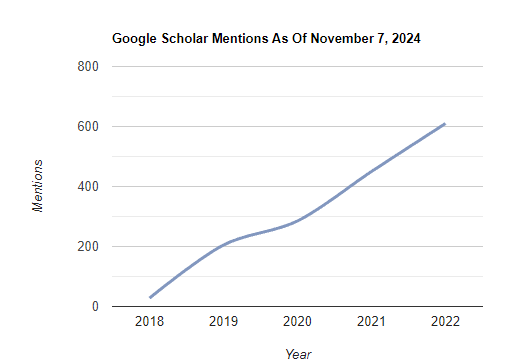

=== Google Scholar === | === Google Scholar === | ||

| − | The following table summarizes per-year mentions on Google Scholar as of | + | The following table summarizes per-year mentions on Google Scholar as of November 5, 2024. |

| + | |||

| + | {| class="sortable wikitable" | ||

| + | ! Year | ||

| + | ! "Center for Security and Emerging Technology" | ||

| + | |- | ||

| + | | 2019 || 28 | ||

| + | |- | ||

| + | | 2020 || 205 | ||

| + | |- | ||

| + | | 2021 || 285 | ||

| + | |- | ||

| + | | 2022 || 450 | ||

| + | |- | ||

| + | | 2023 || 610 | ||

| + | |- | ||

| + | |} | ||

| + | |||

| + | [[File:CSET Googlescholar.png|thumb|center|700px]] | ||

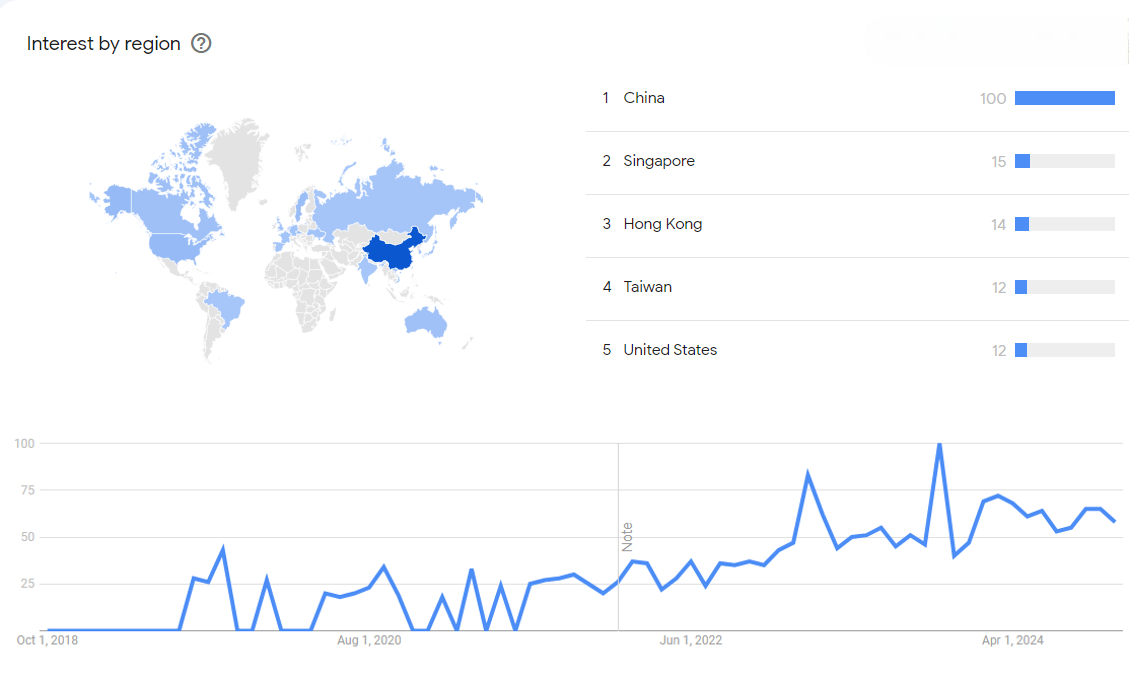

=== Google Trends === | === Google Trends === | ||

| + | The image below shows {{w|Google Trends}} data for Center for Security and Emerging Technology (Research institute), from January 2018 to November 2024, when the screenshot was taken. Interest is also ranked by country and displayed on world map.<ref>{{cite web |title= Center for Security and Emerging Technology (|url=https://trends.google.com/trends/explore?date=2018-10-10%202024-11-10&q=%2Fg%2F11h4f0lqb5&hl=en |website=Google Trends |access-date=10 November 2024}}</ref> | ||

| + | |||

| + | [[File:CSET Trends.jpg|thumb|center|600px]] | ||

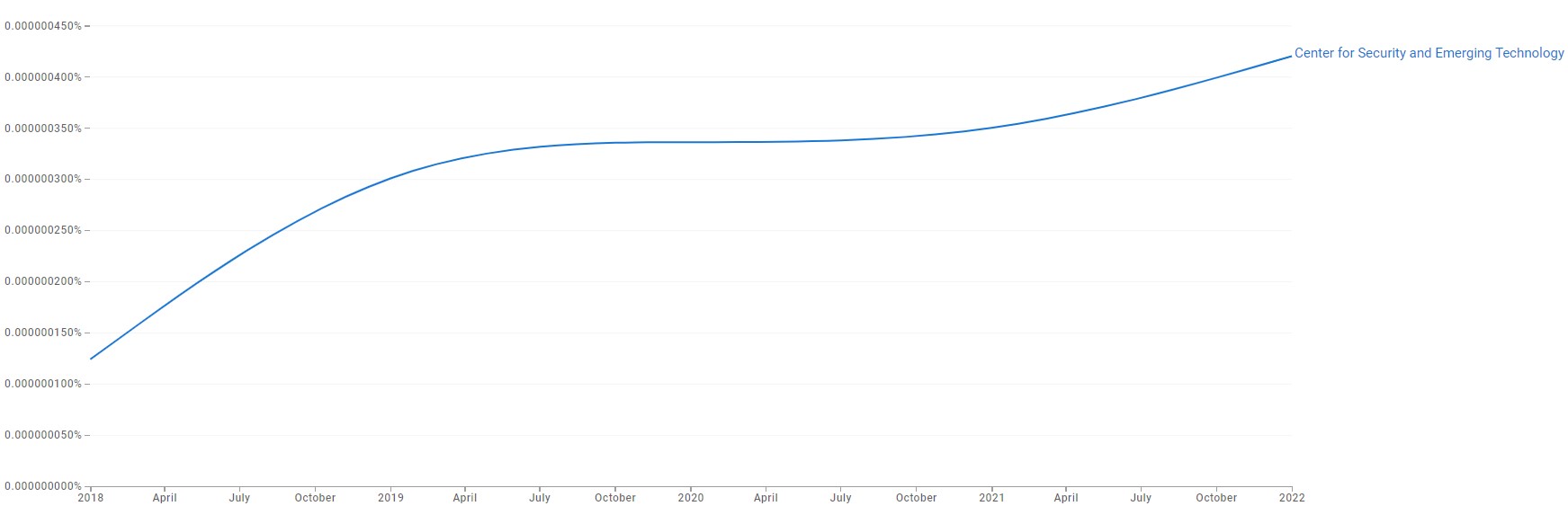

=== Google Ngram Viewer === | === Google Ngram Viewer === | ||

| + | The chart below shows {{w|Google Ngram Viewer}} data for Center for Security and Emerging Technology, from 2018 to 2022.<ref>{{cite web |title=Center for Security and Emerging Technology |url=https://books.google.com/ngrams/graph?content=Center+for+Security+and+Emerging+Technology&year_start=2018&year_end=2022&corpus=en&smoothing=3&case_insensitive=false|website=books.google.com |access-date=10 November 2024 |language=en}}</ref> | ||

| + | |||

| + | [[File:CSET Ngram.jpg|thumb|center|700px]] | ||

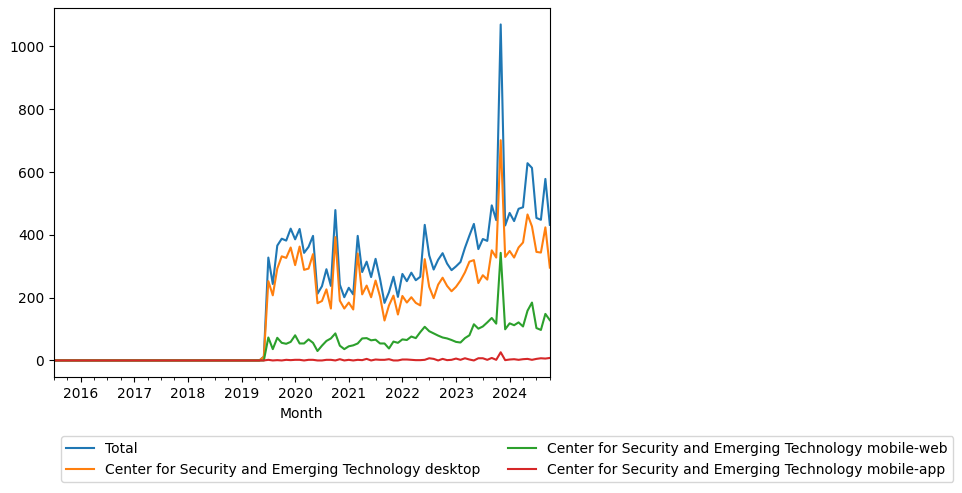

===Wikipedia pageviews for CSET page=== | ===Wikipedia pageviews for CSET page=== | ||

| + | The following plots pageviews for the {{W|Center for Security and Emerging Technolo}} Wikipedia page. The image generated on [https://wikipediaviews.org/displayviewsformultiplemonths.php]. | ||

| + | |||

| + | [[File:CSET Wikipedia Views.png|thumb|center|550px]] | ||

==External links== | ==External links== | ||

Latest revision as of 22:00, 29 December 2024

This is a timeline of the Center for Security and Emerging Technology (CSET).


Sample questions

This section provides some sample questions for readers who may not have a clear goal when browsing the timeline. It serves as a guide to help readers approach the page with more purpose and understand the timeline’s significance.

Here are some interesting questions this timeline can answer:

- What have CSET's main research focuses been since its inception?

- Which key reports and publications released by CSET have shaped policy discussions on emerging technology?

- How has CSET contributed to policy recommendations related to AI and national security?

- What collaborations and partnerships has CSET formed to advance its mission?

For more information on evaluating the timeline's coverage, see Representativeness of events in timelines.

Big picture

| Time period | Development summary | More details |

|---|---|---|

| 2014 to 2019 | Early Foundations of CSET | Jason Matheny’s leadership at IARPA and his focus on AI ethics, security, and research lay the groundwork for the creation of the Center for Security and Emerging Technology (CSET). Discussions with policymakers and academic collaborators highlight the need for a dedicated U.S.-based think tank addressing AI's societal impacts. CSET officially launches in 2019 with a $55 million grant and a mission of bridging the gap between technical advances and policy needs. |

| 2019 to 2021 | Establishing Credibility and Influence | CSET gains prominence with reports such as "The Global AI Talent Landscape" and analyses of China's AI developments, emphasizing the strategic importance of AI talent retention and ethical military applications. Collaborations with the NSCAI further shape U.S. AI policy and national security strategies, solidifying CSET’s role as a key player in AI policy. |

| 2021 to 2022 | International Collaboration and Responsible AI | Under new leadership, CSET broadens its research scope, producing influential reports on global AI trends, talent migration, and ethical use of AI in defense systems. Its work sparks discussions on creating international treaties to govern autonomous military technologies, positioning it as a leader in international AI policy dialogue. |

| 2023 to 2024 | AI Risk Management and Emerging Challenges | CSET’s research influences U.S. federal guidelines on AI risk management and highlights challenges such as generative AI, cybersecurity threats, and election integrity. Reports like "Securing Critical Infrastructure in the Age of AI" and analyses of AI-driven misinformation demonstrate CSET’s continued focus on mitigating risks and adapting to emerging AI applications. |

Full timeline

Inclusion Criteria

Here are the inclusion criteria for various event types:

- For "Publication", the intention is to include the most notable publications. This usually means that if a publication has been featured by CSET itself or has been discussed by some outside sources, it is included. There are too many publications to include all of them.

- For "Website", the intention is to include all websites associated with CSET. There are not that many such websites, so this is doable.

- For "Staff", the intention is to include all Research Fellows and leadership positions.

- For "Workshop" and "Conference", the intention is to include all events organized or hosted by CSET, but not events where CSET staff only attended or only helped with organizing.

- For "Internal review", the intention is to include all annual review documents.

- For "External review", the intention is to include all reviews that seem substantive (judged by intuition). For mainstream media articles, only ones that treat CSET at length are included.

- For "Financial", the intention is to include all substantial (say, over $10,000) donations, including aggregated donations and donations of unknown amounts.

- For "Jason Matheny", the intention is to include events sufficient to give a rough overview of Matheny's career prior to the founding of CSET.

- For "Social media", the intention is to include all social media account creations (where the date is known) and Reddit AMAs.

- Events about CSET staff giving policy advice (to e.g. government bodies) are not included, as there are many such events and it is difficult to tell which ones are more important.

- For "Project Announcement" or "Initiatives", the intention is to include announcements of major initiatives and research programs launched by CSET, especially those aimed at training researchers or advancing its research mission.

- For "Collaboration", the intention is to include significant collaborations with other institutions where CSET co-authored reports, conducted joint research, or played a major role in advising.

Timeline

| Year | Month and date | Event type | Domain | Details |

|---|---|---|---|---|

| 2013 | July | Think Tank Formation | Research Initiatives | Early discussions begin about creating a U.S.-focused think tank dedicated to AI safety and emerging technologies. Key figures such as Jason Matheny and collaborators at Open Philanthropy explore funding and organizational models.[1] |

| 2015 | October | Policy Initiative | AI R&D | Matheny co-leads the development of the National AI R&D Strategic Plan for the White House, which sets out a framework for ethical AI research in the U.S. The plan emphasizes responsible AI development, transparency, and risk management, and informs Matheny’s later policy-focused work at CSET.[2] |

| 2016 | April | Recognition | Personal Achievement | Matheny receives the National Intelligence Superior Service Medal and is named one of Foreign Policy's “Top 50 Global Thinkers” for his work on AI, biosecurity, and emerging tech policy. These recognitions establish him as an influential figure in technology policy and set the stage for his later role at CSET.[3][4] |

| 2016–2017 | N/A | Background | AI Safety Research | Rising global concerns around AI ethics and safety spur discussions at institutions like the Future of Humanity Institute (FHI) at Oxford and the Center for Human-Compatible AI (CHAI) at Berkeley. These dialogues emphasize the dual-use nature of AI technologies and underscore a need for U.S.-based policy research focused on these risks. Matheny envisions the Center for Security and Emerging Technology (CSET) as a response.[5][6] |

| 2018 | July | Pre-founding | Think Tank Formation | Recognizing critical gaps in the understanding of AI's societal impacts, Matheny collaborates with academics, policymakers, and tech leaders to build a case for a U.S.-focused think tank. Helen Toner, then at Open Philanthropy, contributes by aligning funding priorities for AI safety and policy research, helping shape the blueprint for CSET.[7][8] |

| 2019 | January 15 | Founding | CSET Establishment | The Center for Security and Emerging Technology (CSET) is officially launched at Georgetown University’s Walsh School of Foreign Service, with a $55 million grant from Open Philanthropy. Matheny, as the founding director, envisions CSET as a center that translates cutting-edge technology research into insights for U.S. national security. Founding members include Helen Toner as Director of Strategy and Dewey Murdick as Director of Data Science.[9][10] |

| 2019 | January | Leadership | Strategy and Data Science | Helen Toner joins as Director of Strategy, leveraging her expertise in AI policy and China’s technological landscape. Dewey Murdick, formerly Chief Analytics Officer at the Department of Homeland Security, becomes Director of Data Science. Together, they enhance CSET’s ability to conduct detailed, data-driven policy analyses and inform U.S. national security.[11][12] |

| 2019 | July 1 | Report | AI Talent | CSET publishes its first report, "The Global AI Talent Landscape," an analysis of global AI talent distribution and its implications for U.S. national security. The report draws attention from U.S. policymakers and helps establish CSET as a significant voice in AI policy and intelligence research. It also highlights the strategic importance of retaining talent to remain competitive.[13][14] |

| 2020 | March 10 | Expansion | Research Portfolio | CSET significantly broadens its research portfolio to address emerging challenges in AI talent migration, global investment trends in AI, and applications of AI in biotechnology. Helen Toner leads research on China's AI ecosystem, helping policymakers navigate critical U.S.-China technology competition.[15][16] |

| 2020 | July 15 | Report | China Initiative | CSET publishes "China’s AI Development: Implications for the United States," analyzing China’s rapid advances in AI and the strategic threat these advances pose to U.S. dominance in AI technology. The report draws significant attention from the Department of Defense and other national security agencies.[17][18] |

| 2020 | September 15 | Report | Pandemic Preparedness | In the wake of the COVID-19 pandemic, CSET releases "Artificial Intelligence and Pandemics: Using AI to Predict and Combat Disease." This report explores how AI can forecast disease outbreaks, monitor infection rates, and enhance healthcare logistics. It sparks discussions about AI’s role in healthcare resilience.[19][20] |

| 2020 | November 30 | Policy Impact | AI Safety and Governance | CSET’s research on AI safety and national security begins to influence U.S. policy discussions, particularly on AI ethics and governance. The center's work contributes to early drafts of AI policy frameworks for government use that emphasize transparency, accountability, and ethical considerations in AI development.[21][22] |

| 2020 | December 20 | Collaboration | U.S. AI Strategy | CSET collaborates with the National Security Commission on Artificial Intelligence (NSCAI) to draft recommendations for a comprehensive U.S. AI strategy. This work includes strategic investment recommendations in AI talent, technology, and infrastructure to ensure U.S. leadership in critical AI capabilities, with a strong focus on defense and economic competitiveness.[23][24] |

| 2021 | January 20 | Leadership Transition | Organizational Leadership | Jason Matheny steps down as Director of CSET to join the Biden administration's White House staff; he had also served as a commissioner on the National Security Commission on Artificial Intelligence (NSCAI). Dewey Murdick, formerly CSET’s Director of Data Science, succeeds Matheny as Director. Murdick continues to expand CSET’s focus on AI in national security, working closely with policymakers to advance responsible AI development.[25][26] |

| 2021 | February 1 | National Report | U.S. AI Strategy | Jason Matheny plays a key role in producing the NSCAI’s final report, which recommends a comprehensive U.S. AI strategy. The report emphasizes talent retention, robust funding for AI research, and the need for ethical guidelines in defense applications of AI. These recommendations influence U.S. policymakers and underscore the strategic importance of AI in national security.[27][28] |

| 2021 | August 1 | Collaboration | Military AI | CSET collaborates with international organizations to assess global trends in AI, focusing on military applications. Reports led by Helen Toner emphasize the importance of ethical guidelines to prevent misuse of AI in autonomous weapon systems and feed into international AI policy discussions.[29][30] |

| 2022 | February 20 | Report | AI Talent | CSET releases "Keeping Top Talent: AI Talent Flows to and from the U.S.," which examines global AI talent migration patterns and stresses the need for U.S. policies to retain top AI researchers. The report, highlighting competition from China and Europe, draws attention to the critical role of talent in maintaining U.S. technological leadership.[31][32] |

| 2022 | July 12 | Report | China Initiative | CSET publishes "Harnessing AI: How China’s AI Ambitions Pose Challenges to U.S. Leadership," a report suggesting that China’s advancements in AI could threaten U.S. dominance in defense and intelligence. The report calls for increased U.S. investment in AI and advocates for proactive policy measures to safeguard U.S. interests.[33][34] |

| 2022 | October 18 | Report | Responsible AI | Responding to growing concerns about ethical AI, CSET publishes "Responsible AI in Defense: Recommendations for U.S. Policy." The report advocates for clear ethical frameworks to ensure accountability and transparency in autonomous military systems. It receives attention from the Department of Defense and contributes to ongoing discussions on responsible AI governance.[35][36] |

| 2023 | February 15 | Policy Impact | AI Governance | CSET’s research informs the Biden administration’s Executive Order on AI Risk Management. The order establishes federal guidelines to promote responsible AI development, emphasizing AI safety, transparency, and accountability. This policy milestone underscores CSET’s impact on federal AI governance.[37][38] |

| 2023 | August 10 | Report | Military AI | CSET releases "AI and Autonomous Weapons Systems," a detailed report on AI’s role in autonomous weapons. The report stresses the need for international collaboration to prevent misuse and advocates new international treaties to govern the ethical use of military AI.[39][40] |

| 2024 | February 20 | Research | Cybersecurity | CSET releases "Securing Critical Infrastructure in the Age of AI," which addresses the growing risk of AI-driven cyber threats to essential systems such as energy grids and transportation networks. The report also examines how AI can help mitigate these vulnerabilities.[41] |

| 2024 | September 26 | Research | AI Red-Teaming | CSET publishes "Revisiting AI Red-Teaming," discussing findings from the AI red-teaming exercises held at DEF CON 2024. Red-teaming tests the safety of AI systems through adversarial prompts and simulated attacks.[42][43] |

Numerical and visual data

Google Scholar

The following table summarizes per-year mentions on Google Scholar as of November 5, 2024.

| Year | Mentions of "Center for Security and Emerging Technology" |
|---|---|
| 2019 | 28 |
| 2020 | 205 |
| 2021 | 285 |
| 2022 | 450 |
| 2023 | 610 |

Google Trends

The image below shows Google Trends data for Center for Security and Emerging Technology (Research institute), from January 2018 to November 2024, when the screenshot was taken. Interest is also ranked by country and displayed on a world map.[44]

Google Ngram Viewer

The chart below shows Google Ngram Viewer data for Center for Security and Emerging Technology, from 2018 to 2022.[45]

Wikipedia pageviews for CSET page

The following plot shows pageviews for the Center for Security and Emerging Technology Wikipedia page. The image was generated on [1].

External links

References

- ↑ "Open Philanthropy AI Policy Initiatives". Retrieved October 31, 2024.

- ↑ "National AI R&D Strategic Plan". Retrieved October 31, 2024.

- ↑ "Jason Matheny at CNAS". Retrieved October 31, 2024.

- ↑ "Jason Matheny's Achievements". Retrieved October 31, 2024.

- ↑ "Georgetown Announces CSET Launch". Retrieved October 31, 2024.

- ↑ "FHI and CHAI Statements on AI Safety". Retrieved October 31, 2024.

- ↑ "Georgetown Announces CSET Launch". Retrieved October 31, 2024.

- ↑ "AI and National Security - CNAS Report". Retrieved October 31, 2024.

- ↑ "About CSET". Retrieved October 31, 2024.

- ↑ "Open Philanthropy Grant for CSET". Retrieved October 31, 2024.

- ↑ "Helen Toner Bio". Retrieved October 31, 2024.

- ↑ "Dewey Murdick Bio". Retrieved October 31, 2024.

- ↑ "The Global AI Talent Landscape Report". Retrieved October 31, 2024.

- ↑ "White House Response to CSET's AI Talent Report". Retrieved October 31, 2024.

- ↑ "CSET Research Areas". Retrieved October 31, 2024.

- ↑ "CSET Expands Research Focus". Retrieved October 31, 2024.

- ↑ "China's AI Development: Implications for the U.S.". Retrieved October 31, 2024.

- ↑ "Defense Department's Response to CSET's China AI Report". Retrieved October 31, 2024.

- ↑ "Artificial Intelligence and Pandemics". Retrieved October 31, 2024.

- ↑ "WHO Report on AI in Pandemic Preparedness". Retrieved October 31, 2024.

- ↑ "CSET Research Portal". Retrieved October 31, 2024.

- ↑ "National Security Commission on AI Reports". Retrieved October 31, 2024.

- ↑ "U.S. AI Strategy Recommendations". Retrieved October 31, 2024.

- ↑ "NSCAI White Paper on U.S. AI Strategy". Retrieved October 31, 2024.

- ↑ "Dewey Murdick Bio at CSET". Retrieved October 31, 2024.

- ↑ "NSCAI Official Site". Retrieved October 31, 2024.

- ↑ "NSCAI Final Report". Retrieved October 31, 2024.

- ↑ "Defense Department AI Strategy". Retrieved October 31, 2024.

- ↑ "AI and Military Use Report". Retrieved October 31, 2024.

- ↑ "NATO on AI in Military Applications". Retrieved October 31, 2024.

- ↑ "Keeping Top Talent: AI Talent Flows to and from the U.S.". Retrieved October 31, 2024.

- ↑ "Brookings on AI Talent Retention". Retrieved October 31, 2024.

- ↑ "Harnessing AI: How China's AI Ambitions Pose Challenges to U.S. Leadership". Retrieved October 31, 2024.

- ↑ "CNAS on AI and National Security". Retrieved October 31, 2024.

- ↑ "Responsible AI in Defense". Retrieved October 31, 2024.

- ↑ "DoD on Responsible AI Use". Retrieved October 31, 2024.

- ↑ "Executive Order on AI Risk Management". Retrieved October 31, 2024.

- ↑ "CSET Research Portal". Retrieved October 31, 2024.

- ↑ "AI and Autonomous Weapons Systems Report". Retrieved October 31, 2024.

- ↑ "UN Convention on Certain Conventional Weapons". Retrieved October 31, 2024.

- ↑ "Securing Critical Infrastructure in the Age of AI". Retrieved October 31, 2024.

- ↑ "Revisiting AI Red-Teaming". Retrieved October 31, 2024.

- ↑ "DEF CON 2024 on AI Red-Teaming". Retrieved October 31, 2024.

- ↑ "Center for Security and Emerging Technology (Research institute)". Google Trends. Retrieved November 10, 2024.

- ↑ "Center for Security and Emerging Technology". books.google.com. Retrieved November 10, 2024.