Difference between revisions of "Timeline of Wei Dai publications"

Latest revision as of 21:14, 11 August 2024

This is a timeline of Wei Dai publications. The timeline takes a broad view of publications that includes blog posts and mailing list posts.


Big picture

| Venue | Time period | Details |
|---|---|---|
| Cypherpunks | 1994–1996 | |
| Extropians | 1997–2003 | |
| everything-list | 1998–2010 | |
| SL4 | 2002–2008 | |
| crypto-optimization | 2009 | |
| LessWrong | 2009–present | |
| LessWrong Wiki | 2009 | |

Full timeline

Comment counts are as of February 15, 2018.

For mailing list posts, the main inclusion criterion is that the initial post in a thread is made by Wei. This will probably miss some substantive posts in other threads, but I'm not sure how to quickly identify those posts without spending much more time.

| Year | Month and date | Format | Venue | Title | Summary | Comment count | Word count |
|---|---|---|---|---|---|---|---|
| | | HTML file | Personal website | "Lucas Sequences in Cryptography" | | | |
| | | Text file | Personal website | "two attacks against a PipeNet-like protocol once used by the Freedom service" (no formal title so using description on website) | | | |
| | | Text file | Personal website | "Why good memory could be bad for you: game theoretic analysis of a monopolist with memory" (no formal title so using description on website) | | | |
| | | Text file | Personal website | "Why cleverness could be bad for you: a game where the smarter players lose" (no formal title so using description on website) | | | |
| | | Text file | Personal website | "A really simple interpretation of quantum mechanics" (no formal title so using description on website) | | | |
| | | Text file | Personal website | "How to make more money with your employee stock options at no extra risk" (no formal title so using description on website) | | | |
| | | Text file | Personal website | "What should advanced civilizations do with waste heat? Use black holes as heat sinks." (no formal title so using description on website) | | | |
| | | Text file | Personal website | "An alternative to intellectual property: government funding of information goods based on measured value" (no formal title so using description on website) | | | |
| 1994 | August 19 | Email | Cypherpunks | "trusted time stamping", "timestamp.c", "timestamp.c mangled" | | | |
| 1995 | January 12 | Email | Cypherpunks | "analysis of RemailerNet" | | | |
| 1995 | January 12 | Email | Cypherpunks | "time stamping service (again)" | | | |
| 1995 | January 19 | Email | Cypherpunks | "traffic analyzing Chaum's digital mix" | | | |
| 1995 | January 24 | Email | Cypherpunks | "analysis of Chaum's MIX continued" | | | |
| 1995 | February 6 | Email | Cypherpunks | "a simple explanation of DC-Net" | | | |
| 1995 | February 7 | Email | Cypherpunks | "a new way to do anonymity" | | | |
| 1995 | February 9 | Email | Cypherpunks | "LESM - Link Encrypted Session Manager" | | | |
| 1995 | February 10 | Email | Cypherpunks | "law vs technology" | | | |
| 1995 | August 25 | Patent | Microsoft | "Computer-implemented method and computer for performing modular reduction" | With Josh Benaloh. 1995-08-25 is the filing date; the patent was granted on 1998-03-03. | | |
| 1995 | August 29 | | Crypto '95 Rump Session | "Fast Modular Reduction" | This is cited by several others, but it's not clear whether there was a paper or just a presentation. | | |
| 1995 | September 6 | Email | Cypherpunks | "fast modular reduction" | | | |
| 1995 | September 10 | Email | Cypherpunks | "question about reputation" | | | |
| 1995 | October 5 | Email | Cypherpunks | "subjective names and MITM" | | | |
| 1995 | October 8 | Email | Cypherpunks | "anonymous cash without blinding" | | | |
| 1995 | October 16 | Email | Cypherpunks | "transaction costs in anonymous markets" | | | |
| 1995 | October 21 | Email | Cypherpunks | "Encrypted TCP Tunneler" | | | |
| 1995 | October 26 | Email | Cypherpunks | "idle CPU markets" | | | |
| 1995 | November 4 | Email | Cypherpunks | "Crypto++ 1.1" | | | |
| 1995 | November 10 | Email | Cypherpunks | "Diffie-Hellman in GF(2^n)?" | | | |
| 1995 | November 21 | Email | Cypherpunks | "towards a theory of reputation" | | | |
| 1995 | November 23 | Email | Cypherpunks | "generating provable primes" | | | |
| 1995 | December 18 | Email | Cypherpunks | "wish list for Crypto++?" | | | |
| 1996 | February 5 | Email | Cypherpunks | "Disperse/Collect version 1.0" | Announces the release of software that helps with sending large files. | | |
| 1996 | February 13 | Email | Cypherpunks | "Crypto++ 2.0 beta" | | | |
| 1996 | February 20 | Email | Cypherpunks | "ANNOUNCE: Crypto++ 2.0" | | | |
| 1996 | March 23 | Email | Cypherpunks | "Java questions" | | | |
| 1996 | May 11 | Email | Cypherpunks, Coderpunks | "Crypto++ 2.1" | | | |
| 1996 | July 29 | Email | Cypherpunks | "game theory" | | | |
| 1996 | August | Text file | | "PipeNet 1.1" | The text file containing a description of PipeNet 1.1. The publication date is a little unclear; many of the papers citing PipeNet use August 1996 for version 1.1. | | |
| 1996 | September 9 | Email | Cypherpunks | "papers on anonymous protocols" | | | |
| 1997 | February 3 | Email | Cypherpunks | "what's in a name?" | | | |
| 1997 | March 21 | Email | Cypherpunks | "'why privacy' revisited" | | | |
| 1997 | March 26 | Email | Cypherpunks | "game theoretic analysis of junk mail" | | | |
| 1997 | March 27 | Email | Cypherpunks | "junk mail analysis, part 2" | | | |
| 1997 | April 8 | Email | Cypherpunks | "some arguments for privacy" | | | |
| 1997 | April 12 | Email | Cypherpunks | "anonymous credit" | | | |
| 1997 | September 18 | Email | Cypherpunks | "sooner or later" | Assuming a crypto ban is inevitable, the message explores why cypherpunks might prefer that the ban come sooner rather than later. | | |
| 1997 | September 20 | Email | Extropians | "copying related probability question" | | | |
| 1997 | September 22 | Email | Cypherpunks | "encouraging digital pseudonyms" | | | |
| 1997 | September 24 | Email | Extropians | "tunneling through the Singularity" | | | |
| 1997 | October 15 | Email | Extropians | "many minds interpretation of probability" | | | |
| 1997 | October 20 | Patent | Microsoft | "Cryptographic system and method with fast decryption" | 1997-10-20 is the filing date; the patent was granted on 2000-06-27. | | |
| 1997 | October 24 | Email | Extropians | "PHYS: dyson shell thermodynamics" | | | |
| 1997 | December 14 | Email | Extropians | "the ultimate refrigerator" | | | |
| 1998 | January 6 | Email | Cypherpunks | "cypherpunks and guns" | | | |
| 1998 | January 7 | Email | Extropians | "personal identity" | | | |
| 1998 | January 11 | Email | Extropians | "algorithmic complexity of God" | | | |
| 1998 | January 15 | Email | Extropians | "ANNOUNCE: the 'everything' mailing list" (mirror) | The email announces the launch of everything-list. | | |
| 1998 | January 15 | Email | Extropians | "adapting to an open universe" | | | |
| 1998 | January 19 | Email | everything-list | "basic questions" | | | |
| 1998 | January 19 | Email | Cypherpunks | "PipeNet description" | | | |
| 1998 | January 26 | Email | everything-list | "continous universes" | | | |
| 1998 | January 28 | Email | everything-list | "lost in the many worlds" | | | |
| 1998 | February 11 | Email | everything-list | "relative state and decoherence" | | | |
| 1998 | February 11 | Email | everything-list | "experimentation" | | | |
| 1998 | February 13 | Email | Extropians | "human capital market" | | | |
| 1998 | February 16 | Email | Cypherpunks | "payment mix" | | | |
| 1998 | February 18 | Email | everything-list | "predictions" | | | |
| 1998 | February 21 | Email | everything-list | "another paradox and a solution" | | | |
| 1998 | February 26 | Email | Extropians | "spears versus shields" | | | |
| 1998 | March 15 | Email | everything-list | "probability and decision theory" | | | |
| 1998 | April 14 | Email | everything-list | "a baysian solution" | | | |
| 1998 | April 16 | Email | everything-list | "the one universe" | | | |
| 1998 | May 28 | Email | everything-list | "momentary and persistent minds" | | | |
| 1998 | June 9 | Email | everything-list | "possibilities for I and Q" | | | |
| 1998 | November? | Text file | | "b-money" | This document proposes b-money. It would eventually be cited in the Bitcoin whitepaper. Discussion on Cypherpunks takes place starting in December.[1] | | |
| 1998 | November 26 | Email | Cypherpunks | "PipeNet 1.1 and b-money" | | | |
| 1999 | January 9 | Email | everything-list | "information content of measures" | | | |
| 1999 | January 14 | Email | everything-list | "consciousness based on information or computation?" | | | |
| 1999 | January 15 | Email | everything-list | "book recommendation" | The message recommends Li and Vitanyi's An Introduction to Kolmogorov Complexity and Its Applications to everyone on the mailing list. | | |
| 1999 | January 21 | Email | everything-list | "Re: consciousness based on information or computation?" | This seems to be where Wei Dai proposes what would later be called UDASSA.[2][3] | | |
| 1999 | March 27 | Email | Extropians | "reasoning under computational limitations" | | | |
| 1999 | April 1 | Email | everything-list | "all of me or one of me" | | | |
| 1999 | June 1 | Email | everything-list | "practical reasoning and strong SSA" | | | |
| 1999 | June 7 | Email | everything-list | "why is death painful?" | | | |
| 1999 | July 12 | Email | everything-list | "philosophical frameworks" | | | |
| 1999 | July 12 | Email | everything-list | "minimal theory of consciousness" | | | |
| 2000 | September 3 | Email | Extropians | "NewScientist article on the ultimate laptop" | | | |
| 2000 | September 12 | Email | Extropians | "how to change values" | | | |
| 2001 | January 23 | Email | everything-list | "Oracle by Greg Egan" | | | |
| 2001 | February 15 | Email | everything-list | "no need for anthropic reasoning" | | | |
| 2001 | February 28 | Email | everything-list | "another anthropic reasoning paradox" | | | |
| 2001 | August 16 | Email | Extropians | "cloning protection technology" | | | |
| 2001 | November 6 | Email | Extropians | "relativism vs equivalidity" | | | |
| 2001 | November 7 | Email | Extropians | "the Blight not evil?" | | | |
| 2001 | November 20 | Email | Extropians | "non-violent conflict resolution" | | | |
| 2001 | December 20 | Email | everything-list | "relevance of the real measure" | | | |
| 2002 | February 6 | Text file | Personal website | "an attack against SSH2 protocol" | Also posted to sci.crypt and the IETF SSH working group mailing list. | | |
| 2002 | April 17 | Email | everything-list | "decision theory papers" | Introduces two decision theory papers to the list. | | |
| 2002 | May 3 | Email | SL4 | "supergoal stability" | | | |
| 2002 | May 23 | Email | everything-list | "JOINING posts" | | | |
| 2002 | June 14 | Email | everything-list, Extropians | "self-sampling assumption is incorrect" | | | |
| 2002 | August 11 | Email | everything-list | "a framework for multiverse decision theory" | | | |
| 2002 | August 12 | Email | everything-list | "modal logic and possible worlds" | | | |
| 2002 | August 13 | Email | everything-list | "SIA and the presumptuous philosopher" | | | |
| 2002 | December 31 | Email | SL4 | "moral symmetry" | | | |
| 2003 | February 20 | Email | Extropians | "IRAQ: cost of inspections" | | | |
| 2003 | May 8 | Email | Extropians | "a market approach to terrorism" | | | |
| 2003 | August 5 | Email | Extropians | "a simulated utilitarian" | | | |
| 2003 | December 31 | Email | SL4 | "'friendly' humans?" | | | |
| 2004 | January 24 | Email | everything-list | "recommended books" | A list of books that are relevant for understanding topics discussed on the mailing list. | | |
| 2004 | March 27 | Email | SL4 | "escape from simulation" | | | |
| 2004 | April 19 | Email | everything-list | "conversation with a Bayesian" | | | |
| 2004 | June 28 | Email | SL4 | "dream qualia" | | | |
| 2004 | July 23 | HTML file | Crypto++ website | "Crypto++ Benchmarks" | Speed benchmarks for algorithms provided by Crypto++. It's unclear when this page was first published; the earliest snapshot on the Internet Archive is from December 2006 and shows a last modification date of 2004-07-23. The page continues to be updated, and the latest version can be found on the Crypto++ website. | | |
| 2004 | October 23 | Email | SL4 | "SIAI's direction" | | | |
| 2005 | July 13 | Email | everything-list | "is induction unformalizable?" | | | |
| 2005 | August 24 | Email | SL4 | "uncertainty in mathematics" | | | |
| 2005 | September 11 | Email | SL4 | "mathematical reasoning" | | | |
| 2006 | March 29 | Email | everything-list | "proper behavior for a mathematical substructure" | | | |
| 2006 | April 9 | Email | everything-list | "why can't we erase information?" | | | |
| 2007 | April | Text file | Ted Krovetz's website | "VMAC: Message Authentication Code using Universal Hashing" | Co-authored with Ted Krovetz. | | |
| 2007 | August 28 | Paper | | "VHASH Security" | Co-authored with Ted Krovetz. | | |
| 2007 | September 26 | Email | everything-list | "against UD+ASSA, part 1" | This was reposted to LessWrong (https://www.lesswrong.com/posts/zd2DrbHApWypJD2Rz/udt2-and-against-ud-assa). | | |
| 2007 | September 26 | Email | everything-list | "against UD+ASSA, part 2" | This was reposted to LessWrong (https://www.lesswrong.com/posts/zd2DrbHApWypJD2Rz/udt2-and-against-ud-assa). | | |
| 2007 | November 1 | Email | SL4 | "how to do something with really small probability?" | | | |
| 2007 | November 10 | Email | SL4 | "answers I'd like from an SI" | | | |
| 2007 | November 13 | Email | SL4 | "answers I'd like, part 2" | | | |
| 2008 | | Paper | NIST mailing list? | "Collisions for CubeHash1/45 and CubeHash2/89" | Citation information is from Google Scholar; I can't find the original posting (the PDF itself is undated). | | |
| 2008 | July 14 | Email | SL4 | "prove your source code" | | | |
| 2008 | July 16 | Email | SL4 | "trade or merge?" | | | |
| 2008 | September 7 | Email | SL4 | "Bayesian rationality vs. voluntary mergers" | | | |
| 2009 | April 2 | Email | crypto-optimization | "GCM with 2KB/key tables" | | | |
| 2009 | April 3 | Email | crypto-optimization | "GCC compile farm" | | | |
| 2009 | April 3 | Email | crypto-optimization | "tips for instruction scheduling?" | | | |
| 2009 | April 6 | Email | crypto-optimization | "AES register saving tricks" | | | |
| 2009 | April 7 | Blog post | LessWrong | "Newcomb's Problem vs. One-Shot Prisoner's Dilemma" | | 15 | |
| 2009 | April 10 | Email | crypto-optimization | "SSE2 Comba multiplication" | | | |
| 2009 | April 16 | Email | crypto-optimization | "secrets of GMP 4.3" | | | |
| 2009 | April 16 | Email | crypto-optimization | "Jacobi quartic form curves" | | | |
| 2009 | May 5 | Email | crypto-optimization | "constant-time extended GCD?" | | | |
| 2009 | May 7 | Blog post | LessWrong | "Epistemic vs. Instrumental Rationality: Case of the Leaky Agent" | | 19 | |
| 2009 | June 7 | Blog post | LessWrong | "indexical uncertainty and the Axiom of Independence" | | 70 | |

| 2009 | July 16 | Blog post | LessWrong | "Fair Division of Black-Hole Negentropy: an Introduction to Cooperative Game Theory" | 34 | ||

| 2009 | July 17 | Blog post | LessWrong | "The Popularization Bias" | 53 | ||

| 2009 | July 23 | Blog post | LessWrong | "The Nature of Offense" | 173 | ||

| 2009 | July 26 | Blog post | LessWrong | "The Second Best" | 53 | ||

| 2009 | July 31 | Blog post | LessWrong | "An Alternative Approach to AI Cooperation" | 25 | ||

| 2009 | August 13 | Blog post | LessWrong | "Towards a New Decision Theory" | This post introduces what would be called updateless decision theory (UDT). | 142 | |

| 2009 | August 13 | Wiki page | LessWrong Wiki | "Counterfactual mugging" | |||

| 2009 | September 3 | Blog post | LessWrong | "Torture vs. Dust vs. the Presumptuous Philosopher: Anthropic Reasoning in UDT" | 28 | ||

| 2009 | September 16 | Blog post | LessWrong | "The Absent-Minded Driver" | 141 | ||

| 2009 | September 24 | Blog post | LessWrong | "Boredom vs. Scope Insensitivity" | 38 | ||

| 2009 | September 26 | Blog post | LessWrong | "Non-Malthusian Scenarios" | 88 | ||

| 2009 | October 2 | Blog post | LessWrong | "Scott Aaronson on Born Probabilities" | 7 | ||

| 2009 | October 13 | Blog post | LessWrong | "Anticipation vs. Faith: At What Cost Rationality?" | 105 | ||

| 2009 | October 20 | Blog post | LessWrong | "Why the beliefs/values dichotomy?" | 153 | ||

| 2009 | November 3 | Blog post | LessWrong | "Re-understanding Robin Hanson’s 'Pre-Rationality'" | 18 | ||

| 2009 | November 9 | Blog post | LessWrong | "Reflections on Pre-Rationality" | 30 | ||

| 2009 | November 16 | Blog post | LessWrong | "Why (and why not) Bayesian Updating?" | The post shares Paolo Ghirardato's paper "Revisiting Savage in a Conditional World", which gives axioms (based on Savage's axioms) that are "necessary and sufficient for an agent's preferences in a dynamic decision problem to be represented as expected utility maximization with Bayesian belief updating". The post points out that one of the axioms is violated in certain problems like counterfactual mugging and the absent-minded driver; this motivates using something other than Bayesian updating, namely something like Wei Dai's own updateless decision theory. The postscript points out that in Ghirardato's decision theory, using the joint probability instead of conditional probability gives an equivalent theory (i.e. it is possible to have an agent that produces the same actions, but where the agent's beliefs about each thing can only get weaker and never stronger), and asks why human beliefs don't work like this. (The comments provide some answers.) | 26 | |

| 2009 | November 24 | Blog post | LessWrong | "Agree, Retort, or Ignore? A Post From the Future" | 87 | ||

| 2009 | November 27 | Wiki page | LessWrong Wiki | "The Hanson-Yudkowsky AI-Foom Debate" | |||

| 2009 | November 30 | Blog post | LessWrong | "The Moral Status of Independent Identical Copies" | 71 | ||

| 2009 | December 10 | Blog post | LessWrong | "Probability Space & Aumann Agreement" | 71 | ||

| 2009 | December 11 | Blog post | LessWrong | "What Are Probabilities, Anyway?" | 78 | ||

| 2009 | December 29 | Blog post | LessWrong | "A Master-Slave Model of Human Preferences" | 80 | ||

| 2010 | January 8 | Blog post | LessWrong | "Fictional Evidence vs. Fictional Insight" | 43 | ||

| 2010 | January 15 | Blog post | LessWrong | "The Preference Utilitarian’s Time Inconsistency Problem" | 104 | ||

| 2010 | January 17 | Blog post | LessWrong | "Tips and Tricks for Answering Hard Questions" | 52 | ||

| 2010 | January 24 | Blog post | LessWrong | "Value Uncertainty and the Singleton Scenario" | 28 | ||

| 2010 | January 30 | Blog post | LessWrong | "Complexity of Value ≠ Complexity of Outcome" | The post makes the distinction between simple vs complex values, and simple vs complex outcomes. Others on LessWrong have argued that human values are complex (in the Kolmogorov complexity sense). Wei Dai points out that there is a tendency on LessWrong to further assume that complex values lead to complex outcomes (thus a future that reflects human values will be complex). Wei Dai argues against this further assumption, saying that complex values can lead to simple outcomes: human values have many different components that don't reduce to each other, but most of them don't scale with the amount of available resources. This means that the few components of human values which do scale can come to dominate the future. The post then discusses the relevance of this idea to AI alignment: if the different components of human values interact additively, then instead of using something like Eliezer Yudkowsky's Coherent Extrapolated Volition, it may be possible to obtain almost all possible value by creating a superintelligence with just those components that do scale with resources. | 198 | |

| 2010 | February 9 | Blog post | LessWrong | "Shut Up and Divide?" | 258 | ||

| 2010 | February 19 | Blog post | LessWrong | "Explicit Optimization of Global Strategy (Fixing a Bug in UDT1)" | This post describes what would be known as UDT 1.1.[4] | 38 | |

| 2010 | March 2 | Blog post | LessWrong | "Individual vs. Group Epistemic Rationality" | 54 | ||

| 2010 | March 14 | everything-list | "everything-list and the Singularity" | Notes the connection between the modal realism and Singularity communities, and introduces LessWrong to everything-list. | |||

| 2010 | March 19 | Blog post | LessWrong | "Think Before You Speak (And Signal It)" | 39 | ||

| 2010 | April 4 | Blog post | LessWrong | "Late Great Filter Is Not Bad News" | 75 | ||

| 2010 | April 8 | Blog post | LessWrong | "Frequentist Magic vs. Bayesian Magic" | 79 | ||

| 2010 | June 3 | Blog post | LessWrong | "Hacking the CEV for Fun and Profit" | 194 | ||

| 2010 | July 27 | Blog post | LessWrong | "Metaphilosophical Mysteries" | 255 | ||

| 2011 | January 8 | Blog post | LessWrong | "Why do some kinds of work not feel like work?" | 21 | ||

| 2011 | February 5 | Blog post | LessWrong | "Another Argument Against Eliezer's Meta-Ethics" | 35 | ||

| 2011 | February 7 | Blog post | LessWrong | "What does a calculator mean by '2'?" | 29 | ||

| 2011 | February 14 | Blog post | LessWrong | "Why Do We Engage in Moral Simplification?" | 35 | ||

| 2011 | March 11 | Blog post | LessWrong | "A Thought Experiment on Pain as a Moral Disvalue" | 41 | ||

| 2011 | May 3 | Blog post | LessWrong | "[link] Whole Brain Emulation and the Evolution of Superorganisms" | 7 | ||

| 2011 | May 22 | Blog post | LessWrong | "How To Be More Confident... That You're Wrong" | 24 | ||

| 2011 | May 24 | Blog post | LessWrong | "LessWrong Power Reader (Greasemonkey script, updated)" | 26 | ||

| 2011 | June 13 | Blog post | LessWrong | "What do bad clothes signal about you?" | 78 | ||

| 2011 | July 6 | Blog post | LessWrong | "Outline of possible Singularity scenarios (that are not completely disastrous)" | 40 | ||

| 2011 | July 13 | Blog post | LessWrong | "Some Thoughts on Singularity Strategies" | 29 | ||

| 2011 | July 17 | Blog post | LessWrong | "Experiment: Psychoanalyze Me" | 12 | ||

| 2011 | July 24 | Blog post | LessWrong | "What if sympathy depends on anthropomorphizing?" | 16 | ||

| 2011 | July 27 | Blog post | LessWrong | "What's wrong with simplicity of value?" | 37 | ||

| 2011 | August 22 | Blog post | LessWrong | "Do we want more publicity, and if so how?" | 54 | ||

| 2011 | September 28 | Blog post | LessWrong | "Wanted: backup plans for 'seed AI turns out to be easy'" | 62 | ||

| 2011 | November 18 | Blog post | LessWrong | "Where do selfish values come from?" | 57 | ||

| 2012 | March 21 | Blog post | LessWrong | "A Problem About Bargaining and Logical Uncertainty" | 45 | ||

| 2012 | March 22 | Blog post | LessWrong | "Modest Superintelligences" | 88 | ||

| 2012 | April 11 | Blog post | LessWrong | "against 'AI risk'" | 89 | ||

| 2012 | April 12 | Blog post | LessWrong | "Reframing the Problem of AI Progress" | 47 | ||

| 2012 | April 18 | Blog post | LessWrong | "How can we get more and better LW contrarians?" | 328 | ||

| 2012 | May 10 | Blog post | LessWrong | "Strong intutions. Weak arguments. What to do?" | 45 | ||

| 2012 | May 12 | Blog post | LessWrong | "Neuroimaging as alternative/supplement to cryonics?" | 68 | ||

| 2012 | May 16 | Blog post | LessWrong | "How can we ensure that a Friendly AI team will be sane enough?" | 64 | ||

| 2012 | June 6 | Blog post | LessWrong | "List of Problems That Motivated UDT" | 11 | ||

| 2012 | June 6 | Blog post | LessWrong | "Open Problems Related to Solomonoff Induction" | 103 | ||

| 2012 | July 21 | Blog post | LessWrong | "Work on Security Instead of Friendliness?" | 103 | ||

| 2012 | August 13 | Blog post | LessWrong | "Cynical explanations of FAI critics (including myself)" | 49 | ||

| 2012 | August 16 | Blog post | LessWrong | "Kelly Criteria and Two Envelopes" | 1 | ||

| 2012 | September 12 | Blog post | LessWrong | "Under-acknowledged Value Differences" | 68 | ||

| 2012 | October 8 | Blog post | LessWrong | "Reasons for someone to 'ignore' you" | 55 | ||

| 2012 | December 18 | Blog post | LessWrong | "Ontological Crisis in Humans" | 67 | ||

| 2012 | December 20 | Blog post | LessWrong | "Beware Selective Nihilism" | 46 | ||

| 2012 | December 26 | Blog post | LessWrong | "Morality Isn't Logical" | 85 | ||

| 2013 | January 11 | Blog post | LessWrong | "How to signal curiosity?" | 52 | ||

| 2013 | January 18 | Blog post | LessWrong | "Outline of Possible Sources of Values" | 28 | ||

| 2013 | April 23 | Blog post | LessWrong | "Normativity and Meta-Philosophy" | 55 | ||

| 2013 | July 17 | Blog post | LessWrong | "Three Approaches to 'Friendliness'" | 84 | ||

| 2013 | August 28 | Blog post | LessWrong | "Outside View(s) and MIRI's FAI Endgame" | 60 | ||

| 2014 | July 19 | Blog post | LessWrong | "Look for the Next Tech Gold Rush?" | 113 | ||

| 2014 | August 6 | Blog post | LessWrong | "Six Plausible Meta-Ethical Alternatives" | 36 | ||

| 2014 | August 13 | Blog post | LessWrong | "What is the difference between rationality and intelligence?" | 52 | ||

| 2014 | October 21 | Blog post | LessWrong | "Is the potential astronomical waste in our universe too small to care about?" | 14 | ||

| 2015 | June 6 | Blog post | LessWrong | "[link] Baidu cheats in an AI contest in order to gain a 0.24% advantage" | 32 | ||

| 2016 | December 8 | Blog post | LessWrong | "Combining Prediction Technologies to Help Moderate Discussions" | 15 | ||

| 2017 | September 5 | Blog post | LessWrong | "Online discussion is better than pre-publication peer review" | 25 | ||

| 2018 | March 10 | Blog post | LessWrong | "Multiplicity of 'enlightenment' states and contemplative practices" | |||

| 2018 | April 1 | Blog post | LessWrong | "Can corrigibility be learned safely?" | |||

| 2018 | June 7 | Blog post | LessWrong | "Beyond Astronomical Waste" | |||

| 2018 | June 11 | Blog post | LessWrong | "A general model of safety-oriented AI development" | |||

| 2018 | November 28 | Blog post | LessWrong | "Counterintuitive Comparative Advantage" | |||

| 2018 | December 13 | Blog post | LessWrong | "Three AI Safety Related Ideas" | |||

| 2018 | December 16 | Blog post | LessWrong | "Two Neglected Problems in Human-AI Safety" | |||

| 2019 | January 4 | Blog post | LessWrong | "Two More Decision Theory Problems for Humans" | |||

| 2019 | January 8 | Question post | Effective Altruism Forum | "How should large donors coordinate with small donors?" | |||

| 2019 | January 11 | Question post | LessWrong | "Why is so much discussion happening in private Google Docs?" | |||

| 2019 | February 9 | Blog post | LessWrong | "The Argument from Philosophical Difficulty" | |||

| 2019 | February 9 | Blog post | LessWrong | "Some Thoughts on Metaphilosophy" | |||

| 2019 | February 15 | Blog post | LessWrong | "Some disjunctive reasons for urgency on AI risk" | |||

| 2019 | February 15 | Question post | LessWrong | "Why didn't Agoric Computing become popular?" | |||

| 2019 | March 5 | Blog post | LessWrong | "Three ways that 'Sufficiently optimized agents appear coherent' can be false" | |||

| 2019 | March 20 | Question post | LessWrong | "What's wrong with these analogies for understanding Informed Oversight and IDA?" | |||

| 2019 | March 21 | Blog post | LessWrong | "The Main Sources of AI Risk?" | |||

| 2019 | March 28 | Blog post | LessWrong | "Please use real names, especially for Alignment Forum?" | |||

| 2019 | April 24 | Blog post | LessWrong, Alignment Forum | "Strategic implications of AIs' ability to coordinate at low cost, for example by merging" | The post argues that compared to humans, AIs will be able to coordinate much more easily with each other, for example by merging their utility functions. The post then discusses two implications of this: (1) Robin Hanson has argued that it is more important for AIs to obey laws than to share human values and that this implies humans should work to make sure institutions like laws will survive into the future; but if AIs can coordinate with each other without laws, it doesn't make sense to work toward this goal; (2) it is likely that an important part of competitiveness for AIs is the ability to coordinate with other AIs, so any AI alignment approach that aims to be competitive with unaligned AIs must preserve the ability to coordinate with other AIs, which might imply for instance that aligned AI will refuse to be shut down because doing so will make it unable to merge with other AIs. | 472 | |

| 2019 | May 10 | Blog post | LessWrong | "Disincentives for participating on LW/AF" | |||

| 2019 | May 11 | Blog post | LessWrong | "'UDT2' and 'against UD+ASSA'" | |||

| 2019 | May 21 | Question post | LessWrong | "Where are people thinking and talking about global coordination for AI safety?" | |||

| 2019 | May 29 | Blog post | LessWrong | "How to find a lost phone with dead battery, using Google Location History Takeout" | |||

| 2019 | June 7 | Blog post | LessWrong | "AGI will drastically increase economies of scale" | |||

| 2019 | July 25 | Blog post | LessWrong | "On the purposes of decision theory research" | |||

| 2019 | July 30 | Blog post | LessWrong | "Forum participation as a research strategy" | |||

| 2019 | August 17 | Blog post | LessWrong | "Problems in AI Alignment that philosophers could potentially contribute to" | |||

| 2019 | August 26 | Blog post | LessWrong | "Six AI Risk/Strategy Ideas" | |||

| 2019 | September 6 | Blog post | LessWrong | "AI Safety 'Success Stories'" | |||

| 2019 | September 10 | Blog post | LessWrong | "Counterfactual Oracles = online supervised learning with random selection of training episodes" | |||

| 2019 | September 18 | Blog post | LessWrong | "Don't depend on others to ask for explanations" | |||

| 2019 | September 30 | Blog post | LessWrong | "List of resolved confusions about IDA" | |||

| 2019 | November 4 | Link post | LessWrong | "Ways that China is surpassing the US" | |||

| 2019 | December 9 | Question post | LessWrong | "What determines the balance between intelligence signaling and virtue signaling?" | |||

| 2019 | December 18 | Blog post | LessWrong | "Against Premature Abstraction of Political Issues" | |||

| 2020 | January 24 | Question post | LessWrong | "Have epistemic conditions always been this bad?" |

Visual data

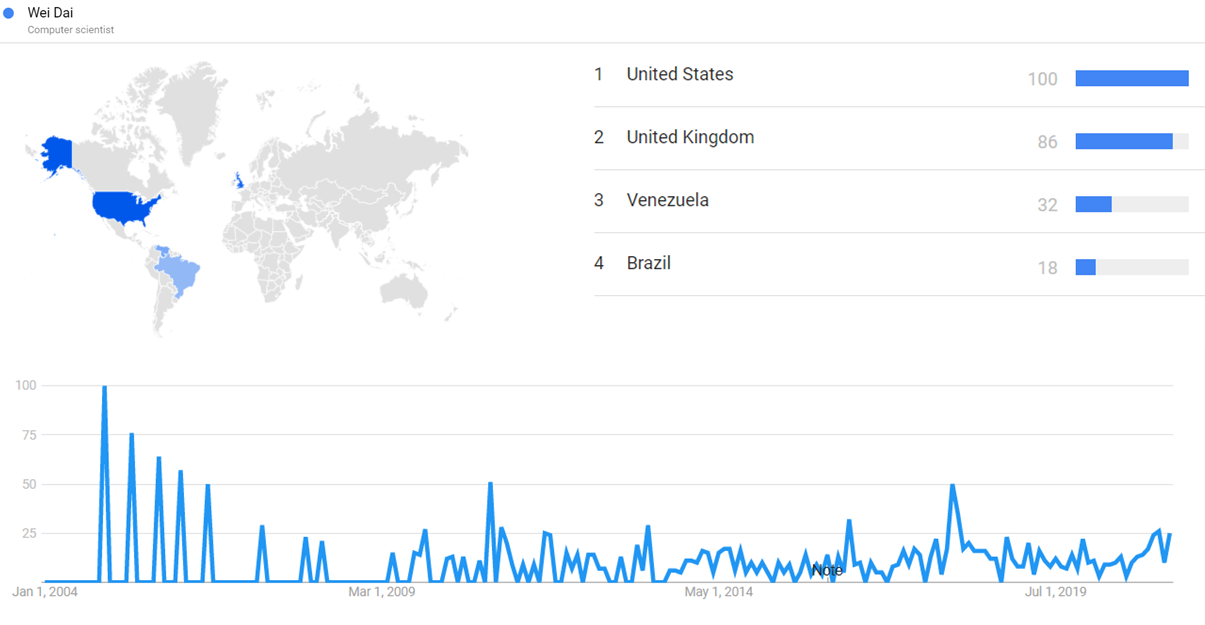

Google Trends

The chart below shows Google Trends data for Wei Dai (Computer scientist), from January 2004 to April 2021, when the screenshot was taken. Interest is also ranked by country and displayed on a world map.[5]

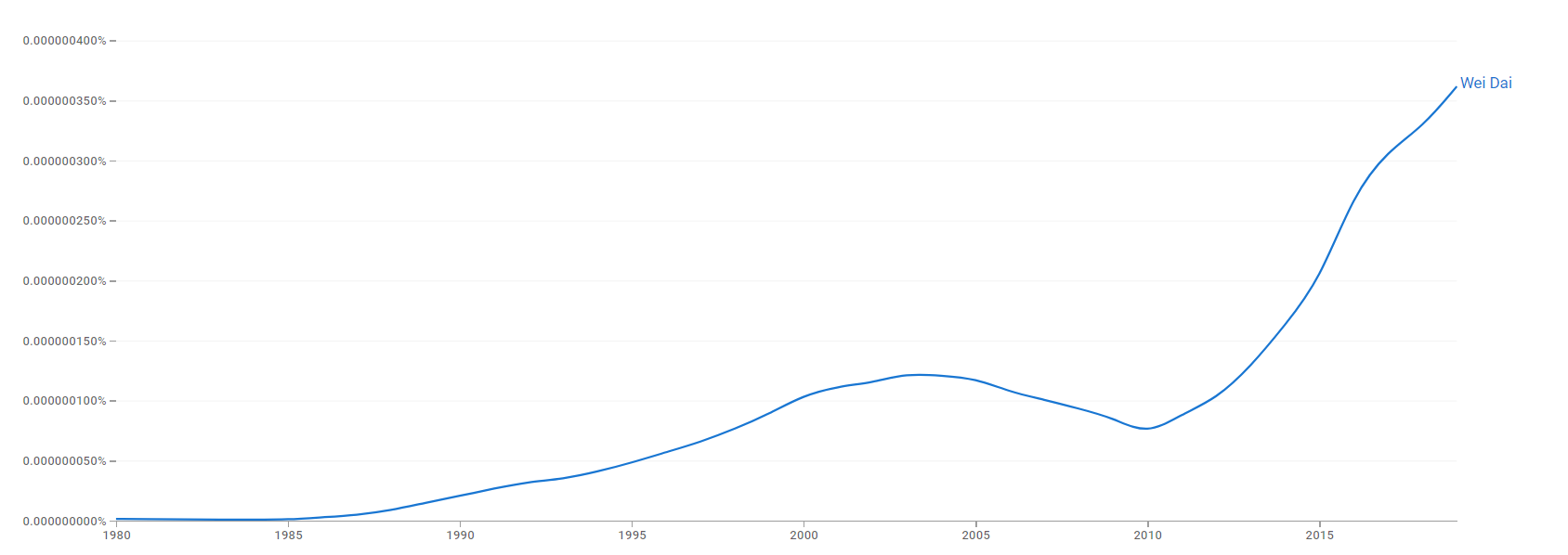

Google Ngram Viewer

The chart below shows Google Ngram Viewer data for Wei Dai, from 1980 to 2019.[6]

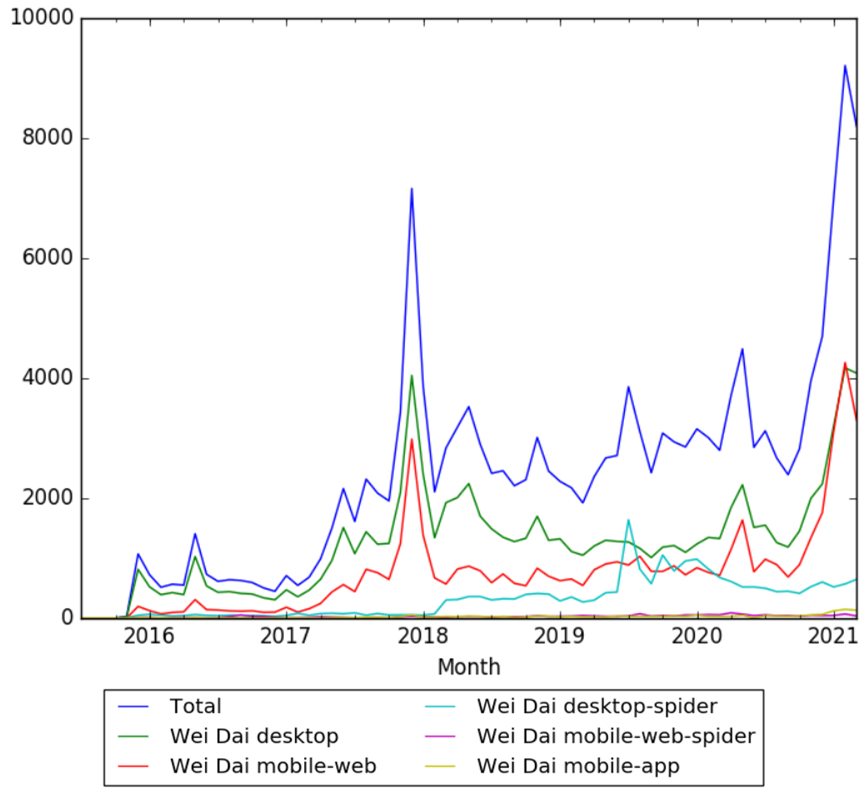

Wikipedia Views

The chart below shows pageviews of the English Wikipedia article Wei Dai, from July 2015 to March 2021.[7]

Meta information on the timeline

How the timeline was built

The initial version of the timeline was written by Issa Rice.

Funding information for this timeline is available.

Feedback and comments

Feedback for the timeline can be provided at the following places:

What the timeline is still missing

- There are some good comment threads that involve Wei on LW, EA Forum, Medium, and IAFF. I think some of these should be included. Some of these are now listed at https://causeprioritization.org/List_of_discussions_between_Paul_Christiano_and_Wei_Dai

- This email mentions him posting to "the NIST SHA-3 forum".

Timeline update strategy

I think new material is posted on LessWrong, so that is the first place to check when updating the timeline.

Pingbacks

- Wei Dai on X cites this timeline as evidence against him being Satoshi Nakamoto, by showing that his interests had moved away from digital money by the time Bitcoin was introduced.

See also

External links

- Wei Dai's personal website (old homepage)

- Wei Dai's LessWrong account

- LessWrong Wiki contributions

- Wei Dai's Effective Altruism Forum account

- Wei Dai's Medium account

- Wei Dai's comments on the Intelligent Agent Foundations Forum

- Wei Dai's Disqus account

- Wei Dai's Arbital account (only has one comment as of March 2018)

- Google Scholar author page

- one-logic mailing list (Wei Dai's only thread-starting post is actually a reply so there is nothing to include in this timeline)

- Wei Dai's Pastebin account (see comment here)

References

- Adam Back (December 5, 1998). "Wei Dei's "b-money" protocol". Retrieved February 16, 2018.

- https://riceissa.github.io/everything-list-1998-2009/14000.html -- the nabble.com link is broken as of 2020-03-06, so I can't be certain of which email Wei is linking to, but at least I know that the thread is the right one.

- https://riceissa.github.io/everything-list-1998-2009/6919.html -- this email doesn't mention the name "UDASSA", but I'm pretty sure that they are talking about UDASSA, given the context.

- Wei Dai (July 1, 2018). "Wei_Dai comments on Another take on agent foundations: formalizing zero-shot reasoning". LessWrong. Retrieved July 3, 2018.

- "Wei Dai". Google Trends. Retrieved 28 April 2021.

- "Wei Dai". books.google.com. Retrieved 28 April 2021.

- "Wei Dai". wikipediaviews.org. Retrieved 28 April 2021.