Timeline of machine learning

This page is a timeline of machine learning. Major discoveries, achievements, milestones and other major events are included.

Big picture

| Time period | Development summary | More details |

|---|---|---|

| 1950s-1970s | Early days | The early days of machine learning are marked by the development of statistical methods and the use of simple algorithms. In the 1950s, Arthur Samuel develops a program that learns to play checkers, and in 1957 Frank Rosenblatt invents the perceptron, a simple neural network that can learn to classify patterns. However, the period is also marked by pessimism, known as the AI Winter, owing to factors that include the failure of some early AI projects and the difficulty of scaling machine learning algorithms to large datasets. |

| 1980s-1990s | Resurgence | The rediscovery of backpropagation causes a resurgence in machine learning research. Convolutional neural networks emerge. Support vector machines and recurrent neural networks become popular. Machine learning shifts from a knowledge-driven approach to a data-driven approach.[1] |

| 2000s-present | Modern era | The modern era of machine learning begins in the 2000s, when the development of deep learning makes it possible to train neural networks on much larger datasets. This leads to a resurgence of interest in neural networks, which are now used in a wide variety of applications, including image recognition, natural language processing, speech recognition, machine translation, medical diagnosis, financial trading, and self-driving cars. |

Summary by decade

| Decade | Summary |

|---|---|

| <1950s | Statistical methods are discovered and refined. |

| 1950s | Pioneering machine learning research is conducted using simple algorithms. |

| 1960s | Neural network research advances notably with the discovery and use of multilayer networks.[2] Neural networks of this period are primarily shallow, consisting of only a few layers of interconnected neurons, which limits their ability to handle complex problems that require more sophisticated data representations. They nevertheless lay the foundation for the deeper and more powerful networks developed later.[3] |

| 1970s | The AI Winter is caused by pessimism about machine learning effectiveness. Backpropagation is developed, allowing a network to adjust its hidden layers of neurons/nodes to adapt to new situations.[2] |

| 1980s | In the mid-1980s, the focus of machine learning research shifts towards artificial neural networks (ANN). Convolution emerges as a significant concept, and the rediscovery of backpropagation causes a resurgence in machine learning research. In the following decade, statistical learning systems gain prominence and temporarily overshadow ANN.[4][3] |

| 1990s | There is a shift away from neural networks and towards statistical learning methods. Statistical learning methods are able to achieve comparable or better performance than neural networks on a wider range of tasks. However, neural networks continue to be used for some specific tasks, such as natural language processing and image recognition.[5][6][7] |

| 2000s | Deep learning becomes feasible and neural networks see widespread commercial use.[3] |

| 2010s | Machine learning becomes integral to many widely used software services and receives great publicity. |

Full timeline

| Year | Event Type | Caption | Event |

|---|---|---|---|

| 1642 | | | At the age of 19, French child prodigy Blaise Pascal creates an "arithmetic machine" for his father, a tax collector. The machine can perform addition, subtraction, multiplication, and division. More than three centuries later, the Internal Revenue Service (IRS) uses machine learning techniques to tackle tax evasion.[8] |

| 1679 | | | Gottfried Wilhelm Leibniz, a German mathematician, philosopher, and occasional poet, is credited with inventing the binary code system, which serves as the basis for contemporary computing.[8] |

| 1763 | Discovery | The Underpinnings of Bayes' Theorem | Thomas Bayes's work An Essay towards solving a Problem in the Doctrine of Chances is published two years after his death, having been amended and edited by a friend of Bayes, Richard Price.[9] The essay presents work which underpins Bayes' theorem. |

| 1801 | | | French weaver and merchant Joseph-Marie Jacquard invents a programmable weaving loom that uses punched cards to control the movement of warp threads, enabling intricate patterns to be woven into fabric. The technology allows weavers to produce complex designs more efficiently, and the punched card would become a fundamental principle of computer data storage in the 20th century.[10][11] |

| 1805 | Discovery | Least Squares | Adrien-Marie Legendre describes the "méthode des moindres carrés", known in English as the least squares method.[12] The method is widely used in data fitting, which in machine learning refers to finding a model or function that best fits a given dataset. |

| 1812 | | Bayes' Theorem | Pierre-Simon Laplace publishes Théorie Analytique des Probabilités, in which he expands upon the work of Bayes and defines what is now known as Bayes' Theorem.[13] |

| 1834 | | | English polymath Charles Babbage, known as the father of the computer, envisions a machine that could be programmed using punch cards. Although the device would never be constructed, its logical framework forms the basis for all modern computers, making punch-card programming a significant step in the development of computer technology.[14][8] |

| 1842 | | | English mathematician and writer Ada Lovelace becomes the world's first computer programmer. She develops an algorithm that outlines a series of steps for solving mathematical problems on Charles Babbage's theoretical punch-card machine. Her pioneering work would be recognized years later when the US Department of Defense names a new programming language "Ada" in her honor.[8] |

| 1847 | | | English mathematician, philosopher, and logician George Boole devises a type of algebra in which all values reduce to either "true" or "false". This concept, known as Boolean logic, would play a crucial role in contemporary computing by helping the central processing unit (CPU) determine how to handle incoming inputs.[8][11] |

| 1854 | | | During a deadly cholera outbreak in London, English physician John Snow challenges the prevailing belief that cholera spreads through "bad air". Snow plots the locations of cholera cases on a map and identifies the region closest to each water pump. He finds that most deaths occur near a specific pump on Broad Street in the Soho district and deduces that contaminated water from that pump is responsible for the outbreak. After he convinces the locals to disable the pump, the epidemic is brought under control. The event marks the birth of epidemiology and serves as an early success of the nearest-neighbor idea, nearly a century before the algorithm's formal invention.[15] |

| 1890 | | | German-American statistician, inventor, and businessman Herman Hollerith develops a pioneering mechanical system that combines punch cards with mechanical calculation. The system enables the rapid computation of statistics compiled from data collected on millions of individuals.[11] This advance contributes to the evolution of computing and provides a basis for future developments in machine learning. |

| 1913 | Discovery | Markov Chains | Andrey Markov first describes techniques he used to analyse a poem. The techniques later become known as Markov chains.[16] |

| 1936 | | | English mathematician Alan Turing proposes a theory outlining how a machine could identify and carry out a predefined set of instructions.[14] His theory of computation forms the foundation of modern computing and has direct relevance to machine learning. Turing's concept of a universal machine lays the groundwork for computers capable of executing algorithms and processing data.[17] |

| 1940s | | | ENIAC (Electronic Numerical Integrator and Computer), completed in 1945, becomes the first electronic general-purpose computer. It is programmed manually. Following this milestone, stored-program computers such as EDSAC in 1949 and EDVAC in 1951 are developed. These advances introduce the concept of storing and executing programs electronically, paving the way for modern computer systems.[14] |

| 1943 | | | American neurophysiologist Warren McCulloch and mathematician Walter Pitts publish a paper describing how neurons function and model their behavior using an electrical circuit. This marks the first instance of neural networks. Building on this concept, they go on to explore applications of the idea and to analyze human neuron behavior.[2][14] |

| 1949 | | | Canadian psychologist Donald Hebb introduces a pioneering concept that marks an initial advance in machine learning. Known as Hebbian learning theory, it draws on a neuropsychological framework and aims to establish correlations among nodes within a recurrent neural network (RNN). The network captures and retains shared patterns, functioning as a memory: it identifies connections and stores relevant information for later use.[18] |

| 1950 | | Turing's Learning Machine | Alan Turing proposes a "learning machine" that could learn and become artificially intelligent. Turing's specific proposal foreshadows genetic algorithms.[19] In the same year, Turing creates the "Turing Test" to determine whether a computer has real intelligence. To pass the test, a computer must be able to fool a human into believing it is also human.[7][14] |

| 1951 | | First Neural Network Machine | Marvin Minsky and Dean Edmonds build the SNARC, the first neural network machine able to learn.[20] |

| 1952 | | Machines Playing Checkers | Arthur Samuel at IBM's Poughkeepsie Laboratory becomes one of the early pioneers of machine learning. He develops some of the first machine learning programs, starting with programs that play checkers. Samuel's program, designed for an IBM computer, analyzes winning strategies by studying gameplay and improves over time by incorporating successful moves into its algorithm. Samuel's use of alpha-beta pruning enables the program to play checkers at a championship level, a significant milestone in the application of machine learning to games.[21][7][22] |

| 1957 | Discovery | Perceptron | Frank Rosenblatt invents the perceptron while working at the Cornell Aeronautical Laboratory. This groundbreaking invention garners significant attention and receives extensive media coverage. The perceptron is the first neural network for computers. It aims to simulate the cognitive processes of the human brain, marking a significant milestone in the field of artificial intelligence.[23][24][7] |

| 1959 | | | A significant advance in neural networks occurs when Bernard Widrow and Marcian Hoff develop two models at Stanford University. The first, known as ADALINE, can recognize binary patterns and predict the next bit in a sequence. Its successor, MADALINE, proves highly practical: it eliminates echo on phone lines, a valuable real-world application that remains in use to this day.[8][2] |

| 1959 | | | The term "Machine Learning" is coined by Arthur Samuel,[14] who defines it as the “field of study that gives computers the ability to learn without being explicitly programmed”.[25] |

| 1959 | | | The first practical application of a neural network occurs when one is used to remove echo on phone lines by means of an adaptive filter.[14] |

| 1962 | | | U.S. professor Bernard Widrow and Ted Hoff introduce the ADALINE algorithm, a single-layer neural network that can be used for classification and regression tasks. The algorithm is a significant breakthrough in machine learning, but it is limited to a single layer because neural networks with multiple layers are difficult to train at the time.[2] |

| 1963 | | | United States government agencies such as the Defense Advanced Research Projects Agency (DARPA) fund AI research at universities such as MIT, hoping for machines that can translate Russian instantly. The Cold War is in full swing, and the US government is eager to develop technologies that would give it an edge over the Soviet Union. Machine translation is seen as one such technology, and DARPA invests heavily in its development, funding a number of projects at MIT, one of the leading universities in AI research at the time.[26] |

| 1965 | | | Soviet mathematician Alexey Ivakhnenko publishes a number of articles and books on the group method of data handling (GMDH), a method of inductive inference used to build complex models from data. The work is influential in the development of neural networks, as the GMDH algorithm is similar to backpropagation, the widely used algorithm for training neural networks. GMDH is considered one of the foundations of deep learning and is still used today in a variety of applications.[27] |

| 1967 | | Nearest Neighbor | Thomas M. Cover and Peter E. Hart make a significant contribution to pattern recognition by introducing the nearest neighbor algorithm, which marks the beginning of basic pattern recognition capabilities for computers. Its initial application is in mapping routes, particularly for traveling salesmen who need to visit multiple cities in a short tour. With the nearest neighbor algorithm, computers can identify similarities between items in large datasets and automatically recognize patterns, paving the way for further advances in pattern recognition and data analysis.[28][7] |

| 1969 | | Limitations of Neural Networks | Marvin Minsky and Seymour Papert publish their book Perceptrons, describing some of the limitations of perceptrons and neural networks. The interpretation that the book shows neural networks to be fundamentally limited is seen as a hindrance to research into neural networks.[29][30] |

| 1970 | | Automatic Differentiation (Backpropagation) | Finnish mathematician and computer scientist Seppo Linnainmaa publishes the general method for automatic differentiation (AD) of discrete connected networks of nested differentiable functions.[31][32] This corresponds to the modern version of backpropagation, but is not yet named as such.[33][34][35][36][18] |

| 1974 | Algorithm | | Greek biomedical engineer Evangelia Micheli-Tzanakou and Harth introduce ALOPEX (ALgorithms Of Pattern EXtraction), a correlation-based machine learning algorithm that extracts patterns from data by identifying correlations between variables or features. |

| 1974 | | Backpropagation | American social scientist and machine learning pioneer Paul Werbos lays the foundation for backpropagation in his dissertation, a technique that adjusts the weights of neural networks to improve prediction accuracy.[22] |

| 1977 | Algorithm | | The expectation–maximization algorithm is explained and given its name in a paper by Arthur Dempster, Nan Laird, and Donald Rubin.[37] |

| 1979 | | Stanford Cart | Students at Stanford University develop a cart that can navigate and avoid obstacles in a room.[38] The Stanford Cart, a remote-controlled robot, successfully navigates a room filled with obstacles without human intervention, showcasing advances in autonomous movement.[39][7] |

| 1980 | Discovery | Neocognitron | Japanese computer scientist Kunihiko Fukushima introduces the neocognitron, a hierarchical multilayered convolutional neural network. This groundbreaking work lays the foundation for convolutional neural networks, which would become a fundamental architecture in the field of artificial neural networks. The neocognitron's innovative design would inspire further advancements and applications in image and pattern recognition.[40][41][27] |

| 1980 | | | The Linde–Buzo–Gray algorithm is introduced by Yoseph Linde, Andrés Buzo and Robert M. Gray.[42] |

| 1980 | | | The first International Conference on Machine Learning takes place. The conference serves as a platform for researchers, practitioners, and industry professionals to present their latest research, share ideas, and discuss advances in machine learning algorithms, methodologies, and applications. |

| 1981 | | Explanation Based Learning | Gerald DeJong introduces Explanation Based Learning (EBL), a concept in machine learning in which a computer algorithm analyzes training data to create a general rule by discarding unimportant information. This approach allows the algorithm to focus on relevant patterns and extract valuable knowledge from the data.[43][7] |

| 1981 | | | American social scientist and machine learning pioneer Paul Werbos publishes a paper in the Mathematics of Control, Signals, and Systems journal that introduces the backpropagation algorithm for training multilayer perceptrons (MLPs). MLPs are a type of neural network that can learn to solve complex problems by adjusting the weights of their connections. Werbos's paper is a major breakthrough: it shows that MLPs can be trained to solve problems previously thought to be intractable. This would lead to a resurgence of interest in neural networks, paving the way for more advanced neural network architectures.[18] |

| 1982 | Discovery | Recurrent Neural Network | John Hopfield popularizes Hopfield networks, a type of recurrent neural network that can serve as content-addressable memory systems.[44][2][3] |

| 1982 | | | Japan announces a new emphasis on developing more sophisticated neural networks. The announcement acts as a catalyst for increased American funding in the field, which in turn leads to a surge of research.[2] |

| 1982 | | | Self-learning is introduced as a machine learning paradigm, along with a neural network capable of self-learning named Crossbar Adaptive Array (CAA).[45] |

| 1985 | | NetTalk | Terry Sejnowski, along with Charles Rosenberg, develops a neural network called NetTalk, which learns to pronounce words in much the same way a baby does. NetTalk teaches itself the correct pronunciation of approximately 20,000 words within just one week, showcasing the potential of self-learning systems to acquire language skills.[46][14] |

| 1985–1986 | | | Researchers in the field of neural networks introduce the concept of the Multilayer Perceptron (MLP) along with the practical Backpropagation (BP) training algorithm. Although the idea of BP was proposed earlier, the specific implementation for neural networks was suggested by Werbos in 1981. These developments mark a significant acceleration in neural network research and lay the foundation for the neural network architectures used today.[18] |

| 1986 | Discovery | Backpropagation | The process of backpropagation is described by David Rumelhart, Geoff Hinton and Ronald J. Williams.[47][48] |

| 1986 | | | Australian computer scientist Ross Quinlan proposes the ID3 algorithm, today a very well-known ML algorithm.[18] |

| 1986 | Algorithm | | The Dehaene–Changeux model is developed by cognitive neuroscientists Stanislas Dehaene and Jean-Pierre Changeux.[49] It is used to provide a predictive framework for the study of inattentional blindness and the solving of the Tower of London test.[50][51] |

| 1986 | | | The peer-reviewed scientific journal Machine Learning is first issued. Published by Springer Nature, it is considered one of the leading journals in the field. The journal publishes articles on a wide range of topics related to machine learning, including statistical learning theory, natural language processing, computer vision, data mining, reinforcement learning, and robotics.[52] |

| 1986 | | | The European Conference on Machine Learning, a forerunner of today's European Conference on Machine Learning and Principles and Practice of Knowledge Discovery in Databases (ECML PKDD), is first held. |

| 1987 | | | The Conference on Neural Information Processing Systems (NeurIPS) is first held. It is a prominent conference in artificial intelligence and machine learning, where researchers, academics, and industry professionals present and discuss the latest research on neural networks, deep learning, and other aspects of information processing systems. NeurIPS would become a significant platform for showcasing breakthroughs and fostering collaboration within the AI community. |

| 1988 | | | The Knowledge Engineering and Machine Learning Group (KEMLG) is founded at the Technical University of Catalonia (UPC) in Barcelona, Spain. The research group focuses on the development of knowledge engineering and machine learning techniques. It would make significant contributions to artificial intelligence, with its work used in applications including medical diagnosis, fraud detection, and natural language processing. |

| 1989 | Discovery | Reinforcement Learning | Christopher Watkins develops Q-learning, which greatly improves the practicality and feasibility of reinforcement learning.[53] |

| 1989 | Commercialization | Commercialization of Machine Learning on Personal Computers | Axcelis, Inc. releases Evolver, the first software package to commercialize the use of genetic algorithms on personal computers.[54] |

| 1989 | Algorithm | | Chris Watkins introduces Q-learning, a model-free reinforcement learning algorithm.[55][56] |

| 1992 | Achievement | Machines Playing Backgammon | Gerald Tesauro develops TD-Gammon, a computer backgammon program that utilises an artificial neural network trained using temporal-difference learning (hence the 'TD' in the name). TD-Gammon is able to rival, but not consistently surpass, the abilities of top human backgammon players.[57] |

| 1995 | | | A significant breakthrough in machine learning occurs with the introduction of Support Vector Machines (SVM) by Vapnik and Cortes. SVMs possess a solid theoretical foundation and deliver impressive empirical results. This development would lead to a division within the machine learning community, with some advocating neural networks (NN) and others supporting SVM as the preferred approach.[18] |

| 1995 | Discovery | Random Forest Algorithm | Tin Kam Ho publishes a paper describing random decision forests, a type of ensemble learning algorithm that combines multiple decision trees to improve the accuracy of predictions. The paper, titled Random Decision Forests, introduces the basic idea. She shows that by randomly selecting features and thresholds, it is possible to construct a large number of decision trees that are relatively independent of each other. This independence helps to reduce the variance of the predictions, which improves accuracy. The paper is well received by the machine learning community. Random decision forests would go on to become one of the most popular machine learning algorithms, used in a wide variety of applications, including image classification, natural language processing, and medical diagnosis.[58] |

| 1995 | Discovery | Support Vector Machines | Corinna Cortes and Vladimir Vapnik publish their work on support vector machines in the journal Machine Learning. Their paper, titled "Support-Vector Networks", introduces SVMs as a new machine learning algorithm for classification and regression problems. SVMs are based on the idea of finding a hyperplane that separates two classes of data points with the maximum possible margin, where the margin is the distance between the hyperplane and the closest data points on either side. A wider margin generally makes the classifier more robust to noise in the data. SVMs would prove very effective for a wide variety of classification and regression problems. They are particularly well suited to problems where the data is not linearly separable, as the data can be mapped to a higher-dimensional space where it becomes linearly separable. SVMs are also relatively easy to train and efficient in their use of computational resources. Today they are among the most popular machine learning algorithms, used in applications including spam filtering, image classification, and fraud detection.[18][59] |

| 1996 (October 10) | | | Orange is released by the University of Ljubljana. It is a visual programming language and integrated development environment (IDE) for data mining and machine learning. |

| 1997 | | IBM Deep Blue Beats Kasparov | Supercomputer Deep Blue, developed by IBM, achieves a historic victory by defeating chess grandmaster Garry Kasparov in a match. This landmark event demonstrates the potential of artificial intelligence to surpass human capability in complex tasks such as chess. It marks a pivotal moment in machine learning, highlighting the ability of AI systems to learn and evolve independently and posing new challenges and possibilities for mankind.[60][22] |

| 1997 | Discovery | LSTM | Sepp Hochreiter and Jürgen Schmidhuber invent long short-term memory (LSTM) recurrent neural networks,[61] greatly improving the efficiency and practicality of recurrent neural networks. |

| 1997 | | | Yoav Freund and Robert Schapire introduce AdaBoost, which would become an influential machine learning model. AdaBoost is an ensemble method that combines multiple weak classifiers into a strong classifier by iteratively training weak classifiers and giving more weight to difficult instances. The work would later receive the prestigious Gödel Prize. The approach proves effective in tasks such as face recognition and detection, and it continues to serve as a foundation for many machine learning applications.[18] |

| 1998 | | MNIST database | A team led by Yann LeCun releases the MNIST database, a dataset comprising a mix of handwritten digits from American Census Bureau employees and American high school students.[62] The MNIST database has since become a benchmark for evaluating handwriting recognition. |

| 1998 | | | Researchers at AT&T Bell Laboratories develop a neural network that can accurately recognize handwritten ZIP codes. The network is trained on a dataset of 100,000 ZIP codes and achieves an accuracy of 99%. It is trained with backpropagation, a method for adjusting the weights of a neural network so that it better predicts the output for a given input. The network is a major breakthrough in machine learning: it shows that neural networks can be used to solve real-world problems, and it paves the way for more advanced neural networks.[2] |

| 1999 | | | A study is published in the Journal of the National Cancer Institute showing that computer-aided diagnosis (CAD) is more accurate than radiologists at detecting breast cancer on mammograms. The study, conducted by researchers at the University of Chicago, finds that CAD detects cancer 52% more accurately than radiologists do. |

| 2000 | Algorithm | | In anomaly detection, the local outlier factor (LOF) is an algorithm proposed by Markus M. Breunig, Hans-Peter Kriegel, Raymond T. Ng and Jörg Sander for finding anomalous data points by measuring the local deviation of a given data point with respect to its neighbours.[63] |

| 2000 | | | LogitBoost, a boosting algorithm in machine learning and computational learning theory, is formulated by Jerome H. Friedman, Trevor Hastie, and Robert Tibshirani.[64] |

| 2000 | | | The Journal of Machine Learning Research is first published by the JMLR Foundation. It is considered one of the leading journals in the field of machine learning. JMLR publishes articles on a wide range of topics, including statistical learning theory, natural language processing, computer vision, data mining, reinforcement learning, and robotics. |

| 2001 | | | Leo Breiman introduces an alternative ensemble model that combines multiple decision trees. In this model, each decision tree is built from a random subset of instances, and the split at each node is chosen from a random subset of features.[18] |

| 2001 | | | The iDistance indexing and query processing technique is first proposed by Cui Yu, Beng Chin Ooi, Kian-Lee Tan and H. V. Jagadish.[65] It is a method for indexing and querying data in high-dimensional metric spaces. A metric space is a space in which the distance between two points can be measured, and high-dimensional metric spaces are often used to represent data with a large number of features, such as images or text documents. The technique would prove effective for a variety of applications, including image retrieval, text mining, and data mining. |

| 2002 (October) | | Torch Machine Learning Library | Torch is first released. It is a scientific computing library used for machine learning research. Torch becomes a popular choice for deep learning research and development and would be used to develop a wide variety of deep learning models.[66] |

| 2002 | Software release | | Computer vision and machine learning library Dlib is first released by Davis King. It is a popular choice for developing facial recognition, object detection, and image processing applications. |

| 2003 | Algorithm | | The concept of manifold alignment is first introduced by Ham, Lee, and Saul as a class of machine learning algorithms that produce projections between sets of data, given that the original data sets lie on a common manifold.[67] |

| 2004 | Google unveils its MapReduce technology, a distributed programming model for processing and generating large data sets. MapReduce is based on the idea of breaking down a large data set into smaller chunks that can be processed in parallel on a cluster of computers. The development of MapReduce is a major breakthrough in the field of distributed computing. It makes it possible to process large data sets more efficiently and cost-effectively. This would lead to a decrease in the cost of parallel computing and memory, in turn making it possible to develop more powerful machine learning models.[27] | ||

| 2004 | Jeff Hawkins and Sandra Blakeslee introduce the concept of Hierarchical Temporal Memory (HTM), which can be regarded as a theory or model of intelligence that adheres to biological limitations. This concept is extensively explained in their book On Intelligence. | ||

| 2005 | The third rise of neural networks (NN) begins, bringing together many discoveries from past and present by researchers including Geoffrey Hinton, Yoshua Bengio, Yann LeCun, and Andrew Ng. Several factors combine to enable a new wave of progress in NN: the availability of large datasets, such as the ImageNet dataset; more powerful hardware, such as GPUs; and improved methods for training NN with backpropagation. Together, these factors lead to a rapid increase in the performance of NN, which begin to achieve state-of-the-art results in a wide variety of tasks, including image classification, natural language processing, and speech recognition.[18] | ||

| 2006 | Concept development | Deep learning | British-Canadian cognitive psychologist and computer scientist Geoffrey Hinton popularizes the term "deep learning" to describe a set of new algorithms that enable computers to analyze and recognize objects and text within images and videos. This development marks a significant advancement in the field of neural networks, and deep learning has since become a prominent and widely adopted technology in various industries.[7][14][4] |

| 2006 | Competition | Face Recognition Grand Challenge | The Face Recognition Grand Challenge (FRGC) is held by the National Institute of Standards and Technology (NIST) to evaluate the state-of-the-art in face recognition technology. It would become a landmark event in the field of face recognition, helping to accelerate the development of new and more accurate face recognition algorithms. The FRGC uses a variety of data sets, including 3D face scans, iris images, and high-resolution face images. The results of the FRGC would show that the new algorithms are significantly more accurate than the facial recognition algorithms from 2002 and 1995. The FRGC would help to establish face recognition as a viable technology for a variety of applications. The results of the FRGC would also be used to improve the accuracy of face recognition algorithms in commercial products.[2] |

| 2006 | Big data processing | This is a significant year in the development of big data processing, as it sees the release of Hadoop, an open-source software framework that allows for the distributed processing of large data sets across clusters of computers. Hadoop is developed by Doug Cutting and Mike Cafarella and becomes a project of the Apache Software Foundation. It is based on the MapReduce programming model, which was originally developed by Google. MapReduce breaks down a large data processing task into a series of smaller tasks that can be run in parallel on a cluster of computers. This makes it possible to process data sets that would be too large to process on a single computer. Hadoop would become a widely used framework for big data processing, adopted by a variety of organizations, including Google, Facebook, and Yahoo.[27] | |

| 2006 | Software release | RapidMiner | RapidMiner is first released by Ingo Mierswa and Ralf Klinkenberg. It is a software platform for data mining and machine learning tasks that is easy to use and offers a wide range of features. RapidMiner would be used by a wide range of companies and organizations, including Google, Amazon, and IBM. |

| 2007 | Scientific development | Long Short-Term Memory | A significant breakthrough occurs in the field of speech recognition when a neural network architecture called Long Short-Term Memory (LSTM), first described in 1997, begins to outperform more traditional speech recognition programs.[2] |

| 2007 (June) | Scikit-learn | scikit-learn is released by David Cournapeau, Gael Varoquaux, and others. It is a free and open-source machine learning library for Python. Scikit-learn would become a popular choice for machine learning practitioners because it is easy to use, well-documented, and has a wide range of features. It includes implementations of a variety of machine learning algorithms, including support vector machines, decision trees, random forests, and k-nearest neighbors.[68] | |

| 2007 | Software release | Theano | Theano is initially released. It is an open source Python library for defining, optimizing, and evaluating mathematical expressions on multi-dimensional arrays, which makes it well suited to building machine learning models.[69] |

| 2008 (January 11) | Software release | Pandas | American software developer Wes McKinney, working at the time as a researcher at AQR Capital Management, releases the first version of pandas, a software library written for the Python programming language for data manipulation and analysis. pandas is fast, efficient, easy to use, and well-documented. The name pandas is a play on the phrase "panel data", a type of data commonly used in statistical analysis. Since its release, pandas would become one of the most popular data analysis libraries in the Python ecosystem. It is used by a wide range of companies and organizations, including Google, Facebook, and Amazon, as well as by many academic researchers.[70] |

| 2008 | Scientific development | Isolation Forest | The Isolation Forest (iForest) algorithm is initially proposed by Fei Tony Liu, Kai Ming Ting and Zhi-Hua Zhou.[71] |

| 2008 | Encog | Encog is created as a pure-Java/C# machine learning framework to support genetic programming, NEAT/HyperNEAT, and other neural network technologies.[72] | |

| 2009 (April 7) | Software release | Apache Mahout | Apache Mahout is first released.[73] |

| 2010 (April) | Kaggle, a website that serves as a platform for machine learning competitions, is launched.[74][75] | ||

| 2010 | Microsoft releases the Kinect, a motion-sensing input device that can track 20 human features at a rate of 30 times per second. This allows people to interact with the computer via movements and gestures. The Kinect is originally developed for the Xbox 360 gaming console, but it has since been used for a variety of other applications, including healthcare and education. It can be used to play games that require physical movement, such as Dance Central and Kinect Sports. It can also be used for rehabilitation therapy, as it can track the movements of patients and provide feedback on their progress. In education, the Kinect can be used to help students learn languages or math by tracking their movements and providing feedback on their answers.[7] | ||

| 2010 (May 20) | Software release | Accord.NET | Accord.NET is initially released.[76] |

| 2010 | George Konidaris, Scott Kuindersma, Andrew Barto, and Roderic Grupen introduce a hierarchical reinforcement learning algorithm called Constructing Skill Trees (CST), which can build skill trees from a set of sample solution trajectories obtained from demonstration. CST works by first segmenting the demonstration trajectories into a set of primitive skills. These skills are then combined to form a skill tree, with each node in the tree representing a different skill. The skill tree is then used to guide the agent's exploration of the environment, and to help the agent learn new skills. CST would be shown to be effective in a variety of domains, including robotics, video games, and board games. | ||

| 2011 | Achievement | Beating Humans in Jeopardy | Using a combination of machine learning, natural language processing and information retrieval techniques, IBM's Watson beats two human champions in a Jeopardy! competition.[77] |

| 2012 | Achievement | Recognizing Cats on YouTube | The Google Brain team, led by Andrew Ng and Jeff Dean, creates a neural network that learns to recognize cats by watching unlabeled images taken from frames of YouTube videos.[78][79] "In 2012, Google created a deep neural network which learned to recognize the image of humans and cats in YouTube videos."[14] |

| 2012 | Google's X Lab develops a neural network that learns to detect cats in YouTube videos. The network is trained on unlabeled frames taken from the videos, without being told which frames contain cats, and learns features that respond to cat faces on its own. The result is a widely cited example of machine learning discovering useful structure in real-world data without manual labeling.[7] | ||

| 2012 | Alex Krizhevsky and his colleagues develop the AlexNet convolutional neural network, which wins the ImageNet Large Scale Visual Recognition Challenge and becomes one of the first successful applications of deep learning to image recognition. This would lead to a surge in the use of GPUs and convolutional neural networks (CNNs) in machine learning. AlexNet is trained on GPUs and uses the ReLU activation function. Together, these choices make it practical to train much larger and deeper CNNs than had been possible before, which would lead to significant improvements in the performance of CNNs on a variety of tasks.[2] | ||

| 2012 | Special Interest Group on Knowledge Discovery and Data Mining | ||

| 2012 (March 12) | mlpy, developed by Davide Albanese and colleagues at Fondazione Bruno Kessler, is released. It is a free and open-source Python module for machine learning that provides a wide range of algorithms, including support vector machines, decision trees, random forests, and k-nearest neighbors. It also includes a number of utility functions for data manipulation and visualization.[80] | ||

| 2013 | International Conference on Learning Representations | ||

| 2014 | Leap in Face Recognition | Facebook researchers publish their work on DeepFace, a system that uses neural networks to identify faces with 97.35% accuracy. The results reduce the error of previous systems by more than 27% and rival human performance.[81] "Facebook develops DeepFace, a software algorithm that is able to recognize or verify individuals on photos to the same level as humans can."[7] "DeepFace was a deep neural network created by Facebook, and they claimed that it could recognize a person with the same precision as a human can do."[14] | |

| 2014 (May 26) | Software release | Apache Spark is first released by Matei Zaharia and others at the AMPLab at UC Berkeley. Spark is a unified analytics engine for large-scale data processing. It provides high-level APIs in Java, Scala, Python, and R, and an optimized engine that supports general execution graphs. It also supports a rich set of higher-level tools including Spark SQL for SQL and structured data processing, MLlib for machine learning, GraphX for graph processing, and Spark Streaming. It would become a popular choice for big data processing, and would be used by a wide variety of companies, including Uber, Airbnb, and Netflix.[27][82] | |

| 2014 | Sibyl | Researchers from Google detail their work on Sibyl,[83] a proprietary platform for massively parallel machine learning used internally by Google to make predictions about user behavior and provide recommendations.[84] | |

| 2014 | The chatbot named "Eugene Goostman" is reported to pass the Turing Test by convincing 33% of human judges that it is a human rather than a machine. This marks the first instance in which a chatbot achieves such a level of deception in the test.[14] It is developed by Russian-born Vladimir Veselov, Ukrainian-born Eugene Demchenko, and Russian-born Sergey Ulasen.[85] | ||

| 2014 | British artificial intelligence company DeepMind, founded in 2010 by Demis Hassabis, Shane Legg, and Mustafa Suleyman, is acquired by Google. DeepMind is known for its work in reinforcement learning, a type of machine learning that allows computers to learn by trial and error. DeepMind would develop a number of successful reinforcement learning systems, including AlphaGo, which would defeat a professional human Go player in 2016.[2] | ||

| 2014 | Ian Goodfellow and his colleagues invent Generative Adversarial Networks (GANs), a type of unsupervised learning algorithm that can be used to generate realistic images, text, and other data. GANs work by pitting two neural networks against each other: the generator, which creates new data, and the discriminator, which determines whether a given sample is real or was produced by the generator. As the two networks are trained, each becomes better at its task, so the generated data becomes increasingly hard to tell apart from real data. GANs would be used for a variety of applications.[3][86] | ||

| 2015 (February) | spaCy is released. It is a free, open-source natural language processing (NLP) library for Python. It is a powerful tool for NLP tasks such as text classification, named entity recognition, and part-of-speech tagging. It is fast, efficient, and easy to use. spaCy would be used by a wide range of companies and organizations, including Google, Facebook, and Amazon. It is also used by many academic researchers.[87][88] | ||

| 2015 (March 27) | Software release | Keras | Keras is first released. It is an open source software library designed to simplify the creation of deep learning models.[89] |

| 2015 (June 9) | Software release | Chainer | Chainer is released by Preferred Networks, Inc. in Japan. A deep learning framework written in Python, it would become a popular choice for deep learning research and development.[90][91] |

| 2015 (October 8) | Software release | Apache SINGA | Apache SINGA is first released. It is an open-source distributed machine learning library that facilitates the training of large-scale machine learning (especially deep learning) models over a cluster of machines. The SINGA project was initiated by the DB System Group at National University of Singapore in 2014, in collaboration with the database group of Zhejiang University. The goal of the project was to support complex analytics at scale, and make database systems more intelligent and autonomic. Apache SINGA would be used by a number of organizations, including Citigroup, NetEase, and Singapore General Hospital. It would become a popular choice for distributed deep learning because it is easy to use, scalable, and efficient.[92] |

| 2015 | Achievement | Beating Humans in Go | Google's AlphaGo program becomes the first Computer Go program to beat an unhandicapped professional human player[93] using a combination of machine learning and tree search techniques.[94] |

| 2015 | Software release | Google releases TensorFlow, an open source software library for machine learning.[95] | |

| 2015 | Amazon launches its own machine learning platform called Amazon Machine Learning (Amazon ML). It is a cloud-based service that allows developers to build, train, and deploy machine learning models without having to worry about the underlying infrastructure.[7][2] | ||

| 2015 | The Distributed Machine Learning Toolkit (DMTK) is first released. It is a Microsoft-developed open-source framework that enables the efficient distribution of machine learning problems across multiple computers. DMTK is built around a parameter server architecture.[7] | ||

| 2015 | Over 3,000 AI and robotics researchers sign an open letter warning of the danger of autonomous weapons which select and engage targets without human intervention. The letter is endorsed by a number of high-profile figures, including Stephen Hawking, Elon Musk, and Steve Wozniak. The letter states that autonomous weapons pose a serious threat to humanity. They could be used to kill without discrimination, and they could be difficult to control. The letter calls for a ban on the development and use of autonomous weapons. The open letter would be influential in raising awareness of the dangers of autonomous weapons. It would also lead to a number of countries considering bans on the development and use of these weapons.[7] | ||

| 2015 | Google's speech recognition program achieves a 49% performance jump using CTC-trained LSTMs. This is a major milestone in the development of speech recognition technology, as it shows that CTC-trained LSTMs can produce speech recognition systems that are significantly more accurate than previous models. CTC-trained LSTMs would later be used in a variety of commercial speech recognition products, including Google's Voice Search and Amazon's Alexa.[2] | ||

| 2015 | Organization | OpenAI | OpenAI is founded as a non-profit research company by Elon Musk, Sam Altman, Ilya Sutskever, and others. The company's mission is to ensure that artificial general intelligence benefits all of humanity.[2] |

| 2015 | PayPal | PayPal adopts a collaborative approach to combat fraud and money laundering on its platform by combining the efforts of humans and machines. Human detectives play a crucial role in identifying the patterns and traits associated with criminal behavior. This knowledge is then utilized by a machine learning program to effectively detect and eliminate fraudulent activity on the PayPal site. The synergy between human expertise and automated algorithms enhances PayPal's ability to identify and thwart fraudulent individuals.[8] | |

| 2016 (January 25) | Microsoft Cognitive Toolkit | Microsoft Cognitive Toolkit is initially released. It is an open-source deep learning toolkit aimed at helping users advance their machine learning projects.[69] | |

| 2016 | AlphaGo | Google's artificial intelligence algorithm, AlphaGo, achieves a significant milestone by defeating a top professional player in the complex Chinese board game Go. Considered more challenging for computers than chess, Go is known for its intricate gameplay. AlphaGo, developed by Google DeepMind, wins four of the five games in its match against Lee Sedol, the world's second-ranked player, and goes on to defeat Ke Jie, the game's number one player, in 2017.[14][7] | |

| 2016 | Software release | FBLearner Flow | Facebook details FBLearner Flow, an internal software platform that allows Facebook software engineers to easily share, train and use machine learning algorithms.[96] FBLearner Flow is used by more than 25% of Facebook's engineers. More than a million models have been trained using the service, which makes more than 6 million predictions per second.[97] |

| 2016 (October) | PyTorch | PyTorch is first released by Adam Paszke, Sam Gross, Soumith Chintala, Gregory Chanan, and others. It is an open-source machine learning framework based on Torch, a scientific computing library used for deep learning research. PyTorch would become a popular choice for deep learning research and development because it is easy to use, flexible, and well-supported by a large community of developers.[98] | |

| 2017 | Alphabet's Jigsaw team develops an intelligent system to combat online trolling. This system is designed to learn and identify trolling behavior by analyzing millions of comments from various websites. The algorithms behind the system have the potential to assist websites with limited moderation resources in detecting and addressing online harassment.[14][8] | ||

| 2017 (May 1) | CellCognition | CellCognition[99][100] |

Visual data

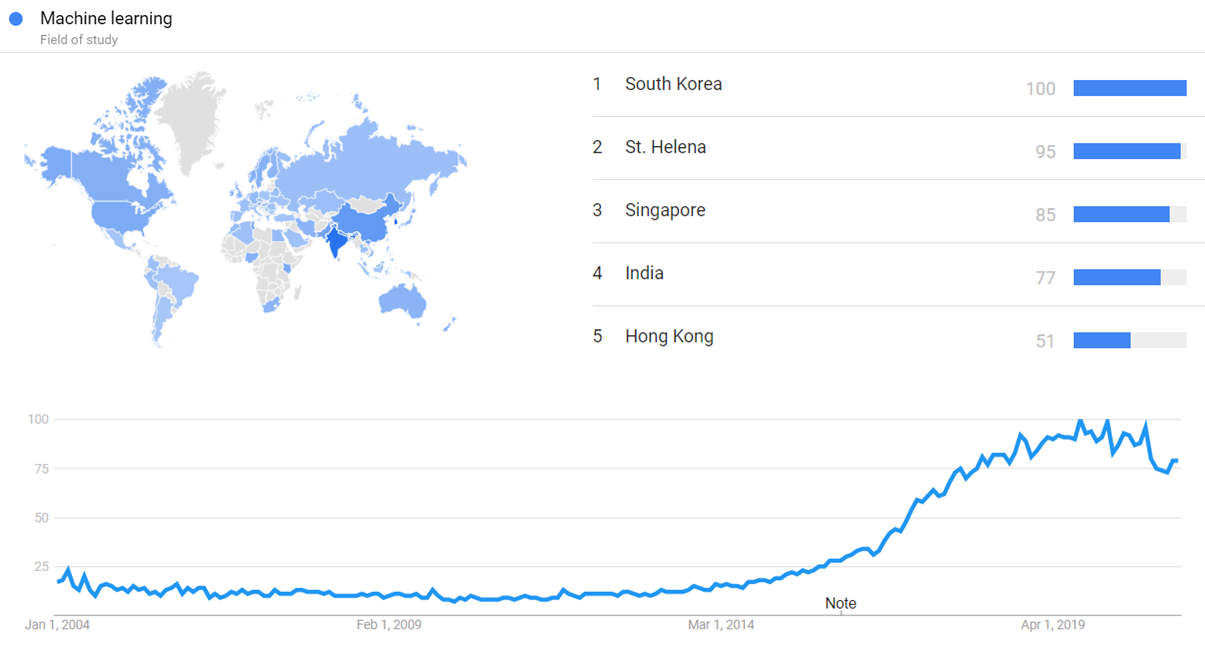

Google Trends

The image below shows Google Trends data for Machine learning (Field of study), from January 2004 to March 2021, when the screenshot was taken. Interest is also ranked by country and displayed on a world map.[101]

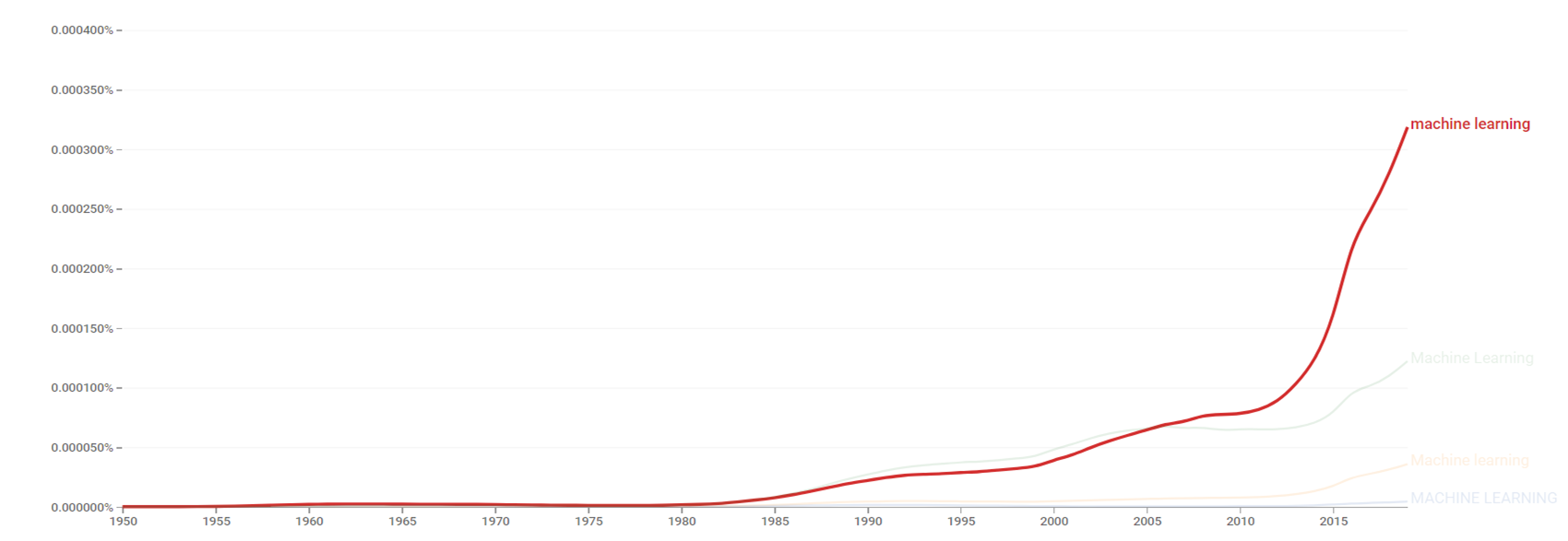

Google Ngram Viewer

The chart below shows Google Ngram Viewer data for Machine learning, from 1950 to 2019.[102]

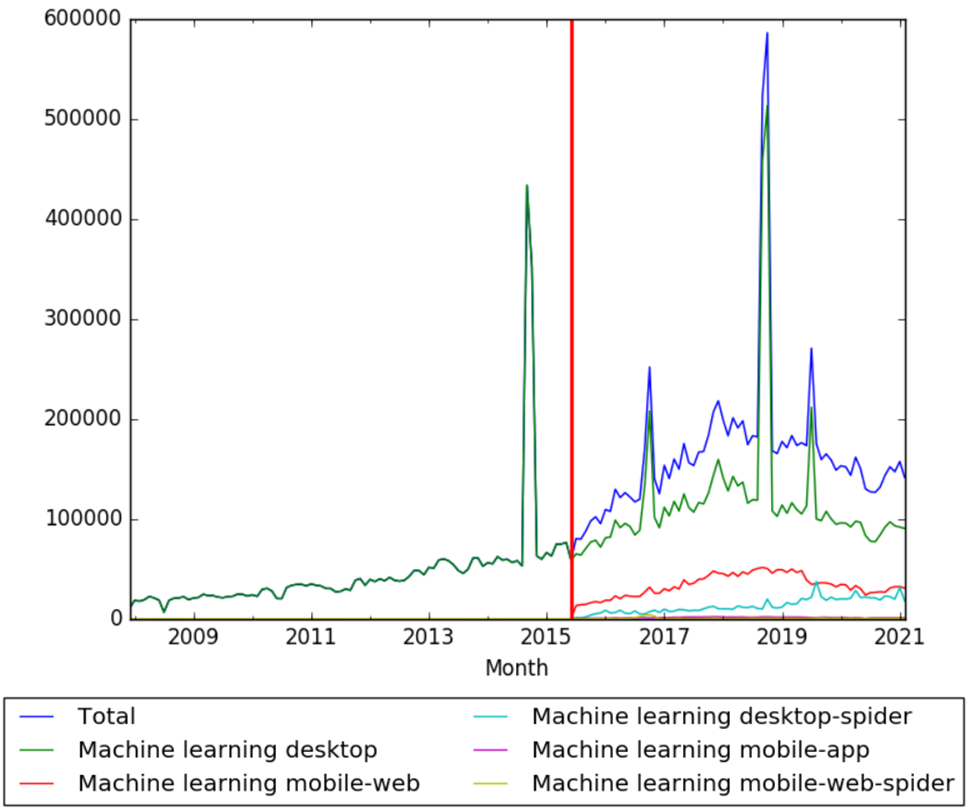

Wikipedia Views

The chart below shows pageviews of the English Wikipedia article Machine learning, on desktop from December 2007, and on mobile-web, desktop-spider, mobile-web-spider and mobile app from July 2015, through February 2021.[103]

See also

- Timeline of DeepMind

- Timeline of AlphaGo

- History of artificial intelligence

- Machine learning

- Timeline of artificial intelligence

- Timeline of machine translation

Meta information on the timeline

How the timeline was built

The initial version of the timeline was written by User:Issa.

Funding information for this timeline is available.

Feedback and comments

Feedback for the timeline can be provided at the following places:

- FIXME

What the timeline is still missing

- Outline of machine learning

- https://factored.ai/machine-learning-engineering/

- https://www.dataversity.net/a-brief-history-of-machine-learning/

- https://www.techtarget.com/whatis/A-Timeline-of-Machine-Learning-History

- https://www.lightsondata.com/the-history-of-machine-learning/

- https://www.clickworker.com/customer-blog/history-of-machine-learning/

- https://analyticsindiamag.com/the-history-of-machine-learning-algorithms/

- https://www.startechup.com/blog/machine-learning-history/

- https://blog.bccresearch.com/brief-history-of-machine-learning

- https://dataconomy.com/2022/04/27/the-history-of-machine-learning/

- https://www.forbes.com/sites/bernardmarr/2016/02/19/a-short-history-of-machine-learning-every-manager-should-read/?sh=3dfbc88515e7

- https://techcrunch.com/2017/08/08/the-evolution-of-machine-learning/

- https://concisesoftware.com/blog/history-of-machine-learning/

- https://www.linkedin.com/pulse/rise-machine-learning-history-florian-steinig/

- https://labelyourdata.com/articles/history-of-machine-learning-how-did-it-all-start

- https://pandio.com/when-was-machine-learning-invented/

- https://medium.com/@codetain/a-brief-history-of-machine-learning-38f20c155c42

- https://medium.com/bloombench/history-of-machine-learning-7c9dc67857a5

- https://theintactone.com/2021/11/27/history-of-machine-learning/

- https://recro.io/blog/history-of-machine-learning/

- https://www.britannica.com/technology/machine-learning

- https://ifatwww.et.uni-magdeburg.de/ifac2020/media/pdfs/3439.pdf

- https://people.idsia.ch/~juergen/deep-learning-history.html

- https://www.researchgate.net/figure/History-of-machine-learning_fig1_366424883

- https://www.bvp.com/atlas/the-evolution-of-machine-learning-infrastructure

- https://www.securityinfowatch.com/cybersecurity/article/21114214/a-brief-history-of-machine-learning-in-cybersecurity

- https://builtin.com/artificial-intelligence/deep-learning-history

- https://www.interactions.com/blog/technology/history-machine-learning/

- https://besjournals.onlinelibrary.wiley.com/doi/full/10.1111/2041-210X.14061

- https://ourworldindata.org/brief-history-of-ai

Timeline update strategy

Pingbacks

External links

References

- ↑ Firican, George (31 January 2022). "The history of Machine Learning". LightsOnData. Retrieved 5 July 2023.

- ↑ 2.00 2.01 2.02 2.03 2.04 2.05 2.06 2.07 2.08 2.09 2.10 2.11 2.12 2.13 2.14 "A Brief History of Machine Learning". dataversity.net. Retrieved 20 February 2020.

- ↑ 3.0 3.1 3.2 3.3 3.4 "A History of Machine Learning and Deep Learning". import.io. Retrieved 21 February 2020.

- ↑ 4.0 4.1 "A brief history of the development of machine learning algorithms". subscription.packtpub.com. Retrieved 25 February 2020.

- ↑ "A BRIEF HISTORY OF MACHINE LEARNING". provalisresearch.com. Retrieved 21 February 2020.

- ↑ "What is Machine Learning?". mlplatform.nl. Retrieved 25 February 2020.

- ↑ 7.00 7.01 7.02 7.03 7.04 7.05 7.06 7.07 7.08 7.09 7.10 7.11 7.12 7.13 7.14 "A Short History of Machine Learning". forbes.com. Retrieved 20 February 2020.

- ↑ 8.0 8.1 8.2 8.3 8.4 8.5 8.6 8.7 "A history of machine learning". cloud.withgoogle.com. Retrieved 21 February 2020.

- ↑ Bayes, Thomas (1 January 1763). "An Essay towards solving a Problem in the Doctrine of Chances" (PDF). Philosophical Transactions. 53: 370–418. doi:10.1098/rstl.1763.0053. Retrieved 15 June 2016.

- ↑ "Jacquard Loom, 1934 - The Henry Ford". www.thehenryford.org. Retrieved 14 June 2023.

- ↑ 11.0 11.1 11.2 "History of Machine Learning". medium.com. Retrieved 25 February 2020.

- ↑ Legendre, Adrien-Marie (1805). Nouvelles méthodes pour la détermination des orbites des comètes (in French). Paris: Firmin Didot. p. viii. Retrieved 13 June 2016.

- ↑ O'Connor, J J; Robertson, E F. "Pierre-Simon Laplace". School of Mathematics and Statistics, University of St Andrews, Scotland. Retrieved 15 June 2016.

- ↑ 14.00 14.01 14.02 14.03 14.04 14.05 14.06 14.07 14.08 14.09 14.10 14.11 14.12 14.13 "History of Machine Learning". javatpoint.com. Retrieved 21 February 2020.

- ↑ Domingos, Pedro (22 September 2015). The Master Algorithm: How the Quest for the Ultimate Learning Machine Will Remake Our World (1st ed.). Basic Books.

- ↑ Hayes, Brian. "First Links in the Markov Chain". American Scientist (March–April 2013). Sigma Xi, The Scientific Research Society: 92. doi:10.1511/2013.101.1. Retrieved 15 June 2016.

Delving into the text of Alexander Pushkin's novel in verse Eugene Onegin, Markov spent hours sifting through patterns of vowels and consonants. On January 23, 1913, he summarized his findings in an address to the Imperial Academy of Sciences in St. Petersburg. His analysis did not alter the understanding or appreciation of Pushkin's poem, but the technique he developed—now known as a Markov chain—extended the theory of probability in a new direction.

- ↑ Bernhardt, Chris (2016). "Turing's Vision: The Birth of Computer Science". The MIT Press.

- ↑ 18.00 18.01 18.02 18.03 18.04 18.05 18.06 18.07 18.08 18.09 "Brief History of Machine Learning". erogol.com. Retrieved 24 February 2020.

- ↑ Turing, Alan (October 1950). "COMPUTING MACHINERY AND INTELLIGENCE". MIND. 59 (236): 433–460. doi:10.1093/mind/LIX.236.433. Retrieved 8 June 2016.

- ↑ Crevier 1993, pp. 34–35 and Russell & Norvig 2003, p. 17

- ↑ McCarthy, John; Feigenbaum, Ed. "Arthur Samuel: Pioneer in Machine Learning". AI Magazine. No. 3. Association for the Advancement of Artificial Intelligence. p. 10. Retrieved 5 June 2016.

- ↑ 22.0 22.1 22.2 Koch, Robert (1 September 2022). "History of Machine Learning - A Journey through the Timeline". clickworker.com. Retrieved 3 July 2023.

- ↑ Rosenblatt, Frank (1958). "THE PERCEPTRON: A PROBABILISTIC MODEL FOR INFORMATION STORAGE AND ORGANIZATION IN THE BRAIN" (PDF). Psychological Review. 65 (6): 386–408.

- ↑ Mason, Harding; Stewart, D; Gill, Brendan (6 December 1958). "Rival". The New Yorker. Retrieved 5 June 2016.

- ↑ Bheemaiah, Kariappa; Esposito, Mark; Tse, Terence (3 May 2017). "What is machine learning?". The Conversation. Retrieved 3 July 2023.

- ↑ "Seventy years of highs and lows in the history of machine learning". fastcompany.com. Retrieved 25 February 2020.

- ↑ 27.0 27.1 27.2 27.3 27.4 "History of deep machine learning". medium.com. Retrieved 21 February 2020.

- ↑ Marr, Bernard. "A Short History of Machine Learning - Every Manager Should Read". Forbes. Retrieved 28 Sep 2016.

- ↑ Cohen, Harvey. "The Perceptron". Retrieved 5 June 2016.

- ↑ Colner, Robert. "A brief history of machine learning". SlideShare. Retrieved 5 June 2016.

- ↑ Seppo Linnainmaa (1970). The representation of the cumulative rounding error of an algorithm as a Taylor expansion of the local rounding errors. Master's Thesis (in Finnish), Univ. Helsinki, 6-7.

- ↑ Seppo Linnainmaa (1976). Taylor expansion of the accumulated rounding error. BIT Numerical Mathematics, 16(2), 146-160.

- ↑ Griewank, Andreas (2012). Who Invented the Reverse Mode of Differentiation?. Optimization Stories, Documenta Mathematica, Extra Volume ISMP (2012), 389-400.

- ↑ Griewank, Andreas and Walther, A.. Principles and Techniques of Algorithmic Differentiation, Second Edition. SIAM, 2008.

- ↑ Jürgen Schmidhuber (2015). Deep learning in neural networks: An overview. Neural Networks 61 (2015): 85-117. ArXiv

- ↑ Jürgen Schmidhuber (2015). Deep Learning. Scholarpedia, 10(11):32832. Section on Backpropagation

- ↑ Dempster, A.P.; Laird, N.M.; Rubin, D.B. (1977). "Maximum Likelihood from Incomplete Data via the EM Algorithm". Journal of the Royal Statistical Society, Series B. 39 (1): 1–38.

- ↑ "Rise of the machines". mydigitalpublication.com. Retrieved 5 July 2023.

- ↑ Marr, Bernard. "A Short History of Machine Learning - Every Manager Should Read". Forbes. Retrieved 28 Sep 2016.

- ↑ Fukushima, Kunihiko (1980). "Neocognitron: A Self-organizing Neural Network Model for a Mechanism of Pattern Recognition Unaffected by Shift in Position" (PDF). Biological Cybernetics. 36: 193–202. doi:10.1007/bf00344251. Retrieved 5 June 2016.

- ↑ Le Cun, Yann. "Deep Learning". Retrieved 5 June 2016.

- ↑ Linde, Y.; Buzo, A.; Gray, R. (1980). "An Algorithm for Vector Quantizer Design". IEEE Transactions on Communications. 28: 84–95. doi:10.1109/TCOM.1980.1094577.

- ↑ Marr, Bernard. "A Short History of Machine Learning - Every Manager Should Read". Forbes. Retrieved 28 Sep 2016.

- ↑ Hopfield, John (April 1982). "Neural networks and physical systems with emergent collective computational abilities" (PDF). Proceedings of the National Academy of Sciences of the United States of America. 79: 2554–2558. doi:10.1073/pnas.79.8.2554. Retrieved 8 June 2016.

- ↑ Bozinovski, S. (1982). "A self-learning system using secondary reinforcement" . In Trappl, Robert (ed.). Cybernetics and Systems Research: Proceedings of the Sixth European Meeting on Cybernetics and Systems Research. North Holland. pp. 397–402.

- ↑ Marr, Bernard. "A Short History of Machine Learning - Every Manager Should Read". Forbes. Retrieved 28 Sep 2016.

- ↑ Rumelhart, David; Hinton, Geoffrey; Williams, Ronald (9 October 1986). "Learning representations by back-propagating errors" (PDF). Nature. 323: 533–536. doi:10.1038/323533a0. Retrieved 5 June 2016.

- ↑ Dehaene S, Changeux JP. Experimental and theoretical approaches to conscious processing. Neuron. 2011 Apr 28;70(2):200-27.

- ↑ Changeux JP, Dehaene S. Hierarchical neuronal modeling of cognitive functions: from synaptic transmission to the Tower of London. Comptes Rendus de l'Académie des Sciences, Série III. 1998 Feb–Mar;321(2–3):241-7.

- ↑ Dehaene S, Changeux JP, Nadal JP. Neural networks that learn temporal sequences by selection. Proc Natl Acad Sci U S A. 1987 May;84(9):2727-31.

- ↑ "Machine Learning". springer.com. Retrieved 9 March 2020.

- ↑ Watkins, Christopher (1 May 1989). "Learning from Delayed Rewards" (PDF).

- ↑ Markoff, John (29 August 1990). "BUSINESS TECHNOLOGY; What's the Best Answer? It's Survival of the Fittest". New York Times. Retrieved 8 June 2016.

- ↑ Watkins, C.J.C.H. (1989), Learning from Delayed Rewards (PDF) (Ph.D. thesis), Cambridge University

- ↑ Watkins, C.J.C.H.; Dayan, P. (1992). "Q-learning". Machine Learning.

- ↑ Tesauro, Gerald (March 1995). "Temporal Difference Learning and TD-Gammon". Communications of the ACM. 38 (3).

- ↑ Ho, Tin Kam (August 1995). "Random Decision Forests" (PDF). Proceedings of the Third International Conference on Document Analysis and Recognition. 1. Montreal, Quebec: IEEE: 278–282. doi:10.1109/ICDAR.1995.598994. ISBN 0-8186-7128-9. Retrieved 5 June 2016.

- ↑ Cortes, Corinna; Vapnik, Vladimir (September 1995). "Support-vector networks" (PDF). Machine Learning. 20 (3). Kluwer Academic Publishers: 273–297. doi:10.1007/BF00994018. ISSN 0885-6125. Retrieved 5 June 2016.

- ↑ Marr, Bernard. "A Short History of Machine Learning - Every Manager Should Read". Forbes. Retrieved 28 Sep 2016.

- ↑ Hochreiter, Sepp; Schmidhuber, Jürgen (1997). "LONG SHORT-TERM MEMORY" (PDF). Neural Computation. 9 (8): 1735–1780. doi:10.1162/neco.1997.9.8.1735.

- ↑ LeCun, Yann; Cortes, Corinna; Burges, Christopher. "THE MNIST DATABASE of handwritten digits". Retrieved 16 June 2016.

- ↑ Breunig, M. M.; Kriegel, H.-P.; Ng, R. T.; Sander, J. (2000). LOF: Identifying Density-based Local Outliers (PDF). Proceedings of the 2000 ACM SIGMOD International Conference on Management of Data. SIGMOD. pp. 93–104. doi:10.1145/335191.335388. ISBN 1-58113-217-4.

- ↑ Friedman, Jerome; Hastie, Trevor; Tibshirani, Robert (2000). "Additive logistic regression: a statistical view of boosting". Annals of Statistics. 28 (2): 337–407. doi:10.1214/aos/1016218223.

- ↑ Yu, Cui; Ooi, Beng Chin; Tan, Kian-Lee; Jagadish, H. V. (2001). "Indexing the distance: an efficient method to KNN processing". Proceedings of the 27th International Conference on Very Large Data Bases. Rome, Italy. pp. 421–430.

- ↑ Collobert, Ronan; Bengio, Samy; Mariethoz, Johnny (30 October 2002). "Torch: a modular machine learning software library" (PDF). Retrieved 5 June 2016.

- ↑ Ham, Ji Hun; Daniel D. Lee; Lawrence K. Saul (2003). "Learning high dimensional correspondences from low dimensional manifolds" (PDF). Proceedings of the Twentieth International Conference on Machine Learning (ICML-2003).

- ↑ "What is scikit-learn ?". njtrainingacademy.com. Retrieved 5 March 2020.

- ↑ "Sharing is Caring with Algorithms". towardsdatascience.com. Retrieved 8 March 2020.

- ↑ "Python's pandas library is on its way to v.1.0.0 – first release candidate has arrived". jaxenter.com. Retrieved 9 March 2020.

- ↑ Liu, Fei Tony; Ting, Kai Ming; Zhou, Zhi-Hua (December 2008). "Isolation Forest". 2008 Eighth IEEE International Conference on Data Mining: 413–422. doi:10.1109/ICDM.2008.17. ISBN 978-0-7695-3502-9.

- ↑ "Encog Machine Learning Framework". heatonresearch.com. Retrieved 8 March 2020.

- ↑ "Apache Mahout". people.apache.org. Retrieved 9 March 2020.

- ↑ "About". Kaggle. Kaggle Inc. Retrieved 16 June 2016.

- ↑ Simon, Phil. Too Big to Ignore: The Business Case for Big Data.

- ↑ "Accord.NET Framework – An extension to AForge.NET". crsouza.com/. Retrieved 9 March 2020.

- ↑ Markoff, John (17 February 2011). "Computer Wins on 'Jeopardy!': Trivial, It's Not". New York Times. p. A1. Retrieved 5 June 2016.

- ↑ Le, Quoc; Ranzato, Marc’Aurelio; Monga, Rajat; Devin, Matthieu; Chen, Kai; Corrado, Greg; Dean, Jeff; Ng, Andrew (12 July 2012). "Building High-level Features Using Large Scale Unsupervised Learning". CoRR. arXiv:1112.6209.

- ↑ Markoff, John (26 June 2012). "How Many Computers to Identify a Cat? 16,000". New York Times. p. B1. Retrieved 5 June 2016.

- ↑ "mlpy". mlpy.sourceforge.net. Retrieved 8 March 2020.

- ↑ Taigman, Yaniv; Yang, Ming; Ranzato, Marc’Aurelio; Wolf, Lior (24 June 2014). "DeepFace: Closing the Gap to Human-Level Performance in Face Verification". Conference on Computer Vision and Pattern Recognition. Retrieved 8 June 2016.

- ↑ "Popular Big Data Engine Apache Spark 2.0 Released". adtmag.com. Retrieved 8 March 2020.

- ↑ Canini, Kevin; Chandra, Tushar; Ie, Eugene; McFadden, Jim; Goldman, Ken; Gunter, Mike; Harmsen, Jeremiah; LeFevre, Kristen; Lepikhin, Dmitry; Llinares, Tomas Lloret; Mukherjee, Indraneel; Pereira, Fernando; Redstone, Josh; Shaked, Tal; Singer, Yoram. "Sibyl: A system for large scale supervised machine learning" (PDF). Jack Baskin School Of Engineering. UC Santa Cruz. Retrieved 8 June 2016.

- ↑ Woodie, Alex (17 July 2014). "Inside Sibyl, Google's Massively Parallel Machine Learning Platform". Datanami. Tabor Communications. Retrieved 8 June 2016.

- ↑ "The Turing Test Is Not What You Think It Is". WNYC. Retrieved 4 July 2023.

- ↑ Goodfellow, Ian; Pouget-Abadie, Jean; Mirza, Mehdi; Xu, Bing; Warde-Farley, David; Ozair, Sherjil; Courville, Aaron; Bengio, Yoshua (2014). Generative Adversarial Networks (PDF). Proceedings of the International Conference on Neural Information Processing Systems (NIPS 2014). pp. 2672–2680.

- ↑ "A Little spaCy Food for Thought: Easy to use NLP Framework". towardsdatascience.com. Retrieved 5 March 2020.

- ↑ "Introducing spaCy". explosion.ai. Retrieved 5 March 2020.

- ↑ "Keras". news.ycombinator.com. Retrieved 5 March 2020.

- ↑ "Big-in-Japan AI code 'Chainer' shows how Intel will gun for GPUs". The Register. 7 April 2017. Retrieved 8 March 2020.

- ↑ "Deep Learning のフレームワーク Chainer を公開しました" [Deep learning framework Chainer released] (in Japanese). 9 June 2015. Retrieved 8 March 2020.

- ↑ "Apache SINGA". singa.apache.org. Retrieved 8 March 2020.

- ↑ "Google achieves AI 'breakthrough' by beating Go champion". BBC News. BBC. 27 January 2016. Retrieved 5 June 2016.

- ↑ "AlphaGo". Google DeepMind. Google Inc. Retrieved 5 June 2016.

- ↑ Dean, Jeff; Monga, Rajat (9 November 2015). "TensorFlow - Google's latest machine learning system, open sourced for everyone". Google Research Blog. Retrieved 5 June 2016.

- ↑ Dunn, Jeffrey (10 May 2016). "Introducing FBLearner Flow: Facebook's AI backbone". Facebook Code. Facebook. Retrieved 8 June 2016.

- ↑ Shead, Sam (10 May 2016). "There's an 'AI backbone' that over 25% of Facebook's engineers are using to develop new products". Business Insider. Allure Media. Retrieved 8 June 2016.

- ↑ "PyTorch Releases Major Update, Now Officially Supports Windows". medium.com. Retrieved 8 March 2020.

- ↑ "CellCognition Explorer". software.cellcognition-project.org. Retrieved 8 March 2020.

- ↑ "A deep learning and novelty detection framework for rapid phenotyping in high-content screening". doi:10.1091/mbc.E17-05-0333. PMC 5687041. PMID 28954863.

- ↑ "Machine learning". Google Trends. Retrieved 11 March 2021.

- ↑ "Machine learning". books.google.com. Retrieved 11 March 2021.

- ↑ "Machine learning". wikipediaviews.org. Retrieved 11 March 2021.