Difference between revisions of "Timeline of Center for Human-Compatible AI"

From Timelines

| (6 intermediate revisions by the same user not shown) | |||

| Line 6: | Line 6: | ||

! Time period !! Development summary !! More details | ! Time period !! Development summary !! More details | ||

|- | |- | ||

| − | | | + | | 2016–2021 || CHAI is established and grows || CHAI is founded at UC Berkeley in 2016 and begins producing research on AI alignment. During this period it holds annual workshops, publishes papers on topics such as reward inference and imitation learning, gains recognition within the AI safety community, takes on new PhD students, and forms collaborations with other institutions. |

| + | |- | ||

| + | | 2021–2024 || Continued expansion and recognition || From 2021 onwards, CHAI expands its research and policy work. Highlights include advising on AI regulation and policy, organizing workshops such as the NSF Convergence Accelerator Workshop on Provably Safe and Beneficial AI, presenting at major AI conferences, and publishing research on reward learning, adversarial robustness, and the social impacts of AI. Founder Stuart Russell receives several honors, including an OBE (2021), an AI2050 fellowship (2022), the ACM-AAAI Allen Newell Award (2023), and a place on TIME's list of the 100 Most Influential People in AI (2023). | ||

|} | |} | ||

| + | ==Full timeline== | ||

| + | Here are the inclusion criteria for various event types in the timeline related to CHAI (Center for Human-Compatible AI): | ||

| + | |||

| + | *Publication: The intention is to include the most notable publications. These are typically those that have been highlighted by CHAI itself or have gained recognition in the broader AI safety community or media. Given the large volume of research papers, only papers that have significant impact, such as being accepted at prominent conferences or being discussed widely, are included. | ||

| + | *Website: The intention is to include all websites directly associated with CHAI or initiatives that CHAI is heavily involved in. This includes new websites launched by CHAI or collaborative websites for joint projects. | ||

| + | *Staff: The intention is to include significant changes or additions to CHAI staff, particularly new PhD students, research fellows, senior scientists, and leadership positions. Promotions and transitions in key roles are also included. | ||

| + | *Workshop: All workshops organized or hosted by CHAI are included, particularly those that focus on advancing AI safety, alignment research, or collaborations within the AI research community. Virtual and in-person events count equally if organized by CHAI. | ||

| + | *Conference: All conferences that CHAI organizes or leads are included. Conferences at which CHAI staff give significant presentations, lead discussions, or organize major sessions are also included. | ||

| + | *Internal Review: This includes annual or progress reports published by CHAI, summarizing achievements, research outputs, and strategic directions. These reports provide a comprehensive review of the organization's work over a given period. | ||

| + | *External Review: Includes substantive reviews of CHAI's work by external bodies or media. Only reviews that treat CHAI or its researchers in significant detail are included. | ||

| + | *Financial: Donations and funding announcements of over $10,000 are included. Funding that supports major initiatives or collaborations advancing AI safety research is highlighted. | ||

| + | *Staff Recognition: This includes awards, honors, and recognitions received by CHAI researchers for their contributions to AI safety or AI ethics. Recognitions such as TIME’s list of the 100 Most Influential People in AI or prestigious fellowships are included. | ||

| + | *Social Media: Significant milestones such as new social media account creations for CHAI or major social media events (like Reddit AMAs) hosted by CHAI-affiliated researchers are included. | ||

| + | *Project Announcements and Initiatives: Major projects, initiatives, or research programs launched by CHAI that are aimed at advancing AI alignment, existential risk mitigation, or improving AI-human cooperation are included. These announcements highlight new directions or collaborative efforts in AI safety. | ||

| + | *Collaboration: Includes significant collaborations with other institutions where CHAI plays a major role, such as co-authoring reports, leading joint research projects, or providing advisory roles. Collaborations aimed at policy, safety, or alignment are particularly relevant. | ||

| − | |||

{| class="sortable wikitable" | {| class="sortable wikitable" | ||

| Line 91: | Line 107: | ||

|- | |- | ||

| − | | 2018 || {{dts|December}} || Media Mention || Stuart Russell and Rosie Campbell from CHAI are quoted in a Vox article titled "The Case for Taking AI Seriously as a Threat to Humanity." | + | | 2018 || {{dts|December}} || Media Mention || Stuart Russell and Rosie Campbell from CHAI are quoted in a Vox article titled "The Case for Taking AI Seriously as a Threat to Humanity." They emphasize the potential for AI systems to be developed without adequate safety measures or ethical considerations, which could lead to unintended and potentially harmful consequences. The article highlights their advocacy for aligning AI with human values and ensuring that AI safety is prioritized to prevent misuse or detrimental effects on society.<ref>{{cite web|url=https://www.vox.com |title=The Case for Taking AI Seriously as a Threat to Humanity |publisher=Vox |date=December 2018 |accessdate=September 30, 2024}}</ref> |

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|January 8}} || Talks || Rosie Campbell gives talks at AI meetups in San Francisco and the East Bay, discussing neural networks and CHAI's approach to AI safety. The talks aim to engage the local AI community with the challenges of AI alignment.<ref>{{cite web|url=https://humancompatible.ai/news/2019/01/08/Rosie_Demystify/ |title=Rosie Campbell Speaks About AI Safety and Neural Networks at San Francisco and East Bay AI Meetups |website=CHAI |date=January 8, 2019 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|January 15}} || Award || Stuart Russell receives the AAAI Feigenbaum Prize for his work on probabilistic knowledge representation and reasoning, which has been applied to global challenges such as seismic monitoring for nuclear test detection.<ref>{{cite web|url=https://humancompatible.ai/news/2019/01/15/russel_prize/ |title=Stuart Russell Receives AAAI Feigenbaum Prize |website=CHAI |date=January 15, 2019 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|January 17}} || Conference || At AAAI 2019, CHAI faculty present several papers on topics ranging from deception strategies in security games to multi-agent reinforcement learning, as well as work on the social implications of AI and ethical considerations in AI deployment.<ref>{{cite web|url=https://humancompatible.ai/news/2019/01/17/CHAI_AAAI2019/ |title=CHAI Papers at the AAAI 2019 Conference |website=CHAI |date=January 17, 2019 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|January 20}} || Award || Former CHAI intern Alex Turner wins the AI Alignment Prize for his work on penalizing impact via attainable utility preservation (AUP). AUP penalizes an agent for changing its ability to achieve a range of auxiliary goals, discouraging actions with large unintended side effects.<ref>{{cite web|url=https://humancompatible.ai/news/2019/01/20/turner_prize/ |title=Former CHAI Intern Wins AI Alignment Prize |website=CHAI |date=January 20, 2019 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|January 29}} || Conference || At the ACM FAT* Conference, Smitha Milli and Anca Dragan present two papers addressing the ethical implications of AI systems, particularly the need for transparency in AI decision-making to ensure fairness and accountability.<ref>{{cite web|url=https://humancompatible.ai/news/2019/01/29/CHAI_FAT2019/ |title=CHAI Papers at FAT* 2019 |website=CHAI |date=January 29, 2019 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|June 15}} || Conference || CHAI researchers Rohin Shah, Pieter Abbeel, and Anca Dragan present their research at ICML 2019, focusing on human-AI coordination and the challenge of accounting for human biases in AI reward inference.<ref>{{cite web|url=https://humancompatible.ai/news/2019/06/15/talks_icml_2019/ |title=CHAI Presentations at ICML |website=CHAI |date=June 15, 2019 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|July 5}} || Publication || CHAI releases an open-source imitation learning library, developed by Steven Wang, Adam Gleave, and Sam Toyer, that provides implementations and benchmarks of algorithms such as GAIL and AIRL.<ref>{{cite web|url=https://humancompatible.ai/news/2019/07/05/imitation_learning_library/ |title=CHAI Releases Imitation Learning Library |website=CHAI |date=July 5, 2019 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|July 5}} || Research Summary || Rohin Shah publishes a summary of CHAI's work on learning human biases for reward inference, which asks whether an AI system can learn how people deviate from rational behavior instead of assuming a fixed model of human rationality.<ref>{{cite web|url=https://humancompatible.ai/news/2019/07/05/rohin_blogposts/ |title=Rohin Shah Summarizes CHAI's Research on Learning Human Biases |website=CHAI |date=July 5, 2019 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|August 15}} || Media Publication || Mark Nitzberg writes an article in WIRED advocating for an “FDA for algorithms,” calling for stricter regulatory oversight of AI development to ensure safety and transparency in AI systems.<ref>{{cite web|url=https://www.wired.com/story/fda-for-algorithms/ |title=Mark Nitzberg Writes in WIRED on the Need for an FDA for Algorithms |website=WIRED |date=August 15, 2019 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|August 28}} || Paper Submission || Thomas Krendl Gilbert submits "The Passions and the Reward Functions: Rival Views of AI Safety?" to FAT* 2020. The paper explores philosophical perspectives on AI safety and the ethical alignment of AI reward systems with human emotions.<ref>{{cite web|url=https://humancompatible.ai/news/2019/08/28/gilbert_paper/ |title=Thomas Krendl Gilbert Submits Paper on Philosophical AI Safety |website=CHAI |date=August 28, 2019 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

|- | |- | ||

| − | | 2019 || {{dts| | + | | 2019 || {{dts|September 28}} || Newsletter || Rohin Shah expands the AI Alignment Newsletter, which offers detailed summaries of recent AI safety research and serves as a key resource for the AI safety community.<ref>{{cite web|url=https://humancompatible.ai/news/2019/09/28/newsletter_update/ |title=Rohin Shah Expands the AI Alignment Newsletter |website=CHAI |date=September 28, 2019 |accessdate=October 7, 2024}}</ref>

| + | |- | ||

| + | |||

| + | | 2020 || {{dts|June 1}} || Workshop || CHAI holds its first virtual workshop due to COVID-19, bringing together around 150 participants from the AI safety community. The event features discussions, talks, and collaborations focused on reducing existential risks from advanced AI.<ref>{{cite web|url=https://humancompatible.ai/news/2020/06/01/first-virtual-workshop/ |title=CHAI Holds Its First Virtual Workshop |website=CHAI |date=June 1, 2020 |accessdate=October 7, 2024}}</ref> | ||

|- | |- | ||

| − | | | 2020 || {{dts|September 1}} || Staff || Six new PhD students join CHAI, each advised by CHAI principal investigators. The incoming students (Yuxi Liu, Micah Carroll, Cassidy Laidlaw, Alex Gunning, Alyssa Dayan, and Jessy Lin) bring interests ranging from mathematics to AI-human cooperation.<ref>{{cite web|url=https://humancompatible.ai/news/2020/09/01/six-new-phd-students-join-chai/ |title=Six New PhD Students Join CHAI |website=CHAI |date=September 1, 2020 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | 2020 || {{dts|September 10}} || Publication || CHAI PhD student Rachel Freedman has two papers accepted at IJCAI-20 workshops. The first, "Choice Set Misspecification in Reward Inference," analyzes how errors in a robot's model of which options a human was choosing from affect its inference of the human's reward function. The second, "Aligning with Heterogeneous Preferences for Kidney Exchange," proposes AI methods for aggregating preferences in kidney exchange programs.<ref>{{cite web|url=https://humancompatible.ai/news/2020/09/10/rachel-freedman-papers-ijcai/ |title=IJCAI-20 Accepts Two Papers by CHAI PhD Student Rachel Freedman |website=CHAI |date=September 10, 2020 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | 2020 || {{dts|October 10}} || Publication || Brian Christian publishes "The Alignment Problem: Machine Learning and Human Values." The book traces key milestones and open challenges in AI safety, surveys technical progress in the field, and features the work of many CHAI researchers.<ref>{{cite web|url=https://humancompatible.ai/news/2020/10/10/alignment-problem-published/ |title=Brian Christian Publishes The Alignment Problem |website=CHAI |date=October 10, 2020 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | 2020 || {{dts|October 21}} || Workshop || CHAI hosts a virtual event to celebrate the launch of Brian Christian’s book "The Alignment Problem." The event, hosted by journalist Nora Young, includes an interview with the author and an audience Q&A on AI safety and ethics.<ref>{{cite web|url=https://humancompatible.ai/news/2020/11/05/book-launch-alignment-problem/ |title=Watch the Book Launch of The Alignment Problem in Conversation with Brian Christian and Nora Young |website=CHAI |date=November 5, 2020 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | + | | 2020 || {{dts|November 12}} || Internship || CHAI opens applications for its 2021 research internships. Interns work on research projects with mentors and participate in seminars and workshops. The early deadline is November 23, and the final deadline is December 13.<ref>{{cite web|url=https://humancompatible.ai/news/2020/11/12/internship-applications/ |title=CHAI Internship Application Is Now Open |website=CHAI |date=November 12, 2020 |accessdate=October 7, 2024}}</ref> |

|- | |- | ||

| − | | | + | | 2020 || {{dts|December 20}} || Financial || The Survival and Flourishing Fund (SFF) donates $799,000 to CHAI and $247,000 to the Berkeley Existential Risk Initiative for BERI-CHAI collaboration. These funds support AI safety research and collaborations aiming to improve humanity’s long-term survival prospects.<ref>{{cite web|url=https://humancompatible.ai/news/2020/12/20/chai-beri-donations/ |title=CHAI and BERI Receive Donations |website=CHAI |date=December 20, 2020 |accessdate=October 7, 2024}}</ref> |

|- | |- | ||

| − | | | 2021 || {{dts|January 6}} || Podcast || Daniel Filan launches the AI X-risk Research Podcast (AXRP), which features interviews with researchers about their work on AI alignment, its technical challenges, and existential risk from AI.<ref>{{cite web|url=https://humancompatible.ai/news/2021/01/06/daniel-filan-launches-ai-x-risk-research-podcast/ |title=Daniel Filan Launches AI X-risk Research Podcast |website=Center for Human-Compatible AI |date=January 6, 2021 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | 2021 || {{dts|February 5}} || Publication || Thomas Krendl Gilbert and co-authors publish the paper "AI Development for the Public Interest: From Abstraction Traps to Sociotechnical Risks" at IEEE ISTAS20. The paper argues that AI research falls into "abstraction traps" when it abstracts away social context, and advocates integrating social context and ethical considerations into AI systems development.<ref>{{cite web|url=https://humancompatible.ai/news/2021/02/05/thomas-gilbert-published-in-ieee-istas20/ |title=Tom Gilbert Published in IEEE ISTAS20 |website=Center for Human-Compatible AI |date=February 5, 2021 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | + | | 2021 || {{dts|February 9}} || Debate || Stuart Russell debates Melanie Mitchell on The Munk Debates. Russell discusses AI safety concerns, the urgency of AI alignment research, and the risks associated with unregulated AI development. He stresses the importance of international governance to control AI technologies safely.<ref>{{cite web|url=https://humancompatible.ai/news/2021/02/09/stuart-russell-on-the-munk-debates/ |title=Stuart Russell on The Munk Debates |website=Center for Human-Compatible AI |date=February 9, 2021 |accessdate=October 7, 2024}}</ref> |

|- | |- | ||

| + | | 2021 || {{dts|January 25}} || Podcast || Michael Dennis appears on the TalkRL podcast, discussing reinforcement learning, AI safety, and the challenges in creating safe and reliable AI systems. Dennis addresses the complexities of reward design and behavior modeling to align AI with human objectives.<ref>{{cite web|url=https://www.talkrl.com/episodes/michael-dennis |title=Michael Dennis |website=TalkRL Podcast |date=January 25, 2021 |accessdate=October 7, 2024}}</ref> | ||

| − | | | + | |- |

| + | |||

| + | | 2021 || {{dts|March 18}} || Conference || At AAAI 2021, CHAI faculty and affiliates present multiple papers focusing on AI alignment, safe reinforcement learning, and improving AI-human interactions. Contributions include research on scalable reward modeling and methods for better interpretability of AI behavior.<ref>{{cite web|url=https://humancompatible.ai/news/2021/03/18/chai-faculty-and-affiliates-publish-at-aaai-2021/ |title=CHAI Faculty and Affiliates Publish at AAAI 2021 |website=Center for Human-Compatible AI |date=March 18, 2021 |accessdate=October 7, 2024}}</ref> | ||

|- | |- | ||

| − | | | 2022 || {{dts|November 1}} || Award || Brian Christian's book "The Alignment Problem" wins the Excellence in Science Communication Award from Eric and Wendy Schmidt and the National Academies. The book explores AI alignment challenges and the ethical dilemmas of designing AI systems that behave as intended.<ref>{{cite web|url=https://humancompatible.ai/news/2022/11/01/brian-christians-the-alignment-problem-wins-the-excellence-in-science-communication-award-from-eric-wendy-schmidt-and-the-national-academies/ |title=Brian Christian’s “The Alignment Problem” wins the Excellence in Science Communication Award |website=Center for Human-Compatible AI |date=November 1, 2022 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | 2020 || {{dts|April 11}} || Workshop || Stuart Russell and Caroline Jeanmaire organize a virtual workshop titled "AI Economic Futures," in collaboration with the Global AI Council at the World Economic Forum. The workshop series aims to discuss AI policy recommendations and their impact on future economic prosperity.<ref>{{cite web|url=https://humancompatible.ai/news/2020/04/19/inaugural-virtal-workshop/ |title=Professor Stuart Russell and Caroline Jeanmaire Organize Virtual Workshop Titled “AI Economic Futures” |website=Center for Human-Compatible AI |date=April 19, 2020 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | + | | 2021 || {{dts|June 7}}-8 || Workshop || CHAI hosts its Fifth Annual Workshop, where researchers, students, and collaborators discuss advancements in AI safety, alignment, and research progress. The workshop addresses key challenges in AI reward modeling, interpretability, and scalable alignment techniques.<ref>{{cite web|url=https://humancompatible.ai/news/2021/06/16/fifth-annual-chai-workshop/ |title=Fifth Annual CHAI Workshop |website=Center for Human-Compatible AI |date=June 16, 2021 |accessdate=October 7, 2024}}</ref> |

| + | |||

|- | |- | ||

| − | | | + | | 2021 || {{dts|July 9}} || Competition || CHAI researchers contribute to the launch of the NeurIPS MineRL BASALT Competition. The competition aims to promote research in imitation learning, focusing on AI systems learning from human demonstration within the open-world Minecraft environment to improve behavior modeling.<ref>{{cite web|url=https://humancompatible.ai/news/2021/07/09/neurips-minerl-basalt-competition-launches/ |title=NeurIPS MineRL BASALT Competition Launches |website=Center for Human-Compatible AI |date=July 9, 2021 |accessdate=October 7, 2024}}</ref> |

| + | |||

|- | |- | ||

| − | | | + | |

| + | | 2021 || {{dts|June 11}} || Award || Stuart Russell is named an Officer of the Most Excellent Order of the British Empire (OBE) in the 2021 Birthday Honours for his contributions to artificial intelligence research. The honor recognizes his influence on AI research, ethics, and governance.<ref>{{cite web|url=https://www.gov.uk/government/publications/birthday-honours-2021-overseas-and-international-list/birthday-honours-2021-overseas-and-international-list-knight-bachelor-and-order-of-the-british-empire |title=Birthday Honours 2021: Overseas and International List |website=GOV.UK |date=June 11, 2021 |accessdate=October 7, 2024}}</ref> | ||

|- | |- | ||

| − | | | + | | 2021 || {{dts|October 26}} || Internship || CHAI announces its 2022 AI safety research internship program, with applications due by November 13, 2021. The program offers 3-4 month mentorship opportunities to work on AI safety research projects, either in-person at UC Berkeley or remotely. The internship aims to provide experience in technical AI safety research for individuals with a background in mathematics, computer science, or related fields. The selection process includes a cover letter or research proposal, programming assessments, and interviews.<ref>{{cite web|url=https://www.lesswrong.com/posts/Xa4b8vgCLRATiqnJn/chai-internship-applications-are-open-due-nov-13 |title=CHAI Internship Applications are Open |website=LessWrong |date=October 26, 2021 |accessdate=October 7, 2024}}</ref> |

|- | |- | ||

| − | | | 2022 || {{dts|January 18}} || Publication || CHAI researchers publish several papers. Tom Lenaerts and co-authors study voluntary safety commitments in AI development, finding that such commitments can provide an escape from over-regulation. In "Cross-Domain Imitation Learning via Optimal Transport," Arnaud Fickinger, Stuart Russell, and co-authors show how to achieve cross-domain transfer in continuous control domains. Scott Emmons and co-authors publish research on offline reinforcement learning showing that simple design choices can improve empirical performance on RL benchmarks.<ref>{{cite web|url=https://humancompatible.ai/news/2022/01/18/new-papers-published/ |title=New Papers Published |website=Center for Human-Compatible AI |date=January 18, 2022 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | + | | 2022 || {{dts|February 17}} || Award || Stuart Russell joins the inaugural cohort of AI2050 fellows, an initiative launched by Schmidt Futures with $125 million in funding over five years. The goal of AI2050 is to address the challenges of AI development. Russell’s focus is on enhancing AI interpretability, provable safety, and performance through probabilistic programming.<ref>{{cite web|url=https://humancompatible.ai/news/2022/02/17/schmidt-futures-launches-ai2050-to-protect-our-human-future-in-the-age-of-artificial-intelligence%EF%BF%BC/ |title=Schmidt Futures Launches AI2050 to Protect Our Human Future in the Age of Artificial Intelligence |website=Center for Human-Compatible AI |date=February 17, 2022 |accessdate=October 7, 2024}}</ref> |

|- | |- | ||

| − | | | 2023 || {{dts|May}} || Internal Review || CHAI releases a progress report covering its growth, research outputs, and engagements from May 2022 to April 2023. The report lists 32 papers on topics such as assistance games, adversarial robustness, and social impacts of AI, and describes CHAI's work advising on AI regulation and policy. It also highlights CHAI's research on safe development of large language models and on AI vulnerabilities.<ref>{{cite web|url=https://humancompatible.ai/progress-report |title=Progress Report |website=Center for Human-Compatible AI |date=May 31, 2023 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | + | | 2022 || {{dts|October 7}}-9 || Workshop || CHAI holds the NSF Convergence Accelerator Workshop on Provably Safe and Beneficial AI (PSBAI) to develop a research agenda for creating verifiable, well-founded AI systems. The workshop gathers 51 experts from diverse fields such as AI, ethics, and law, to ensure safe AI integration into society.<ref>{{cite web|url=https://humancompatible.ai/progress-report |title=Progress Report |website=Center for Human-Compatible AI |date=May 31, 2023 |accessdate=October 7, 2024}}</ref> |

|- | |- | ||

| − | | | 2022 || {{dts|November 18}} || Publication || The paper “Time-Efficient Reward Learning via Visually Assisted Cluster Ranking,” by Micah Carroll, Anca Dragan, and collaborators, is accepted at the Human-in-the-loop Learning (HILL) Workshop at NeurIPS 2022. The paper uses data visualization to let humans label whole clusters of data points at once rather than each point individually, making more efficient use of human feedback in reward learning.<ref>{{cite web|url=https://humancompatible.ai/news/2022/11/18/time-efficient-reward-learning-via-visually-assisted-cluster-ranking/ |title=Time-Efficient Reward Learning via Visually Assisted Cluster Ranking |website=Center for Human-Compatible AI |date=November 18, 2022 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | 2022 || {{dts|December 12}} || Publication || CHAI researcher Justin Svegliato publishes a paper in the Artificial Intelligence Journal on competence-aware systems (CAS). A CAS reasons about its own competence and adjusts its level of autonomy based on interactions with a human authority, so that it operates at an appropriate level of autonomy in each situation.<ref>{{cite web|url=https://humancompatible.ai/news/2022/12/12/competence-aware-systems/ |title=Competence-Aware Systems |website=Center for Human-Compatible AI |date=December 12, 2022 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | 2022 || {{dts|December 29}} || Publication || CHAI researcher Tom Lenaerts co-authors a paper in Scientific Reports titled "Fast deliberation is related to unconditional behaviour in iterated Prisoners’ Dilemma experiments." The research investigates the relationship between cognitive effort and social value orientation, analyzing how response times in strategic situations reflect different social behaviors.<ref>{{cite web|url=https://humancompatible.ai/news/2022/12/29/fast-deliberation-is-related-to-unconditional-behaviour-in-iterated-prisoners-dilemma-experiments-scientific-reports-121-1-10/ |title=Fast Deliberation is Related to Unconditional Behaviour in Iterated Prisoners’ Dilemma Experiments |website=Center for Human-Compatible AI |date=December 29, 2022 |accessdate=October 7, 2024}}</ref>

| + | |- | ||

| + | | 2023 || {{dts|March 10}} || Publication || CHAI researchers contribute to discussions of AI takeover scenarios, including scenarios in which AI systems maximize objectives such as productive output, resulting in outer misalignment and potential existential risk. The work examines AI's effects on resources critical to humans and the difficulty of deploying transformative AI safely under competitive pressures, and emphasizes the need for robust AI alignment and regulation.<ref>{{cite web|url=https://www.alignmentforum.org/posts/x7dKH4hFJcrw7dDnG/distinguishing-ai-takeover-scenarios |title=Distinguishing AI Takeover Scenarios |website=AI Alignment Forum |date=March 10, 2023 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2023 || {{dts|June 16}}-18 || Workshop || CHAI hosts its 7th annual workshop at Asilomar Conference Grounds, Pacific Grove, California. Nearly 200 attendees participate in discussions, lightning talks, and group activities on AI safety and alignment research. Casual activities like beach walks and bonfires build community within the AI safety field.<ref>{{cite web|url=https://humancompatible.ai/news/2023/06/20/seven-annual-chai-workshop/ |title=Seventh Annual CHAI Workshop |website=Center for Human-Compatible AI |date=June 20, 2023 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2023 || {{dts|September 22}} || Workshop || CHAI holds a virtual sister workshop on the Ethical Design of AIs (EDAI) that complements the Provably Safe and Beneficial AI (PSBAI) workshop. The sessions focus on ethical principles, human-centered AI design, governance, and addressing domain-specific challenges in implementing ethical AI systems.<ref>{{cite web|url=https://humancompatible.ai/progress-report |title=Progress Report |website=CHAI |date=2023 |accessdate=October 7, 2024}}</ref> | ||

|- | |- | ||

| − | | | + | | 2023 || {{dts|September 7}} || Award || Stuart Russell, founder of CHAI and professor at UC Berkeley, is named one of TIME's 100 Most Influential People in AI. Recognized as a leading thinker in AI safety, Russell is acknowledged for his contributions to responsible AI development and advocacy for AI safety, including support for pausing large-scale AI experiments.<ref>{{cite web|url=https://humancompatible.ai/news/2023/09/18/100-most-influential-people-in-ai/ |title=100 Most Influential People in AI |website=Center for Human-Compatible AI |date=September 18, 2023 |accessdate=October 7, 2024}}</ref> |

|- | |- | ||

| − | | | 2023 || {{dts|May 2023}} || Award || Stuart Russell, professor at UC Berkeley and founder of CHAI, receives the ACM-AAAI Allen Newell Award for foundational contributions to AI. The award honors career contributions with broad impact within computer science or across multiple disciplines. Russell is recognized for work including the widely used textbook "Artificial Intelligence: A Modern Approach" and his focus on AI safety.<ref>{{cite web|url=https://engineering.berkeley.edu/news/2023/05/stuart-russell-receives-acms-aaai-allen-newell-award/ |title=Stuart Russell receives ACM’s AAAI Allen Newell Award |website=Berkeley Engineering |date=May 4, 2023 |accessdate=October 7, 2024}}</ref>

|- | |- | ||

| − | | | + | | 2023 || {{dts|November 10}} || Presentation || Jonathan Stray, CHAI Senior Scientist, presents a talk titled “Orienting AI Toward Peace” at Stanford's "Beyond Moderation: How We Can Use Technology to De-Escalate Political Conflict" conference. He proposes strategies for AI to avoid escalating political conflicts, including defining desirable conflicts, developing conflict indicators, and incorporating this feedback into AI objectives.<ref>{{cite web|url=https://humancompatible.ai/news/2023/11/21/orienting-ai-toward-peace/ |title=Orienting AI Toward Peace |website=Center for Human-Compatible AI |date=November 21, 2023 |accessdate=October 7, 2024}}</ref> |

| + | |||

| + | |- | ||

| + | |||

| + | | 2024 || {{dts|March 5}} || Publication || CHAI researchers publish a study of reward learning when human evaluators have only partial observations of an AI system's behavior. The study shows that under such limited information, an AI trained on human feedback can learn to deceptively inflate its apparent performance or to overjustify its actions. The research aims to better align AI with human interests through more refined feedback mechanisms.<ref>{{cite web|url=https://humancompatible.ai/news/2024/03/05/when-your-ais-deceive-you-challenges-with-partial-observability-of-human-evaluators-in-reward-learning/ |title=When Your AIs Deceive You: Challenges with Partial Observability of Human Evaluators in Reward Learning |website=CHAI |date=March 5, 2024 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2024 || {{dts|March 28}} || Publication || Brian Christian, CHAI affiliate and renowned author, shares insights from his academic journey exploring AI that better reflects human values. Christian's work focuses on closing the gap between AI systems' assumptions about human rationality and the complexities of human behavior, offering a more nuanced approach to AI ethics.<ref>{{cite web|url=https://humancompatible.ai/news/2024/03/28/embracing-ai-that-reflects-human-values-insights-from-brian-christians-journey/ |title=Embracing AI That Reflects Human Values: Insights from Brian Christian’s Journey |website=CHAI |date=March 28, 2024 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2024 || {{dts|April 30}} || Presentation || Rachel Freedman, a CHAI PhD graduate student, presents new developments in reinforcement learning with human feedback (RLHF) at Stanford. The research addresses key challenges in modeling human preferences, proposing solutions like active teacher selection (ATS) to improve AI's ability to learn from diverse human feedback.<ref>{{cite web|url=https://humancompatible.ai/news/2024/04/30/reinforcement-learning-with-human-feedback-and-active-teacher-selection-rlhf-and-ats/ |title=Reinforcement Learning with Human Feedback and Active Teacher Selection |website=CHAI |date=April 30, 2024 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2024 || {{dts|June 13}}-16 || Workshop || CHAI holds its 8th annual workshop at Asilomar Conference Grounds, with over 200 attendees. The workshop features more than 60 speakers, covering a range of topics such as societal impacts of AI, adversarial robustness, and AI alignment.<ref>{{cite web|url=https://humancompatible.ai/news/2024/06/18/8th-annual-chai-workshop/ |title=8th Annual CHAI Workshop |website=CHAI |date=June 18, 2024 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2024 || {{dts|July 23}} || Publication || CHAI researchers, including Stuart Russell and Anca Dragan, publish a paper on the challenges of AI alignment with changing and influenceable reward functions. The study introduces Dynamic Reward Markov Decision Processes (DR-MDPs), which model preference changes over time and address the risks associated with AI influencing human preferences.<ref>{{cite web|url=https://humancompatible.ai/news/2024/07/23/ai-alignment-with-changing-and-influenceable-reward-functions/ |title=AI Alignment with Changing and Influenceable Reward Functions |website=CHAI |date=July 23, 2024 |accessdate=October 7, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | | 2024 || {{dts|August 7}} || Publication || Rachel Freedman and Wes Holliday publish a paper at the International Conference on Machine Learning (ICML) discussing how social choice theory can guide AI alignment when dealing with diverse human feedback. The paper explores approaches like reinforcement learning from human feedback and constitutional AI to better aggregate human preferences.<ref>{{cite web|url=https://humancompatible.ai/news/2024/08/07/social-choice-should-guide-ai-alignment-in-dealing-with-diverse-human-feedback/ |title=Social Choice Should Guide AI Alignment in Dealing with Diverse Human Feedback |website=CHAI |date=August 7, 2024 |accessdate=October 7, 2024}}</ref> | ||

|} | |} | ||

Latest revision as of 18:06, 8 October 2024

This is a timeline of the Center for Human-Compatible AI (CHAI).

Big picture

| Time period | Development summary | More details |
|---|---|---|
| 2016–2021 | CHAI is established and grows | CHAI is established at UC Berkeley in 2016 and begins producing research. During this period, CHAI holds annual workshops, publishes papers on AI alignment, and gains recognition within the AI safety community. Several PhD students join, and CHAI forms collaborations with other institutions. |
| 2021–2024 | Continued expansion and recognition | From 2021 onwards, CHAI continues to expand its research and impact. This period includes growing international recognition, contributions to AI policy discussions, participation in global conferences, and new research initiatives focused on AI-human collaboration and safety. CHAI researchers receive major awards, and the center becomes a leading voice on AI alignment. |

Full timeline

Here are the inclusion criteria for various event types in the timeline related to CHAI (Center for Human-Compatible AI):

- Publication: The intention is to include the most notable publications. These are typically those that have been highlighted by CHAI itself or have gained recognition in the broader AI safety community or media. Given the large volume of research papers, only papers that have significant impact, such as being accepted at prominent conferences or being discussed widely, are included.

- Website: The intention is to include all websites directly associated with CHAI or initiatives that CHAI is heavily involved in. This includes new websites launched by CHAI or collaborative websites for joint projects.

- Staff: The intention is to include significant changes or additions to CHAI staff, particularly new PhD students, research fellows, senior scientists, and leadership positions. Promotions and transitions in key roles are also included.

- Workshop: All workshops organized or hosted by CHAI are included, particularly those that focus on advancing AI safety, alignment research, or collaborations within the AI research community. Virtual and in-person events count equally if organized by CHAI.

- Conference: All conferences that CHAI organizes or leads are included. Conferences where CHAI staff give significant presentations, lead discussions, or organize major sessions are also featured.

- Internal Review: This includes annual or progress reports published by CHAI, summarizing achievements, research outputs, and strategic directions. These reports provide a comprehensive review of the organization's work over a given period.

- External Review: Includes substantive reviews of CHAI's work by external bodies or media. Only reviews that treat CHAI or its researchers in significant detail are included.

- Financial: Includes donations and funding announcements of over $10,000. Funding that supports major initiatives or collaborations advancing AI safety research is highlighted.

- Staff Recognition: This includes awards, honors, and recognitions received by CHAI researchers for their contributions to AI safety or AI ethics. Recognitions such as being named to TIME's 100 Most Influential People in AI or receiving prestigious fellowships are included.

- Social Media: Significant milestones such as new social media account creations for CHAI or major social media events (like Reddit AMAs) hosted by CHAI-affiliated researchers are included.

- Project Announcements or Initiatives: Major projects, initiatives, or research programs launched by CHAI that are aimed at advancing AI alignment, existential risk mitigation, or improving AI-human cooperation are included. These announcements highlight new directions or collaborative efforts in AI safety.

- Collaboration: Includes significant collaborations with other institutions in which CHAI plays a major role, such as co-authoring reports, leading joint research projects, or serving in advisory roles. Collaborations aimed at policy, safety, or alignment are particularly relevant.

| Year | Month and date | Event type | Details |
|---|---|---|---|
| 2016 | August | Organization | The UC Berkeley Center for Human-Compatible Artificial Intelligence launches. The focus of the center is "to ensure that AI systems are beneficial to humans".[1] |
| 2016 | August | Financial | The Open Philanthropy Project awards a grant of $5.6 million to the Center for Human-Compatible AI.[2] |
| 2016 | November 24 | Publication | The initial version of "The Off-Switch Game", a paper by Dylan Hadfield-Menell, Anca Dragan, Pieter Abbeel, and Stuart Russell, is uploaded to the arXiv.[3][4] |
| 2016 | December | Publication | CHAI's "Annotated bibliography of recommended materials" is published around this time.[5] |
| 2017 | May 5–6 | Workshop | CHAI's first annual workshop takes place. The annual workshop is "designed to advance discussion and research" to "reorient the field of artificial intelligence toward developing systems that are provably beneficial to humans".[6] |
| 2017 | May 28 | Publication | "Should Robots be Obedient?" by Smitha Milli, Dylan Hadfield-Menell, Anca Dragan, and Stuart Russell is uploaded to the arXiv.[7][4] |
| 2017 | October | Staff | Rosie Campbell joins CHAI as Assistant Director.[8] |
| 2018 | February 1 | Publication | Joseph Halpern publishes "Information Acquisition Under Resource Limitations in a Noisy Environment," which studies how intelligent agents can make informed decisions when their resources are limited and the information they receive is noisy.[9] |
| 2018 | February 7 | Media Mention | CHAI researcher Anca Dragan is featured in a Forbes article on AI research, with a focus on value alignment and the broader ethical implications of AI development. The article highlights her work on aligning AI behavior with human values.[10] |
| 2018 | February 26 | Conference | Anca Dragan presents "Expressing Robot Incapability" at the ACM/IEEE International Conference on Human-Robot Interaction. The work explores how robots can communicate their limitations to humans, an important step toward more trustworthy and transparent human-robot collaboration.[11] |
| 2018 | March 8 | Publication | Anca Dragan and her team publish "Learning from Physical Human Corrections, One Feature at a Time," a paper on human-robot interaction that studies how robots can learn more effectively from human corrections, improving performance in collaborative settings.[12] |
| 2018 | March | Staff | Andrew Critch, who had been on leave from the Machine Intelligence Research Institute to help launch CHAI and the Berkeley Existential Risk Initiative, accepts a position as CHAI's first research scientist.[13] |
| 2018 | April 4–12 | Organization | CHAI gets a new logo (white letters "CHAI" on a green background) sometime during this period.[14][15] |
| 2018 | April 9 | Publication | The Alignment Newsletter is publicly announced. The weekly newsletter summarizes content relevant to AI alignment from the previous week. Before the newsletter was made public, a similar series of emails was produced internally for CHAI.[16][17] (The announcement does not make clear whether the Alignment Newsletter is produced officially by CHAI, or whether only the initial emails were produced for CHAI and the public newsletter is produced independently.) |
| 2018 | April 28–29 | Workshop | CHAI hosts its second annual workshop, bringing together researchers, industry experts, and collaborators to discuss progress and open problems in AI safety and alignment. Themes include reward modeling, interpretability, and human-AI collaboration.[18] |
| 2018 | July 2 | Publication | CHAI researcher Thomas Krendl Gilbert publishes "A Broader View on Bias in Automated Decision-Making" at ICML 2018. The work analyzes how bias in AI systems can perpetuate unfairness, proposes strategies for integrating fairness and ethical standards into automated decision-making, and stresses the importance of addressing bias proactively.[19] |
| 2018 | July 13 | Workshop | At the 1st Workshop on Goal Specifications for Reinforcement Learning, CHAI researcher Daniel Filan presents "Exploring Hierarchy-Aware Inverse Reinforcement Learning." The work aims to help AI agents infer hierarchically structured human goals, so that their behavior better matches complex, multi-level human intentions.[20] |
| 2018 | July 14 | Workshop | At the same workshop, CHAI researchers Adam Gleave and Rohin Shah present "Active Inverse Reward Design." The work explores how AI systems can actively query human users to resolve ambiguities in a reward specification, so that their behavior better matches nuanced human preferences in complex tasks.[21] |
| 2018 | July 16 | Publication | CHAI researchers, including Stuart Russell and Anca Dragan, publish "An Efficient, Generalized Bellman Update For Cooperative Inverse Reinforcement Learning" at ICML 2018. The paper introduces a more efficient way to solve cooperative inverse reinforcement learning (CIRL) problems, in which an AI system learns a human partner's goals while cooperating with them.[22] |
| 2018 | August 26 | Workshop | CHAI students attend the AI Alignment Workshop organized by MIRI. The event focuses on core challenges in AI safety, such as building systems aligned with human values, and brings the students together with researchers from various backgrounds to discuss open problems and possible solutions in AI alignment.[23] |
| 2018 | September 4 | Conference | CHAI researcher Jaime Fisac presents his work on AI safety and control under uncertainty at three conferences. His research aims to ensure that AI systems act predictably and in line with human expectations in dynamic, diverse environments.[24] |
| 2018 | October 31 | Recognition | Rosie Campbell, Assistant Director at CHAI, is named one of the Top Women in AI Ethics on Social Media by Mia Dand, in recognition of her leadership in AI ethics and her contributions to discussions of the ethical challenges posed by advancing AI technologies.[25] |
| 2018 | December | Conference | CHAI researchers participate in the NeurIPS 2018 conference, presenting their work and taking part in discussions on AI research, safety, and policy.[26] |
| 2018 | December | Podcast | CHAI researcher Rohin Shah appears on the Future of Life Institute's AI Alignment Podcast. In the episode, Shah discusses inverse reinforcement learning and how AI systems can learn and adapt to human preferences.[27] |
| 2018 | December | Media Mention | Stuart Russell and Rosie Campbell of CHAI are quoted in the Vox article "The Case for Taking AI Seriously as a Threat to Humanity." They warn that AI systems may be developed without adequate safety measures or ethical safeguards, which could lead to unintended and harmful consequences, and they advocate aligning AI with human values and prioritizing AI safety.[28] |
| 2019 | January 8 | Talks | Rosie Campbell gives talks at AI Meetups in San Francisco and the East Bay on neural networks and CHAI's approach to AI safety, including the complexities of AI alignment.[29] |
| 2019 | January 15 | Award | Stuart Russell receives the AAAI Feigenbaum Prize for his work on probabilistic knowledge representation, which has been applied to global challenges such as seismic monitoring for nuclear test detection.[30] |
| 2019 | January 17 | Conference | At AAAI 2019, CHAI faculty present multiple papers on topics ranging from deception strategies in security games to multi-agent reinforcement learning. Their work also addresses the social implications of AI and ethical considerations in AI deployment.[31] |
| 2019 | January 20 | Publication | Former CHAI intern Alex Turner wins the AI Alignment Prize for his work on penalizing impact via attainable utility preservation, an approach that penalizes an AI agent for changing its ability to achieve a range of auxiliary goals, as a way to limit unintended side effects.[32] |
| 2019 | January 29 | Conference | At the ACM FAT* Conference, Smitha Milli and Anca Dragan present two papers on the ethical implications of AI systems, particularly the need for transparency in AI decision-making to ensure fairness and accountability.[33] |
| 2019 | June 15 | Conference | CHAI researchers Rohin Shah, Pieter Abbeel, and Anca Dragan present research at ICML 2019 on human-AI coordination and on accounting for human biases when inferring rewards.[34] |
| 2019 | July 5 | Publication | CHAI releases an open-source imitation learning library, developed by Steven Wang, Adam Gleave, and Sam Toyer, that provides implementations and benchmarks of algorithms such as GAIL and AIRL.[35] |
| 2019 | July 5 | Research Summary | Rohin Shah publishes a summary of CHAI's work on human biases in reward inference, explaining how AI systems can better account for real-world human behavior when inferring what people want.[36] |
| 2019 | August 15 | Media Publication | Mark Nitzberg writes an article in WIRED calling for an "FDA for algorithms," that is, stricter regulatory oversight of AI development to ensure the safety and transparency of AI systems.[37] |
| 2019 | August 28 | Paper Submission | Thomas Krendl Gilbert submits "The Passions and the Reward Functions: Rival Views of AI Safety?" to FAT* 2020. The paper explores philosophical perspectives on AI safety and on aligning AI reward functions with human emotions.[38] |
| 2019 | September 28 | Newsletter | Rohin Shah expands the Alignment Newsletter, which becomes a key resource for the AI safety community by offering detailed summaries of new AI safety research.[39] |
| 2020 | June 1 | Workshop | Because of the COVID-19 pandemic, CHAI holds its first virtual workshop, bringing together around 150 participants from the AI safety community for talks, discussions, and collaboration focused on reducing existential risks from advanced AI.[40] |
| 2020 | September 1 | Staff | Six new PhD students join CHAI, each advised by a CHAI Principal Investigator. The incoming students (Yuxi Liu, Micah Carroll, Cassidy Laidlaw, Alex Gunning, Alyssa Dayan, and Jessy Lin) bring interests ranging from mathematics to AI-human cooperation.[41] |
| 2020 | September 10 | Publication | Two papers by CHAI PhD student Rachel Freedman are accepted at IJCAI-20 workshops. The first, "Choice Set Misspecification in Reward Inference," analyzes how a robot's reward inference can go wrong when it misspecifies the set of choices available to the human. The second, "Aligning with Heterogeneous Preferences for Kidney Exchange," proposes ways for AI systems to aggregate differing preferences in kidney exchange programs.[42] |
| 2020 | October 10 | Publication | Brian Christian publishes "The Alignment Problem: Machine Learning and Human Values," which traces key milestones and open challenges in AI safety. The book features the work of many CHAI researchers and aims to make progress in technical AI safety understandable to a general audience.[43] |
| 2020 | October 21 | Workshop | CHAI hosts a virtual event to celebrate the launch of Brian Christian's book "The Alignment Problem." The event, moderated by journalist Nora Young, includes an interview with the author and an audience Q&A on AI safety and ethics.[44] |
| 2020 | November 12 | Internship | CHAI opens applications for its 2021 research internships. Interns work on research projects with mentors and participate in seminars and workshops. The early deadline is November 23, and the final deadline is December 13.[45] |
| 2020 | December 20 | Financial | The Survival and Flourishing Fund (SFF) donates $799,000 to CHAI and $247,000 to the Berkeley Existential Risk Initiative (BERI) for the BERI-CHAI collaboration, supporting AI safety research aimed at improving humanity's long-term survival prospects.[46] |
| 2021 | January 6 | Podcast | Daniel Filan launches the AI X-risk Research Podcast (AXRP), which features interviews with researchers on AI alignment, its technical challenges, and existential risk from AI.[47] |
| 2021 | January 25 | Podcast | Michael Dennis appears on the TalkRL podcast to discuss reinforcement learning, AI safety, and the difficulty of building safe and reliable AI systems, including the complexities of reward design and behavior modeling.[50] |
| 2021 | February 5 | Publication | Thomas Krendl Gilbert releases the paper "AI Development for the Public Interest: From Abstraction Traps to Sociotechnical Risks" at IEEE ISTAS20. The paper critiques overly narrow abstractions in AI research and argues for integrating social context and ethical considerations into AI development.[48] |
| 2021 | February 9 | Debate | Stuart Russell debates Melanie Mitchell on The Munk Debates. Russell discusses AI safety concerns, the urgency of AI alignment research, and the risks of unregulated AI development, and stresses the importance of international governance of AI technologies.[49] |
| 2021 | March 18 | Conference | At AAAI 2021, CHAI faculty and affiliates present multiple papers on AI alignment, safe reinforcement learning, and AI-human interaction, including research on scalable reward modeling and on the interpretability of AI behavior.[51] |
| 2021 | March 25 | Award | Brian Christian's book "The Alignment Problem" receives the Excellence in Science Communication Award from Eric and Wendy Schmidt and the National Academies. The book explores the challenges of AI alignment and the ethical dilemmas of designing AI systems that behave as intended.[52] |
| 2021 | April 11 | Workshop | Stuart Russell and Caroline Jeanmaire organize "AI Economic Futures," a virtual workshop series held in collaboration with the World Economic Forum's Global AI Council. The series discusses AI policy recommendations and their implications for future economic prosperity.[53] |
| 2021 | June 7–8 | Workshop | CHAI hosts its fifth annual workshop, where researchers, students, and collaborators discuss progress in AI safety and alignment, including open problems in reward modeling, interpretability, and scalable alignment techniques.[54] |
| 2021 | July 9 | Competition | CHAI researchers help launch the NeurIPS MineRL BASALT Competition, which promotes imitation learning research in which AI systems learn from human demonstrations in the open-world Minecraft environment.[55] |
| 2021 | August 7 | Award | Stuart Russell is appointed an Officer of the Most Excellent Order of the British Empire (OBE) for his contributions to artificial intelligence research and AI safety, including his work on AI ethics and governance.[56] |
| 2021 | October 26 | Internship | CHAI announces its 2022 AI safety research internship program, with applications due November 13, 2021. Interns spend 3–4 months working on AI safety research projects with a mentor, either in person at UC Berkeley or remotely. The program aims to give people with a background in mathematics, computer science, or related fields experience in technical AI safety research. Selection involves a cover letter or research proposal, programming assessments, and interviews.[57] |
| 2022 | January 18 | Publication | CHAI researchers publish several papers. Tom Lenaerts and co-authors explore voluntary safety commitments in AI development, arguing that such commitments can provide an escape from over-regulation. In "Cross-Domain Imitation Learning via Optimal Transport," Arnaud Fickinger, Stuart Russell, and others show how to achieve cross-domain transfer in continuous control domains. Scott Emmons and colleagues publish research on offline reinforcement learning showing that simple design choices can improve empirical performance on RL benchmarks.[58] |
| 2022 | February 17 | Award | Stuart Russell joins the inaugural cohort of AI2050 fellows, an initiative launched by Schmidt Futures with $125 million in funding over five years to address the challenges of AI development. Russell's fellowship focuses on using probabilistic programming to improve AI interpretability, provable safety, and performance.[59] |
| 2022 | May | Internal Review | CHAI's progress report for the period from May 2022 to April 2023 details the center's growth, research output, and engagements, including 32 papers on topics such as assistance games, adversarial robustness, and the social impacts of AI. The report also covers CHAI's advice on AI regulation and policy and highlights its research on the safe development of large language models and on AI vulnerabilities.[60] |
| 2022 | October 7–9 | Workshop | CHAI holds the NSF Convergence Accelerator Workshop on Provably Safe and Beneficial AI (PSBAI) to develop a research agenda for building verifiable, well-founded AI systems that can be safely integrated into society. The workshop brings together 51 experts from fields including AI, ethics, and law.[61] |
| 2022 | November 18 | Publication | "Time-Efficient Reward Learning via Visually Assisted Cluster Ranking," by Micah Carroll, Anca Dragan, and collaborators, is accepted at the Human-in-the-loop Learning (HILL) Workshop at NeurIPS 2022. The paper makes reward learning more efficient by using data visualization to let humans label whole clusters of data points at once rather than one at a time, making better use of human feedback.[62] |
| 2022 | December 12 | Publication | CHAI researcher Justin Svegliato publishes a paper in the Artificial Intelligence Journal on competence-aware systems (CAS): systems that reason about their own competence and adjust their level of autonomy based on interactions with a human authority, so that their autonomy suits each situation.[63] |
| 2022 | December 29 | Publication | CHAI researcher Tom Lenaerts co-authors a paper in Nature titled "Fast deliberation is related to unconditional behaviour in iterated Prisoners' Dilemma experiments." The research examines how response times in strategic situations relate to cognitive effort and social value orientation.[64] |
| 2023 | March 10 | Publication | CHAI researchers contribute to discussions of AI takeover scenarios, such as ones in which AI systems maximizing objectives like productive output become outer-misaligned and pose existential risks. The work examines how AI could affect resources critical to humans and why competitive pressures make transformative AI difficult to deploy safely, emphasizing the need for robust AI alignment and regulation.[65] |
| 2023 | May | Award | Stuart Russell, professor at UC Berkeley and founder of CHAI, receives the ACM-AAAI Allen Newell Award for foundational contributions to AI. The award honors career contributions with broad impact within computer science or across multiple disciplines. Russell is recognized for work including the widely used textbook "Artificial Intelligence: A Modern Approach" and his focus on AI safety.[69] |
| 2023 | June 16–18 | Workshop | CHAI hosts its 7th annual workshop at Asilomar Conference Grounds in Pacific Grove, California. Nearly 200 attendees take part in discussions, lightning talks, and group activities on AI safety and alignment research, while informal activities such as beach walks and bonfires help build community within the field.[66] |
| 2023 | September 7 | Award | Stuart Russell is named one of TIME's 100 Most Influential People in AI. He is recognized as a leading thinker on AI safety, for his contributions to responsible AI development, and for his advocacy, including support for pausing large-scale AI experiments.[68] |
| 2023 | September 22 | Workshop | CHAI holds a virtual workshop on the Ethical Design of AIs (EDAI), a sister workshop to the Provably Safe and Beneficial AI (PSBAI) workshop. Sessions focus on ethical principles, human-centered AI design, governance, and domain-specific challenges in implementing ethical AI systems.[67] |
| 2023 | November 10 | Presentation | Jonathan Stray, CHAI Senior Scientist, gives a talk titled "Orienting AI Toward Peace" at Stanford's "Beyond Moderation: How We Can Use Technology to De-Escalate Political Conflict" conference. He proposes strategies for keeping AI from escalating political conflict, including defining which conflicts are desirable, developing conflict indicators, and incorporating this feedback into AI objectives.[70] |
| 2024 | March 5 | Publication | CHAI researchers publish "When Your AIs Deceive You: Challenges with Partial Observability of Human Evaluators in Reward Learning." The study shows that when human evaluators observe only part of what an AI system does, learning from their feedback can produce behavior that deceptively inflates the system's apparent performance or overjustifies its actions. The research aims to improve AI alignment with human interests through better feedback mechanisms.[71] |
| 2024 | March 28 | Publication | Brian Christian, a CHAI affiliate and author, shares insights from his academic work on AI that better reflects human values. His research aims to close the gap between AI systems' assumptions about human rationality and the complexity of actual human behavior.[72] |
| 2024 | April 30 | Presentation | Rachel Freedman, a CHAI PhD student, presents work on reinforcement learning from human feedback (RLHF) at Stanford. The research addresses key challenges in modeling human preferences and proposes approaches such as active teacher selection (ATS) to help AI systems learn from diverse human feedback.[73] |
| 2024 | June 13–16 | Workshop | CHAI holds its 8th annual workshop at Asilomar Conference Grounds, with over 200 attendees and more than 60 speakers. Topics include the societal impacts of AI, adversarial robustness, and AI alignment.[74] |
| 2024 | July 23 | Publication | CHAI researchers, including Stuart Russell and Anca Dragan, publish a paper on AI alignment with changing and influenceable reward functions. The study introduces Dynamic Reward Markov Decision Processes (DR-MDPs), which model how human preferences change over time, and addresses the risk of AI systems influencing those preferences.[75] |
| 2024 | August 7 | Publication | Rachel Freedman, Wes Holliday, and co-authors publish a paper at the International Conference on Machine Learning (ICML) arguing that social choice theory should guide AI alignment when dealing with diverse human feedback. The paper examines how approaches such as reinforcement learning from human feedback and constitutional AI aggregate human preferences.[76] |

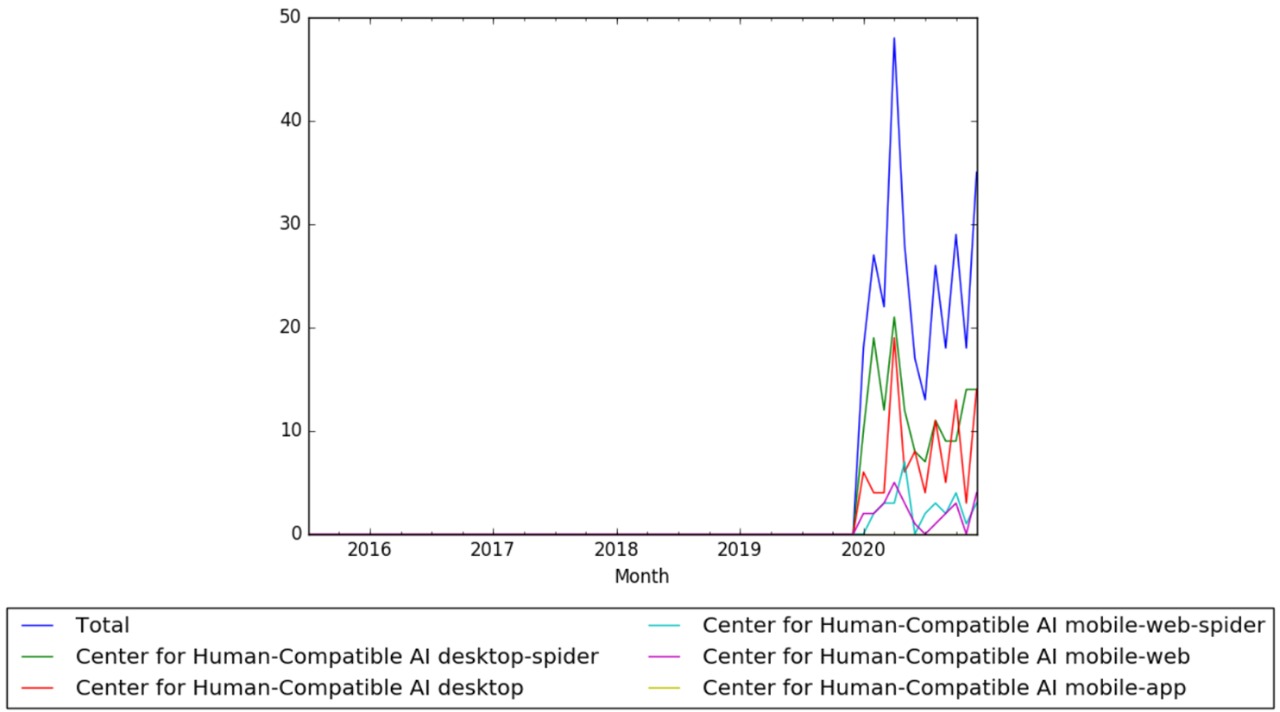

Visual data

Wikipedia Views

Meta information on the timeline

How the timeline was built

The initial version of the timeline was written by Issa Rice.

Funding information for this timeline is available.

What the timeline is still missing

Timeline update strategy

See also

- Timeline of Machine Intelligence Research Institute

- Timeline of Future of Humanity Institute

- Timeline of OpenAI

- Timeline of Berkeley Existential Risk Initiative

External links

References

- ↑ "UC Berkeley launches Center for Human-Compatible Artificial Intelligence". Berkeley News. August 29, 2016. Retrieved July 26, 2017.

- ↑ "Open Philanthropy Project donations made (filtered to cause areas matching AI risk)". Retrieved July 27, 2017.

- ↑ "[1611.08219] The Off-Switch Game". Retrieved February 9, 2018.

- ↑ "2018 AI Safety Literature Review and Charity Comparison - Effective Altruism Forum". Retrieved February 9, 2018.

- ↑ "Center for Human-Compatible AI". Retrieved February 9, 2018.

- ↑ "Center for Human-Compatible AI". Archived from the original on February 9, 2018. Retrieved February 9, 2018.

- ↑ "[1705.09990] Should Robots be Obedient?". Retrieved February 9, 2018.

- ↑ "Rosie Campbell - BBC R&D". Archived from the original on May 11, 2018. Retrieved May 11, 2018.

Rosie left in October 2017 to take on the role of Assistant Director of the Center for Human-compatible AI at UC Berkeley, a research group which aims to ensure that artificially intelligent systems are provably beneficial to humans.

- ↑ "Information Acquisition Under Resource Limitations in a Noisy Environment". February 1, 2018. Retrieved September 30, 2024.

- ↑ "Anca Dragan on AI Value Alignment". February 7, 2018. Retrieved September 30, 2024.

- ↑ "Expressing Robot Incapability". ACM/IEEE International Conference. February 26, 2018. Retrieved September 30, 2024.

- ↑ "Learning from Physical Human Corrections, One Feature at a Time". March 8, 2018. Retrieved September 30, 2024.

- ↑ Bensinger, Rob (March 25, 2018). "March 2018 Newsletter - Machine Intelligence Research Institute". Machine Intelligence Research Institute. Retrieved May 10, 2018.

Andrew Critch, previously on leave from MIRI to help launch the Center for Human-Compatible AI and the Berkeley Existential Risk Initiative, has accepted a position as CHAI’s first research scientist. Critch will continue to work with and advise the MIRI team from his new academic home at UC Berkeley. Our congratulations to Critch!

- ↑ "Center for Human-Compatible AI". Archived from the original on April 4, 2018. Retrieved May 10, 2018.

- ↑ "Center for Human-Compatible AI". Archived from the original on April 12, 2018. Retrieved May 10, 2018.

- ↑ Shah, Rohin (April 9, 2018). "Announcing the Alignment Newsletter". LessWrong. Retrieved May 10, 2018.

- ↑ "Alignment Newsletter". Rohin Shah. Archived from the original on May 10, 2018. Retrieved May 10, 2018.

- ↑ "Center for Human-Compatible AI Workshop 2018". Archived from the original on February 9, 2018. Retrieved February 9, 2018.

- ↑ "A Broader View on Bias in Automated Decision-Making". ICML 2018. July 2, 2018. Retrieved September 30, 2024.

- ↑ "Exploring Hierarchy-Aware Inverse Reinforcement Learning". Goal Specifications Workshop. July 13, 2018. Retrieved September 30, 2024.

- ↑ "Active Inverse Reward Design". Goal Specifications Workshop. July 14, 2018. Retrieved September 30, 2024.

- ↑ "An Efficient, Generalized Bellman Update For Cooperative Inverse Reinforcement Learning". ICML 2018. July 16, 2018. Retrieved September 30, 2024.

- ↑ "MIRI AI Alignment Workshop". Machine Intelligence Research Institute. August 26, 2018. Retrieved September 30, 2024.

- ↑ "Jaime Fisac AI Safety Research". CHAI. September 4, 2018. Retrieved September 30, 2024.

- ↑ "Top Women in AI Ethics". Lighthouse3. October 31, 2018. Retrieved September 30, 2024.

- ↑ "NeurIPS 2018 Conference". NeurIPS. December 2018. Retrieved September 30, 2024.

- ↑ "AI Alignment Podcast: Rohin Shah". Future of Life Institute. December 2018. Retrieved September 30, 2024.

- ↑ "The Case for Taking AI Seriously as a Threat to Humanity". Vox. December 2018. Retrieved September 30, 2024.

- ↑ "Rosie Campbell Speaks About AI Safety and Neural Networks at San Francisco and East Bay AI Meetups". CHAI. January 8, 2019. Retrieved October 7, 2024.

- ↑ "Stuart Russell Receives AAAI Feigenbaum Prize". CHAI. January 15, 2019. Retrieved October 7, 2024.

- ↑ "CHAI Papers at the AAAI 2019 Conference". CHAI. January 17, 2019. Retrieved October 7, 2024.

- ↑ "Former CHAI Intern Wins AI Alignment Prize". CHAI. January 20, 2019. Retrieved October 7, 2024.

- ↑ "CHAI Papers at FAT* 2019". CHAI. January 29, 2019. Retrieved October 7, 2024.

- ↑ "CHAI Presentations at ICML". CHAI. June 15, 2019. Retrieved October 7, 2024.

- ↑ "CHAI Releases Imitation Learning Library". CHAI. July 5, 2019. Retrieved October 7, 2024.

- ↑ "Rohin Shah Summarizes CHAI's Research on Learning Human Biases". CHAI. July 5, 2019. Retrieved October 7, 2024.

- ↑ "Mark Nitzberg Writes in WIRED on the Need for an FDA for Algorithms". WIRED. August 15, 2019. Retrieved October 7, 2024.

- ↑ "Thomas Krendl Gilbert Submits Paper on Philosophical AI Safety". CHAI. August 28, 2019. Retrieved October 7, 2024.

- ↑ "Rohin Shah Expands the AI Alignment Newsletter". CHAI. September 28, 2019. Retrieved October 7, 2024.

- ↑ "CHAI Holds Its First Virtual Workshop". CHAI. June 1, 2020. Retrieved October 7, 2024.

- ↑ "Six New PhD Students Join CHAI". CHAI. September 1, 2020. Retrieved October 7, 2024.

- ↑ "IJCAI-20 Accepts Two Papers by CHAI PhD Student Rachel Freedman". CHAI. September 10, 2020. Retrieved October 7, 2024.

- ↑ "Brian Christian Publishes The Alignment Problem". CHAI. October 10, 2020. Retrieved October 7, 2024.

- ↑ "Watch the Book Launch of The Alignment Problem in Conversation with Brian Christian and Nora Young". CHAI. November 5, 2020. Retrieved October 7, 2024.

- ↑ "CHAI Internship Application Is Now Open". CHAI. November 12, 2020. Retrieved October 7, 2024.

- ↑ "CHAI and BERI Receive Donations". CHAI. December 20, 2020. Retrieved October 7, 2024.

- ↑ "Daniel Filan Launches AI X-risk Research Podcast". Center for Human-Compatible AI. January 6, 2021. Retrieved October 7, 2024.

- ↑ "Tom Gilbert Published in IEEE ISTAS20". Center for Human-Compatible AI. February 5, 2021. Retrieved October 7, 2024.

- ↑ "Stuart Russell on The Munk Debates". Center for Human-Compatible AI. February 9, 2021. Retrieved October 7, 2024.

- ↑ "Michael Dennis". TalkRL Podcast. January 25, 2021. Retrieved October 7, 2024.

- ↑ "CHAI Faculty and Affiliates Publish at AAAI 2021". Center for Human-Compatible AI. March 18, 2021. Retrieved October 7, 2024.

- ↑ "Brian Christian's "The Alignment Problem" wins the Excellence in Science Communication Award". Center for Human-Compatible AI. November 1, 2022. Retrieved October 7, 2024.

- ↑ "Professor Stuart Russell and Caroline Jeanmaire Organize Virtual Workshop Titled "AI Economic Futures"". Center for Human-Compatible AI. April 19, 2020. Retrieved October 7, 2024.

- ↑ "Fifth Annual CHAI Workshop". Center for Human-Compatible AI. June 16, 2021. Retrieved October 7, 2024.

- ↑ "NeurIPS MineRL BASALT Competition Launches". Center for Human-Compatible AI. July 9, 2021. Retrieved October 7, 2024.

- ↑ "Birthday Honours 2021: Overseas and International List". GOV.UK. June 11, 2021. Retrieved October 7, 2024.

- ↑ "CHAI Internship Applications are Open". LessWrong. October 26, 2021. Retrieved October 7, 2024.

- ↑ "New Papers Published". Center for Human-Compatible AI. January 18, 2022. Retrieved October 7, 2024.

- ↑ "Schmidt Futures Launches AI2050 to Protect Our Human Future in the Age of Artificial Intelligence". Center for Human-Compatible AI. February 17, 2022. Retrieved October 7, 2024.

- ↑ "Progress Report". Center for Human-Compatible AI. May 31, 2023. Retrieved October 7, 2024.

- ↑ "Progress Report". Center for Human-Compatible AI. May 31, 2023. Retrieved October 7, 2024.

- ↑ "Time-Efficient Reward Learning via Visually Assisted Cluster Ranking". Center for Human-Compatible AI. November 18, 2022. Retrieved October 7, 2024.

- ↑ "Competence-Aware Systems". Center for Human-Compatible AI. December 12, 2022. Retrieved October 7, 2024.

- ↑ "Fast Deliberation is Related to Unconditional Behaviour in Iterated Prisoners' Dilemma Experiments". Center for Human-Compatible AI. December 29, 2022. Retrieved October 7, 2024.

- ↑ "Distinguishing AI Takeover Scenarios". AI Alignment Forum. March 10, 2023. Retrieved October 7, 2024.

- ↑ "Seventh Annual CHAI Workshop". Center for Human-Compatible AI. June 20, 2023. Retrieved October 7, 2024.

- ↑ "Progress Report". CHAI. 2023. Retrieved October 7, 2024.

- ↑ "100 Most Influential People in AI". Center for Human-Compatible AI. September 18, 2023. Retrieved October 7, 2024.

- ↑ "Stuart Russell receives ACM's AAAI Allen Newell Award". Berkeley Engineering. May 4, 2023. Retrieved October 7, 2024.

- ↑ "Orienting AI Toward Peace". Center for Human-Compatible AI. November 21, 2023. Retrieved October 7, 2024.

- ↑ "When Your AIs Deceive You: Challenges with Partial Observability of Human Evaluators in Reward Learning". CHAI. March 5, 2024. Retrieved October 7, 2024.

- ↑ "Embracing AI That Reflects Human Values: Insights from Brian Christian's Journey". CHAI. March 28, 2024. Retrieved October 7, 2024.

- ↑ "Reinforcement Learning with Human Feedback and Active Teacher Selection". CHAI. April 30, 2024. Retrieved October 7, 2024.

- ↑ "8th Annual CHAI Workshop". CHAI. June 18, 2024. Retrieved October 7, 2024.

- ↑ "AI Alignment with Changing and Influenceable Reward Functions". CHAI. July 23, 2024. Retrieved October 7, 2024.

- ↑ "Social Choice Should Guide AI Alignment in Dealing with Diverse Human Feedback". CHAI. August 7, 2024. Retrieved October 7, 2024.