Difference between revisions of "Timeline of Machine Intelligence Research Institute"

| Line 454: | Line 454: | ||

|- | |- | ||

| 2021 || {{dts|November 29}} || || MIRI announces on the Alignment Forum that it is looking for assistance with getting datasets for its Visible Thoughts Project. The hypothesis is described as follows: "Language models can be made more understandable (and perhaps also more capable, though this is not the goal) by training them to produce visible thoughts."<ref>{{cite web|url = https://www.alignmentforum.org/posts/zRn6cLtxyNodudzhw/visible-thoughts-project-and-bounty-announcement|title = Visible Thoughts Project and Bounty Announcement|last = Soares|first = Nate|date = November 29, 2021|accessdate = December 2, 2021}}</ref> | | 2021 || {{dts|November 29}} || || MIRI announces on the Alignment Forum that it is looking for assistance with getting datasets for its Visible Thoughts Project. The hypothesis is described as follows: "Language models can be made more understandable (and perhaps also more capable, though this is not the goal) by training them to produce visible thoughts."<ref>{{cite web|url = https://www.alignmentforum.org/posts/zRn6cLtxyNodudzhw/visible-thoughts-project-and-bounty-announcement|title = Visible Thoughts Project and Bounty Announcement|last = Soares|first = Nate|date = November 29, 2021|accessdate = December 2, 2021}}</ref> | ||

| + | |- | ||

| + | | 2021 || {{dts|December}} || Financial || MIRI offered $200,000 to build an AI-dungeon-style writing dataset annotated with thoughts, and an additional $1,000,000 for scaling it 10x. | ||

| + | |- | ||

| + | | 2022 || {{dts|July}} || || MIRI released three major posts: "AGI Ruin: A List of Lethalities," "A central AI alignment problem," and "Six Dimensions of Operational Adequacy in AGI Projects." | ||

| + | |- | ||

| + | | 2022 || {{dts|July}} || Strategy || MIRI temporarily paused its newsletter and public communications to focus on refining internal strategies. | ||

| + | |- | ||

| + | | 2023 || {{dts|July}} || Advocacy || Eliezer Yudkowsky advocated for an indefinite worldwide moratorium on advanced AI training. | ||

| + | |- | ||

| + | | 2023 || {{dts|April}} || Leadership || Leadership change: Malo Bourgon became CEO, Nate Soares shifted to President, Alex Vermeer stepped up as COO, and Eliezer Yudkowsky became the Chair of the Board.<ref>{{cite web|url = https://intelligence.org/2023/10/10/announcing-miris-new-ceo-and-leadership-team/|title = Announcing MIRI's New CEO and Leadership Team|date = October 10, 2023|accessdate = September 2, 2024|publisher = Machine Intelligence Research Institute}}</ref> | ||

| + | |- | ||

| + | | 2024 || {{dts|April}} || Strategy || MIRI released its 2024 Mission and Strategy update, announcing a major focus shift towards broad communication and policy change. <ref>{{cite web|url = https://intelligence.org/2023/10/10/announcing-miris-new-ceo-and-leadership-team/|title = Announcing MIRI's New CEO and Leadership Team|date = October 10, 2023|accessdate = September 2, 2024|publisher = Machine Intelligence Research Institute}}</ref> | ||

| + | |- | ||

| + | | 2024 || {{dts|April}} || || MIRI launched a new research team focused on technical AI governance.<ref>{{cite web|url = https://intelligence.org/2023/10/10/announcing-miris-new-ceo-and-leadership-team/|title = Announcing MIRI's New CEO and Leadership Team|date = October 10, 2023|accessdate = September 2, 2024|publisher = Machine Intelligence Research Institute}}</ref> | ||

| + | |- | ||

| + | | 2024 || {{dts|May 14}} || Communication || MIRI significantly expanded its communications team, emphasizing public outreach and policy influence. | ||

| + | |- | ||

| + | | 2024 || {{dts|May 29}} || Project || MIRI shut down the Visible Thoughts Project. <ref>{{cite web|url = https://intelligence.org/2024/05/14/may-2024-newsletter/|title = May 2024 Newsletter|date = May 14, 2024|accessdate = September 2, 2024|publisher = Machine Intelligence Research Institute}}</ref> | ||

| + | |- | ||

| + | | 2024 || {{dts|June}} || Communication || MIRI Communications Manager Gretta Duleba emphasized the need to shut down frontier AI development and install an "off-switch." | ||

| + | |- | ||

| + | | 2024 || {{dts|June}} || Research || The Agent Foundations team, including Scott Garrabrant, parted ways with MIRI to continue as independent researchers. | ||

|} | |} | ||

Revision as of 15:57, 1 September 2024

This is a timeline of the Machine Intelligence Research Institute (MIRI), a nonprofit organization that does work related to AI safety.

Sample questions

This is an experimental section that provides some sample questions for readers, similar to reading questions that might come with a book. Some readers of this timeline might come to the page aimlessly and might not have a good idea of what they want to get out of the page. Having some "interesting" questions can help in reading the page with more purpose and in getting a sense of why the timeline is an important tool to have.

The following are some interesting questions that can be answered by reading this timeline:

- Which Singularity Summits did MIRI host, and when did they happen? (Sort by the "Event type" column and look at the rows labeled "Conference".)

- What was MIRI up to for the first ten years of its existence (before Luke Muehlhauser joined, before Holden Karnofsky wrote his critique of the organization)? (Scan the years 2000–2009.)

- How has MIRI's explicit mission changed over the years? (Sort by the "Event type" column and look at the rows labeled "Mission".)

The following are some interesting questions that are difficult or impossible to answer just by reading the current version of this timeline, but might be possible to answer using a future version of this timeline:

- When did some big donations to MIRI take place (for instance, the one by Peter Thiel)?

- Has MIRI "done more things" between 2010–2013 or between 2014–2017? (More information)

Big picture

| Time period | Development summary | More details |

|---|---|---|

| 1998–2002 | Various publications related to creating a superhuman AI | Eliezer Yudkowsky writes various documents about designing a superhuman AI during this period, including "Coding a Transhuman AI", "The Plan to Singularity", and "Creating Friendly AI". The Flare Programming Language project launches to aid the creation of a superhuman AI. |

| 2004–2009 | Tyler Emerson's tenure as executive director | Under Emerson's leadership, MIRI starts the Singularity Summit, moves to the San Francisco Bay Area, and lands Peter Thiel as a donor and enthusiastic endorser. |

| 2006–2009 | Modern rationalist community forms | Overcoming Bias is created, LessWrong is created, Eliezer Yudkowsky writes the Sequences, and so on. |

| 2006–2012 | The Singularity Summits take place annually | After the summit in 2012, the organization renames itself from "Singularity Institute for Artificial Intelligence" to the current "Machine Intelligence Research Institute" and sells the Singularity Summit to Singularity University. |

| 2009–2012 | Michael Vassar's tenure as president | |

| 2011–2015 | Luke Muehlhauser's tenure as executive director | Muehlhauser would be credited with significantly turning MIRI around, improving professionalism and reputation with donors. The name change, shift in focus to research, and improvement of relations with the nascent effective altruism community and the AI research community occur under his watch.[1][2][3] |

| 2013–present | Change of focus | MIRI changes focus to put less effort into public outreach and shift its research to Friendly AI math research. |

| 2015–present | Nate Soares's tenure as executive director | Soares continues to move MIRI forward in the direction that it shifted to under Muehlhauser, with a focus on AI safety research, and increased coordination with the AI safety and AI risk communities. |

Full timeline

| Year | Month and date | Event type | Details |

|---|---|---|---|

| 1979 | September 11 | | Eliezer Yudkowsky is born.[4] |

| 1996 | November 18 | | Eliezer Yudkowsky writes the first version of "Staring into the Singularity".[5] |

| 1998 | | Publication | The initial version of "Coding a Transhuman AI" (CaTAI) is published.[6] |

| 1999 | March 11 | | The Singularitarian mailing list is launched. The mailing list page notes that although hosted on MIRI's website, the mailing list "should be considered as being controlled by the individual Eliezer Yudkowsky".[7] |

| 1999 | September 17 | | The Singularitarian mailing list is first informed (by Yudkowsky?) of "The Plan to Singularity" (called "Creating the Singularity" at the time).[8] |

| 2000–2003 | | | Eliezer Yudkowsky's "coming of age" (including his "naturalistic awakening", in which he realizes that a superintelligence would not necessarily follow human morality) takes place during this period.[9][10][11] |

| 2000 | January 1 | Publication | "The Plan to Singularity" version 1.0 is written and published by Eliezer Yudkowsky, and posted to the Singularitarian, Extropians, and transhuman mailing lists.[8] |

| 2000 | January 1 | Publication | "The Singularitarian Principles" version 1.0 by Eliezer Yudkowsky is published.[12] |

| 2000 | February 6 | | The first email is sent on SL4 ("Shock Level Four"), a mailing list about transhumanism, superintelligent AI, existential risks, and so on.[13][14] |

| 2000 | May 18 | Publication | "Coding a Transhuman AI" (CaTAI) version 2.0a is "rushed out in time for the Foresight Gathering".[15] |

| 2000 | July 27 | Mission | Machine Intelligence Research Institute is founded as the Singularity Institute for Artificial Intelligence by Brian Atkins, Sabine Atkins (then Sabine Stoeckel) and Eliezer Yudkowsky. The organization's mission ("organization's primary exempt purpose" on Form 990) at the time is "Create a Friendly, self-improving Artificial Intelligence"; this mission would be in use during 2000–2006 and would change in 2007.[16]:3[17] |

| 2000 | September 1 | Publication | Large parts of "The Plan to Singularity" are marked obsolete "due to formation of Singularity Institute, and due to fundamental shifts in AI strategy caused by publication of CaTAI [Coding a Transhuman AI] 2".[8] |

| 2000 | September 7 | Publication | "Coding a Transhuman AI" (CaTAI) version 2.2.0 is published.[15] |

| 2000 | September 14 | | The first Wayback Machine snapshot of MIRI's website is from this day, using the singinst.org domain name.[18] |

| 2001 | April 8 | | MIRI begins accepting donations after receiving tax-exempt status.[19] |

| 2001 | April 18 | Publication | Version 0.9 of "Creating Friendly AI" is released.[20] |

| 2001 | June 14 | Publication | The "SIAI Guidelines on Friendly AI" are published.[21] |

| 2001 | June 15 | Publication | Version 1.0 of "Creating Friendly AI" is published.[22][20] |

| 2001 | July 23 | Project | MIRI announces that it has formally launched the development of the Flare programming language under Dmitriy Myshkin.[23] |

| 2001 | December 21 | Domain | MIRI obtains the flare.org domain name for its Flare language project.[23] |

| 2002 | March 8 | AI box | The first AI box experiment by Eliezer Yudkowsky, against Nathan Russell as gatekeeper, takes place. The AI is released.[24] |

| 2002 | April 7 | Publication | A draft of "Levels of Organization in General Intelligence" is announced on SL4.[25][26] |

| 2002 | July 4–5 | AI box | The second AI box experiment by Eliezer Yudkowsky, against David McFadzean as gatekeeper, takes place. The AI is released.[27] |

| 2002 | September 6 | Staff | Christian Rovner is appointed as MIRI's volunteer coordinator.[23] |

| 2002 | October 1 | | MIRI "releases a major new site upgrade" with various new pages.[23] |

| 2002 | October 7 | Project | MIRI announces the creation of its volunteers mailing list.[23] |

| 2003 | | Project | The Flare programming language project is officially canceled.[28] |

| 2003 | | Publication | Eliezer Yudkowsky's "An Intuitive Explanation of Bayesian Reasoning" is published.[29] |

| 2003 | April 30 | | Eliezer Yudkowsky posts an update about MIRI to the SL4 mailing list. The update discusses the need for an executive director and "extremely bright programmers", and discusses Yudkowsky's hopes to write "a book on the underlying theory and specific human practice of rationality" (which presumably became the Sequences) in order to attract the attention of these programmers.[30] |

| 2004 | March 4–11 | Staff | MIRI announces Tyler Emerson as executive director.[31][32] |

| 2004 | April 7 | Staff | Michael Anissimov is announced as MIRI's advocacy director.[33] |

| 2004 | April 14 | Outside review | The first version of the Wikipedia page for MIRI is created.[34] |

| 2004 | May | Publication | Eliezer Yudkowsky's paper "Coherent Extrapolated Volition" is published around this time.[35] It is originally called "Collective Volition", and is announced on the MIRI website on August 16.[36][31] |

| 2004 | August 5–8 | Conference | TransVision 2004 takes place. TransVision is the World Transhumanist Association's annual event. MIRI is a sponsor for the event.[31] |

| 2005 | January 4 | Publication | "A Technical Explanation of Technical Explanation" is published.[37] It is announced on the MIRI news page on this day.[31] |

| 2005 | | Conference | MIRI does "AI and existential risk presentations at Stanford, Immortality Institute's Life Extension Conference, and the Terasem Foundation".[38] |

| 2005 | | Publication | Eliezer Yudkowsky writes chapters for Global Catastrophic Risks, edited by Nick Bostrom and Milan M. Ćirković.[38] The book would be published in 2008. |

| 2005 | February 2 | | MIRI relocates from the Atlanta metropolitan area of Georgia to the Bay Area of California.[31] |

| 2005 | July 22–24 | Conference | TransVision 2005 takes place in Caracas, Venezuela. MIRI is a sponsor for the event.[31] |

| 2005 | August 21 | AI box | The third AI box experiment by Eliezer Yudkowsky, against Carl Shulman as gatekeeper, takes place. The AI is released.[39] |

| 2005–2006 | December 20, 2005 – February 19, 2006 | Financial | The 2006 $100,000 Singularity Challenge, a fundraiser in which Peter Thiel matches donations up to $100,000, takes place. The fundraiser successfully matches the $100,000 amount.[31][40] This would mark the beginning of Peter Thiel (and later, the Thiel Foundation) playing an important role in funding MIRI, which it would continue to do till 2015.[41] |

| 2006 | | Publication | "Twelve Virtues of Rationality" is published.[42] |

| 2006 | February 13 | | Peter Thiel joins MIRI's Board of Advisors.[31] |

| 2006 | May 13 | Conference | The first Singularity Summit takes place at Stanford University.[43][44][45] |

| 2006 | November | | Robin Hanson starts Overcoming Bias.[46] |

| 2007 | | Mission | MIRI's organization mission ("Organization's Primary Exempt Purpose" on Form 990) changes to: "To develop safe, stable and self-modifying Artificial General Intelligence. And to support novel research and to foster the creation of a research community focused on Artificial General Intelligence and Safe and Friendly Artificial Intelligence."[47] This mission would be used in 2008 and 2009 as well. |

| 2007 | | Project | MIRI's outreach blog is started.[38] |

| 2007 | | Project | MIRI's Interview Series is started.[38] |

| 2007 | | Staff | Ben Goertzel becomes director of research at MIRI.[48] He would go on to lead work on OpenCog, which would officially start in 2008. |

| 2007 | May 16 | Project | MIRI's introductory video is published on YouTube.[49][38] |

| 2007 | July 10 | Publication | The oldest (surviving) post on the MIRI blog is from this day. The post is "The Power of Intelligence" by Eliezer Yudkowsky.[50] |

| 2007 | September 8–9 | Conference | The Singularity Summit 2007 takes place in the San Francisco Bay Area.[43][51][52] |

| 2008 | | Publication | "The Simple Truth" is published.[53] |

| 2008 | | Project | MIRI expands its Interview Series.[38] |

| 2008 | | Project | MIRI begins its summer intern program.[38] |

| 2008 | | Project | OpenCog is founded "via a grant from the [MIRI], and the donation from Novamente LLC of a large body of software code and software designs developed during the period 2001–2007".[54] Ben Goertzel directs MIRI work on OpenCog; his work at MIRI is limited to work on OpenCog-related projects. Through funding from the Google Summer of Code, 11 interns get to work on the project in the summer of 2008.[48] After the departure of Tyler Emerson and MIRI's deemphasis of OpenCog, Goertzel would step down as research director in 2010.[55] See also OpenCog § Relation to Singularity Institute. |

| 2008 | October 25 | Conference | The Singularity Summit 2008 takes place in San Jose.[56][57] |

| 2008 | November–December | Outside review | The AI-Foom debate between Robin Hanson and Eliezer Yudkowsky takes place. The blog posts from the debate would later be turned into an ebook by MIRI.[58][59] |

| 2009 | | Project | MIRI establishes the Visiting Fellows Program.[38] |

| 2009 (early) | | Staff | Executive director Tyler Emerson departs MIRI.[60] |

| 2009 (early) | | Staff | Michael Anissimov is hired as a media director.[60] (Since he was advocacy director as far back as 2004, it's not clear if he left the organization and came back, or if he just changed positions.) |

| 2009 | February | Project | Eliezer Yudkowsky starts LessWrong using as seed material his posts on Overcoming Bias.[61] On the 2009 accomplishments page, MIRI describes LessWrong as being "important to the Singularity Institute's work towards a beneficial Singularity in providing an introduction to issues of cognitive biases and rationality relevant for careful thinking about optimal philanthropy and many of the problems that must be solved in advance of the creation of provably human-friendly powerful artificial intelligence". And: "Besides providing a home for an intellectual community dialoguing on rationality and decision theory, Less Wrong is also a key venue for SIAI recruitment. Many of the participants in SIAI's Visiting Fellows Program first discovered the organization through Less Wrong."[60] |

| 2009 | February 16 | Staff | Michael Vassar announces himself as president of MIRI.[62] |

| 2009 | April | Publication | Eliezer Yudkowsky completes the Sequences.[60] |

| 2009 | August 13 | Social media | The Singularity Institute Twitter account, singinst, is created.[63] |

| 2009 | September | Staff | Amy Willey Labenz begins an internship at MIRI. During the internship in November, she would uncover the embezzlement.[64] |

| 2009 | October | Project | A website maintained by MIRI, The Uncertain Future, first appears around this time.[65][66] The goal of the website is to "allow those interested in future technology to form their own rigorous, mathematically consistent model of how the development of advanced technologies will affect the evolution of civilization over the next hundred years".[67] Work on the project started in 2008.[68] |

| 2009 | October 3–4 | Conference | The Singularity Summit 2009 takes place in New York.[69][70] |

| 2009 | November | Financial | Embezzlement: "Misappropriation of assets, by a contractor, was discovered in November 2009."[71] |

| 2009 | December | Staff | Amy Willey Labenz, previously an intern, joins MIRI as Chief Compliance Officer, partly due to her uncovering of the embezzlement in November.[60][64] |

| 2009 | December 11 | Influence | The third edition of Artificial Intelligence: A Modern Approach by Stuart J. Russell and Peter Norvig is published. In this edition, for the first time, Friendly AI is mentioned and Eliezer Yudkowsky is cited. |

| 2009 | December 12 | Project | The Uncertain Future reaches beta and is announced on the MIRI blog.[72] |

| 2009 | | Financial | MIRI reports $118,803.00 in theft during this year.[38][73][74][75] The theft was by two former employees.[76] |

| 2010 | | Mission | The organization mission changes to: "To develop the theory and particulars of safe self-improving Artificial Intelligence; to support novel research and foster the creation of a research community focused on safe Artificial General Intelligence; and to otherwise improve the probability of humanity surviving future technological advances."[77] This mission would be used in 2011 and 2012 as well. |

| 2010 | February 28 | Publication | The first chapter of Eliezer Yudkowsky's fan fiction Harry Potter and the Methods of Rationality is published. The book would be published as a serial concluding on March 14, 2015.[78][79] The fan fiction would become the initial contact with MIRI of several larger donors to MIRI.[80] |

| 2010 | April | Staff | Amy Willey Labenz is promoted to Chief Operating Officer; she was previously the Chief Compliance Officer. From 2010 to 2012 she would also serve as the Executive Producer of the Singularity Summits.[64] |

| 2010 | June 17 | Popular culture | Zendegi, a science fiction book by Greg Egan, is published. The book includes a character called Nate Caplan (partly inspired by Eliezer Yudkowsky and Robin Hanson), a website called Overpowering Falsehood dot com (partly inspired by Overcoming Bias and LessWrong), and a Benign Superintelligence Bootstrap Project, inspired by the Singularity Institute's friendly AI project.[81][82][83] |

| 2010 | August 14–15 | Conference | The Singularity Summit 2010 takes place in San Francisco.[84] |

| 2010 | December 21 | Social media | The first post on the MIRI Facebook page is from this day.[85][86] |

| 2010–2011 | December 21, 2010 – January 20, 2011 | Financial | The Tallinn–Evans $125,000 Singularity Challenge takes place. The Challenge is a fundraiser in which Edwin Evans and Jaan Tallinn match each dollar donated to MIRI up to $125,000.[87][88] |

| 2011 | February 4 | Project | The Uncertain Future is open-sourced.[68] |

| 2011 | February | Outside review | Holden Karnofsky of GiveWell has a conversation with MIRI staff. The conversation reveals the existence of a "Persistent Problems Group" at MIRI, which will supposedly "assemble a blue-ribbon panel of recognizable experts to make sense of the academic literature on very applicable, popular, but poorly understood topics such as diet/nutrition".[89] On April 30, Karnofsky would post the conversation to the GiveWell mailing list.[90] |

| 2011 | April | Staff | Luke Muehlhauser begins as an intern at MIRI.[91] |

| 2011 | May 10 – June 24 | Outside review | Holden Karnofsky of GiveWell and Jaan Tallinn (with Dario Amodei being present in the initial phone conversation) correspond regarding MIRI's work. The correspondence is posted to the GiveWell mailing list on July 18.[92] |

| 2011 | June 24 | Domain | A Wayback Machine snapshot on this day shows that singularity.org has turned into a GoDaddy.com placeholder.[93] Before this, the domain hosted a blog, most likely unrelated to MIRI.[94] |

| 2011 | July 18 – October 20 | Domain | At least during this period, the singularity.org domain name redirects to singinst.org/singularityfaq.[94] |

| 2011 | September 6 | Domain | The first Wayback Machine capture of singularityvolunteers.org is from this day.[95] For a time the site is used to coordinate volunteer efforts. |

| 2011 | October 15–16 | Conference | The Singularity Summit 2011 takes place in New York.[96] |

| 2011 | October 17 | Social media | The Singularity Summit YouTube account, SingularitySummits, is created.[97] |

| 2011 | November | Staff | Luke Muehlhauser is appointed executive director of MIRI.[98] |

| 2011 | December 12 | Project | Luke Muehlhauser announces the creation of Friendly-AI.com, a website introducing the idea of Friendly AI.[99] |

| 2012 | | Staff | Michael Vassar leaves MIRI to found MetaMed, a personalized medical advising company.[100] |

| 2011 – 2012 | December 10 and January 12 | Opinion | A two-part Q&A with MIRI's newly appointed Executive Director Luke Muehlhauser is published.[101][102] |

| 2012 | February 4 – May 4 | Domain | At least during this period, singularity.org redirects to singinst.org.[103] |

| 2012 | May 8 | | MIRI's April 2012 progress report is published, in which the Center for Applied Rationality's name is announced. Until this point, CFAR was known as the "Rationality Group" or "Rationality Org".[104] |

| 2012 | May 11 | Outside review | Holden Karnofsky publishes "Thoughts on the Singularity Institute (SI)" on LessWrong. The post explains why GiveWell does not plan to recommend the Singularity Institute.[105] |

| 2012 | June 16–28 | Domain | Sometime during this period, singinst.org begins redirecting to singularity.org, both being controlled by MIRI.[106] The new website at singularity.org would be announced in the July 2012 newsletter.[107] |

| 2012 | July, August 6 | | Starting in July 2012, MIRI begins publishing monthly newsletters as blog posts. The July 2012 newsletter is posted on August 6.[107][108] |

| 2012 | August 15 | | Luke Muehlhauser does an "ask me anything" (AMA) on reddit's r/Futurology.[109] |

| 2012 | September (approximate) | Project | MIRI begins to partner with Youtopia as its volunteer management platform.[110] |

| 2012 | October 13–14 | Conference | The Singularity Summit 2012 takes place.[111][112] |

| 2012 | November 11–18 | Workshop | The 1st Workshop on Logic, Probability, and Reflection takes place.[113] |

| 2012 | December 6 | | Singularity University announces that it has acquired the Singularity Summit from MIRI.[114] Joshua Fox praises the move, noting: "The Singularity Summit was always off-topic for SI: more SU-like than SI-like."[115] However, Singularity University would not continue the original tradition of the Summit,[116] and the later EA Global conference (organized in some years by Amy Willey Labenz, who used to work at MIRI) would inherit some of the characteristics of the Singularity Summit.[117] Around this time, Amy Willey Labenz also leaves MIRI.[64] |

| 2013 | | Mission | The organization mission changes to: "To ensure that the creation of smarter-than-human intelligence has a positive impact. Thus, the charitable purpose of the organization is to: a) perform research relevant to ensuring that smarter-than-human intelligence has a positive impact; b) raise awareness of this important issue; c) advise researchers, leaders and laypeople around the world; d) as necessary, implement a smarter-than-human intelligence with humane, stable goals."[118] This mission would stay the same for 2014 and 2015. |

| 2013–2014 | | Project | MIRI conducts a large number of conversations during this period. Out of 80 conversations listed as of July 14, 2017, 75 are from this period (19 in 2013 and 56 in 2014).[119] In the "2014 in review" post on MIRI's blog, Luke Muehlhauser writes: "Nearly all of the interviews were begun in 2013 or early 2014, even if they were not finished and published until much later. Mid-way through 2014, we decided to de-prioritize expert interviews, due to apparent diminishing returns."[120] |

| 2013 | January | Staff | Michael Anissimov leaves MIRI.[121] |

| 2013 | January 30 | | MIRI announces that it has renamed itself from "Singularity Institute for Artificial Intelligence" to "Machine Intelligence Research Institute".[122] |

| 2013 | February 1 | Publication | Facing the Intelligence Explosion by Luke Muehlhauser is published by MIRI.[123] |

| 2013 | February 11 – February 28 | Domain | Sometime during this period, MIRI's new website at intelligence.org begins to function.[124][125] The new website is announced by Executive Director Luke Muehlhauser in a blog post on February 28.[126] |

| 2013 | March 2 – July 4 | Domain | At least during this period, singularity.org redirects to intelligence.org, MIRI's new domain.[127] |

| 2013 | April 3 | Publication | Singularity Hypotheses: A Scientific and Philosophical Assessment is published by Springer. The book contains chapters written by MIRI researchers and research associates.[128][129] |

| 2013 | April 3–24 | Workshop | The 2nd Workshop on Logic, Probability, and Reflection takes place.[113] |

| 2013 | April 13 | Strategy | MIRI publishes an update on its strategy on its blog. In the blog post, MIRI executive director Luke Muehlhauser states that MIRI plans to put less effort into public outreach and shift its research to Friendly AI math research.[130] |

| 2013 | April 18 | Staff | MIRI announces that executive assistant Ioven Fables is leaving MIRI due to changes in MIRI's operational needs (from its transition to a research-oriented organization).[131] |

| 2013 | July 4 | Social media | MIRI's Twitter account, MIRIBerkeley, is created.[132] |

| 2013 | July 4 | Social media | The earliest post on MIRI's Google Plus account, IntelligenceOrg, is from this day.[133][134] |

| 2013 | July 8–14 | Workshop | The 3rd Workshop on Logic, Probability, and Reflection takes place.[113] |

| 2013 | August 4 | Domain | By this point, singularity.org is operated by Singularity University.[135] |

| 2013 | September 1 | Publication | The Hanson-Yudkowsky AI-Foom Debate is published as an ebook by MIRI.[136] |

| 2013 | September 7–13 | Workshop | The 4th Workshop on Logic, Probability, and Reflection takes place.[113] |

| 2013 | October 25 | Social media | The MIRI YouTube account, MIRIBerkeley, is created.[137] |

| 2013 | October 27 | Outside review | MIRI meets with Holden Karnofsky, Jacob Steinhardt, and Dario Amodei for a discussion about MIRI's organizational strategy.[138][139] |

| 2013 | November 23–29 | Workshop | The 5th Workshop on Logic, Probability, and Reflection takes place.[113] |

| 2013 | December 10 | Domain | The first working Wayback Machine snapshot of the MIRI Volunteers website, available at mirivolunteers.org, is from this day.[140] |

| 2013 | December 14–20 | Workshop | The 6th Workshop on Logic, Probability, and Reflection takes place.[113] This is the first workshop attended by Nate Soares (at Google at the time), who would later become executive director of MIRI.[1][141] |

| 2014 | January (approximate) | Financial | Jed McCaleb, the creator of Ripple and original founder of Mt. Gox, makes a donation worth $500,000 in XRP.[142] |

| 2014 | January 16 | Outside review | MIRI meets with Holden Karnofsky of GiveWell for a discussion on existential risk strategy.[143][139] |

| 2014 | February 1 | Publication | Smarter Than Us: The Rise of Machine Intelligence by Stuart Armstrong is published by MIRI.[144] |

| 2014 | March–May | Influence | Future of Life Institute (FLI) is founded.[145] MIRI is a partner organization to FLI.[146] The Singularity Summit, MIRI's annual conference from 2006–2012, also played "a key causal role in getting Max Tegmark interested and the FLI created".[147] "Tallinn, a co-founder of FLI and of the Cambridge Centre for the Study of Existential Risk (CSER), cites MIRI as a key source for his views on AI risk".[148] |

| 2014 | March 12–13 | Staff | Some recent hires at MIRI are announced. Among the new team members is Nate Soares, who would become MIRI's executive director in 2015.[141] MIRI also hosts an Expansion Party to announce these hires to local supporters.[149][150][151][152] |

| 2014 | May 3–11 | Workshop | The 7th Workshop on Logic, Probability, and Reflection takes place.[113] |

| 2014 | July–September | Influence | Nick Bostrom's book Superintelligence: Paths, Dangers, Strategies is published. While Bostrom has never worked for MIRI, he is a research advisor to MIRI. MIRI also contributed substantially to the publication of the book.[147] |

| 2014 | July 4 | Project | Earliest evidence of AI Impacts existing is from this day.[153] |

| 2014 | August | Project | The AI Impacts website launches.[154] |

| 2014 | November 4 | Project | The Intelligent Agent Foundations Forum, run by MIRI, is launched.[155] |

| 2015 | January | Project | AI Impacts rolls out a new website.[156] |

| 2015 | January 2–5 | Conference | The Future of AI: Opportunities and Challenges, an AI safety conference, takes place in Puerto Rico. The conference is organized by the Future of Life Institute, but several MIRI staff (including Luke Muehlhauser, Eliezer Yudkowsky, and Nate Soares) attend.[157] Nate Soares would later call this the "turning point" of when top academics begin to focus on AI risk.[158] |

| 2015 | March 11 | Influence | Rationality: From AI to Zombies is published. It is an ebook of Eliezer Yudkowsky's series of blog posts, called "the Sequences".[159][160][161] |

| 2015 | May 4–6 | Workshop | The 1st Introductory Workshop on Logical Decision Theory takes place.[113] |

| 2015 | May 6 | Staff | Executive director Luke Muehlhauser announces his departure from MIRI, for a position as a Research Analyst at Open Philanthropy. The announcement also states that Nate Soares will be the new executive director.[162] |

| 2015 | May 13–19 | Conference | Along with the Centre for the Study of Existential Risk, MIRI organizes the Self-prediction in Decision Theory and Artificial Intelligence Conference. Several MIRI researchers present at the conference.[163] |

| 2015 | May 29–31 | Workshop | The 1st Introductory Workshop on Logical Uncertainty takes place.[113] |

| 2015 | June 3–4 | Staff | Nate Soares begins as executive director of MIRI.[1] |

| 2015 | June 11 | | Nate Soares, executive director of MIRI, does an "ask me anything" (AMA) on the Effective Altruism Forum.[164] |

| 2015 | June 12–14 | Workshop | The 2nd Introductory Workshop on Logical Decision Theory takes place.[113] |

| 2015 | June 26–28 | Workshop | The 1st Introductory Workshop on Vingean Reflection takes place.[113] |

| 2015 | July 7–26 | Project | The MIRI Summer Fellows program 2015, run by the Center for Applied Rationality, takes place.[165] This program is apparently "relatively successful at recruiting staff for MIRI".[166] |

| 2015 | August 7–9 | Workshop | The 2nd Introductory Workshop on Logical Uncertainty takes place.[113] |

| 2015 | August 28–30 | Workshop | The 3rd Introductory Workshop on Logical Decision Theory takes place.[113] |

| 2015 | September 26 | Outside review | The Effective Altruism Wiki page on MIRI is created.[167] |

| 2016 | | Publication | MIRI pays Eliezer Yudkowsky to produce AI alignment content for Arbital.[168][169] (Not sure if there are any more details of this available.) |

| 2016 | March 30 | Staff | MIRI announces two internal staff promotions: Malo Bourgon, formerly a program management analyst, becomes Chief Operating Officer (COO). Also, Rob Bensinger, who was previously outreach coordinator, is promoted to the role of research communications manager.[170] |

| 2016 | April 1–3 | Workshop | The workshop on Self-Reference, Type Theory, and Formal Verification takes place.[113] |

| 2016 | May 6 (talk), December 28 (transcript release) | Publication | In May 2016, Eliezer Yudkowsky gives a talk titled "AI Alignment: Why It’s Hard, and Where to Start." On December 28, 2016, an edited version of the transcript is released on the MIRI blog.[171][172] |

| 2016 | May 28–29 | Workshop | The Colloquium Series on Robust and Beneficial AI (CSRBAI) Workshop on Transparency takes place.[113] |

| 2016 | June 4–5 | Workshop | The Colloquium Series on Robust and Beneficial AI (CSRBAI) Workshop on Robustness and Error-Tolerance takes place.[113] |

| 2016 | June 11–12 | Workshop | The Colloquium Series on Robust and Beneficial AI (CSRBAI) Workshop on Preference Specification takes place.[113] |

| 2016 | June 17 | Workshop | The Colloquium Series on Robust and Beneficial AI (CSRBAI) Workshop on Agent Models and Multi-Agent Dilemmas takes place.[113] |

| 2016 | July 27 | | MIRI announces its machine learning technical agenda, called "Alignment for Advanced Machine Learning Systems".[173] |

| 2016 | August | Financial | Open Philanthropy awards a grant worth $500,000 to Machine Intelligence Research Institute. The grant writeup notes, "Despite our strong reservations about the technical research we reviewed, we felt that awarding $500,000 was appropriate for multiple reasons".[174] |

| 2016 | August 12–14 | Workshop | The 8th Workshop on Logic, Probability, and Reflection takes place.[113] |

| 2016 | August 26–28 | Workshop | The 1st Workshop on Machine Learning and AI Safety takes place.[113] |

| 2016 | September 12 | Publication | MIRI announces the release of its new paper, "Logical Induction" by Scott Garrabrant, Tsvi Benson-Tilsen, Andrew Critch, Nate Soares, and Jessica Taylor.[175][176] A positive review of the paper by a machine learning researcher would be cited as a reason for Open Philanthropy's grant to MIRI in October 2017. |

| 2016 | October 12 | | MIRI does an "ask me anything" (AMA) on the Effective Altruism Forum.[177] |

| 2016 | October 21–23 | Workshop | The 2nd Workshop on Machine Learning and AI Safety takes place.[113] |

| 2016 | November 11–13 | Workshop | The 9th Workshop on Logic, Probability, and Reflection takes place.[113] |

| 2016 | December | Financial | Open Philanthropy awards a grant worth $32,000 to AI Impacts.[178] |

| 2016 | December 1–3 | Workshop | The 3rd Workshop on Machine Learning and AI Safety takes place.[113] |

| 2017 | March 25–26 | Workshop | The Workshop on Agent Foundations and AI Safety takes place.[113] |

| 2017 | April 1–2 | Workshop | The 4th Workshop on Machine Learning and AI Safety takes place.[113] |

| 2017 | May 24 | Publication | "When Will AI Exceed Human Performance? Evidence from AI Experts" is published on the arXiv.[179] Two researchers from AI Impacts are authors on the paper. The paper would be mentioned in more than twenty news articles.[180] |

| 2017 | July 4 | Strategy | MIRI announces that it will be putting relatively little work into the "Alignment for Advanced Machine Learning Systems" agenda over the next year due to the departure of Patrick LaVictoire and Jessica Taylor, and leave taken by Andrew Critch.[181] |

| 2017 | July 7 | Outside review | Daniel Dewey, program officer for potential risks from advanced artificial intelligence at Open Philanthropy, publishes a post giving his thoughts on MIRI's work on highly reliable agent design. The post is intended to provide "an unambiguous snapshot" of Dewey's beliefs, and gives the case for highly reliable agent design work (as he understands it) and why he finds other approaches (such as learning to reason from humans) more promising.[182] |

| 2017 | July 14 | Outside review | The timelines wiki page on MIRI is publicly circulated (see § External links). |

| 2017 | October 13 | Publication | "Functional Decision Theory: A New Theory of Instrumental Rationality" by Eliezer Yudkowsky and Nate Soares is posted to the arXiv.[183] The paper is announced on the Machine Intelligence Research Institute blog on October 22.[184] |

| 2017 | October 13 | Publication | Eliezer Yudkowsky's blog post There's No Fire Alarm for Artificial General Intelligence is published on the MIRI blog and on the new LessWrong (this is shortly after the launch of the new version of LessWrong).[185][186] |

| 2017 | October | Financial | Open Philanthropy awards MIRI a grant of $3.75 million over three years ($1.25 million per year). The cited reasons for the grant are a "very positive review" of MIRI's "Logical Induction" paper by an "outstanding" machine learning researcher, as well as Open Philanthropy having made more grants in the area so that a grant to MIRI is less likely to appear as an "outsized endorsement of MIRI's approach".[187][188] |

| 2017 | November 16 | Publication | Eliezer Yudkowsky's sequence/book Inadequate Equilibria is fully published. The book was published chapter-by-chapter on LessWrong 2.0 and the Effective Altruism Forum starting October 28.[189][190][191] The book is reviewed on multiple blogs including Slate Star Codex (Scott Alexander),[192] Shtetl-Optimized (Scott Aaronson),[193] and Overcoming Bias (Robin Hanson).[194] The book outlines Yudkowsky's approach to epistemology, covering topics such as whether to trust expert consensus and whether one can expect to do better than average. |

| 2017 | November 25, November 26 | Publication | A two-part series "Security Mindset and Ordinary Paranoia" and "Security Mindset and the Logistic Success Curve" by Eliezer Yudkowsky is published. The series uses the analogy of "security mindset" to highlight the importance and non-intuitiveness of AI safety. This is based on Eliezer Yudkowsky's 2016 talk "AI Alignment: Why It’s Hard, and Where to Start."[195][196] |

| 2017 | December 1 | Financial | MIRI's 2017 fundraiser begins. The announcement post describes MIRI's fundraising targets, recent work at MIRI (including recent hires), and MIRI's strategic background (which gives a high-level overview of how MIRI's work relates to long-term outcomes).[197] The fundraiser would conclude with $2.5 million raised from over 300 distinct donors. The largest donation would be from Vitalik Buterin ($763,970 worth of Ethereum).[198] |

| 2018 | February | Workshop | MIRI and the Center for Applied Rationality (CFAR) conduct the first AI Risk for Computer Scientists (AIRCS) workshop. This would be the first of several AIRCS workshops, with seven more in 2018 and many more in 2019.[199] The page about AIRCS says: "The material at the workshop is a mixture of human rationality content that’s loosely similar to some CFAR material, and a variety of topics related to AI risk, including thinking about forecasting, different people’s ideas of where the technical problems are, and various potential paths for research."[200] |

| 2018 | October 29 | Project | The launch of the AI Alignment Forum (often abbreviated to just "Alignment Forum") is announced on the MIRI blog. The Alignment Forum is built and maintained by the LessWrong 2.0 team (which is distinct from MIRI), but with help from MIRI. The Alignment Forum replaces MIRI's existing Intelligent Agent Foundations Forum, and is intended as "a single online hub for alignment researchers to have conversations about all ideas in the field".[201][202] The Alignment Forum had previously launched in beta on July 10, 2018,[203] with the day of launch chosen as the first "AI Alignment Writing Day" for the MIRI Summer Fellows Program (beginning an annual tradition).[204] |

| 2018 | October 29 – November 15 | Publication | The Embedded Agency sequence, by MIRI researchers Abram Demski and Scott Garrabrant, is published on the MIRI blog (text version),[205] on LessWrong 2.0 (illustrated version),[206] and on the Alignment Forum (illustrated version)[207] in serialized installments from October 29 to November 8; on November 15 a full-text version containing the entire sequence is published.[208] The term "embedded agency" is a renaming of an existing concept researched at MIRI, called "naturalized agency".[209] |

| 2018 | November 22 | Strategy | Nate Soares, executive director of MIRI, publishes MIRI's 2018 update post (the post was not written exclusively by Soares; see footnote 1, which begins "This post is an amalgam put together by a variety of MIRI staff"). The post describes new research directions at MIRI (which are not explained in detail due to MIRI's nondisclosure policy); explains the concept of "deconfusion" and why MIRI values it; announces MIRI's "nondisclosed-by-default" policy for most of its research; and gives a recruitment pitch for people to join MIRI.[210] |

| 2018 | November 26 | Financial | MIRI's 2018 fundraiser begins.[199] The fundraiser would conclude on December 31 with $951,817 raised from 348 donors.[211] |

| 2018 | August (joining), November 28 (announcement), December 1 (AMA) | Staff | MIRI announces that prolific Haskell developer Edward Kmett has joined.[212] Kmett participates in an Ask Me Anything (AMA) on Reddit's Haskell subreddit on December 1, 2018. In reply to questions, he clarifies that MIRI's nondisclosure policy will not affect the openness of his work, but that, as the main researcher at MIRI who publishes openly, he will feel more pressure to produce higher-quality work, since the whole organization may be judged by it.[213] |

| 2018 | December 15 | Publication | MIRI announces a new edition of Eliezer Yudkowsky's Rationality: From AI to Zombies (i.e. the book version of "the Sequences"). At the time of the announcement, only two sequences, Map and Territory and How to Actually Change Your Mind, are available in the new edition.[214][215] |

| 2019 | February | Financial | Open Philanthropy grants MIRI $2,112,500 over two years. The grant amount is decided by the Committee for Effective Altruism Support, which also decides on amounts for grants to 80,000 Hours and the Centre for Effective Altruism at around the same time.[216] The Berkeley Existential Risk Initiative (BERI) grants $600,000 to MIRI at around the same time. MIRI discusses both grants in a blog post.[217] |

| 2019 | April 23 | Financial | The Long-Term Future Fund announces that it is donating $50,000 to MIRI as part of this grant round. Oliver Habryka, the main grant investigator, explains the reasoning in detail, including his general positive impression of MIRI and his thoughts on funding gaps.[218] |

| 2019 | December | Financial | MIRI's 2019 fundraiser raises $601,120 from over 259 donors. A retrospective blog post on the fundraiser, published February 2020, discusses possible reasons the fundraiser raised less money than fundraisers in previous years, particularly 2017. Reasons include: lower cryptocurrency prices causing fewer donations from cryptocurrency donors, nondisclosed-by-default policy making it harder for donors to evaluate research, US tax law changes in 2018 causing more donation-bunching across years, fewer counterfactual matching opportunities, donor perception of reduced marginal value of donations, skew in donations from a few big donors, previous donors moving from earning-to-give to direct work, and donors responding to MIRI's urgent need for funds in previous years by donating in those years and having less to donate now.[219] |

| 2020 | February | Financial | Open Philanthropy grants $7,703,750 to MIRI over two years, with the amount determined by the Committee for Effective Altruism Support (CEAS). Of the funding, $6.24 million comes from Good Ventures (the usual funding source) and $1.46 million comes from Ben Delo, co-founder of BitMEX and recent Giving Pledge signatory, via a co-funding partnership. Other organizations receiving money based on CEAS recommendations at around the same time are Ought (also focused on AI safety), the Centre for Effective Altruism, and 80,000 Hours.[220] MIRI would blog about the grant in April 2020, calling the grant "our largest grant to date."[221] |

| 2020 | March 2 | Financial | The Berkeley Existential Risk Initiative (BERI) grants $300,000 to MIRI. Writing about the grant in April 2020, MIRI says: "at the time of our 2019 fundraiser, we expected to receive a grant from BERI in early 2020, and incorporated this into our reserves estimates. However, we predicted the grant size would be $600k; now that we know the final grant amount, that estimate should be $300k lower."[221] |

| 2020 | April 14 | Financial | The Long-Term Future Fund grants $100,000 to MIRI.[222][221] |

| 2020 | May | Financial | The Survival and Flourishing Fund publishes the outcome of its recommendation S-process for the first half of 2020. This includes three grant recommendations to MIRI: $20,000 from SFF, $280,000 from Jaan Tallinn, and $40,000 from Jed McCaleb.[223] The grant from SFF to MIRI would also be included in SFF's grant list with a grant date of May 2020.[224] |

| 2020 | October 9 | | A Facebook post by Rob Bensinger, MIRI's research communications manager, says that MIRI is considering moving its office from its current location in Berkeley, California (in the San Francisco Bay Area) to another location in the United States or Canada. Two areas under active consideration are the Northeastern US (New Hampshire in particular) and the area surrounding Toronto. In response to a question about reasons, Bensinger clarifies that he cannot disclose reasons yet, but that he wanted to announce preemptively so that people can factor this uncertainty into any plans to move or to start new rationalist hubs.[225] |

| 2020 | October 22 | Publication | Scott Garrabrant publishes (cross-posted to LessWrong and the Effective Altruism Forum) a blog post titled "Introduction to Cartesian Frames" that is the first post in a sequence about Cartesian frames, a new conceptual framework for thinking about agency.[226][227] |

| 2020 | November (announcement) | Financial | Jaan Tallinn grants $543,000 to MIRI as an outcome of the S-process carried out by the Survival and Flourishing Fund for the second half of 2020.[228] |

| 2020 | November 30 (announcement) | Financial | In the November newsletter, MIRI announces that it will not be running a formal fundraiser this year, but that it will continue participating in Giving Tuesday and other matching opportunities.[229] |

| 2020 | December 21 | Strategy | Malo Bourgon publishes MIRI's "2020 Updates and Strategy" blog post. The post talks about MIRI's efforts to relocate staff after the COVID-19 pandemic as well as the generally positive result of the changes, and possible future implications for MIRI itself moving out of the Bay Area. It also talks about slow progress on the research directions initiated in 2017, leading to MIRI feeling the need to change course. The post also talks about the public part of MIRI's progress in other research areas.[230] |

| 2021 | May 8 | | Rob Bensinger publishes a post on LessWrong providing an update on MIRI's current thoughts regarding the possibility of relocation from the San Francisco Bay Area.[231] |

| 2021 | May 13 | Financial | MIRI announces two major donations to it: $15,592,829 in MakerDAO (MKR) from an anonymous donor with a restriction to spend a maximum of $2.5 million per year till 2024, and the remaining funds available in 2025, and 1050 ETH from Vitalik Buterin, worth $4,378,159.[232] |

| 2021 | May 23 | | In a talk, MIRI researcher Scott Garrabrant describes "finite factored sets" and uses them to introduce an "alternative to the Pearlian paradigm."[233] |

| 2021 | July 1 | | An update is added to Rob Bensinger's May 8 post about MIRI moving from the San Francisco Bay Area. The update links to a comment by MIRI board member Blake Borgeson, who had been tasked with leading/coordinating MIRI's relocation decision process. The update says that for now, MIRI has decided against moving from its current location, citing uncertainty about long-term strategy and trajectory. However, MIRI will show more flexibility in terms of its staff working remotely.[231] |

| 2021 | November 15 | | In the period around this date, several private conversations between MIRI people (Eliezer Yudkowsky, Nate Soares, and Rob Bensinger) and others in the AI safety community (Richard Ngo, Jaan Tallinn, Paul Christiano, Ajeya Cotra, Beth Barnes, Carl Shulman, Holden Karnofsky, and Rohin Shah) are published to the Alignment Forum and cross-posted to LessWrong; some are also cross-posted to the Effective Altruism Forum. On November 15, Rob Bensinger creates a sequence "Late 2021 MIRI Conversations" on the Alignment Forum for these posts.[234] |

| 2021 | November 29 | | MIRI announces on the Alignment Forum that it is looking for assistance with getting datasets for its Visible Thoughts Project. The hypothesis is described as follows: "Language models can be made more understandable (and perhaps also more capable, though this is not the goal) by training them to produce visible thoughts."[235] |

| 2021 | December | Financial | MIRI offered $200,000 to build an AI-dungeon-style writing dataset annotated with thoughts, and an additional $1,000,000 for scaling it 10x. |

| 2022 | July | | MIRI released three major posts: "AGI Ruin: A List of Lethalities," "A central AI alignment problem," and "Six Dimensions of Operational Adequacy in AGI Projects." |

| 2022 | July | Strategy | MIRI temporarily paused its newsletter and public communications to focus on refining internal strategies. |

| 2023 | July | Advocacy | Eliezer Yudkowsky advocated for an indefinite worldwide moratorium on advanced AI training. |

| 2023 | April | Leadership | Leadership change: Malo Bourgon became CEO, Nate Soares shifted to President, Alex Vermeer stepped up as COO, and Eliezer Yudkowsky became the Chair of the Board.[236] |

| 2024 | April | Strategy | MIRI released its 2024 Mission and Strategy update, announcing a major focus shift towards broad communication and policy change.[237] |

| 2024 | April | | MIRI launched a new research team focused on technical AI governance.[238] |

| 2024 | May 14 | Communication | MIRI significantly expanded its communications team, emphasizing public outreach and policy influence. |

| 2024 | May 29 | Project | MIRI shut down the Visible Thoughts Project.[239] |

| 2024 | June | Communication | MIRI Communications Manager Gretta Duleba emphasized the need to shut down frontier AI development and install an "off-switch." |

| 2024 | June | Research | The Agent Foundations team, including Scott Garrabrant, parted ways with MIRI to continue as independent researchers. |

Numerical and visual data

Google Scholar

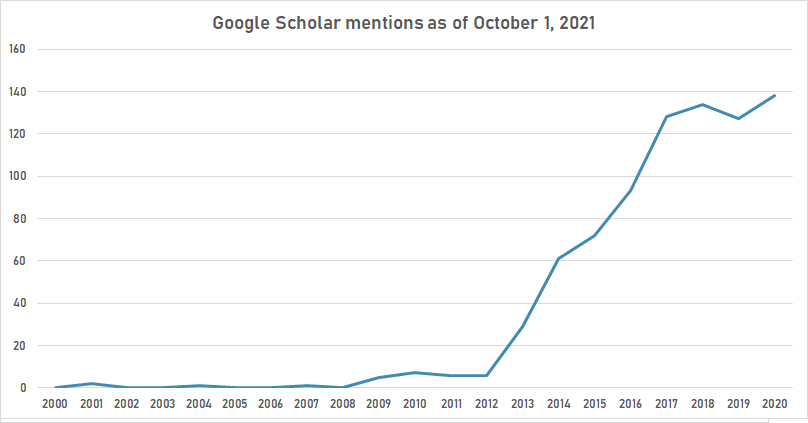

The following table summarizes per-year mentions on Google Scholar as of October 1, 2021.

| Year | "Machine Intelligence Research Institute" |

|---|---|

| 2000 | 0 |

| 2001 | 2 |

| 2002 | 0 |

| 2003 | 0 |

| 2004 | 1 |

| 2005 | 0 |

| 2006 | 0 |

| 2007 | 1 |

| 2008 | 0 |

| 2009 | 5 |

| 2010 | 7 |

| 2011 | 6 |

| 2012 | 6 |

| 2013 | 29 |

| 2014 | 61 |

| 2015 | 72 |

| 2016 | 93 |

| 2017 | 128 |

| 2018 | 134 |

| 2019 | 127 |

| 2020 | 138 |

Google Trends

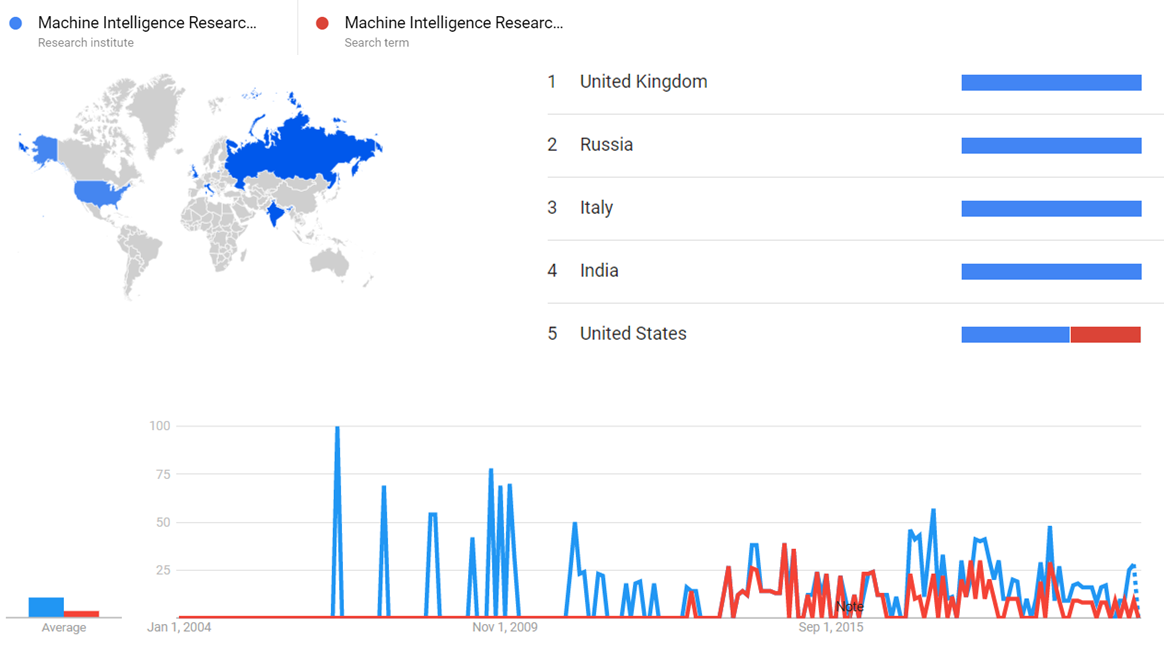

The comparative chart below shows Google Trends data for Machine Intelligence Research Institute (Research institute) and Machine Intelligence Research Institute (Search term), from January 2004 to March 2021, when the screenshot was taken. Interest is also ranked by country and displayed on a world map.[240]

Google Ngram Viewer

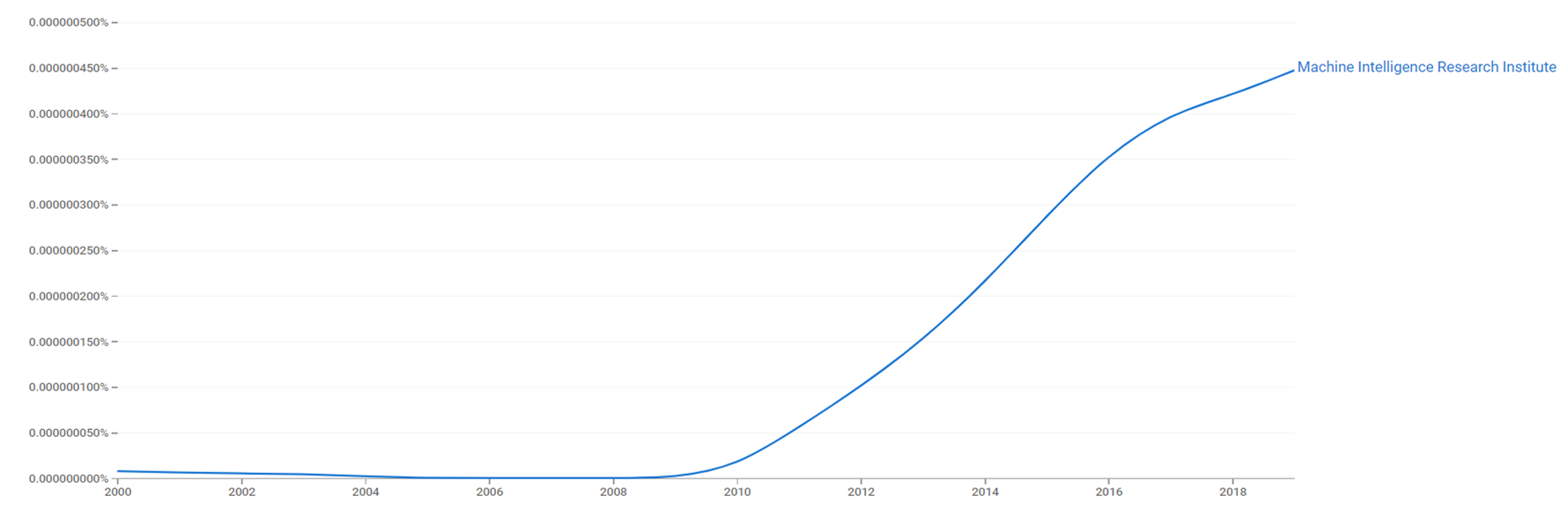

The chart below shows Google Ngram Viewer data for Machine Intelligence Research Institute, from 2000 to 2019.[241]

Wikipedia desktop pageviews across the different names

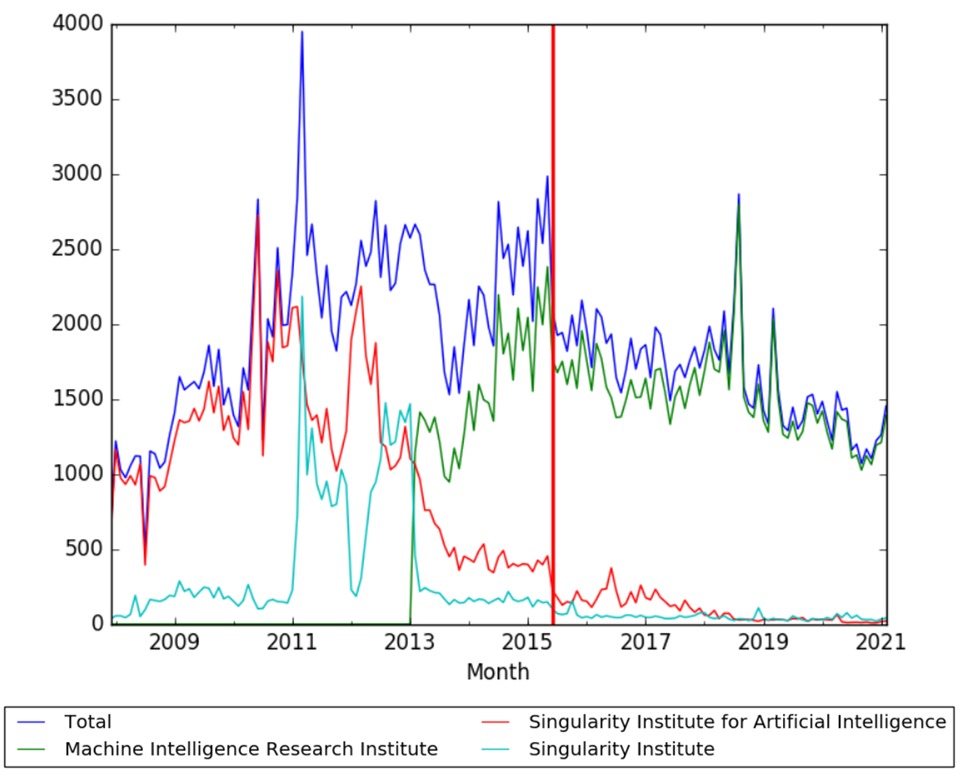

The image below shows desktop pageviews of the page Machine Intelligence Research Institute and its predecessor pages, "Singularity Institute for Artificial Intelligence" and "Singularity Institute".[242] The change in names occurred on these dates:[243][244]

- December 23, 2011: Two pages "Singularity Institute" and "Singularity Institute for Artificial Intelligence" merged into a single page "Singularity Institute for Artificial Intelligence"

- April 16, 2012: Page moved from "Singularity Institute for Artificial Intelligence" to "Singularity Institute" with the old name redirecting to the new name

- February 1, 2013: Page moved from "Singularity Institute" to "Machine Intelligence Research Institute" with both old names redirecting to the new name

The red vertical line (for June 2015) represents a change in the method of estimating pageviews; specifically, pageviews by bots and spiders are excluded for months on the right of the line.

Meta information on the timeline

How the timeline was built

The initial version of the timeline was written by Issa Rice.

Issa likes to work locally and track changes with Git, so the revision history on this wiki only shows changes in bulk. To see more incremental changes, refer to the commit history.

Funding information for this timeline is available.

Feedback and comments

Feedback for the timeline can be provided at the following places:

What the timeline is still missing

- TODO Figure out how to cover publications

- TODO mention kurzweil

- TODO maybe include some of the largest donations (e.g. the XRP/ETH ones, tallinn, thiel)

- TODO maybe fundraisers

- TODO look more closely through some AMAs: [1], [2]

- TODO maybe more info in this SSC post [3]

- TODO more links at EA Wikia page [4]

- TODO lots of things from strategy updates, annual reviews, etc. [5]

- TODO Ben Goertzel talks about his involvement with MIRI [6], also more on opencog

- TODO giant thread on Ozy's blog [7]

- NOTE From 2017-07-06: "years that have few events so far: 2003 (one event), 2007 (one event), 2008 (three events), 2010 (three events), 2017 (three events)"

- TODO possibly include more from the old MIRI volunteers site. Some of the volunteering opportunities like proofreading and promoting MIRI by giving it good web of trust ratings seem to give a good flavor of what MIRI was like, the specific challenges in terms of switching domains, and so on.

- TODO cover Berkeley Existential Risk Initiative (BERI), kinda a successor to MIRI volunteers?

- TODO cover launch of Center for Human-Compatible AI

- TODO not sure how exactly to include this in the timeline, but something about MIRI's changing approach to funding certain types of contract work. e.g. Vipul says "I believe the work I did with Luke would no longer be sponsored by MIRI as their research agenda is now much more narrowly focused on the mathematical parts."

- TODO who is Tyler Emerson?

- modal combat and some other domains: [8], [9], [10]

- https://www.lesswrong.com/posts/yGZHQYqWkLMbXy3z7/video-q-and-a-with-singularity-institute-executive-director

- https://ea.greaterwrong.com/posts/NBgpPaz5vYe3tH4ga/on-deference-and-yudkowsky-s-ai-risk-estimates

Timeline update strategy

Some places to look on the MIRI blog:

Also general stuff like big news coverage.

See also

- Timeline of AI safety

- Timeline of Against Malaria Foundation

- Timeline of Center for Applied Rationality

- Timeline of decision theory

- Timeline of Future of Humanity Institute

External links

- Official website

- Intelligent Agent Foundations Forum

- LessWrong

- Machine Intelligence Research Institute (Wikipedia)

- The Singularity Wars (LessWrong) covers some of the early history of MIRI and the differences with Singularity University

- Donations information and other relevant documents, compiled by Vipul Naik

- Staff history and list of products on AI Watch

References

- ↑ 1.0 1.1 1.2 Nate Soares (June 3, 2015). "Taking the reins at MIRI". LessWrong. Retrieved July 5, 2017.

- ↑ "lukeprog comments on "Thoughts on the Singularity Institute"". LessWrong. May 10, 2012. Retrieved July 15, 2012.

- ↑ "Halfwitz comments on "Breaking the vicious cycle"". LessWrong. November 23, 2014. Retrieved August 3, 2017.

- ↑ Eliezer S. Yudkowsky (August 31, 2000). "Eliezer, the person". Archived from the original on February 5, 2001.

- ↑ "Yudkowsky - Staring into the Singularity 1.2.5". Retrieved June 1, 2017.

- ↑ Eliezer S. Yudkowsky. "Coding a Transhuman AI". Retrieved July 5, 2017.

- ↑ Eliezer S. Yudkowsky. "Singularitarian mailing list". Retrieved July 5, 2017.

The "Singularitarian" mailing list was first launched on Sunday, March 11th, 1999, to assist in the common goal of reaching the Singularity. It will do so by pooling the resources of time, brains, influence, and money available to Singularitarians; by enabling us to draw on the advice and experience of the whole; by bringing together individuals with compatible ideas and complementary resources; and by binding the Singularitarians into a community.

- ↑ 8.0 8.1 8.2 Eliezer S. Yudkowsky. "PtS: Version History". Retrieved July 4, 2017.

- ↑ "Yudkowsky's Coming of Age - Lesswrongwiki". LessWrong. Retrieved January 30, 2018.

- ↑ "My Naturalistic Awakening - Less Wrong". LessWrong. Retrieved January 30, 2018.

- ↑ "jacob_cannell comments on [link] FLI's recommended project grants for AI safety research announced - Less Wrong". LessWrong. Retrieved January 30, 2018.

- ↑ Eliezer S. Yudkowsky. "Singularitarian Principles 1.0". Retrieved July 5, 2017.

- ↑ "SL4: By Date". Retrieved June 1, 2017.

- ↑ Eliezer S. Yudkowsky. "SL4 Mailing List". Retrieved June 1, 2017.

- ↑ 15.0 15.1 Eliezer S. Yudkowsky. "Coding a Transhuman AI § Version History". Retrieved July 5, 2017.

- ↑ "Form 990-EZ 2000" (PDF). Retrieved June 1, 2017.

Organization was incorporated in July 2000 and does not have a financial history for years 1996-1999.

- ↑ "About the Singularity Institute for Artificial Intelligence". Retrieved July 1, 2017.

The Singularity Institute for Artificial Intelligence, Inc. (SIAI) was incorporated on July 27th, 2000 by Brian Atkins, Sabine Atkins (then Sabine Stoeckel) and Eliezer Yudkowsky. The Singularity Institute is a nonprofit corporation governed by the Georgia Nonprofit Corporation Code, and is federally tax-exempt as a 501(c)(3) public charity. At this time, the Singularity Institute is funded solely by individual donors.

- ↑ Eliezer S. Yudkowsky. "Singularity Institute for Artificial Intelligence, Inc.". Retrieved July 4, 2017.

- ↑ Eliezer S. Yudkowsky. "Singularity Institute: News". Retrieved July 1, 2017.

April 08, 2001: The Singularity Institute for Artificial Intelligence, Inc. announces that it has received tax-exempt status and is now accepting donations.

- ↑ 20.0 20.1 "Singularity Institute for Artificial Intelligence // News // Archive". Retrieved July 13, 2017.

- ↑ Singularity Institute for Artificial Intelligence. "SIAI Guidelines on Friendly AI". Retrieved July 13, 2017.

- ↑ Eliezer Yudkowsky (2001). "Creating Friendly AI 1.0: The Analysis and Design of Benevolent Goal Architectures" (PDF). The Singularity Institute. Retrieved July 5, 2017.

- ↑ 23.0 23.1 23.2 23.3 23.4 Eliezer S. Yudkowsky. "Singularity Institute: News". Retrieved July 1, 2017.

- ↑ "SL4: By Thread". Retrieved July 1, 2017.

- ↑ Eliezer S. Yudkowsky (April 7, 2002). "SL4: PAPER: Levels of Organization in General Intelligence". Retrieved July 5, 2017.

- ↑ Singularity Institute for Artificial Intelligence. "Levels of Organization in General Intelligence". Retrieved July 5, 2017.

- ↑ "SL4: By Thread". Retrieved July 1, 2017.

- ↑ "FlareProgrammingLanguage". SL4 Wiki. September 14, 2007. Retrieved July 13, 2017.

- ↑ "Yudkowsky - Bayes' Theorem". Retrieved July 5, 2017.

Eliezer Yudkowsky's work is supported by the Machine Intelligence Research Institute. If you've found Yudkowsky's pages on rationality useful, please consider donating to the Machine Intelligence Research Institute.

- ↑ Yudkowsky, Eliezer (April 30, 2003). "Singularity Institute - update". SL4.

- ↑ 31.0 31.1 31.2 31.3 31.4 31.5 31.6 31.7 "News of the Singularity Institute for Artificial Intelligence". Retrieved July 4, 2017.

- ↑ "Singularity Institute for Artificial Intelligence // The SIAI Voice". Retrieved July 4, 2017.

On March 4, 2004, the Singularity Institute announced Tyler Emerson as our Executive Director. Emerson will be responsible for guiding the Institute. His focus is in nonprofit management, marketing, relationship fundraising, leadership and planning. He will seek to cultivate a larger and more cohesive community that has the necessary resources to develop Friendly AI.

- ↑ Tyler Emerson (April 7, 2004). "SL4: Michael Anissimov - SIAI Advocacy Director". Retrieved July 1, 2017.

The Singularity Institute announces Michael Anissimov as our Advocacy Director. Michael has been an active volunteer for two years, and one of the more prominent voices in the singularity community. He is committed and thoughtful, and we feel very fortunate to have him help lead our advocacy.

- ↑ "Machine Intelligence Research Institute: This is an old revision of this page, as edited by 63.201.36.156 (talk) at 19:28, 14 April 2004.". Retrieved July 15, 2017.

- ↑ Eliezer Yudkowsky. "Coherent Extrapolated Volition" (PDF). Retrieved July 1, 2017.

The information is current as of May 2004, and should not become dreadfully obsolete until late June, when I plan to have an unexpected insight.

- ↑ "Collective Volition". Retrieved July 4, 2017.

- ↑ "Yudkowsky - Technical Explanation". Retrieved July 5, 2017.

Eliezer Yudkowsky's work is supported by the Machine Intelligence Research Institute.

- ↑ Brandon Reinhart. "SIAI - An Examination - Less Wrong". LessWrong. Retrieved June 30, 2017.

- ↑ "SL4: By Thread". Retrieved July 1, 2017.

- ↑ "The Singularity Institute for Artificial Intelligence - 2006 $100,000 Singularity Challenge". Retrieved July 5, 2017.

- ↑ "Thiel Foundation donations made to Machine Intelligence Research Institute". Retrieved September 15, 2019.

- ↑ "Twelve Virtues of Rationality". Retrieved July 5, 2017.

Eliezer Yudkowsky's work is supported by the Machine Intelligence Research Institute.

- ↑ "Singularity Summit". Machine Intelligence Research Institute. Retrieved June 30, 2017.

- ↑ Dan Farber. "The great Singularity debate". ZDNet. Retrieved June 30, 2017.

- ↑ Jerry Pournelle (May 20, 2006). "Chaos Manor Special Reports: The Stanford Singularity Summit". Retrieved June 30, 2017.

- ↑ "Overcoming Bias : Bio". Retrieved June 1, 2017.

- ↑ "Form 990 2007" (PDF). Retrieved July 8, 2017.

- ↑ "Our History". Machine Intelligence Research Institute.

- ↑ "Singularity Institute for Artificial Intelligence". YouTube. Retrieved July 8, 2017.

- ↑ "The Power of Intelligence". Machine Intelligence Research Institute. July 10, 2007. Retrieved May 5, 2020.

- ↑ "The Singularity Summit 2007". Retrieved June 30, 2017.

- ↑ "Scientists Fear Day Computers Become Smarter Than Humans". Fox News. September 12, 2007. Retrieved July 5, 2017.

futurists gathered Saturday for a weekend conference

- ↑ "Yudkowsky - The Simple Truth". Retrieved July 5, 2017.

Eliezer Yudkowsky's work is supported by the Machine Intelligence Research Institute.

- ↑ "About". OpenCog Foundation. Retrieved July 6, 2017.

- ↑ Goertzel, Ben (October 29, 2010). "The Singularity Institute's Scary Idea (and Why I Don't Buy It)". Retrieved September 15, 2019.

- ↑ "The Singularity Summit 2008: Opportunity, Risk, Leadership > Program". Retrieved June 30, 2017.

- ↑ Elise Ackerman (October 23, 2008). "Annual A.I. conference to be held this Saturday in San Jose". The Mercury News. Retrieved July 5, 2017.

- ↑ "The Hanson-Yudkowsky AI-Foom Debate". Lesswrongwiki. LessWrong. Retrieved July 1, 2017.

- ↑ "Eliezer_Yudkowsky comments on Thoughts on the Singularity Institute (SI) - Less Wrong". LessWrong. Retrieved July 15, 2017.

Nonetheless, it already has a warm place in my heart next to the debate with Robin Hanson as the second attempt to mount informed criticism of SIAI.

- ↑ "Recent Singularity Institute Accomplishments". Singularity Institute for Artificial Intelligence. Retrieved July 6, 2017.

- ↑ "FAQ - Lesswrongwiki". LessWrong. Retrieved June 1, 2017.

- ↑ Michael Vassar (February 16, 2009). "Introducing Myself". Machine Intelligence Research Institute. Retrieved July 1, 2017.

- ↑ "SingularityInstitute (@singinst)". Twitter. Retrieved July 4, 2017.

- ↑ Amy Willey Labenz. Personal communication. May 27, 2022.

- ↑ "Wayback Machine". Retrieved July 2, 2017. The first snapshot is from October 5, 2009.

- ↑ "theuncertainfuture.com - Google Search". Retrieved July 2, 2017. The earliest cache seems to be from October 25, 2009. Checking the Jan 1, 2008 – Jan 1, 2009 range produces no result.

- ↑ "The Uncertain Future". Machine Intelligence Research Institute. Retrieved July 2, 2017.

- ↑ McCabe, Thomas (February 4, 2011). "The Uncertain Future Forecasting Project Goes Open-Source". H Plus Magazine. Archived from the original on April 13, 2012. Retrieved July 15, 2017.

- ↑ "The Singularity Summit 2009 > Program". Retrieved June 30, 2017.

- ↑ Stuart Fox (October 2, 2009). "Singularity Summit 2009: The Singularity Is Near". Popular Science. Retrieved June 30, 2017.

- ↑ "Form 990 2009" (PDF). Retrieved July 8, 2017.

- ↑ Michael Anissimov (December 12, 2009). "The Uncertain Future". The Singularity Institute Blog. Retrieved July 5, 2017.

- ↑ "lukeprog comments on Thoughts on the Singularity Institute (SI) - Less Wrong". LessWrong. Retrieved June 30, 2017.

So little monitoring of funds that $118k was stolen in 2010 before SI noticed. (Note that we have won stipulated judgments to get much of this back, and have upcoming court dates to argue for stipulated judgments to get the rest back.)

- ↑ "cjb comments on SIAI Fundraising". LessWrong. Retrieved July 8, 2017.

- ↑ "Almanac Almanac: Police Calls (December 23, 2009)". Retrieved July 8, 2017.

Embezzlement report: Alicia Issac, 37, of Sunnyvale arrested on embezzlement, larceny and conspiracy charges in connection with $51,000 loss, Singularity Institute for Artificial Intelligence in 1400 block of Adams Drive, Dec. 10.

- ↑ "Reply to Holden on The Singularity Institute". LessWrong. July 10, 2012. Retrieved June 30, 2017.

Two former employees stole $118,000 from SI. Earlier this year we finally won stipulated judgments against both individuals, forcing them to pay back the full amounts they stole. We have already recovered several thousand dollars of this.

- ↑ "Form 990 2010" (PDF). Retrieved July 8, 2017.

- ↑ "Harry Potter and the Methods of Rationality Chapter 1: A Day of Very Low Probability, a harry potter fanfic". FanFiction. Retrieved July 1, 2017.

Updated: 3/14/2015 - Published: 2/28/2010

- ↑ David Whelan (March 2, 2015). "The Harry Potter Fan Fiction Author Who Wants to Make Everyone a Little More Rational". Vice. Retrieved July 1, 2017.

- ↑ "2013 in Review: Fundraising - Machine Intelligence Research Institute". Machine Intelligence Research Institute. August 13, 2014. Retrieved July 1, 2017.

Recently, we asked (nearly) every donor who gave more than $3,000 in 2013 about the source of their initial contact with MIRI, their reasons for donating in 2013, and their preferred methods for staying in contact with MIRI. […] Four came into contact with MIRI via HPMoR.

- ↑ Rees, Gareth (August 17, 2010). "Zendegi - Gareth Rees". Retrieved July 15, 2017.

- ↑ Sotala, Kaj (October 7, 2010). "Greg Egan disses stand-ins for Overcoming Bias, SIAI in new book". Retrieved July 15, 2017.

- ↑ Hanson, Robin (March 25, 2012). "Egan's Zendegi". Retrieved July 15, 2017.

- ↑ "Singularity Summit | Program". Retrieved June 30, 2017.

- ↑ "Machine Intelligence Research Institute - Posts". Retrieved July 4, 2017.

- ↑ "Machine Intelligence Research Institute - Posts". Retrieved July 4, 2017.

- ↑ Louie Helm (December 21, 2010). "Announcing the Tallinn-Evans $125,000 Singularity Challenge". Machine Intelligence Research Institute. Retrieved July 7, 2017.

- ↑ Kaj Sotala (December 26, 2010). "Tallinn-Evans $125,000 Singularity Challenge". LessWrong. Retrieved July 7, 2017.

- ↑ "GiveWell conversation with SIAI". GiveWell. February 2011. Retrieved July 4, 2017.

- ↑ Holden Karnofsky. "Singularity Institute for Artificial Intelligence". Yahoo! Groups. Retrieved July 4, 2017.

- ↑ "lukeprog comments on Thoughts on the Singularity Institute (SI)". LessWrong. Retrieved June 30, 2017.

When I began to intern with the Singularity Institute in April 2011, I felt uncomfortable suggesting that people donate to SingInst, because I could see it from the inside and it wasn't pretty.

- ↑ Holden Karnofsky. "Re: [givewell] Singularity Institute for Artificial Intelligence". Yahoo! Groups. Retrieved July 4, 2017.

- ↑ "singularity.org". Retrieved July 4, 2017.

- ↑ "Wayback Machine". Retrieved July 4, 2017.

- ↑ "Singularity Institute Volunteering". Retrieved July 14, 2017.

- ↑ "Singularity Summit | Program". Retrieved June 30, 2017.

- ↑ "SingularitySummits". YouTube. Retrieved July 4, 2017.

Joined Oct 17, 2011

- ↑ Luke Muehlhauser (January 16, 2012). "Machine Intelligence Research Institute Progress Report, December 2011". Machine Intelligence Research Institute. Retrieved July 14, 2017.

- ↑ lukeprog (December 12, 2011). "New 'landing page' website: Friendly-AI.com". LessWrong. Retrieved July 2, 2017.

- ↑ Frank, Sam (January 1, 2015). "Come With Us If You Want to Live. Among the apocalyptic libertarians of Silicon Valley". Harper's Magazine. Retrieved July 15, 2017.

Vassar had left to found MetaMed, a personalized-medicine company, with Jaan Tallinn of Skype and Kazaa, $500,000 from Peter Thiel, and a staff that included young rationalists who had cut their teeth arguing on Yudkowsky’s website. The idea behind MetaMed was to apply rationality to medicine — "rationality" here defined as the ability to properly research, weight, and synthesize the flawed medical information that exists in the world. Prices ranged from $25,000 for a literature review to a few hundred thousand for a personalized study. "We can save lots and lots and lots of lives," Vassar said (if mostly moneyed ones at first). "But it’s the signal — it's the 'Hey! Reason works!' — that matters. It's not really about medicine." Our whole society was sick — root, branch, and memeplex — and rationality was the only cure.

- ↑ "Video Q&A with Singularity Institute Executive Director". LessWrong. December 10, 2011. Retrieved May 31, 2021.

- ↑ "Q&A #2 with Luke Muehlhauser, Machine Intelligence Research Institute Executive Director". Machine Intelligence Research Institute. January 12, 2012. Retrieved May 31, 2021.

- ↑ "Wayback Machine". Retrieved July 4, 2017.

- ↑ Louie Helm (May 8, 2012). "Machine Intelligence Research Institute Progress Report, April 2012". Machine Intelligence Research Institute. Retrieved June 30, 2017.

- ↑ Holden Karnofsky. "Thoughts on the Singularity Institute (SI) - Less Wrong". LessWrong. Retrieved June 30, 2017.

- ↑ "Wayback Machine". Retrieved July 4, 2017.

- ↑ "I am Luke Muehlhauser, CEO of the Singularity Institute for Artificial Intelligence. Ask me anything about the Singularity, AI progress, technological forecasting, and researching Friendly AI! • r/Futurology". reddit. Retrieved June 30, 2017.

- ↑ "November 2012 Newsletter". Machine Intelligence Research Institute. November 7, 2012. Retrieved July 14, 2017.

Over the past couple of months we thought hard about how to improve our volunteer program, with the goal of finding a system that makes it easier to engage volunteers, create a sense of community, and quantify volunteer contributions. After evaluating several different volunteer management platforms, we decided to partner with Youtopia — a young company with a lot of promise — and make heavy use of Google Docs.

- ↑ David J. Hill (August 29, 2012). "Singularity Summit 2012 Is Coming To San Francisco October 13-14". Singularity Hub. Retrieved July 6, 2017.

- ↑ "Singularity Summit 2012: the lion doesn't sleep tonight". Gene Expression. Discover. October 15, 2012. Retrieved July 6, 2017.

- ↑ "Research Workshops - Machine Intelligence Research Institute". Machine Intelligence Research Institute. Retrieved July 1, 2017.

- ↑ "Singularity University Acquires the Singularity Summit". Singularity University. December 9, 2012. Retrieved June 30, 2017.

- ↑ Fox, Joshua (February 14, 2013). "The Singularity Wars". LessWrong. Retrieved July 15, 2017.

- ↑ Vance, Alyssa (May 27, 2017). "The Singularity Summit was an annual event from 2006 through 2012". Retrieved July 15, 2017.

- ↑ Vance, Alyssa; Sotala, Kaj; Luczkow, Vincent (June 6, 2017). "EA Global Boston". Retrieved July 15, 2017.

- ↑ "Form 990 2013" (PDF). Retrieved July 8, 2017.

- ↑ "Conversations Archives". Machine Intelligence Research Institute. Retrieved July 15, 2017.

- ↑ Luke Muehlhauser (March 22, 2015). "2014 in review". Machine Intelligence Research Institute. Retrieved July 15, 2017.

- ↑ "March Newsletter". Machine Intelligence Research Institute. March 7, 2013. Retrieved July 1, 2017.

Due to Singularity University's acquisition of the Singularity Summit and some major changes to MIRI's public communications strategy, Michael Anissimov left MIRI in January 2013. Michael continues to support our mission and continues to volunteer for us.

- ↑ "We are now the 'Machine Intelligence Research Institute' (MIRI)". Machine Intelligence Research Institute. January 30, 2013. Retrieved June 30, 2017.

- ↑ "Facing the Intelligence Explosion, Luke Muehlhauser". Amazon.com. Retrieved July 1, 2017.

Publisher: Machine Intelligence Research Institute (February 1, 2013)

- ↑ "Machine Intelligence Research Institute - Coming soon...". Retrieved July 4, 2017.

- ↑ "Machine Intelligence Research Institute". Retrieved July 4, 2017.

- ↑ Muehlhauser, Luke (February 28, 2013). "Welcome to Intelligence.org". Machine Intelligence Research Institute. Retrieved May 5, 2020.

- ↑ "Wayback Machine". Retrieved July 4, 2017.

- ↑ Luke Muehlhauser (April 25, 2013). "'Singularity Hypotheses' Published". Machine Intelligence Research Institute. Retrieved July 14, 2017.

- ↑ "Singularity Hypotheses: A Scientific and Philosophical Assessment (The Frontiers Collection): 9783642325595: Medicine & Health Science Books". Amazon.com. Retrieved July 14, 2017.

Publisher: Springer; 2012 edition (April 3, 2013)