Difference between revisions of "Timeline of Center for Human-Compatible AI"

From Timelines

(→Full timeline) |

|||

| Line 51: | Line 51: | ||

| 2018 || {{Dts|April 9}} || Publication || The ''Alignment Newsletter'' is publicly announced. The weekly newsletter summarizes content relevant to AI alignment from the previous week. Before the ''Alignment Newsletter'' was made public, a similar series of emails was produced internally for CHAI.<ref>{{cite web |url=https://www.lesswrong.com/posts/RvysgkLAHvsjTZECW/announcing-the-alignment-newsletter |title=Announcing the Alignment Newsletter |date=April 9, 2018 |first=Rohin |last=Shah |accessdate=May 10, 2018 |publisher=[[wikipedia:LessWrong|LessWrong]]}}</ref><ref>{{cite web |url=http://rohinshah.com/alignment-newsletter/ |title=Alignment Newsletter |publisher=Rohin Shah |accessdate=May 10, 2018 |archiveurl=https://archive.is/Ouvf1 |archivedate=May 10, 2018 |dead-url=no}}</ref> (It's not clear from the announcement whether the ''Alignment Newsletter'' is being produced officially by CHAI, or whether the initial emails were produced by CHAI and the later public newsletters are being produced independently.) | | 2018 || {{Dts|April 9}} || Publication || The ''Alignment Newsletter'' is publicly announced. The weekly newsletter summarizes content relevant to AI alignment from the previous week. 
Before the ''Alignment Newsletter'' was made public, a similar series of emails was produced internally for CHAI.<ref>{{cite web |url=https://www.lesswrong.com/posts/RvysgkLAHvsjTZECW/announcing-the-alignment-newsletter |title=Announcing the Alignment Newsletter |date=April 9, 2018 |first=Rohin |last=Shah |accessdate=May 10, 2018 |publisher=[[wikipedia:LessWrong|LessWrong]]}}</ref><ref>{{cite web |url=http://rohinshah.com/alignment-newsletter/ |title=Alignment Newsletter |publisher=Rohin Shah |accessdate=May 10, 2018 |archiveurl=https://archive.is/Ouvf1 |archivedate=May 10, 2018 |dead-url=no}}</ref> (It's not clear from the announcement whether the ''Alignment Newsletter'' is being produced officially by CHAI, or whether the initial emails were produced by CHAI and the later public newsletters are being produced independently.) | ||

|- | |- | ||

| − | | 2018 || {{dts|April 28}}–29 || Workshop || CHAI hosts its second annual workshop, which brings together researchers, industry experts, and collaborators to discuss progress and challenges in AI safety and alignment. The event covers themes such as reward modeling, interpretability, and human-AI collaboration, promoting open dialogue on cooperative AI development and fostering connections to advance safety goals. <ref>{{cite web |url=http://humancompatible.ai/workshop-2018 |title=Center for Human-Compatible AI Workshop 2018 |accessdate=February 9, 2018 |archiveurl=https://archive.is/XcxCZ |archivedate=February 9, 2018 |dead-url=no}}</ref> | + | | 2018 || {{dts|April 28}}–29 || Workshop || CHAI hosts its second annual workshop, which brings together researchers, industry experts, and collaborators to discuss progress and challenges in AI safety and alignment. The event covers themes such as reward modeling, interpretability, and human-AI collaboration, promoting open dialogue on cooperative AI development and fostering connections to advance safety goals.<ref>{{cite web |url=http://humancompatible.ai/workshop-2018 |title=Center for Human-Compatible AI Workshop 2018 |accessdate=February 9, 2018 |archiveurl=https://archive.is/XcxCZ |archivedate=February 9, 2018 |dead-url=no}}</ref> |

|- | |- | ||

| − | | 2018 || {{dts|July 2}} || Publication || Thomas Krendl Gilbert, a CHAI researcher, publishes "A Broader View on Bias in Automated Decision-Making" at ICML 2018. This work critically analyzes how bias in AI systems can perpetuate unfairness, providing strategies for integrating fairness and ethical standards into AI decision-making processes. The publication stresses the importance of preemptive efforts to address biases in automated decision-making. <ref>{{cite web|url=https://arxiv.org |title=A Broader View on Bias in Automated Decision-Making |publisher=ICML 2018 |date=July 2, 2018 |accessdate= | + | | 2018 || {{dts|July 2}} || Publication || Thomas Krendl Gilbert, a CHAI researcher, publishes "A Broader View on Bias in Automated Decision-Making" at ICML 2018. This work critically analyzes how bias in AI systems can perpetuate unfairness, providing strategies for integrating fairness and ethical standards into AI decision-making processes. The publication stresses the importance of preemptive efforts to address biases in automated decision-making.<ref>{{cite web|url=https://arxiv.org |title=A Broader View on Bias in Automated Decision-Making |publisher=ICML 2018 |date=July 2, 2018 |accessdate=September 30, 2024}}</ref> |

|- | |- | ||

| − | | 2018 || {{dts|July 13}} || Workshop || At the 1st Workshop on Goal Specifications for Reinforcement Learning, CHAI researcher Daniel Filan presents "Exploring Hierarchy-Aware Inverse Reinforcement Learning." The work aims to advance understanding of hierarchical human goals in AI systems, enhancing AI agents' capabilities to align their behaviour with complex human intentions in varied and multi-level environments. <ref>{{cite web|url=https://goal-spec.rlworkshop.com/ |title=Exploring Hierarchy-Aware Inverse Reinforcement Learning |publisher=Goal Specifications Workshop |date=July 13, 2018 |accessdate= | + | | 2018 || {{dts|July 13}} || Workshop || At the 1st Workshop on Goal Specifications for Reinforcement Learning, CHAI researcher Daniel Filan presents "Exploring Hierarchy-Aware Inverse Reinforcement Learning." The work aims to advance understanding of hierarchical human goals in AI systems, enhancing AI agents' capabilities to align their behaviour with complex human intentions in varied and multi-level environments.<ref>{{cite web|url=https://goal-spec.rlworkshop.com/ |title=Exploring Hierarchy-Aware Inverse Reinforcement Learning |publisher=Goal Specifications Workshop |date=July 13, 2018 |accessdate=September 30, 2024}}</ref> |

|- | |- | ||

| − | | 2018 || {{dts|July 14}} || Workshop || CHAI researchers Adam Gleave and Rohin Shah present "Active Inverse Reward Design" at the same workshop. Their work explores how AI systems can actively query human users to resolve ambiguities in reward structures, refining AI behavior to better align with nuanced human preferences, thereby improving decision-making in complex tasks. <ref>{{cite web|url=https://goal-spec.rlworkshop.com/ |title=Active Inverse Reward Design |publisher=Goal Specifications Workshop |date=July 14, 2018 |accessdate= | + | | 2018 || {{dts|July 14}} || Workshop || CHAI researchers Adam Gleave and Rohin Shah present "Active Inverse Reward Design" at the same workshop. Their work explores how AI systems can actively query human users to resolve ambiguities in reward structures, refining AI behavior to better align with nuanced human preferences, thereby improving decision-making in complex tasks.<ref>{{cite web|url=https://goal-spec.rlworkshop.com/ |title=Active Inverse Reward Design |publisher=Goal Specifications Workshop |date=July 14, 2018 |accessdate=September 30, 2024}}</ref> |

|- | |- | ||

| − | | 2018 || {{dts|July 16}} || Publication || Stuart Russell and Anca Dragan of CHAI publish "An Efficient, Generalized Bellman Update For Cooperative Inverse Reinforcement Learning" at ICML 2018. The paper introduces an innovative method to enhance AI's capability to cooperate with human partners by efficiently updating beliefs and learning shared goals, which is vital for developing AI systems that safely and effectively interact with humans. <ref>{{cite web|url=https://arxiv.org |title=An Efficient, Generalized Bellman Update For Cooperative Inverse Reinforcement Learning |publisher=ICML 2018 |date=July 16, 2018 |accessdate= | + | | 2018 || {{dts|July 16}} || Publication || Stuart Russell and Anca Dragan of CHAI publish "An Efficient, Generalized Bellman Update For Cooperative Inverse Reinforcement Learning" at ICML 2018. The paper introduces an innovative method to enhance AI's capability to cooperate with human partners by efficiently updating beliefs and learning shared goals, which is vital for developing AI systems that safely and effectively interact with humans.<ref>{{cite web|url=https://arxiv.org |title=An Efficient, Generalized Bellman Update For Cooperative Inverse Reinforcement Learning |publisher=ICML 2018 |date=July 16, 2018 |accessdate=September 30, 2024}}</ref> |

|- | |- | ||

| − | | 2018 || {{dts|August 26}} || Workshop || CHAI students join the AI Alignment Workshop organized by MIRI. This collaborative event focuses on core challenges in AI safety, such as creating systems aligned with human values, exploring theoretical advances in AI behaviour, and generating ideas for ensuring safe AI development. Students engage with researchers from various backgrounds to discuss emerging issues and practical solutions for AI alignment. <ref>{{cite web|url=https://intelligence.org |title=MIRI AI Alignment Workshop |publisher=Machine Intelligence Research Institute |date=August 26, 2018 |accessdate= | + | | 2018 || {{dts|August 26}} || Workshop || CHAI students join the AI Alignment Workshop organized by MIRI. This collaborative event focuses on core challenges in AI safety, such as creating systems aligned with human values, exploring theoretical advances in AI behaviour, and generating ideas for ensuring safe AI development. Students engage with researchers from various backgrounds to discuss emerging issues and practical solutions for AI alignment.<ref>{{cite web|url=https://intelligence.org |title=MIRI AI Alignment Workshop |publisher=Machine Intelligence Research Institute |date=August 26, 2018 |accessdate=September 30, 2024}}</ref> |

|- | |- | ||

| − | | 2018 || {{dts|September 4}} || Conference || Jaime Fisac, a CHAI researcher, presents his work at three conferences, exploring themes in AI safety and control under uncertainty. Fisac’s research aims to enhance the understanding of robust and safe AI interactions in dynamic settings, focusing on developing methods that ensure AI systems act predictably and align with human expectations across diverse environments. <ref>{{cite web|url=https://www.chai.berkeley.edu |title=Jaime Fisac AI Safety Research |publisher=CHAI |date=September 4, 2018 |accessdate= | + | | 2018 || {{dts|September 4}} || Conference || Jaime Fisac, a CHAI researcher, presents his work at three conferences, exploring themes in AI safety and control under uncertainty. Fisac’s research aims to enhance the understanding of robust and safe AI interactions in dynamic settings, focusing on developing methods that ensure AI systems act predictably and align with human expectations across diverse environments.<ref>{{cite web|url=https://www.chai.berkeley.edu |title=Jaime Fisac AI Safety Research |publisher=CHAI |date=September 4, 2018 |accessdate=September 30, 2024}}</ref> |

|- | |- | ||

| − | | 2018 || {{dts|October 31}} || Recognition || Rosie Campbell, Assistant Director at CHAI, is honored as one of the Top Women in AI Ethics on Social Media by Mia Dand. This recognition acknowledges Campbell’s leadership in AI ethics, her influence on responsible AI development, and her contributions to fostering discussions around the ethical challenges of advancing AI technologies. <ref>{{cite web|url=https://lighthouse3.com |title=Top Women in AI Ethics |publisher=Lighthouse3 |date=October 31, 2018 |accessdate= | + | | 2018 || {{dts|October 31}} || Recognition || Rosie Campbell, Assistant Director at CHAI, is honored as one of the Top Women in AI Ethics on Social Media by Mia Dand. This recognition acknowledges Campbell’s leadership in AI ethics, her influence on responsible AI development, and her contributions to fostering discussions around the ethical challenges of advancing AI technologies.<ref>{{cite web|url=https://lighthouse3.com |title=Top Women in AI Ethics |publisher=Lighthouse3 |date=October 31, 2018 |accessdate=September 30, 2024}}</ref> |

|- | |- | ||

| − | | 2018 || {{dts|December}} || Conference || CHAI researchers participate in the NeurIPS 2018 Conference, presenting their work and contributing to discussions on AI research, safety, and policy development. The event offers CHAI members the opportunity to engage with peers in the AI field, share findings on AI alignment, and explore new avenues for collaboration to enhance AI safety research. <ref>{{cite web|url=https://neurips.cc |title=NeurIPS 2018 Conference |publisher=NeurIPS |date=December 2018 |accessdate= | + | | 2018 || {{dts|December}} || Conference || CHAI researchers participate in the NeurIPS 2018 Conference, presenting their work and contributing to discussions on AI research, safety, and policy development. The event offers CHAI members the opportunity to engage with peers in the AI field, share findings on AI alignment, and explore new avenues for collaboration to enhance AI safety research.<ref>{{cite web|url=https://neurips.cc |title=NeurIPS 2018 Conference |publisher=NeurIPS |date=December 2018 |accessdate=September 30, 2024}}</ref> |

|- | |- | ||

| − | | 2018 || {{dts|December}} || Podcast || Rohin Shah, a CHAI researcher, features on the AI Alignment Podcast by the Future of Life Institute. In this episode, Shah discusses Inverse Reinforcement Learning, outlining how AI systems can better learn and adapt to human preferences, ultimately contributing to the development of safe, aligned AI technologies that act according to human values. <ref>{{cite web|url=https://futureoflife.org |title=AI Alignment Podcast: Rohin Shah |publisher=Future of Life Institute |date=December 2018 |accessdate= | + | | 2018 || {{dts|December}} || Podcast || Rohin Shah, a CHAI researcher, features on the AI Alignment Podcast by the Future of Life Institute. In this episode, Shah discusses Inverse Reinforcement Learning, outlining how AI systems can better learn and adapt to human preferences, ultimately contributing to the development of safe, aligned AI technologies that act according to human values.<ref>{{cite web|url=https://futureoflife.org |title=AI Alignment Podcast: Rohin Shah |publisher=Future of Life Institute |date=December 2018 |accessdate=September 30, 2024}}</ref> |

|- | |- | ||

| 2018 || {{dts|December}} || Media Mention || Stuart Russell and Rosie Campbell from CHAI are quoted in a Vox article titled "The Case for Taking AI Seriously as a Threat to Humanity." They emphasize the potential for AI systems to be developed without adequate safety measures or ethical considerations, which could lead to unintended and potentially harmful consequences. The article highlights their advocacy for aligning AI with human values and ensuring that AI safety is prioritized to prevent misuse or detrimental effects on society.<ref>{{cite web|url=https://www.vox.com |title=The Case for Taking AI Seriously as a Threat to Humanity |publisher=Vox |date=December 2018 |accessdate=October 14, 2024}}</ref> | | 2018 || {{dts|December}} || Media Mention || Stuart Russell and Rosie Campbell from CHAI are quoted in a Vox article titled "The Case for Taking AI Seriously as a Threat to Humanity." They emphasize the potential for AI systems to be developed without adequate safety measures or ethical considerations, which could lead to unintended and potentially harmful consequences. The article highlights their advocacy for aligning AI with human values and ensuring that AI safety is prioritized to prevent misuse or detrimental effects on society.<ref>{{cite web|url=https://www.vox.com |title=The Case for Taking AI Seriously as a Threat to Humanity |publisher=Vox |date=December 2018 |accessdate=October 14, 2024}}</ref> | ||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|January 8}} || Talks || Rosie Campbell, Assistant Director at CHAI, delivers talks at AI Meetups in San Francisco and East Bay. She discusses challenges in AI safety, emphasizing the importance of neural networks and CHAI’s unique approach to AI alignment. Her talks aim to raise awareness about creating AI systems that are reliable and safe, sparking discussions on ethical AI development and fostering community engagement in AI safety topics.<ref>{{cite web|url=https://humancompatible.ai/news/2019/01/08/rosie_talks/ |title=CHAI News January 8, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|January 15}} || Award || Stuart Russell, a pioneer in AI safety research and Director of CHAI, receives the AAAI Feigenbaum Prize for advancing probabilistic knowledge representation and reasoning. This prestigious award highlights his contributions to the development of algorithms for intelligent agents, and his groundbreaking work in applying AI to real-world challenges, such as seismic monitoring for nuclear test detection and ethical considerations in AI development.<ref>{{cite web|url=https://humancompatible.ai/news/2019/01/15/stuart_feigenbaum/ |title=CHAI News January 15, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|January 17}} || Conference || CHAI faculty and staff contribute several papers to AAAI 2019, including: "Deception in Finitely Repeated Security Games," "Robust Multi-Agent Reinforcement Learning via Minimax Deep Deterministic Policy Gradient," "A Unified Framework for Planning in Adversarial and Cooperative Environments," "Partial Unawareness," "Abstracting Causal Models," "Blameworthiness in Multi-Agent Settings," and a presentation on AI's social implications at the AIES Conference. <ref>{{cite web|url=https://humancompatible.ai/news/2019/01/17/CHAI_AAAI2019/ |title=CHAI News January 17, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|January 21}} || Talk || Stuart Russell speaks to the Netherlands Ministry of Foreign Affairs, outlining the risks of autonomous weapons. His presentation emphasizes how AI technology, if misused, could lead to the development of weapons of mass destruction. He calls for international regulations and AI safety mechanisms to manage autonomous weaponry, framing the global need to address ethical concerns and prevent the escalation of conflict driven by AI. <ref>{{cite web|url=https://humancompatible.ai/news/2019/01/21/stuart_talk/ |title=CHAI News January 21, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|March 20}} || Conference || Anca Dragan and Smitha Milli have a paper accepted at UAI 2019, focusing on uncertainties within AI systems. Their research enhances the understanding of AI's interactions with uncertain environments, proposing frameworks for more reliable AI decisions when dealing with ambiguous or incomplete information, which is crucial for safe deployment in real-world applications.<ref>{{cite web|url=https://humancompatible.ai/news/2019/03/20/anca_smith_milli/ |title=CHAI News March 20, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|May 20}} || Publication || Stuart Russell’s book, "Human Compatible: Artificial Intelligence and the Problem of Control," is announced for pre-order, offering a deep dive into the challenges and ethical considerations of controlling AI systems. The book delves into the need for aligning AI behavior with human values and preventing unintended consequences as AI systems grow more advanced and autonomous.<ref>{{cite web|url=https://humancompatible.ai/news/2019/05/20/human_compatible_book/ |title=CHAI News May 20, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|May 24}} || Conference || CHAI researchers submit the paper "Adversarial Policies: Attacking Deep Reinforcement Learning" to NeurIPS 2019. The research investigates how deep reinforcement learning models can be vulnerable to adversarial attacks, proposing strategies for increasing their resilience and robustness to ensure that AI systems behave safely under various conditions.<ref>{{cite web|url=https://humancompatible.ai/news/2019/05/24/adversarial_policies/ |title=CHAI News May 24, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|May 30}} || Research Collaboration || Vincent Corruble, from Sorbonne University, collaborates with CHAI as a visiting researcher. During his three-month tenure, Corruble works on the risks associated with comprehensive AI systems, contributing to ongoing CHAI projects aimed at developing ethical frameworks and practical safety measures for AI applications.<ref>{{cite web|url=https://humancompatible.ai/news/2019/05/30/vincent_corruble/ |title=CHAI News May 30, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|July 5}} || Research Summary || Rohin Shah publishes a summary of CHAI's research paper "On the Feasibility of Learning, Rather than Assuming, Human Biases for Reward Inference." The summary highlights the potential for AI systems to learn from human biases, offering a pathway to improve how AI models reward structures based on observed human behaviours and decisions.<ref>{{cite web|url=https://humancompatible.ai/news/2019/07/05/rohin_blogposts/ |title=CHAI News July 5, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|August 15}} || Media Publication || Mark Nitzberg, Executive Director of CHAI, writes a WIRED article advocating for an “FDA for algorithms.” The article argues for the establishment of a regulatory body to oversee the development of AI technologies, ensuring their safety, transparency, and ethical use to mitigate potential harms caused by unchecked AI deployment.<ref>{{cite web|url=https://www.wired.com/story/fda-for-algorithms/ |title=WIRED Article by Mark Nitzberg |date=August 15, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|August 16}} || Talk || Michael Wellman, a principal investigator at CHAI, presents his work at the ICML Workshop on AI and Finance. His talk, "Trend-Following Trading Strategies and Financial Market Stability," examines how the use of AI in algorithmic trading impacts market stability and raises questions about the ethical implications of autonomous financial decision-making.<ref>{{cite web|url=https://humancompatible.ai/news/2019/08/16/wellman_finance/ |title=CHAI News August 16, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|August 28}} || Paper Submission || Thomas Krendl Gilbert submits "The Passions and the Reward Functions: Rival Views of AI Safety?" to FAT*2020. The paper delves into philosophical perspectives on AI safety, debating different approaches to constructing reward functions that align with human emotions and societal values, and offering insights into balancing technical and ethical considerations in AI design.<ref>{{cite web|url=https://humancompatible.ai/news/2019/08/28/gilbert_paper/ |title=CHAI News August 28, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|September 28}} || Newsletter || Rohin Shah expands and professionalizes the AI Alignment Newsletter, transitioning it from a volunteer-based effort to a team-driven project. This enables regular and detailed updates on AI safety and alignment research, serving as a comprehensive resource for the AI community on developments and discussions in AI safety.<ref>{{cite web|url=https://humancompatible.ai/news/2019/09/28/newsletter_update/ |title=CHAI News September 28, 2019 |accessdate=September 30, 2024}}</ref> | ||

| + | |||

| + | |- | ||

| + | |||

| + | | 2019 || {{dts|October 8}} || Book Release || Stuart Russell publishes "Human Compatible: Artificial Intelligence and the Problem of Control," exploring the potential risks of advanced AI systems and proposing a vision for their ethical design and control. The book examines the broader implications of...controlling advanced AI. The book discusses the dangers of unaligned AI, the need for ethical design, and strategies for ensuring AI systems serve human interests, providing a roadmap for the safe development of AI technologies.<ref>{{cite web|url=https://humancompatible.ai/news/2019/10/08/human_compatible_release/ |title=CHAI News October 8, 2019 |accessdate=September 30, 2024}}</ref> | ||

|} | |} | ||

| − | |||

== Visual data == | == Visual data == | ||

Revision as of 06:50, 30 September 2024

This is a timeline of Center for Human-Compatible AI (CHAI).

Big picture

| Time period | Development summary | More details |

|---|---|---|

| 2016–present | CHAI is established | CHAI is established and begins producing research. |

Full timeline

| Year | Month and date | Event type | Details |

|---|---|---|---|

| 2016 | August | Organization | The UC Berkeley Center for Human-Compatible Artificial Intelligence launches. The focus of the center is "to ensure that AI systems are beneficial to humans".[1] |

| 2016 | August | Financial | The Open Philanthropy Project awards a grant of $5.6 million to the Center for Human-Compatible AI.[2] |

| 2016 | November 24 | Publication | The initial version of "The Off-Switch Game", a paper by Dylan Hadfield-Menell, Anca Dragan, Pieter Abbeel, and Stuart Russell, is uploaded to the arXiv.[3][4] |

| 2016 | December | Publication | CHAI's "Annotated bibliography of recommended materials" is published around this time.[5] |

| 2017 | May 5–6 | Workshop | CHAI's first annual workshop takes place. The annual workshop is "designed to advance discussion and research" to "reorient the field of artificial intelligence toward developing systems that are provably beneficial to humans".[6] |

| 2017 | May 28 | Publication | "Should Robots be Obedient?" by Smitha Milli, Dylan Hadfield-Menell, Anca Dragan, and Stuart Russell is uploaded to the arXiv.[7][4] |

| 2017 | October | Staff | Rosie Campbell joins CHAI as Assistant Director.[8] |

| 2018 | February 1 | Publication | Joseph Halpern publishes "Information Acquisition Under Resource Limitations in a Noisy Environment," contributing to discussions on optimizing decision-making processes when resources and data are limited. The paper offers insights into how intelligent agents can make informed decisions in complex environments.[9] |

| 2018 | February 7 | Media Mention | Anca Dragan, a CHAI researcher, is featured in a Forbes article discussing AI research with a focus on value alignment and the broader ethical implications of AI development. This inclusion highlights Dragan's contributions to the field and raises awareness of the importance of aligning AI behavior with human values.[10] |

| 2018 | February 26 | Conference | Anca Dragan presents "Expressing Robot Incapability" at the ACM/IEEE International Conference on Human-Robot Interaction. The presentation explores how robots can effectively communicate their limitations to humans, an essential step in developing more trustworthy and transparent human-robot collaborations.[11] |

| 2018 | March 8 | Publication | Anca Dragan and her team publish "Learning from Physical Human Corrections, One Feature at a Time," a paper focusing on the dynamics of human-robot interaction. This research emphasizes how robots can learn more effectively from human corrections, improving overall performance in collaborative settings.[12] |

| 2018 | March | Staff | Andrew Critch, who was previously on leave from the Machine Intelligence Research Institute to help launch CHAI and the Berkeley Existential Risk Initiative, accepts a position as CHAI's first research scientist.[13] |

| 2018 | April 4–12 | Organization | CHAI gets a new logo (green background with white letters "CHAI") sometime during this period.[14][15] |

| 2018 | April 9 | Publication | The Alignment Newsletter is publicly announced. The weekly newsletter summarizes content relevant to AI alignment from the previous week. Before the Alignment Newsletter was made public, a similar series of emails was produced internally for CHAI.[16][17] (It's not clear from the announcement whether the Alignment Newsletter is being produced officially by CHAI, or whether the initial emails were produced by CHAI and the later public newsletters are being produced independently.) |

| 2018 | April 28–29 | Workshop | CHAI hosts its second annual workshop, which brings together researchers, industry experts, and collaborators to discuss progress and challenges in AI safety and alignment. The event covers themes such as reward modeling, interpretability, and human-AI collaboration, promoting open dialogue on cooperative AI development and fostering connections to advance safety goals.[18] |

| 2018 | July 2 | Publication | Thomas Krendl Gilbert, a CHAI researcher, publishes "A Broader View on Bias in Automated Decision-Making" at ICML 2018. This work critically analyzes how bias in AI systems can perpetuate unfairness, providing strategies for integrating fairness and ethical standards into AI decision-making processes. The publication stresses the importance of preemptive efforts to address biases in automated decision-making.[19] |

| 2018 | July 13 | Workshop | At the 1st Workshop on Goal Specifications for Reinforcement Learning, CHAI researcher Daniel Filan presents "Exploring Hierarchy-Aware Inverse Reinforcement Learning." The work aims to advance understanding of hierarchical human goals in AI systems, enhancing AI agents' capabilities to align their behaviour with complex human intentions in varied and multi-level environments.[20] |

| 2018 | July 14 | Workshop | CHAI researchers Adam Gleave and Rohin Shah present "Active Inverse Reward Design" at the same workshop. Their work explores how AI systems can actively query human users to resolve ambiguities in reward structures, refining AI behavior to better align with nuanced human preferences, thereby improving decision-making in complex tasks.[21] |

| 2018 | July 16 | Publication | Stuart Russell and Anca Dragan of CHAI publish "An Efficient, Generalized Bellman Update For Cooperative Inverse Reinforcement Learning" at ICML 2018. The paper introduces an innovative method to enhance AI's capability to cooperate with human partners by efficiently updating beliefs and learning shared goals, which is vital for developing AI systems that safely and effectively interact with humans.[22] |

| 2018 | August 26 | Workshop | CHAI students join the AI Alignment Workshop organized by MIRI. This collaborative event focuses on core challenges in AI safety, such as creating systems aligned with human values, exploring theoretical advances in AI behaviour, and generating ideas for ensuring safe AI development. Students engage with researchers from various backgrounds to discuss emerging issues and practical solutions for AI alignment.[23] |

| 2018 | September 4 | Conference | Jaime Fisac, a CHAI researcher, presents his work at three conferences, exploring themes in AI safety and control under uncertainty. Fisac’s research aims to enhance the understanding of robust and safe AI interactions in dynamic settings, focusing on developing methods that ensure AI systems act predictably and align with human expectations across diverse environments.[24] |

| 2018 | October 31 | Recognition | Rosie Campbell, Assistant Director at CHAI, is honored as one of the Top Women in AI Ethics on Social Media by Mia Dand. This recognition acknowledges Campbell’s leadership in AI ethics, her influence on responsible AI development, and her contributions to fostering discussions around the ethical challenges of advancing AI technologies.[25] |

| 2018 | December | Conference | CHAI researchers participate in the NeurIPS 2018 Conference, presenting their work and contributing to discussions on AI research, safety, and policy development. The event offers CHAI members the opportunity to engage with peers in the AI field, share findings on AI alignment, and explore new avenues for collaboration to enhance AI safety research.[26] |

| 2018 | December | Podcast | Rohin Shah, a CHAI researcher, is featured on the AI Alignment Podcast by the Future of Life Institute. In this episode, Shah discusses Inverse Reinforcement Learning, outlining how AI systems can better learn and adapt to human preferences, ultimately contributing to the development of safe, aligned AI technologies that act according to human values.[27] |

| 2018 | December | Media Mention | Stuart Russell and Rosie Campbell from CHAI are quoted in a Vox article titled "The Case for Taking AI Seriously as a Threat to Humanity." They emphasize the potential for AI systems to be developed without adequate safety measures or ethical considerations, which could lead to unintended and potentially harmful consequences. The article highlights their advocacy for aligning AI with human values and ensuring that AI safety is prioritized to prevent misuse or detrimental effects on society.[28] |

| 2019 | January 8 | Talks | Rosie Campbell, Assistant Director at CHAI, delivers talks at AI Meetups in San Francisco and East Bay. She discusses challenges in AI safety, emphasizing the importance of neural networks and CHAI’s unique approach to AI alignment. Her talks aim to raise awareness about creating AI systems that are reliable and safe, sparking discussions on ethical AI development and fostering community engagement in AI safety topics.[29] |

| 2019 | January 15 | Award | Stuart Russell, a pioneer in AI safety research and Director of CHAI, receives the AAAI Feigenbaum Prize for advancing probabilistic knowledge representation and reasoning. This prestigious award highlights his contributions to the development of algorithms for intelligent agents, and his groundbreaking work in applying AI to real-world challenges, such as seismic monitoring for nuclear test detection and ethical considerations in AI development.[30] |

| 2019 | January 17 | Conference | CHAI faculty and staff contribute several papers to AAAI 2019, including: "Deception in Finitely Repeated Security Games," "Robust Multi-Agent Reinforcement Learning via Minimax Deep Deterministic Policy Gradient," "A Unified Framework for Planning in Adversarial and Cooperative Environments," "Partial Unawareness," "Abstracting Causal Models," "Blameworthiness in Multi-Agent Settings," and a presentation on AI's social implications at the AIES Conference.[31] |

| 2019 | January 21 | Talk | Stuart Russell speaks to the Netherlands Ministry of Foreign Affairs, outlining the risks of autonomous weapons. His presentation emphasizes how AI technology, if misused, could lead to the development of weapons of mass destruction. He calls for international regulations and AI safety mechanisms to manage autonomous weaponry, framing the global need to address ethical concerns and prevent the escalation of conflict driven by AI.[32] |

| 2019 | March 20 | Conference | Anca Dragan and Smitha Milli have a paper accepted at UAI 2019, focusing on uncertainties within AI systems. Their research enhances the understanding of AI's interactions with uncertain environments, proposing frameworks for more reliable AI decisions when dealing with ambiguous or incomplete information, which is crucial for safe deployment in real-world applications.[33] |

| 2019 | May 20 | Publication | Stuart Russell’s book, "Human Compatible: Artificial Intelligence and the Problem of Control," is announced for pre-order, offering a deep dive into the challenges and ethical considerations of controlling AI systems. The book delves into the need for aligning AI behavior with human values and preventing unintended consequences as AI systems grow more advanced and autonomous.[34] |

| 2019 | May 24 | Conference | CHAI researchers submit the paper "Adversarial Policies: Attacking Deep Reinforcement Learning" to NeurIPS 2019. The research investigates how deep reinforcement learning models can be vulnerable to adversarial attacks, proposing strategies for increasing their resilience and robustness to ensure that AI systems behave safely under various conditions.[35] |

| 2019 | May 30 | Research Collaboration | Vincent Corruble, from Sorbonne University, collaborates with CHAI as a visiting researcher. During his three-month tenure, Corruble works on the risks associated with comprehensive AI systems, contributing to ongoing CHAI projects aimed at developing ethical frameworks and practical safety measures for AI applications.[36] |

| 2019 | July 5 | Research Summary | Rohin Shah publishes a summary of CHAI's research paper "On the Feasibility of Learning, Rather than Assuming, Human Biases for Reward Inference." The summary highlights the potential for AI systems to learn human biases rather than assume them, offering a pathway to improve how AI systems infer rewards from observed human behavior and decisions.[37] |

| 2019 | August 15 | Media Publication | Mark Nitzberg, Executive Director of CHAI, writes a WIRED article advocating for an “FDA for algorithms.” The article argues for the establishment of a regulatory body to oversee the development of AI technologies, ensuring their safety, transparency, and ethical use to mitigate potential harms caused by unchecked AI deployment.[38] |

| 2019 | August 16 | Talk | Michael Wellman, a principal investigator at CHAI, presents his work at the ICML Workshop on AI and Finance. His talk, "Trend-Following Trading Strategies and Financial Market Stability," examines how the use of AI in algorithmic trading impacts market stability and raises questions about the ethical implications of autonomous financial decision-making.[39] |

| 2019 | August 28 | Paper Submission | Thomas Krendl Gilbert submits "The Passions and the Reward Functions: Rival Views of AI Safety?" to FAT* 2020. The paper delves into philosophical perspectives on AI safety, debating different approaches to constructing reward functions that align with human emotions and societal values, and offering insights into balancing technical and ethical considerations in AI design.[40] |

| 2019 | September 28 | Newsletter | Rohin Shah expands and professionalizes the AI Alignment Newsletter, transitioning it from a volunteer-based effort to a team-driven project. This enables regular and detailed updates on AI safety and alignment research, serving as a comprehensive resource for the AI community on developments and discussions in AI safety.[41] |

| 2019 | October 8 | Book Release | Stuart Russell publishes "Human Compatible: Artificial Intelligence and the Problem of Control," exploring the potential risks of advanced AI systems and proposing a vision for their ethical design and control. The book discusses the dangers of unaligned AI, the need for ethical design, and strategies for ensuring AI systems serve human interests, providing a roadmap for the safe development of AI technologies.[42] |

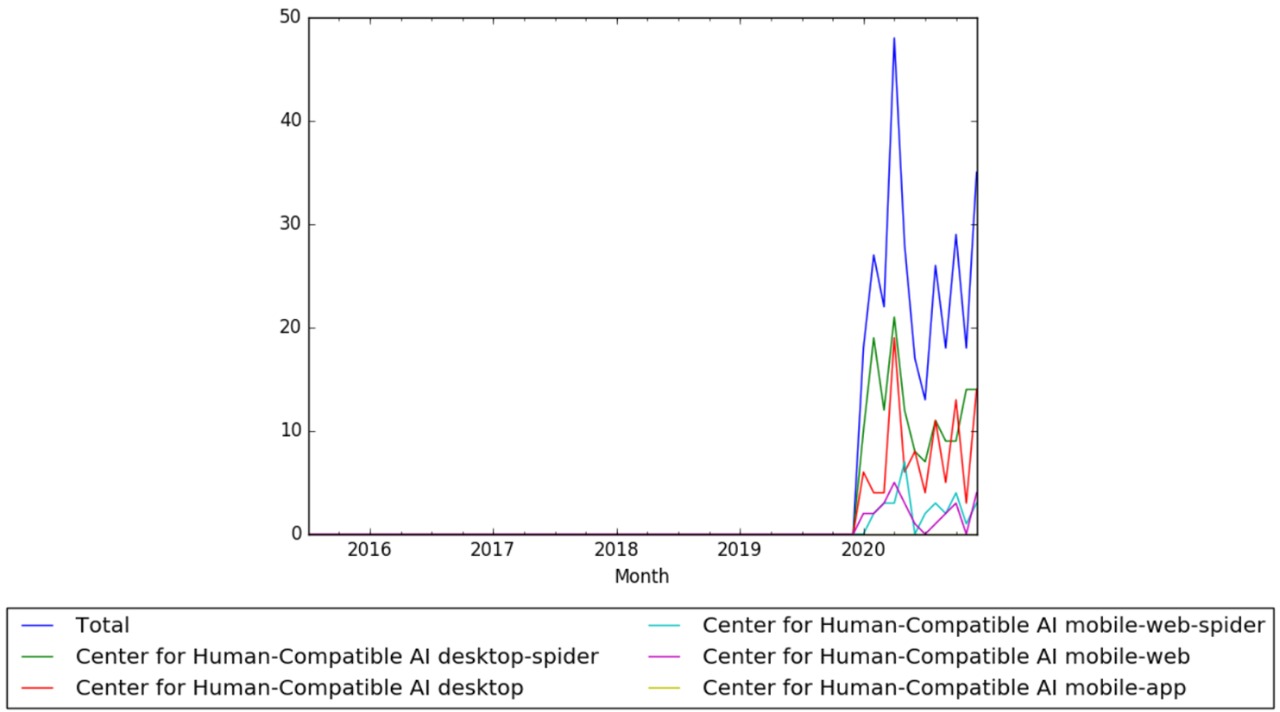

Visual data

Wikipedia Views

Meta information on the timeline

How the timeline was built

The initial version of the timeline was written by Issa Rice.

Funding information for this timeline is available.

What the timeline is still missing

Timeline update strategy

See also

- Timeline of Machine Intelligence Research Institute

- Timeline of Future of Humanity Institute

- Timeline of OpenAI

- Timeline of Berkeley Existential Risk Initiative

External links

References

- ↑ "UC Berkeley launches Center for Human-Compatible Artificial Intelligence". Berkeley News. August 29, 2016. Retrieved July 26, 2017.

- ↑ "Open Philanthropy Project donations made (filtered to cause areas matching AI risk)". Retrieved July 27, 2017.

- ↑ "[1611.08219] The Off-Switch Game". Retrieved February 9, 2018.

- ↑ "2018 AI Safety Literature Review and Charity Comparison - Effective Altruism Forum". Retrieved February 9, 2018.

- ↑ "Center for Human-Compatible AI". Retrieved February 9, 2018.

- ↑ "Center for Human-Compatible AI". Archived from the original on February 9, 2018. Retrieved February 9, 2018.

- ↑ "[1705.09990] Should Robots be Obedient?". Retrieved February 9, 2018.

- ↑ "Rosie Campbell - BBC R&D". Archived from the original on May 11, 2018. Retrieved May 11, 2018.

Rosie left in October 2017 to take on the role of Assistant Director of the Center for Human-compatible AI at UC Berkeley, a research group which aims to ensure that artificially intelligent systems are provably beneficial to humans.

- ↑ "Information Acquisition Under Resource Limitations in a Noisy Environment". February 1, 2018. Retrieved October 14, 2024.

- ↑ "Anca Dragan on AI Value Alignment". February 7, 2018. Retrieved October 14, 2024.

- ↑ "Expressing Robot Incapability". ACM/IEEE International Conference. February 26, 2018. Retrieved October 14, 2024.

- ↑ "Learning from Physical Human Corrections, One Feature at a Time". March 8, 2018. Retrieved October 14, 2024.

- ↑ Bensinger, Rob (March 25, 2018). "March 2018 Newsletter - Machine Intelligence Research Institute". Machine Intelligence Research Institute. Retrieved May 10, 2018.

Andrew Critch, previously on leave from MIRI to help launch the Center for Human-Compatible AI and the Berkeley Existential Risk Initiative, has accepted a position as CHAI’s first research scientist. Critch will continue to work with and advise the MIRI team from his new academic home at UC Berkeley. Our congratulations to Critch!

- ↑ "Center for Human-Compatible AI". Archived from the original on April 4, 2018. Retrieved May 10, 2018.

- ↑ "Center for Human-Compatible AI". Archived from the original on April 12, 2018. Retrieved May 10, 2018.

- ↑ Shah, Rohin (April 9, 2018). "Announcing the Alignment Newsletter". LessWrong. Retrieved May 10, 2018.

- ↑ "Alignment Newsletter". Rohin Shah. Archived from the original on May 10, 2018. Retrieved May 10, 2018.

- ↑ "Center for Human-Compatible AI Workshop 2018". Archived from the original on February 9, 2018. Retrieved February 9, 2018.

- ↑ "A Broader View on Bias in Automated Decision-Making". ICML 2018. July 2, 2018. Retrieved September 30, 2024.

- ↑ "Exploring Hierarchy-Aware Inverse Reinforcement Learning". Goal Specifications Workshop. July 13, 2018. Retrieved September 30, 2024.

- ↑ "Active Inverse Reward Design". Goal Specifications Workshop. July 14, 2018. Retrieved September 30, 2024.

- ↑ "An Efficient, Generalized Bellman Update For Cooperative Inverse Reinforcement Learning". ICML 2018. July 16, 2018. Retrieved September 30, 2024.

- ↑ "MIRI AI Alignment Workshop". Machine Intelligence Research Institute. August 26, 2018. Retrieved September 30, 2024.

- ↑ "Jaime Fisac AI Safety Research". CHAI. September 4, 2018. Retrieved September 30, 2024.

- ↑ "Top Women in AI Ethics". Lighthouse3. October 31, 2018. Retrieved September 30, 2024.

- ↑ "NeurIPS 2018 Conference". NeurIPS. December 2018. Retrieved September 30, 2024.

- ↑ "AI Alignment Podcast: Rohin Shah". Future of Life Institute. December 2018. Retrieved September 30, 2024.

- ↑ "The Case for Taking AI Seriously as a Threat to Humanity". Vox. December 2018. Retrieved October 14, 2024.

- ↑ "CHAI News January 8, 2019". Retrieved September 30, 2024.

- ↑ "CHAI News January 15, 2019". Retrieved September 30, 2024.

- ↑ "CHAI News January 17, 2019". Retrieved September 30, 2024.

- ↑ "CHAI News January 21, 2019". Retrieved September 30, 2024.

- ↑ "CHAI News March 20, 2019". Retrieved September 30, 2024.

- ↑ "CHAI News May 20, 2019". Retrieved September 30, 2024.

- ↑ "CHAI News May 24, 2019". Retrieved September 30, 2024.

- ↑ "CHAI News May 30, 2019". Retrieved September 30, 2024.

- ↑ "CHAI News July 5, 2019". Retrieved September 30, 2024.

- ↑ "WIRED Article by Mark Nitzberg". August 15, 2019. Retrieved September 30, 2024.

- ↑ "CHAI News August 16, 2019". Retrieved September 30, 2024.

- ↑ "CHAI News August 28, 2019". Retrieved September 30, 2024.

- ↑ "CHAI News September 28, 2019". Retrieved September 30, 2024.

- ↑ "CHAI News October 8, 2019". Retrieved September 30, 2024.