Timeline of experiment design

This is a timeline of experiment design, attempting to describe significant and illustrative events in the history of the field.

Sample questions

The following are some interesting questions that can be answered by reading this timeline:

Big picture

| Time period | Development summary | More details |

|---|---|---|

| Ancient Times – 1700s | Early foundations | Experimental thinking has ancient roots, notably in medicine and agriculture. The earliest known clinical trial dates to about 500 BCE, when the Persian king Cyrus tests diets on his soldiers.[1] Greek physicians such as Hippocrates[2] and Galen emphasize observation and rudimentary comparative methods. However, formal experimentation remains rare. The Scientific Revolution of the 1600s shifts the focus from authority to empirical investigation. Francis Bacon promotes inductive reasoning and systematic observation, laying the philosophical groundwork for modern science.[3] Nonetheless, experiments still lack standardized design or statistical rigor. Scientists such as Galileo[4] and Boyle[5] use controlled observations, but their methods are more qualitative than quantitative. The period ends with the emergence of probability theory in the work of Pascal,[6] Fermat,[7] and Bernoulli,[8] which begins to offer tools that would later become essential for experimental statistics. |

| 1700s – Late 1800s | Classical period | The Age of Enlightenment brings advances in both scientific methodology and mathematical statistics.[9] Controlled experiments become more common in chemistry, physics, and agriculture. Notably, James Lind conducts one of the first controlled clinical trials in 1747, testing treatments for scurvy.[10] In parallel, Carl Linnaeus systematizes biological classification, influencing observational rigor.[11] Pierre-Simon Laplace and Carl Friedrich Gauss develop early statistical tools such as the normal distribution and least squares estimation, which become crucial for data analysis. Agricultural trials, especially in Europe, begin to use basic comparison and replication. However, randomization, replication, and formal controls are not yet standardized. The period sets the stage for modern experimentation by linking empirical testing with early statistical theory, but experiments still lack formal design structures and reproducibility standards. The idea of a placebo effect (a therapeutic outcome produced by an inert treatment) is already discussed in 18th-century psychology.[12] |

| Early 1900s – 1950s | Formalization and statistics | This period marks the birth of modern experimental design. Ronald A. Fisher revolutionizes the field in the 1920s and 1930s through his work at Rothamsted Experimental Station in England.[13] Fisher introduces the core principles of randomization, replication, and blocking. He develops the analysis of variance (ANOVA) and emphasizes the role of statistical inference in drawing conclusions. His 1935 book The Design of Experiments would remain foundational. Experimental design becomes central to agriculture,[14][15] biology, and later psychology and the social sciences. Jerzy Neyman and Egon Pearson introduce a hypothesis testing framework, further refining experimental methodology.[16] The era establishes statistics as an essential scientific tool and formalizes the structure of experiments, enabling reproducibility and greater objectivity in scientific inference. The need to blind researchers becomes widely recognized by the middle of the century.[17] |

| 1960s – present | Modern and computational era | After the 1950s, experimental design expands into diverse fields, including psychology, economics, medicine, and engineering. With the rise of computers, simulation, factorial designs, and response surface methodology become widespread. Clinical trials adopt strict protocols, often randomized and double-blind, under ethical oversight. In the social sciences, randomized controlled trials (RCTs) gain ground, supported by behavioral economics and development studies. The Bayesian approach gains traction, offering an alternative inference framework. From the 1990s, machine learning and A/B testing transform experimental design in the technology industry, allowing real-time, large-scale experimentation. Today, experimental design is deeply intertwined with data science, AI, and evidence-based policy, and it continues to evolve to address high-dimensional data, ethical concerns, and reproducibility challenges across disciplines. |
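
Fisher's analysis of variance, listed among his contributions above, splits the variation in a completely randomized experiment into a between-treatment part and a within-treatment (error) part and compares them with an F ratio. The following is a minimal Python sketch of that one-way computation, assuming independent groups of observations; the function name `one_way_anova_f` and the sample data are hypothetical illustrations, not taken from Fisher's own work:

```python
from typing import Sequence


def one_way_anova_f(groups: Sequence[Sequence[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way layout of independent groups.

    F = [SS_between / (k - 1)] / [SS_within / (N - k)], where k is the number
    of groups (treatments) and N is the total number of observations.
    """
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    if k < 2 or any(len(g) == 0 for g in groups) or n_total <= k:
        raise ValueError("need >= 2 non-empty groups and more observations than groups")

    grand_mean = sum(sum(g) for g in groups) / n_total
    group_means = [sum(g) / len(g) for g in groups]

    # Between-treatment sum of squares: how far each group mean sits from the grand mean.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, group_means))
    # Within-treatment (error) sum of squares: scatter of observations around their group mean.
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)

    df_between = k - 1
    df_within = n_total - k
    # Ratio of the two mean squares; raises ZeroDivisionError if there is no within-group scatter.
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


if __name__ == "__main__":
    # Two hypothetical treatments with three replicates each.
    print(one_way_anova_f([[1, 2, 3], [4, 5, 6]]))
```

Run on the example data it prints `(13.5, 1, 4)`. Converting F into a p-value needs the F distribution with those degrees of freedom, which this sketch deliberately leaves to a statistics library.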

Full timeline

| Year | Event type | Details | Geographical location |

|---|---|---|---|

| ca. 500 BCE | Clinical trial | A Persian physician conducts an early controlled experiment on diet among soldiers (pulse vs. meat). | Persia (Iran) |

| ca. 400 BCE | Philosophical basis | Hippocrates promotes systematic observation in medicine. | Greece |

| 1620 | Philosophical basis | Francis Bacon publishes Novum Organum, advocating empirical and inductive reasoning. | England |

| 1665 | Scientific discovery | Robert Boyle emphasizes controlled experimentation in chemistry. | England |

| 1700 | | Korean mathematician Choi Seok-jeong is the first to publish an example of Latin squares of order nine, in order to construct a magic square, predating Leonhard Euler by 67 years.[18] Latin squares are used in combinatorics and in experimental design. | |

| 1747 | | Scottish doctor James Lind conducts one of the first controlled clinical trials, investigating the efficacy of citrus fruit in cases of scurvy. He divides twelve scurvy patients, whose "cases were as similar as I could have them", into six pairs and gives each pair a different remedy. According to Lind's 1753 Treatise on the Scurvy in Three Parts Containing an Inquiry into the Nature, Causes, and Cure of the Disease, Together with a Critical and Chronological View of what has been Published of the Subject, the remedies are: one quart of cider per day; twenty-five drops of elixir vitriol (sulfuric acid) three times a day; two spoonfuls of vinegar three times a day; a course of sea-water (half a pint every day); two oranges and one lemon each day; and an electuary (a mixture containing garlic, mustard, balsam of Peru, and myrrh).[19] Lind would note that the pair given the oranges and lemons were so restored to health within six days of treatment that one of them returned to duty and the other was well enough to attend the rest of the sick.[19] | |

| 1784 | | The first blinded experiment is conducted by the French Academy of Sciences to investigate the claims of mesmerism as proposed by Franz Mesmer. In the experiment, researchers blindfold mesmerists and ask them to identify objects that the experimenters have previously filled with "vital fluid". The subjects are unable to do so.[20] | |

| 1805 | Statistical method | Gauss formalizes the method of least squares for data fitting. | Germany |

| 1815 | | An article on optimal designs for polynomial regression is published by Joseph Diez Gergonne.[21] | |

| 1817 | | The first blinded experiment recorded outside of a scientific setting compares the musical quality of a Stradivarius violin with that of a violin with a guitar-like design. A violinist plays each instrument while a committee of scientists and musicians listens from another room to avoid prejudice.[22][23] | |

| 1827 | | Pierre-Simon Laplace uses least squares methods to address analysis of variance problems regarding measurements of atmospheric tides.[24] | France |

| 1835 | | An early example of a double-blind protocol is the Nuremberg salt test performed by Friedrich Wilhelm von Hoven, Nuremberg's highest-ranking public health official.[25] | Germany |

| 1835 | Statistical text | Adolphe Quetelet introduces the concept of the "average man" and applies statistics to social science. | Belgium |

| 1860 | | German psychologist and physicist Gustav Theodor Fechner makes a groundbreaking contribution to the development of experimental psychology with the publication of Elements of Psychophysics. In this work, he seeks to test and justify the relationship between physical stimuli and the sensations they produce. Fechner proposes that mental experiences can be quantified by linking them to measurable physical changes, laying the foundation for psychophysics. Using experimental data, he formulates mathematical laws, most notably the Weber–Fechner law, that describe how perceived intensity varies with stimulus magnitude. His efforts help establish psychology as a quantitative science rooted in empirical observation.[26] | |

| 1876 | Literature | American scientist Charles S. Peirce contributes the first English-language publication on an optimal design for regression models.[27] | |

| 1877 | Microbiology experiment | Louis Pasteur designs experiments to disprove spontaneous generation. | France |

| 1879 | | Experimental psychology emerges as a modern scientific discipline in Germany with the establishment of the first experimental laboratory by Wilhelm Wundt at the University of Leipzig. This marks a pivotal moment in the history of psychology, as Wundt seeks to separate psychology from philosophy by applying rigorous scientific methods. He introduces a mathematical and experimental approach to studying the human mind, emphasizing observation, measurement, and controlled experimentation. Wundt's work lays the foundation for psychology as an empirical science, influencing future researchers and schools of thought.[26] | |

| 1882 | | In his published lecture at Johns Hopkins University, Peirce introduces experimental design.[28] | |

| 1885 | Analysis of variance | An eloquent non-mathematical explanation of the additive effects model becomes available.[29] | |

| 1880s | | Charles Sanders Peirce and Joseph Jastrow introduce randomized experiments in the field of psychology.[30] | |

| 1897 | Psychological experiment | Norman Triplett conducts one of the first social psychology experiments on cyclist performance. | United States |

| 1900 | Field development | The P-value is first formally introduced by Karl Pearson in his chi-squared test, using the chi-squared distribution and the notation capital P.[31] P-values would later become the preferred way to summarize the results of medical articles.[32][33] | |

| 1903 | | American physician Richard Clarke Cabot concludes that the placebo should be avoided because it is deceptive.[34] | United States |

| 1907 | | The first study recorded to have a blinded researcher is conducted by W. H. R. Rivers and H. N. Webber to investigate the effects of caffeine.[35] | |

| 1908 | Statistical distribution | William Sealy Gosset (as “Student”) introduces the t-distribution. | United Kingdom |

| 1918 | Concept development | English statistician Ronald Fisher introduces the term variance and proposes its formal analysis in his article The Correlation Between Relatives on the Supposition of Mendelian Inheritance.[36] | |

| 1918 | Field development | Kirstine Smith proposes optimal designs for polynomial models. | |

| 1919 | Field development | R. A. Fisher at the Rothamsted Experimental Station in England starts developing modern concepts of experimental design in the planning of agricultural field experiments.[37] | England |

| 1921 | Field development | Ronald Fisher publishes his first application of the analysis of variance.[38] | |

| 1923 | Field development | The first randomization model is published in Polish by Jerzy Neyman.[39] | |

| 1925 | Statistical significance | British polymath Ronald Fisher advances the idea of statistical hypothesis testing, which he calls "tests of significance", in his publication Statistical Methods for Research Workers.[40][41][42] Fisher suggests a probability of one in twenty (0.05) as a convenient cutoff level to reject the null hypothesis.[43] | |

| 1925 | | Analysis of variance becomes widely known after being included in Ronald Fisher's book Statistical Methods for Research Workers. | |

| 1925 | Literature | British statistician Ronald Fisher publishes Statistical Methods for Research Workers, which is considered a seminal book in which he explains the concept of statistical significance.[44] | |

| 1926 | Factorial experiment | Ronald Fisher argues that "complex" designs (such as factorial designs) are more efficient than studying one factor at a time.[45] | |

| 1926 | | Sir John Russell publishes an article titled "Field Experiments: How They Are Made and What They Are", which shows the state of experimental design as it was generally understood at the time.[46] | |

| 1935 | Literature | Ronald Fisher publishes The Design of Experiments. This book is considered a foundational work in experimental design.[47][48][49] Fisher emphasizes that efficient experimental design yields a gain in accuracy no less important than that obtained from optimal processing of the measurement results.[50] | |

| 1937 | Educational study | The first large-scale randomized experiment in education is conducted by the U.S. Office of Education. | United States |

| 1939 | Concept development | A publication by Bose and Nair on partially balanced incomplete block designs underlies the concept of the association scheme, which provides a way to describe the structure of such designs.[51] | |

| 1940 | | Raj Chandra Bose and K. Kishen at the Indian Statistical Institute independently find some efficient designs for estimating several main effects. | India |

| 1946 | | R. L. Plackett and J. P. Burman publish the influential paper "The Design of Optimum Multifactorial Experiments", introducing what would become known as Plackett–Burman designs: highly efficient screening designs whose number of runs is a multiple of four. These designs are particularly useful when only main effects are of interest. Because main effects are often heavily confounded with two-factor interactions, the designs are best suited to screening experiments. For instance, a 12-run Plackett–Burman design can accommodate up to 11 factors.[52] | |

| 1948 | Concept development | British statistician Frank Yates introduces the concept of restricted randomization.[53][54] | |

| 1950 | | Gertrude Mary Cox and William Gemmell Cochran publish the book Experimental Designs, which would become the major reference work on the design of experiments for statisticians for years afterwards.[55] | |

| 1951 | Field development | Response surface methodology (RSM) is introduced by George E. P. Box and K. B. Wilson. The main idea of RSM is to use a sequence of designed experiments to obtain an optimal response. Box and Wilson suggest using a second-degree polynomial model for this purpose. They acknowledge that the model is only an approximation, but use it because it is easy to estimate and apply, even when little is known about the process.[56] | |

| 1952 | | American mathematician and statistician Herbert Robbins recognizes the significance of what is now known as the multi-armed bandit problem, in which a gambler faces a trade-off between "exploitation" of the machine with the highest expected payoff and "exploration" to learn about the other machines' payoffs. Each lever pull yields a random reward from an unknown probability distribution, and the gambler aims to maximize the total reward earned over a sequence of pulls. Robbins devises convergent population selection strategies in his work "Some aspects of the sequential design of experiments".[57] | |

| 1952 | Concept development | Bose and Shimamoto introduce the term association scheme.[58] | |

| 1954 | | American experimental psychologist Edwin Boring writes an article titled The History of Experimental Design, in which he notes that the early history of ideas on the planning of experiments has been "but little studied".[37] | |

| 1955 | | An influential study entitled The Powerful Placebo firmly establishes the idea that placebo effects are clinically important.[59] | |

| 1957 | Industrial statistics | Genichi Taguchi promotes robust design methods for industrial experimentation. | Japan |

| 1960 | | George E. P. Box and Donald Behnken devise what are known in statistics as Box–Behnken designs, experimental designs for response surface methodology.[60] | |

| 1961 | Concept development | Leslie Kish introduces the term design effect.[61] | |

| 1961 | Concept development | The term nocebo (Latin nocēbō, "I shall harm", from noceō, "I harm")[62] is coined by Walter Kennedy to denote the counterpart of placebo (Latin placēbō, "I shall please", from placeō, "I please"), a substance that may produce a beneficial, healthful, pleasant, or desirable effect. Kennedy emphasizes that his use of the term "nocebo" refers strictly to "a subject-centered response, a quality inherent in the patient rather than in the remedy".[63] | |

| 1962 | | British statistician John Nelder proposes a set of systematic, circular experimental designs as an alternative to replicated, full factorial spacing experiments. These designs, known as Nelder "wheel" designs, are developed to address limitations of space and plant material. Each design consists of a circular plot made up of concentric circles, crossed by spokes that extend from the center to the outermost circle. Trees are planted at the intersections of spokes and circles within the plot.[64] | |

| 1963 | | Campbell and Stanley classify research designs as pre-experimental, experimental, or quasi-experimental.[65] | |

| 1972 | | Herman Chernoff writes an overview of optimal sequential designs.[66] In the design of experiments, optimal designs are a class of designs that are optimal with respect to some statistical criterion. | |

| 1976 | Literature | Douglas C. Montgomery publishes Design and Analysis of Experiments, a comprehensive textbook on the design and analysis of experiments. The book covers a wide range of topics, including principles of experimental design, different types of experimental designs, analysis of experimental data, and use of experimental design in a variety of fields, such as agriculture, industry, and medicine.[67] | |

| 1977 | | The concept of the Pocock boundary is introduced by the medical statistician Stuart Pocock.[68] | |

| 1978 | | According to Box et al., experimental design refers to the systematic layout of combinations of variables. In concept research, these layouts are the test concepts or test vignettes.[69] | |

| 1978 | | The classical single-item prophet inequality is published by Krengel and Sucheston. | |

| 1979 | | Marvin Zelen publishes a new design for randomized clinical trials, which would later be called Zelen's design.[70][71] | |

| 1979 | | Michael McKay at Los Alamos National Laboratory introduces Latin hypercube sampling, a significant contribution to the field of statistical sampling.[72] | |

| 1980 | | Kazdin classifies research designs as experimental, quasi-experimental, and correlational designs.[65] | |

| 1980 | Experimental Economics | Vernon Smith develops laboratory methods to test economic theories, launching experimental economics. | United States |

| 1981 | | Allen Neuringer first proposes the idea of using single case designs (sometimes referred to as n-of-1 trials) for self-experimentation.[73] | |

| 1982 | Literature | British statistician George Box publishes Improving Almost Anything: Ideas and Essays, which gives many examples of the benefits of factorial experiments.[74] | |

| 1984 | | Stuart Hurlbert publishes a paper in Ecological Monographs analyzing 176 experimental studies in ecology. He finds that 27% of these studies suffer from "pseudoreplication", meaning that they use statistical tests where treatments are not replicated or replicates are not independent. Among studies that use inferential statistics, the share rises to 48%. To address pseudoreplication, Hurlbert recommends interspersing treatments in experiments, even at the cost of strict randomization, particularly in small experiments.[75] | |

| 1986 | | Robert LaLonde finds that econometric estimates of the effect of an employment program on trainee earnings fail to reproduce the program's experimental estimates. This is considered the start of experimental benchmarking in the social sciences.[76] | |

| 1986 | | Kerlinger describes the MAXMINCON principle: maximize systematic variance, minimize error variance, and control extraneous variance.[65] | |

| 1987 | Literature | Australian mathematician Anne Penfold Street publishes Combinatorics of Experimental Design, a textbook on the design of experiments.[77] | |

| 1988 | Literature | R. Mead publishes The Design of Experiments: Statistical Principles for Practical Applications.[78] | |

| 1989 | Literature | Perry D. Haaland publishes Experimental Design in Biotechnology, which describes statistical experimental design and analysis as a problem solving tool.[79][80][81] | |

| 1989 | | Sacks et al. discuss statistical issues in the design and analysis of computer/simulation experiments.[82] | |

| 1991 | | The first International Data Farming Workshop takes place. Sixteen more workshops would follow, with broad participation from countries including Canada, Singapore, Mexico, Turkey, and the United States.[83] | |

| 1994 | | The Neyer-d optimal test is first described by Barry T. Neyer.[84] | |

| 1994 | Ethical oversight | International Conference on Harmonisation (ICH) guidelines for Good Clinical Practice (GCP) established. | Global |

| 1998 | | Stat-Ease releases its first version of Design–Expert, a statistical software package specifically dedicated to performing design of experiments.[85] | |

| 1999 | | Basili et al. use the term family of experiments for a group of experiments that pursue the same goal and whose results can be combined into joint, and potentially more mature, findings than isolated experiments can achieve.[86] | |

| 2000 (January 19) | Literature | Gary W. Oehlert publishes A First Course in Design and Analysis of Experiments.[87] | |

| 2001 | | Daniel Kahneman initiates the practice of adversarial collaboration.[88] | |

| 2002 | | The terms exploratory thought and confirmatory thought are introduced by social psychologist Jennifer Lerner and psychology professor Philip Tetlock in their book Emerging Perspectives in Judgment and Decision Making.[89] | |

| 2005 | | A study finds that most clinical trials have unclear allocation concealment in their protocols, in their publications, or both.[90] | |

| 2009 | | Adversarial collaboration is recommended by Daniel Kahneman[91] and others as a way of resolving contentious issues in fringe science, such as the existence or nonexistence of extrasensory perception.[92] | |

| 2010 | | In a meta-analysis of the placebo effect, Asbjørn Hróbjartsson and Peter C. Gøtzsche argue that "even if there were no true effect of placebo, one would expect to record differences between placebo and no-treatment groups due to bias associated with lack of blinding."[93] | |

| 2014 | | A study by Nosek and Lakens finds that preregistered studies are more likely to replicate than non-preregistered studies.[94] | |

| 2019 | | The US Food and Drug Administration provides guidelines for using adaptive designs in clinical trials.[95] | |
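
The 1946 entry above notes that a 12-run Plackett–Burman design can accommodate up to 11 factors. A minimal Python sketch of one common construction follows, assuming the widely tabulated 12-run generator row (+ + - + + + - - - + -): each of the first 11 runs is a cyclic shift of that row, and the last run sets every factor to its low level. The names `GENERATOR_12`, `plackett_burman_12`, and `is_orthogonal` are hypothetical, not part of any library:

```python
# Hypothetical helper names. The generator row is the commonly tabulated
# first run of the 12-run Plackett-Burman design (+1 = high level, -1 = low level).
GENERATOR_12 = [+1, +1, -1, +1, +1, +1, -1, -1, -1, +1, -1]


def plackett_burman_12() -> list[list[int]]:
    """Return the 12-run, 11-factor Plackett-Burman design as rows of +1/-1 levels."""
    n_factors = len(GENERATOR_12)
    design = []
    for shift in range(n_factors):
        # Runs 1-11: the generator rotated right by `shift` positions.
        design.append([GENERATOR_12[(col - shift) % n_factors] for col in range(n_factors)])
    # Run 12: every factor at its low level.
    design.append([-1] * n_factors)
    return design


def is_orthogonal(design: list[list[int]]) -> bool:
    """Check that every column is balanced and every pair of columns is orthogonal."""
    n_cols = len(design[0])
    columns = [[run[c] for run in design] for c in range(n_cols)]
    balanced = all(sum(col) == 0 for col in columns)
    pairwise = all(
        sum(x * y for x, y in zip(columns[a], columns[b])) == 0
        for a in range(n_cols)
        for b in range(a + 1, n_cols)
    )
    return balanced and pairwise


if __name__ == "__main__":
    for run in plackett_burman_12():
        print(" ".join("+" if level > 0 else "-" for level in run))
    print("orthogonal:", is_orthogonal(plackett_burman_12()))
```

Balanced, mutually orthogonal columns are what let each main effect be estimated independently of the others from only 12 runs. The sketch does nothing to separate interactions, which is consistent with the entry's description of these designs as screening tools.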

Numerical and visual data

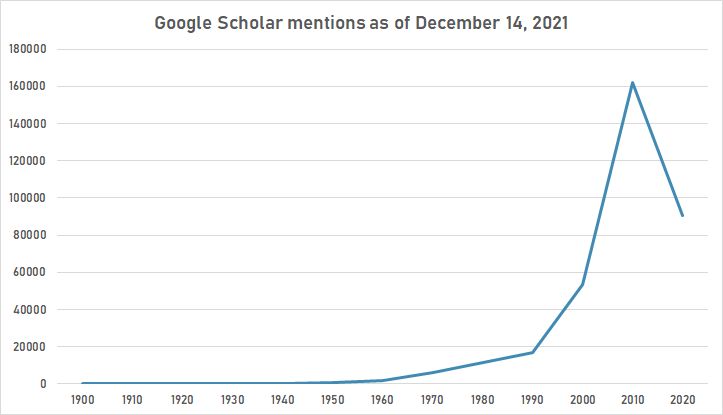

Google Scholar

The following table summarizes per-year mentions on Google Scholar as of December 14, 2021.

| Year | "experimental design" |

|---|---|

| 1900 | 30 |

| 1910 | 17 |

| 1920 | 13 |

| 1930 | 19 |

| 1940 | 62 |

| 1950 | 425 |

| 1960 | 1,590 |

| 1970 | 6,240 |

| 1980 | 11,400 |

| 1990 | 17,000 |

| 2000 | 53,200 |

| 2010 | 162,000 |

| 2020 | 90,600 |

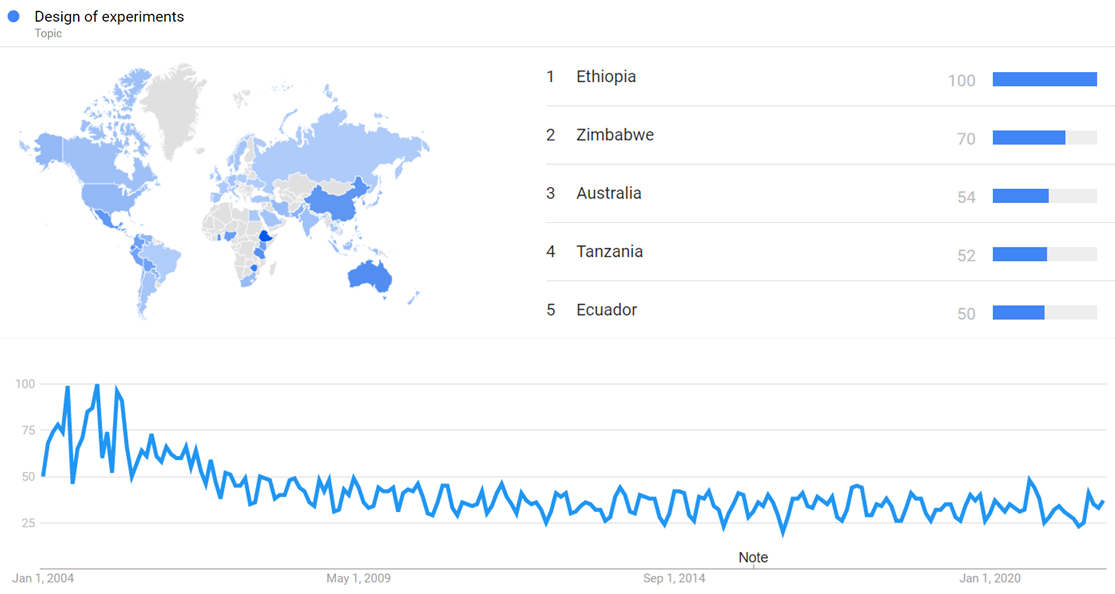

Google Trends

The chart below shows Google Trends data for Design of experiments (Topic), from January 2004 to December 2021, when the screenshot was taken. Interest is also ranked by country and displayed on a world map.[96]

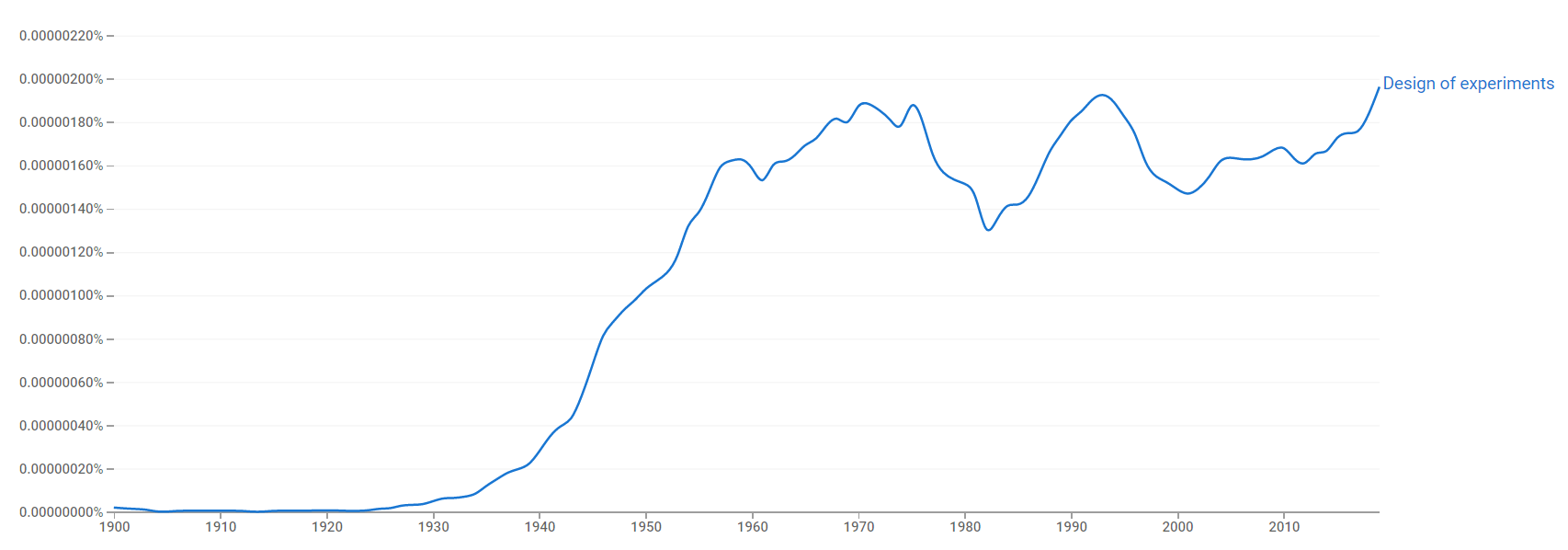

Google Ngram Viewer

The chart below shows Google Ngram Viewer data for Design of experiments, from 1900 to 2019.[97]

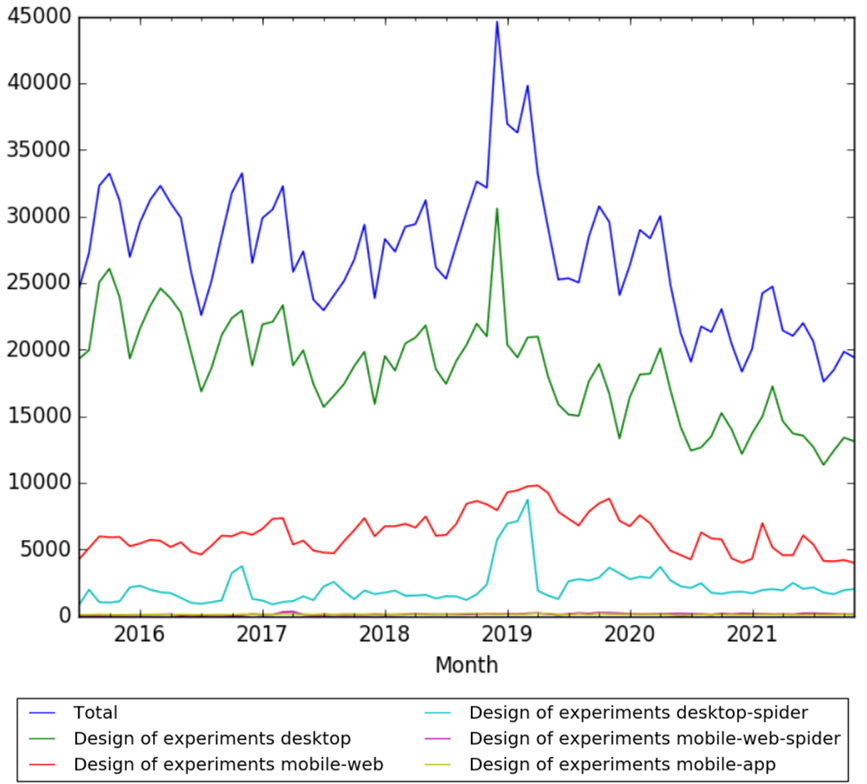

Wikipedia Views

The chart below shows pageviews of the English Wikipedia article Design of experiments, from July 2015 to November 2021.[98]

Meta information on the timeline

How the timeline was built

The initial version of the timeline was written by Sebastian Sanchez.

Funding information for this timeline is available.

Feedback and comments

Feedback for the timeline can be provided at the following places:

- FIXME

What the timeline is still missing

- Updated visual data

- for books: https://academic-accelerator.com/encyclopedia/optimal-design

- doi: 10.1007/978-3-319-33781-4_1

- experiment design/design of experiments "in 1800..2020"

- Add Google Scholar table

- Vipul: "will this timeline eventually talk of things like double-blinding, triple-blinding, placebos, RCTs, etc., right? You have blinding but I guess the rest are variants on the idea".

- Vipul: "Cover "Statistical significance", "p-values" and preregistration."

- Books

- Placebo in history

- Glossary of experimental design

- Category:Design of experiments

- Design of experiments (check See also list)

Timeline update strategy

See also

External links

References

- ↑ Yao, Mengxuan; Wang, Haicheng; Chen, Wei (May 2023). "Clinical research–When it matters". Injury. 54 (Supplement 3): S35 – S38. doi:10.1016/j.injury.2022.01.049. Retrieved April 9, 2025.

- ↑ Kleisiaris, Christos F.; Sfakianakis, Chrisanthos; Papathanasiou, Ioanna V. (March 15, 2014). "Health care practices in ancient Greece: The Hippocratic ideal". Journal of Medical Ethics and History of Medicine. 7. PMC 4263393. PMID 25512827. Retrieved April 9, 2025.

- ↑ "Baconian method". Encyclopaedia Britannica. Retrieved April 9, 2025.

- ↑ Lienhard, John H. (January 4, 1989). "Galileo's Experiment". The Engines of Our Ingenuity. University of Houston. Retrieved April 9, 2025.

- ↑ "Robert Boyle". Internet Encyclopedia of Philosophy. Retrieved April 9, 2025.

- ↑ Ore, Øystein (May 1960). "Pascal and the Invention of Probability Theory" (PDF). The American Mathematical Monthly. 67 (5). Mathematical Association of America: 409–419. JSTOR 2309286. Retrieved April 9, 2025.

- ↑ "Fermat and Pascal on Probability" (PDF). University of York. Retrieved April 9, 2025.

- ↑ Polasek, Wolfgang (August 2000). "The Bernoullis and the Origin of Probability Theory: Looking back after 300 Years". Resonance – Journal of Science Education. 5 (8): 26–42. Retrieved April 9, 2025.

- ↑ Cline, Douglas (August 9, 2020). "Age of Enlightenment". Physics LibreTexts. University of Rochester. Retrieved April 9, 2025.

- ↑ "The 'father of modern statistics' honoured". BBC News. September 9, 2016. Retrieved April 9, 2025.

- ↑ Schulz, Kathryn (August 14, 2023). "How Carl Linnaeus Set Out to Label All of Life". The New Yorker. Retrieved April 9, 2025.

- ↑ Schwarz, K. A.; Pfister, R. "Scientific psychology in the 18th century: a historical rediscovery". Perspectives on Psychological Science. 11: 399–407.

- ↑ "Statement on R A Fisher". Rothamsted Research. June 2020. Retrieved April 9, 2025.

- ↑ 14.0 14.1 "1.1 - A Quick History of the Design of Experiments (DOE) | STAT 503". PennState: Statistics Online Courses. Retrieved 11 May 2021.

- ↑ Preece, D. A. (December 1990). "R. A. Fisher and Experimental Design: A Review". Biometrics. 46 (4). International Biometric Society: 925–935. doi:10.2307/2532438. JSTOR 2532438. Retrieved April 9, 2025.

- ↑ Biau, David Jean; Jolles, Brigitte M.; Porcher, Raphaël (March 2010). "P Value and the Theory of Hypothesis Testing: An Explanation for New Researchers". Clinical Orthopaedics and Related Research. 468 (3): 885–892. doi:10.1007/s11999-009-1164-4. PMC 2816758. PMID 19921345. Retrieved April 9, 2025.

- ↑ Kramer, Lloyd; Maza, Sarah (23 June 2006). A Companion to Western Historical Thought. Wiley. ISBN 978-1-4051-4961-7.

Shortly after the start of the Cold War [...] double-blind reviews became the norm for conducting scientific medical research, as well as the means by which peers evaluated scholarship, both in science and in history.

- ↑ Colbourn, Charles J.; Dinitz, Jeffrey H. Handbook of Combinatorial Designs (2nd ed.). CRC Press. p. 12. ISBN 9781420010541. Retrieved 28 March 2017.

- ↑ 19.0 19.1 Dunn, Peter M. (January 1, 1997). "James Lind (1716-94) of Edinburgh and the treatment of scurvy". Archives of Disease in Childhood: Fetal and Neonatal Edition. 76 (1): F64–5. doi:10.1136/fn.76.1.F64. PMC 1720613. PMID 9059193.

- ↑ "Kent Academic Repository" (PDF). kar.kent.ac.uk. Retrieved 23 October 2021.

- ↑ "Polynomial regression". frontend. Retrieved 18 March 2022.

- ↑ Fétis, François-Joseph (1868). Biographie Universelle des Musiciens et Bibliographie Générale de la Musique, Tome 1 (Second ed.). Paris: Firmin Didot Frères, Fils, et Cie. p. 249. Retrieved 2011-07-21.

- ↑ Dubourg, George (1852). The Violin: Some Account of That Leading Instrument and its Most Eminent Professors... (Fourth ed.). London: Robert Cocks and Co. pp. 356–357. Retrieved 2011-07-21.

- ↑ Stigler (1986, pp 154–155)

- ↑ Stolberg, M. (December 2006). "Inventing the randomized double-blind trial: the Nuremberg salt test of 1835". Journal of the Royal Society of Medicine. 99 (12): 642–643. doi:10.1258/jrsm.99.12.642. PMC 1676327. PMID 17139070.

- ↑ 26.0 26.1 "Experimental Psychology: History, Features, and Method". Psychologs Magazine. Retrieved April 9, 2025.

- ↑ Peirce, C. S. (August 1967). "Note on the Theory of the Economy of Research". Operations Research. 15 (4): 643–648. doi:10.1287/opre.15.4.643.

- ↑ Peirce, C. S. (1882), "Introductory Lecture on the Study of Logic" delivered September 1882, published in Johns Hopkins University Circulars, v. 2, n. 19, pp. 11–12, November 1882, see p. 11, Google Books Eprint. Reprinted in Collected Papers v. 7, paragraphs 59–76, see 59, 63, Writings of Charles S. Peirce v. 4, pp. 378–82, see 378, 379, and The Essential Peirce v. 1, pp. 210–14, see 210–1, also lower down on 211.

- ↑ Stigler (1986, pp 314–315)

- ↑ Charles Sanders Peirce and Joseph Jastrow (1885). "On Small Differences in Sensation". Memoirs of the National Academy of Sciences. 3: 73–83. http://psychclassics.yorku.ca/Peirce/small-diffs.htm

- ↑ Pearson, Karl (1900). "On the criterion that a given system of deviations from the probable in the case of a correlated system of variables is such that it can be reasonably supposed to have arisen from random sampling" (PDF). Philosophical Magazine. Series 5. 50 (302): 157–175. doi:10.1080/14786440009463897.

- ↑ Nahm, Francis Sahngun (2017). "What the P values really tell us". The Korean Journal of Pain. 30 (4): 241. doi:10.3344/kjp.2017.30.4.241.

- ↑ Nahm, Francis Sahngun (October 2017). "What the P values really tell us". The Korean Journal of Pain. 30 (4): 241–242. doi:10.3344/kjp.2017.30.4.241. ISSN 2005-9159.

- ↑ Newman, David H., M.D. (2008). Hippocrates' shadow : secrets from the house of medicine (1st Scribner hardcover ed.). New York, NY: Scribner. ISBN 978-1-4165-5153-9.

- ↑ Rivers WH, Webber HN (August 1907). "The action of caffeine on the capacity for muscular work". The Journal of Physiology. 36 (1): 33–47. doi:10.1113/jphysiol.1907.sp001215. PMC 1533733. PMID 16992882.

- ↑ Fisher, Ronald A. (1918). "The Correlation Between Relatives on the Supposition of Mendelian Inheritance". Transactions of the Royal Society of Edinburgh. 52: 399–433.

- ↑ 37.0 37.1 "Experimental Design | Encyclopedia.com". www.encyclopedia.com. Retrieved 5 April 2021.

- ↑ Fisher, Ronald A. (1921). "On the 'Probable Error' of a Coefficient of Correlation Deduced from a Small Sample". Metron. 1: 3–32.

- ↑ Scheffé (1959, p. 291, "Randomization models were first formulated by Neyman (1923) for the completely randomized design, by Neyman (1935) for randomized blocks, by Welch (1937) and Pitman (1937) for the Latin square under a certain null hypothesis, and by Kempthorne (1952, 1955) and Wilk (1955) for many other designs.")

- ↑ Cumming, Geoff (2011). "From null hypothesis significance to testing effect sizes". Understanding The New Statistics: Effect Sizes, Confidence Intervals, and Meta-Analysis. Multivariate Applications Series. East Sussex, United Kingdom: Routledge. pp. 21–52. ISBN 978-0-415-87968-2.

- ↑ Fisher, Ronald A. (1925). Statistical Methods for Research Workers. Edinburgh, UK: Oliver and Boyd. p. 43. ISBN 978-0-050-02170-5.

- ↑ Poletiek, Fenna H. (2001). "Formal theories of testing". Hypothesis-testing Behaviour. Essays in Cognitive Psychology (1st ed.). East Sussex, United Kingdom: Psychology Press. pp. 29–48. ISBN 978-1-841-69159-6.

- ↑ Quinn, Geoffrey R.; Keough, Michael J. (2002). Experimental Design and Data Analysis for Biologists (1st ed.). Cambridge, UK: Cambridge University Press. pp. 46–69. ISBN 978-0-521-00976-8.

- ↑ Kopf, Dan. "An error made in 1925 led to a crisis in modern science—now researchers are joining to fix it". Quartz. Retrieved 13 March 2021.

- ↑ Fisher, Ronald. "The Arrangement of Field Experiments" (PDF). Journal of the Ministry of Agriculture of Great Britain. London, England: Ministry of Agriculture and Fisheries.

- ↑ Box, Joan Fisher (February 1980). "R. A. Fisher and the Design of Experiments, 1922–1926". The American Statistician. 34 (1): 1–7. doi:10.2307/2682986.

- ↑ Box, JF (February 1980). "R. A. Fisher and the Design of Experiments, 1922–1926". The American Statistician. 34 (1): 1–7. doi:10.2307/2682986. JSTOR 2682986.

- ↑ Yates, F (June 1964). "Sir Ronald Fisher and the Design of Experiments". Biometrics. 20 (2): 307–321. doi:10.2307/2528399. JSTOR 2528399.

- ↑ Stanley, Julian C. (1966). "The Influence of Fisher's "The Design of Experiments" on Educational Research Thirty Years Later". American Educational Research Journal. 3 (3): 223–229. doi:10.3102/00028312003003223. JSTOR 1161806.

- ↑ "Completely Randomized Design". TheFreeDictionary.com. Retrieved 16 March 2021.

- ↑ Bose, R. C.; Nair, K. R. (1939), "Partially balanced incomplete block designs", Sankhyā, 4: 337–372

- ↑ "5.3.3.5. Plackett-Burman designs". www.itl.nist.gov. Retrieved 22 July 2023.

- ↑ Healy, M. J. R. (1995). "Frank Yates, 1902-1994: The Work of a Statistician". International Statistical Review / Revue Internationale de Statistique. 63 (3): 271–288. ISSN 0306-7734.

- ↑ Grundy, P. M.; Healy, M. J. R. (1950). "Restricted Randomization and Quasi-Latin Squares". Journal of the Royal Statistical Society. Series B (Methodological). 12 (2): 286–291. ISSN 0035-9246.

- ↑ "Experimental Designs". www.amazon.com.

- ↑ Draper, Norman R. (1992). "Introduction to Box and Wilson (1951) On the Experimental Attainment of Optimum Conditions". Breakthroughs in Statistics: Methodology and Distribution. Springer. pp. 267–269. doi:10.1007/978-1-4612-4380-9_22.

- ↑ Robbins, Herbert (1952). "Some aspects of the sequential design of experiments". Bulletin of the American Mathematical Society. 58 (5): 527–535. doi:10.1090/S0002-9904-1952-09620-8.

- ↑ Bose, R. C.; Shimamoto, T. (June 1952). "Classification and Analysis of Partially Balanced Incomplete Block Designs with Two Associate Classes". Journal of the American Statistical Association. 47 (258): 151–184. doi:10.1080/01621459.1952.10501161.

- ↑ Hróbjartsson A, Gøtzsche PC (May 2001). "Is the placebo powerless? An analysis of clinical trials comparing placebo with no treatment". The New England Journal of Medicine. 344 (21): 1594–602. doi:10.1056/NEJM200105243442106. PMID 11372012.

- ↑ Ranade, Shruti Sunil; Thiagarajan, Padma (November 2017). "Selection of a design for response surface". IOP Conference Series: Materials Science and Engineering. 263: 022043. doi:10.1088/1757-899X/263/2/022043.

- ↑ Kish, Leslie (1965). "Survey Sampling". New York: John Wiley & Sons, Inc. ISBN 0-471-10949-5.

- ↑ "Definition of NOCEBO". www.merriam-webster.com. Retrieved 5 March 2022.

- ↑ Kennedy, 1961

- ↑ Stankova, Tatiana (30 June 2020). "Application of Nelder wheel experimental design in forestry research". Silva Balcanica. 21 (1): 29–40. doi:10.3897/silvabalcanica.21.e54425.

- ↑ 65.0 65.1 65.2 Heppner, Puncky Paul; Wampold, Bruce E.; Owen, Jesse; Wang, Kenneth T. (21 August 2015). Research Design in Counseling. Cengage Learning. ISBN 978-1-305-46501-5.

- ↑ Chernoff, H. (1972). Sequential Analysis and Optimal Design. SIAM Monograph.

- ↑ Montgomery, Douglas C. (2013). Design and Analysis of Experiments. John Wiley & Sons Incorporated. ISBN 978-1-62198-227-2.

- ↑ Pocock S (2005). "When (not) to stop a clinical trial for benefit" (PDF). JAMA. 294 (17): 2228–2230. doi:10.1001/jama.294.17.2228. PMID 16264167.

- ↑ "Experimental Design - an overview | ScienceDirect Topics". www.sciencedirect.com. Retrieved 23 March 2021.

- ↑ Richter, Felicitas; Dewey, Marc (September 2014). "Zelen Design in Randomized Controlled Clinical Trials". Radiology. 272 (3): 919. doi:10.1148/radiol.14140834.

- ↑ Homer, Caroline S.E. (April 2002). "Using the Zelen design in randomized controlled trials: debates and controversies". Journal of Advanced Nursing. 38 (2): 200–207. doi:10.1046/j.1365-2648.2002.02164.x.

- ↑ McKay, M.D.; Beckman, R.J.; Conover, W.J. (May 1979). "A Comparison of Three Methods for Selecting Values of Input Variables in the Analysis of Output from a Computer Code". Technometrics. 21 (2). American Statistical Association: 239–245. doi:10.2307/1268522. ISSN 0040-1706. JSTOR 1268522.

- ↑ Karkar, Ravi; Zia, Jasmine; Vilardaga, Roger; Mishra, Sonali R; Fogarty, James; Munson, Sean A; Kientz, Julie A (1 May 2016). "A framework for self-experimentation in personalized health". Journal of the American Medical Informatics Association. 23 (3): 440–448. doi:10.1093/jamia/ocv150.

- ↑ Box, George E. P. (2006). Improving Almost Anything: Ideas and Essays (Revised ed.). Hoboken, New Jersey: Wiley.

- ↑ "Revisiting Hurlbert 1984". Reflections on Papers Past. 29 November 2020. Retrieved 29 March 2022.

- ↑ LaLonde, Robert (1986). "Evaluating the Econometric Evaluations of Training Programs with Experimental Data". American Economic Review. 76 (4): 604–620.

- ↑ Street, Anne Penfold; Street, Deborah J. (1987). Combinatorics of Experimental Design. Clarendon Press.

- ↑ Mead, R. (26 July 1990). The Design of Experiments: Statistical Principles for Practical Applications. Cambridge University Press. ISBN 978-0-521-28762-3.

- ↑ Haaland, Perry D. (1989). Experimental design in biotechnology. New York: Marcel Dekker. ISBN 9780824778811.

- ↑ Haaland, Perry D. (June 1991). "Book review: Experimental Design in Biotechnology, Perry D. Haaland, Marcel Dekker, Inc., New York, 1989". Drying Technology. 9 (3): 817. doi:10.1080/07373939108916715.

- ↑ Haaland, Perry D. (25 November 2020). "Experimental Design in Biotechnology". doi:10.1201/9781003065968.

- ↑ "Read "Statistical Methods for Testing and Evaluating Defense Systems: Interim Report" at NAP.edu". Retrieved 14 March 2021.

- ↑ Horne, G.; Schwierz, K. (2008). "Data farming around the world overview". Proceedings of the 2008 Winter Simulation Conference. pp. 1442–1447. doi:10.1109/WSC.2008.4736222.

- ↑ Neyer, Barry T. (February 1994). "A D-Optimality-Based Sensitivity Test". Technometrics. 36 (1): 61. doi:10.2307/1269199.

- ↑ He, Li (17 July 2003). "Design of Experiments Software, DOE software". The Chemical Information Network.

- ↑ "Analyzing Families of Experiments in SE: a Systematic Mapping Study" (PDF). arxiv.org. Retrieved 12 March 2022.

- ↑ Oehlert, Gary W. (2010). A First Course in Design and Analysis of Experiments. Gary W. Oehlert.

- ↑ "Adversarial Collaboration: An EDGE Lecture by Daniel Kahneman | Edge.org". www.edge.org. Retrieved 8 March 2022.

- ↑ Schneider, Sandra L.; Shanteau, James, eds. (2003). Emerging Perspectives on Judgment and Decision Research. Cambridge: Cambridge University Press. pp. 438–439. ISBN 052152718X.

- ↑ Pildal J, Chan AW, Hróbjartsson A, Forfang E, Altman DG, Gøtzsche PC (2005). "Comparison of descriptions of allocation concealment in trial protocols and the published reports: cohort study". BMJ. 330 (7499): 1049. doi:10.1136/bmj.38414.422650.8F. PMC 557221. PMID 15817527.

- ↑ Kahneman, Daniel; Klein, Gary (September 2009). "Conditions for intuitive expertise: A failure to disagree". American Psychologist. 64 (6): 515–526. doi:10.1037/a0016755.

- ↑ Wagenmakers, E.-J.; Wetzels, R.; Borsboom, D.; van der Maas, H. L. J. (2010). "Why psychologists must change the way they analyze their data: The case of psi".

- ↑ Hróbjartsson A, Gøtzsche PC (January 2010). Hróbjartsson A (ed.). "Placebo interventions for all clinical conditions" (PDF). The Cochrane Database of Systematic Reviews. 106 (1): CD003974. doi:10.1002/14651858.CD003974.pub3. PMID 20091554.

- ↑ Nosek, Brian A.; Ebersole, Charles R.; DeHaven, Alexander C.; Mellor, David T. (13 March 2018). "The preregistration revolution". Proceedings of the National Academy of Sciences. 115 (11): 2600–2606. doi:10.1073/pnas.1708274114.

- ↑ "Adaptive designs for clinical trials of drugs and biologics: Guidance for industry". U.S. Food and Drug Administration (FDA). 1 November 2019. Retrieved 7 April 2021.

- ↑ "Design of experiments". Google Trends. Retrieved 14 December 2021.

- ↑ "Design of experiments". books.google.com. Retrieved 14 December 2021.

- ↑ "Design of experiments". wikipediaviews.org. Retrieved 14 December 2021.