Difference between revisions of "Timeline of OpenAI"

From Timelines


Revision as of 10:50, 20 December 2020

This is a timeline of OpenAI. OpenAI is a non-profit safety-conscious artificial intelligence capabilities company.


Sample questions

The following are some interesting questions that can be answered by reading this timeline:

- What are some significant events preceding the creation of OpenAI?

- Sort the full timeline by "Event type" and look for the group of rows with value "Prelude".

- You will see some events involving key people like Elon Musk and Sam Altman that would eventually lead to the creation of OpenAI.

- What are the various papers and posts published by OpenAI?

- Sort the full timeline by "Event type" and look for the group of rows with value "Publication".

- You will see mostly papers submitted to the ArXiv by OpenAI-affiliated researchers, along with blog posts.

- What are the several toolkits, implementations, algorithms, systems and software in general released by OpenAI?

- Sort the full timeline by "Event type" and look for the group of rows with value "Software release".

- You will see a variety of releases, some of them open-sourced.

- What are some other significant events describing advances in research?

- Sort the full timeline by "Event type" and look for the group of rows with value "Research progress".

- You will see some discoveries and other significant results obtained by OpenAI.

- What is the staff composition and what are the different roles in the organization?

- Sort the full timeline by "Event type" and look for the group of rows with value "Staff".

- You will see the names of people who joined the organization and their roles.

- What are the several partnerships between OpenAI and other organizations?

- What are some significant donations granted to OpenAI by donors?

- Sort the full timeline by "Event type" and look for the group of rows with value "Donation".

- You will see names like the Open Philanthropy Project, and Nvidia, among others.

- What are some notable events hosted by OpenAI?

- Sort the full timeline by "Event type" and look for the group of rows with value "Event hosting".

- What are some notable publications by third parties about OpenAI?

- Sort the full timeline by "Event type" and look for the group of rows with value "Coverage".

Big picture

| Time period | Development summary | More details |

|---|---|---|

| 2014–2015 | Background | Nick Bostrom's book Superintelligence: Paths, Dangers, Strategies, about the dangers of superhuman machine intelligence, is published. Soon after the book's publication, Elon Musk and Sam Altman, the two people who would become co-chairs and initial donors of OpenAI, publicly state their concern about superhuman machine intelligence. |

| 2015 | Establishment | OpenAI is founded as a nonprofit and begins producing research. |

| 2019 | Reorganization | OpenAI shifts from nonprofit to ‘capped-profit’ in order to attract capital. |

Visual data

Wikipedia Views

The image below shows Wikipedia Views data for the OpenAI entry on English Wikipedia on desktop, mobile web, mobile app, desktop-spider, and mobile-web-spider, from July 2015 (OpenAI was created around December 2015) to January 2020.[1]

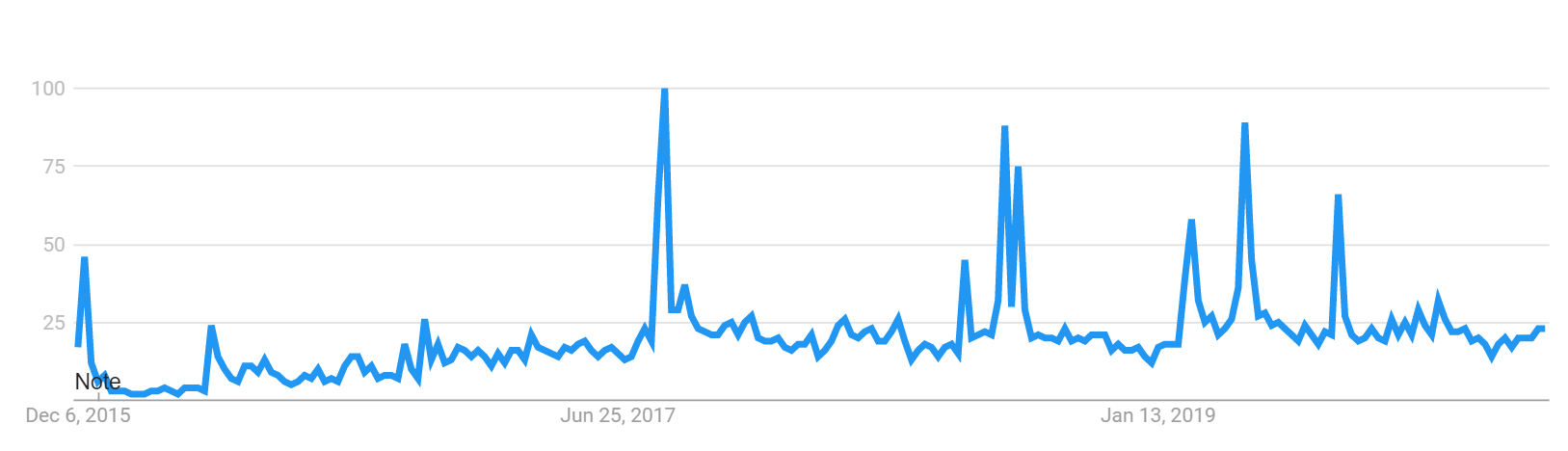

Google Trends

The image below shows Google Trends data for the OpenAI entry from December 2015 (OpenAI creation) to February 2020.[2]

Full timeline

| Year | Month and date | Domain | Event type | Details |

|---|---|---|---|---|

| 2014 | October 22–24 | | Prelude | During an interview at the AeroAstro Centennial Symposium, Elon Musk, who would later become co-chair of OpenAI, calls artificial intelligence humanity's "biggest existential threat".[3][4] |

| 2015 | February 25 | | Prelude | Sam Altman, president of Y Combinator who would later become a co-chair of OpenAI, publishes a blog post in which he writes that the development of superhuman AI is "probably the greatest threat to the continued existence of humanity".[5] |

| 2015 | May 6 | | Prelude | Greg Brockman, who would become CTO of OpenAI, announces in a blog post that he is leaving his role as CTO of Stripe. In the section "What comes next" of the post, he writes "I haven't decided exactly what I'll be building (feel free to ping if you want to chat)".[6][7] |

| 2015 | June | | Prelude | Sam Altman and Greg Brockman have a conversation about next steps for Brockman.[8] |

| 2015 | June 4 | | Prelude | At Airbnb's Open Air 2015 conference, Sam Altman, president of Y Combinator who would later become a co-chair of OpenAI, states his concern about advanced artificial intelligence and shares that he recently invested in a company doing AI safety research.[9] |

| 2015 | July (approximate) | | Prelude | Sam Altman sets up a dinner in Menlo Park, California to talk about starting an organization to do AI research. Attendees include Greg Brockman, Dario Amodei, Chris Olah, Paul Christiano, Ilya Sutskever, and Elon Musk.[8] |

| 2015 | December 11 | | Creation | OpenAI is announced to the public. (The news articles from this period make it sound like OpenAI launched sometime after this date.)[10][11][12] Co-founders include Wojciech Zaremba.[13] |

| 2015 | December | | Coverage | The article "OpenAI" is created on Wikipedia.[14] |

| 2015 | December | | Team | OpenAI announces Y Combinator founding partner Jessica Livingston as one of its financial backers.[15] |

| 2016 | January | | Team | Ilya Sutskever joins OpenAI as Research Director.[16][17] |

| 2016 | January 9 | | Education | The OpenAI research team does an AMA ("ask me anything") on r/MachineLearning, the subreddit dedicated to machine learning.[18] |

| 2016 | February 25 | Optimization | Publication | "Weight Normalization: A Simple Reparameterization to Accelerate Training of Deep Neural Networks", a paper on optimization, is first submitted to the ArXiv. The paper presents weight normalization: a reparameterization of the weight vectors in a neural network that decouples the length of those weight vectors from their direction.[19] |

| 2016 | March 31 | | Team | A blog post from this day announces that Ian Goodfellow has joined OpenAI.[20] Previously, Goodfellow worked as Senior Research Scientist at Google.[21][17] |

| 2016 | April 26 | | Team | A blog post from this day announces that Pieter Abbeel has joined OpenAI.[22][17] |

| 2016 | April 27 | | Software release | The public beta of OpenAI Gym, an open source toolkit that provides environments to test AI bots, is released.[23][24][25] |

| 2016 | May 25 | Safety | Publication | "Adversarial Training Methods for Semi-Supervised Text Classification" is submitted to the ArXiv. The paper proposes a method that achieves better results on multiple benchmark semi-supervised and purely supervised tasks.[26] |

| 2016 | May 31 | Generative models | Publication | "VIME: Variational Information Maximizing Exploration", a paper on generative models, is submitted to the ArXiv. The paper introduces Variational Information Maximizing Exploration (VIME), an exploration strategy based on maximization of information gain about the agent's belief of environment dynamics.[27] |

| 2016 | June 5 | Reinforcement learning | Publication | "OpenAI Gym", a paper on reinforcement learning, is submitted to the ArXiv. It presents OpenAI Gym as a toolkit for reinforcement learning research.[28] OpenAI Gym is considered by some as "a huge opportunity for speeding up the progress in the creation of better reinforcement algorithms, since it provides an easy way of comparing them, on the same conditions, independently of where the algorithm is executed".[29] |

| 2016 | June 10 | Generative models | Publication | "Improved Techniques for Training GANs", a paper on generative models, is submitted to the ArXiv. It presents a variety of new architectural features and training procedures that OpenAI applies to the generative adversarial networks (GANs) framework.[30] |

| 2016 | June 12 | Generative models | Publication | "InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets", a paper on generative models, is submitted to the ArXiv. It describes InfoGAN, an information-theoretic extension to the Generative Adversarial Network that is able to learn disentangled representations in a completely unsupervised manner.[31] |

| 2016 | June 15 | Generative models | Publication | "Improving Variational Inference with Inverse Autoregressive Flow", a paper on generative models, is submitted to the ArXiv. It proposes a new type of normalizing flow, inverse autoregressive flow (IAF), that, in contrast to earlier published flows, scales well to high-dimensional latent spaces.[32] |

| 2016 | June 16 | Generative models | Publication | OpenAI publishes a post describing four projects on generative models, a branch of unsupervised learning techniques in machine learning.[33] |

| 2016 | June 21 | | Publication | "Concrete Problems in AI Safety" by Dario Amodei, Chris Olah, Jacob Steinhardt, Paul Christiano, John Schulman, and Dan Mané is submitted to the arXiv. The paper explores practical problems in machine learning systems.[34] The paper would receive a shoutout from the Open Philanthropy Project.[35] It would become a landmark in AI safety literature, and many of its authors would continue to do AI safety work at OpenAI in the years to come. |

| 2016 | July | | Team | Dario Amodei joins OpenAI[36], working as the Team Lead for AI Safety.[37][17] |

| 2016 | July 8 | | Publication | "Adversarial Examples in the Physical World" is published. One of the authors is Ian Goodfellow, who is at OpenAI at the time.[38] |

| 2016 | July 28 | | | OpenAI publishes a post calling for applicants to work in several problem areas of interest. |

| 2016 | August 15 | | Donation | The technology company Nvidia announces that it has donated the first Nvidia DGX-1 (a supercomputer) to OpenAI. OpenAI plans to use the supercomputer to train its AI on a corpus of conversations from Reddit.[40][41][42] |

| 2016 | August 29 | Infrastructure | Publication | "Infrastructure for Deep Learning" is published. The post shows how deep learning research usually proceeds. It also describes the infrastructure choices OpenAI made to support it, and open-sources kubernetes-ec2-autoscaler, a batch-optimized scaling manager for Kubernetes.[43] |

| 2016 | October 11 | Robotics | Publication | "Transfer from Simulation to Real World through Learning Deep Inverse Dynamics Model", a paper on robotics, is submitted to the ArXiv. It investigates settings where the sequence of states traversed in simulation remains reasonable for the real world.[44] |

| 2016 | October 18 | Safety | Publication | "Semi-supervised Knowledge Transfer for Deep Learning from Private Training Data", a paper on safety, is submitted to the ArXiv. It shows an approach to providing strong privacy guarantees for training data: Private Aggregation of Teacher Ensembles (PATE).[45] |

| 2016 | November 14 | Generative models | Publication | "On the Quantitative Analysis of Decoder-Based Generative Models", a paper on generative models, is submitted to the ArXiv. It introduces a technique to analyze the performance of decoder-based models.[46] |

| 2016 | November 15 | | Partnership | A partnership between OpenAI and Microsoft's artificial intelligence division is announced. As part of the partnership, Microsoft provides a price reduction on computing resources to OpenAI through Microsoft Azure.[47][48] |

| 2016 | December 5 | | Software release | OpenAI's Universe, "a software platform for measuring and training an AI's general intelligence across the world's supply of games, websites and other applications", is released.[49][50][51][52] |

| 2017 | January | | Staff | Paul Christiano joins OpenAI to work on AI alignment.[53] He was previously an intern at OpenAI in 2016.[54] |

| 2017 | March | | Donation | The Open Philanthropy Project awards a grant of $30 million to OpenAI for general support.[55] The grant initiates a partnership between Open Philanthropy Project and OpenAI, in which Holden Karnofsky (executive director of Open Philanthropy Project) joins OpenAI's board of directors to oversee OpenAI's safety and governance work.[56] The grant is criticized by Maciej Cegłowski[57] and Benjamin Hoffman (who would write the blog post "OpenAI makes humanity less safe")[58][59][60] among others.[61] |

| 2017 | March 24 | | Research progress | OpenAI announces having discovered that evolution strategies rival the performance of standard reinforcement learning techniques on modern RL benchmarks (e.g. Atari/MuJoCo), while overcoming many of RL’s inconveniences.[62] |

| 2017 | March | | Reorganization | Greg Brockman and a few other core members of OpenAI begin drafting an internal document to lay out a path to artificial general intelligence. As the team studies trends within the field, they realize staying a nonprofit is financially untenable.[63] |

| 2017 | April | | Coverage | An article entitled "The People Behind OpenAI" is published on Red Hat's Open Source Stories website, covering work at OpenAI.[64][65][66] |

| 2017 | April 6 | | Software release | OpenAI unveils an unsupervised system which is able to perform excellent sentiment analysis, despite being trained only to predict the next character in the text of Amazon reviews.[67][68] |

| 2017 | April 6 | | Publication | "Learning to Generate Reviews and Discovering Sentiment" is published.[69] |

| 2017 | April 6 | Neuroevolution | Research progress | OpenAI unveils reuse of an old field called “neuroevolution”, and a subset of algorithms from it called “evolution strategies,” which are aimed at solving optimization problems. In one hour of training on an Atari challenge, an algorithm is found to reach a level of mastery that took a reinforcement-learning system published by DeepMind in 2016 a whole day to learn. On the walking problem the system took 10 minutes, compared to 10 hours for DeepMind's approach.[70] |

| 2017 | May 15 | Robotics | Software release | OpenAI releases Roboschool, open-source software for robot simulation, integrated with OpenAI Gym.[71] |

| 2017 | May 16 | Robotics | Software release | OpenAI introduces a robotics system, trained entirely in simulation and deployed on a physical robot, which can learn a new task after seeing it done once.[72] |

| 2017 | May 24 | Reinforcement learning | Software release | OpenAI releases Baselines, a set of implementations of reinforcement learning algorithms.[73][74] |

| 2017 | June 12 | Safety | Publication | "Deep reinforcement learning from human preferences" is first uploaded to the arXiv. The paper is a collaboration between researchers at OpenAI and Google DeepMind.[75][76][77] |

| 2017 | June 28 | Robotics | Open sourcing | OpenAI open sources a high-performance Python library for robotic simulation using the MuJoCo engine, developed over OpenAI research on robotics.[78] |

| 2017 | June | Reinforcement learning | Partnership | OpenAI partners with DeepMind’s safety team in the development of an algorithm which can infer what humans want by being told which of two proposed behaviors is better. The learning algorithm uses small amounts of human feedback to solve modern reinforcement learning environments.[79] |

| 2017 | July 27 | Reinforcement learning | Research progress | OpenAI announces having found that adding adaptive noise to the parameters of reinforcement learning algorithms frequently boosts performance.[80] |

| 2017 | August 12 | | Achievement | OpenAI's Dota 2 bot beats Danil "Dendi" Ishutin, a professional human player (and possibly others), in one-on-one battles.[81][82][83] |

| 2017 | August 13 | | Coverage | The New York Times publishes a story covering the AI safety work (by Dario Amodei, Geoffrey Irving, and Paul Christiano) at OpenAI.[84] |

| 2017 | August 18 | Reinforcement learning | Software release | OpenAI releases two implementations: ACKTR, a reinforcement learning algorithm, and A2C, a synchronous, deterministic variant of Asynchronous Advantage Actor Critic (A3C).[85] |

| 2017 | September 13 | Reinforcement learning | Publication | "Learning with Opponent-Learning Awareness" is first uploaded to the ArXiv. The paper presents Learning with Opponent-Learning Awareness (LOLA), a method in which each agent shapes the anticipated learning of the other agents in an environment.[86][87] |

| 2017 | October 11 | | Software release | RoboSumo, a game that simulates sumo wrestling for AI to learn to play, is released.[88][89] |

| 2017 | November 6 | | Team | The New York Times reports that Pieter Abbeel (a researcher at OpenAI) and three other researchers from Berkeley and OpenAI have left to start their own company called Embodied Intelligence.[90] |

| 2017 | December 6 | Neural network | Software release | OpenAI releases highly-optimized GPU kernels for networks with block-sparse weights, an underexplored class of neural network architectures. Depending on the chosen sparsity, these kernels can run orders of magnitude faster than cuBLAS or cuSPARSE.[91] |

| 2017 | December | | Publication | The 2017 AI Index is published. OpenAI contributed to the report.[92] |

| 2018 | February 20 | Safety | Publication | The report "The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation" is submitted to the ArXiv. It forecasts malicious use of artificial intelligence in the short term and makes recommendations on how to mitigate these risks from AI. The report is authored by individuals at Future of Humanity Institute, Centre for the Study of Existential Risk, OpenAI, Electronic Frontier Foundation, Center for a New American Security, and other institutions.[93][94][95][96][97] |

| 2018 | February 20 | | Donation | OpenAI announces changes in donors and advisors. New donors are: Jed McCaleb, Gabe Newell, Michael Seibel, Jaan Tallinn, and Ashton Eaton and Brianne Theisen-Eaton. Reid Hoffman is "significantly increasing his contribution". Pieter Abbeel (previously at OpenAI), Julia Galef, and Maran Nelson become advisors. Elon Musk departs the board but remains as a donor and advisor.[98][96] |

| 2018 | February 26 | Robotics | Software release | OpenAI releases eight simulated robotics environments and a Baselines implementation of Hindsight Experience Replay, all developed for OpenAI research over the previous year. These environments were used to train models that work on physical robots.[99] |

| 2018 | March 3 | | Event hosting | OpenAI hosts its first hackathon. Applicants include high schoolers, industry practitioners, engineers, researchers at universities, and others, with interests spanning healthcare to AGI.[100][101] |

| 2018 | April 5 – June 5 | | Event hosting | The OpenAI Retro Contest takes place.[102][103] As a result of the release of the Gym Retro library, OpenAI's Universe becomes deprecated.[104] |

| 2018 | April 9 | | Commitment | OpenAI releases a charter stating that the organization commits to stop competing with a value-aligned and safety-conscious project that comes close to building artificial general intelligence, and also that OpenAI expects to reduce its traditional publishing in the future due to safety concerns.[105][106][107][108][109] |

| 2018 | April 19 | | Financial | The New York Times publishes a story detailing the salaries of researchers at OpenAI, using information from OpenAI's 2016 Form 990. The salaries include $1.9 million paid to Ilya Sutskever and $800,000 paid to Ian Goodfellow (hired in March of that year).[110][111][112] |

| 2018 | May 2 | Safety | Publication | The paper "AI safety via debate" by Geoffrey Irving, Paul Christiano, and Dario Amodei is uploaded to the arXiv. The paper proposes training agents via self play on a zero sum debate game, in order to address tasks that are too complicated for a human to directly judge.[113][114] |

| 2018 | May 16 | Computation | Publication | OpenAI releases an analysis showing that since 2012, the amount of compute used in the largest AI training runs has been increasing exponentially with a 3.4-month doubling time.[115] |

| 2018 | June 11 | Unsupervised learning | Research progress | OpenAI announces having obtained significant results on a suite of diverse language tasks with a scalable, task-agnostic system, which uses a combination of transformers and unsupervised pre-training.[116] |

| 2018 | June 25 | Neural network | Software release | OpenAI announces a set of AI algorithms able to hold their own as a team of five and defeat human amateur players at Dota 2, a multiplayer online battle arena video game popular in e-sports for its complexity and necessity for teamwork.[117] In the algorithmic team, called OpenAI Five, each algorithm uses a neural network to learn both how to play the game and how to cooperate with its AI teammates.[118][119] |

| 2018 | June 26 | | Notable comment | Bill Gates comments on Twitter: "AI bots just beat humans at the video game Dota 2. That’s a big deal, because their victory required teamwork and collaboration – a huge milestone in advancing artificial intelligence."[120] |

| 2018 | July 18 | | Commitment | Elon Musk, along with other tech leaders, signs a pledge promising to not develop “lethal autonomous weapons.” They also call on governments to institute laws against such technology. The pledge is organized by the Future of Life Institute, an outreach group focused on tackling existential risks.[121][122][123] |

| 2018 | July 30 | Robotics | Software release | OpenAI announces a robotics system that can manipulate objects with humanlike dexterity. The system is able to develop these behaviors all on its own. It uses a reinforcement model, where the AI learns through trial and error, to direct robot hands in grasping and manipulating objects with great precision.[124][125] |

| 2018 | August 7 | | Achievement | Algorithmic team OpenAI Five defeats a team of semi-professional Dota 2 players ranked in the 99.95th percentile in the world, in their second public match in the traditional five-versus-five settings, hosted in San Francisco.[126][127][128][129] |

| 2018 | August 16 | Arboricity | Publication | OpenAI publishes a paper on constant arboricity spectral sparsifiers. The paper shows that every graph is spectrally similar to the union of a constant number of forests.[130] |

| 2018 | September | | Team | Dario Amodei becomes OpenAI's Research Director.[37] |

| 2018 | October 31 | Reinforcement learning | Software release | OpenAI unveils its Random Network Distillation (RND), a prediction-based method for encouraging reinforcement learning agents to explore their environments through curiosity, which for the first time exceeds average human performance on the video game Montezuma’s Revenge.[131] |

| 2018 | November 8 | Reinforcement learning | Education | OpenAI launches Spinning Up, an educational resource designed to teach anyone deep reinforcement learning. The program consists of crystal-clear examples of RL code, educational exercises, documentation, and tutorials.[132][133][134] |

| 2018 | November 9 | Notable comment | Ilya Sutskever gives speech at the AI Frontiers Conference in San Jose, and declares: We (OpenAI) have reviewed progress in the field over the past six years. Our conclusion is near term AGI should be taken as a serious possibility.[135] | |

| 2018 | November 19 | Reinforcement learning | Partnership | OpenAI partners with DeepMind in a new paper that proposes a new method to train reinforcement learning agents in ways that enables them to surpass human performance. The paper, titled Reward learning from human preferences and demonstrations in Atari, introduces a training model that combines human feedback and reward optimization to maximize the knowledge of RL agents.[136] |

| 2018 | December 4 | Reinforcement learning | Research progress | OpenAI announces having discovered that the gradient noise scale, a simple statistical metric, predicts the parallelizability of neural network training on a wide range of tasks.[137] |

| 2018 | December 6 | Reinforcement learning | Software release | OpenAI releases CoinRun, a training environment designed to test the adaptability of reinforcement learning agents.[138][139] |

| 2019 | February 14 | Natural-language generation | Software release | OpenAI unveils its language-generating system called GPT-2, a system able to write news, answer reading comprehension problems, and show promise at tasks like translation.[140] However, neither the data nor the parameters of the model are released, owing to expressed concerns about potential abuse.[141] OpenAI initially tries to communicate the risk posed by this technology.[142] |

| 2019 | February 19 | Safety | Publication | "AI Safety Needs Social Scientists" is published. The paper argues that long-term AI safety research needs social scientists to ensure AI alignment algorithms succeed when actual humans are involved.[143][144] |

| 2019 | March 4 | Reinforcement learning | Software release | OpenAI releases Neural MMO (massively multiplayer online), a multiagent game environment for reinforcement learning agents. The platform supports a large, variable number of agents within a persistent and open-ended task.[145] |

| 2019 | March 6 | | Software release | OpenAI introduces activation atlases, created in collaboration with Google researchers. Activation atlases comprise a new technique for visualizing what interactions between neurons can represent.[146] |

| 2019 | March 11 | | Reorganization | OpenAI announces the creation of OpenAI LP, a new “capped-profit” company owned and controlled by the OpenAI nonprofit organization’s board of directors. The new company is intended to allow OpenAI to rapidly increase its investments in compute and talent while including checks and balances to actualize its mission.[147][148] |

| 2019 | March 21 | | Software release | OpenAI announces progress towards stable and scalable training of energy-based models (EBMs) resulting in better sample quality and generalization ability than existing models.[149] |

| 2019 | March | | Team | Sam Altman leaves his role as the president of Y Combinator to become the chief executive officer of OpenAI.[150][151][17] |

| 2019 | April 23 | Deep learning | Publication | OpenAI publishes paper announcing Sparse Transformers, a deep neural network for learning sequences of data, including text, sound, and images. It utilizes an improved algorithm based on the attention mechanism, being able to extract patterns from sequences 30 times longer than possible previously.[152][153][154] |

| 2019 | April 25 | Neural network | Software release | OpenAI announces MuseNet, a deep neural network able to generate 4-minute musical compositions with 10 different instruments and to combine multiple styles, from country to Mozart to The Beatles. The neural network uses general-purpose unsupervised technology.[155] |

| 2019 | April 27 | | Event hosting | OpenAI hosts the OpenAI Robotics Symposium 2019.[156] |

| 2019 | May | Natural-language generation | Software release | OpenAI releases a limited version of its language-generating system GPT-2. This version is more powerful than the extremely restricted initial release, which was limited over concerns that the system would be abused, though it is still significantly limited compared to the full model.[157] The potential of the new system is recognized by various experts.[158] |

| 2019 | June 13 | Natural-language generation | Coverage | Connor Leahy publishes article entitled The Hacker Learns to Trust which discusses the work of OpenAI, and particularly the potential danger of its language-generating system GPT-2. Leahy highlights: "Because this isn’t just about GPT2. What matters is that at some point in the future, someone will create something truly dangerous and there need to be commonly accepted safety norms before that happens."[142] |

| 2019 | July 22 | | Partnership | OpenAI announces an exclusive partnership with Microsoft. As part of the partnership, Microsoft invests $1 billion in OpenAI, and OpenAI switches to exclusively using Microsoft Azure (Microsoft's cloud solution) as the platform on which it will develop its AI tools. Microsoft will also be OpenAI's "preferred partner for commercializing new AI technologies."[159][160][161][162] |

| 2019 | August 20 | Natural-language generation | Software release | OpenAI announces a plan to release a version of its language-generating system GPT-2, which stirred controversy after its release in February.[163][164][165] |

| 2019 | September 17 | | Research progress | OpenAI announces having observed agents discovering progressively more complex tool use while playing a simple game of hide-and-seek. Through training, the agents were able to build a series of six distinct strategies and counterstrategies, some of which were unknown to be supported by the environment.[166][167] |

| 2019 | October 16 | Neural networks | Research progress | OpenAI announces having trained a pair of neural networks to solve the Rubik’s Cube with a human-like robot hand. The experiment demonstrates that models trained only in simulation can be used to solve a manipulation problem of unprecedented complexity on a real robot.[168][169] |

| 2019 | November 5 | Natural-language generation | Software release | OpenAI releases the largest version (1.5B parameters) of its language-generating system GPT-2 along with code and model weights to facilitate detection of outputs of GPT-2 models.[170] |

| 2019 | November 21 | Reinforcement learning | Software release | OpenAI releases Safety Gym, a suite of environments and tools for measuring progress towards reinforcement learning agents that respect safety constraints while training.[171] |

| 2019 | December 3 | Reinforcement learning | Software release | OpenAI releases Procgen Benchmark, a set of 16 simple-to-use procedurally-generated environments (CoinRun, StarPilot, CaveFlyer, Dodgeball, FruitBot, Chaser, Miner, Jumper, Leaper, Maze, BigFish, Heist, Climber, Plunder, Ninja, and BossFight) which provide a direct measure of how quickly a reinforcement learning agent learns generalizable skills. Procgen Benchmark prevents AI model overfitting.[172][173][174] |

| 2019 | December 4 | | Publication | "Deep Double Descent: Where Bigger Models and More Data Hurt" is submitted to the arXiv. The paper shows that a variety of modern deep learning tasks exhibit a "double-descent" phenomenon where, as the model size increases, performance first gets worse and then gets better.[175] The paper is summarized on the OpenAI blog.[176] MIRI researcher Evan Hubinger writes an explanatory post on the subject on LessWrong and the AI Alignment Forum,[177] and follows up with a post on the AI safety implications.[178] |

| 2019 | December | | Team | Dario Amodei is promoted to OpenAI's Vice President of Research.[37] |

| 2020 | January 30 | Deep learning | Software adoption | OpenAI announces that it will migrate to Facebook's PyTorch machine learning framework for future projects, setting it as its new standard deep learning framework.[179][180] |

| 2020 | February 5 | Safety | Publication | Beth Barnes and Paul Christiano publish on lesswrong.com Writeup: Progress on AI Safety via Debate, a writeup of the research done by the "Reflection-Humans" team at OpenAI in the third and fourth quarters of 2019.[181] |

| 2020 | February 17 | | Coverage | AI reporter Karen Hao at MIT Technology Review publishes an article on OpenAI titled The messy, secretive reality behind OpenAI’s bid to save the world, which suggests the company is abandoning its commitment to transparency in order to outpace competitors.[182] In response, Elon Musk criticizes OpenAI, saying it lacks transparency.[183] On his Twitter account, Musk writes "I have no control & only very limited insight into OpenAI. Confidence in Dario for safety is not high", alluding to OpenAI Vice President of Research Dario Amodei.[184] |

| 2020 | May 28 (release), June and July (discussion and exploration) | Natural-language generation | Software release | OpenAI releases the natural language model GPT-3 on GitHub[185] and uploads to the arXiv the paper Language Models are Few-Shot Learners, explaining how GPT-3 was trained and how it performs.[186] Games, websites, and chatbots based on GPT-3 are created for exploratory purposes in the next two months (mostly by people unaffiliated with OpenAI), with a general takeaway that GPT-3 performs significantly better than GPT-2 and past natural language models.[187][188][189][190] Commentators also note many weaknesses, such as trouble with arithmetic because of incorrect pattern matching, trouble with multi-step logical reasoning even though it can do the individual steps separately, inability to identify that a question is nonsense, inability to identify that it does not know the answer to a question, and picking up of racist and sexist content when trained on corpora that contain some such content.[191][192][193] |

Meta information on the timeline

How the timeline was built

The initial version of the timeline was written by Issa Rice. It has been expanded considerably by Sebastian.

Funding information for this timeline is available.

What the timeline is still missing

Timeline update strategy

- https://www.google.com/search?q=site:nytimes.com+%22OpenAI%22

- https://blog.OpenAI.com/ (but check to see if the announcement of a blog post is covered by other sources)

See also

- Timeline of DeepMind

- Timeline of Future of Humanity Institute

- Timeline of Centre for the Study of Existential Risk

External links

References

- ↑ "OpenAI". wikipediaviews.org. Retrieved 1 March 2020.

- ↑ "OpenAI". trends.google.com. Retrieved 1 March 2020.

- ↑ Samuel Gibbs (October 27, 2014). "Elon Musk: artificial intelligence is our biggest existential threat". The Guardian. Retrieved July 25, 2017.

- ↑ "AeroAstro Centennial Webcast". Retrieved July 25, 2017.

The high point of the MIT Aeronautics and Astronautics Department's 2014 Centennial celebration is the October 22-24 Centennial Symposium

- ↑ "Machine intelligence, part 1". Sam Altman. Retrieved July 27, 2017.

- ↑ Brockman, Greg (May 6, 2015). "Leaving Stripe". Greg Brockman on Svbtle. Retrieved May 6, 2018.

- ↑ Carson, Biz (May 6, 2015). "One of the first employees of $3.5 billion startup Stripe is leaving to form his own company". Business Insider. Retrieved May 6, 2018.

- ↑ 8.0 8.1 "My path to OpenAI". Greg Brockman on Svbtle. May 3, 2016. Retrieved May 8, 2018.

- ↑ Matt Weinberger (June 4, 2015). "Head of Silicon Valley's most important startup farm says we're in a 'mega bubble' that won't last". Business Insider. Retrieved July 27, 2017.

- ↑ John Markoff (December 11, 2015). "Artificial-Intelligence Research Center Is Founded by Silicon Valley Investors". The New York Times. Retrieved July 26, 2017.

The organization, to be named OpenAI, will be established as a nonprofit, and will be based in San Francisco.

- ↑ "Introducing OpenAI". OpenAI Blog. December 11, 2015. Retrieved July 26, 2017.

- ↑ Drew Olanoff (December 11, 2015). "Artificial Intelligence Nonprofit OpenAI Launches With Backing From Elon Musk And Sam Altman". TechCrunch. Retrieved March 2, 2018.

- ↑ "Wojciech Zaremba". linkedin.com. Retrieved 28 February 2020.

- ↑ "OpenAI: Revision history". wikipedia.org. Retrieved 6 April 2020.

- ↑ Priestly, Theo (December 11, 2015). "Elon Musk And Peter Thiel Launch OpenAI, A Non-Profit Artificial Intelligence Research Company". Forbes. Retrieved 8 July 2019.

- ↑ "Ilya Sutskever". AI Watch. April 8, 2018. Retrieved May 6, 2018.

- ↑ 17.0 17.1 17.2 17.3 17.4 "Information for OpenAI". orgwatch.issarice.com. Retrieved 5 May 2020.

- ↑ "AMA: the OpenAI Research Team • r/MachineLearning". reddit. Retrieved May 5, 2018.

- ↑ Salimans, Tim; Kingma, Diederik P. "Weight Normalization: A Simple Reparameterization to Accelerate Training of Deep Neural Networks". arxiv.org. Retrieved 27 March 2020.

- ↑ Brockman, Greg (March 22, 2017). "Team++". OpenAI Blog. Retrieved May 6, 2018.

- ↑ "Ian Goodfellow". linkedin.com. Retrieved 24 April 2020.

- ↑ Sutskever, Ilya (March 20, 2017). "Welcome, Pieter and Shivon!". OpenAI Blog. Retrieved May 6, 2018.

- ↑ "OpenAI Gym Beta". OpenAI Blog. March 20, 2017. Retrieved March 2, 2018.

- ↑ "Inside OpenAI, Elon Musk's Wild Plan to Set Artificial Intelligence Free". WIRED. April 27, 2016. Retrieved March 2, 2018.

This morning, OpenAI will release its first batch of AI software, a toolkit for building artificially intelligent systems by way of a technology called "reinforcement learning"

- ↑ Shead, Sam (April 28, 2016). "Elon Musk's $1 billion AI company launches a 'gym' where developers train their computers". Business Insider. Retrieved March 3, 2018.

- ↑ Miyato, Takeru; Dai, Andrew M.; Goodfellow, Ian. "Adversarial Training Methods for Semi-Supervised Text Classification". arxiv.org. Retrieved 28 March 2020.

- ↑ Houthooft, Rein; Chen, Xi; Duan, Yan; Schulman, John; De Turck, Filip; Abbeel, Pieter. "VIME: Variational Information Maximizing Exploration". arxiv.org. Retrieved 27 March 2020.

- ↑ Brockman, Greg; Cheung, Vicki; Pettersson, Ludwig; Schneider, Jonas; Schulman, John; Tang, Jie; Zaremba, Wojciech. "OpenAI Gym". arxiv.org. Retrieved 27 March 2020.

- ↑ "OPENAI GYM". theconstructsim.com. Retrieved 16 May 2020.

- ↑ Salimans, Tim; Goodfellow, Ian; Zaremba, Wojciech; Cheung, Vicki; Radford, Alec; Chen, Xi. "Improved Techniques for Training GANs". arxiv.org. Retrieved 27 March 2020.

- ↑ "InfoGAN: Interpretable Representation Learning by Information Maximizing Generative Adversarial Nets". arxiv.org. Retrieved 27 March 2020.

- ↑ Kingma, Diederik P.; Salimans, Tim; Jozefowicz, Rafal; Chen, Xi; Sutskever, Ilya; Welling, Max. "Improving Variational Inference with Inverse Autoregressive Flow". arxiv.org. Retrieved 28 March 2020.

- ↑ "Generative Models". openai.com. Retrieved 5 April 2020.

- ↑ "[1606.06565] Concrete Problems in AI Safety". June 21, 2016. Retrieved July 25, 2017.

- ↑ Karnofsky, Holden (June 23, 2016). "Concrete Problems in AI Safety". Retrieved April 18, 2020.

- ↑ "Dario Amodei - Research Scientist @ OpenAI". Crunchbase. Retrieved May 6, 2018.

- ↑ 37.0 37.1 37.2 "Dario Amodei". linkedin.com. Retrieved 29 February 2020.

- ↑ Metz, Cade (July 29, 2016). "How To Fool AI Into Seeing Something That Isn't There". WIRED. Retrieved March 3, 2018.

- ↑ "Special Projects". openai.com. Retrieved 5 April 2020.

- ↑ "NVIDIA Brings DGX-1 AI Supercomputer in a Box to OpenAI". The Official NVIDIA Blog. August 15, 2016. Retrieved May 5, 2018.

- ↑ Vanian, Jonathan (August 15, 2016). "Nvidia Just Gave A Supercomputer to Elon Musk-backed Artificial Intelligence Group". Fortune. Retrieved May 5, 2018.

- ↑ De Jesus, Cecille (August 17, 2016). "Elon Musk's OpenAI is Using Reddit to Teach An Artificial Intelligence How to Speak". Futurism. Retrieved May 5, 2018.

- ↑ "Infrastructure for Deep Learning". openai.com. Retrieved 28 March 2020.

- ↑ Christiano, Paul; Shah, Zain; Mordatch, Igor; Schneider, Jonas; Blackwell, Trevor; Tobin, Joshua; Abbeel, Pieter; Zaremba, Wojciech. "Transfer from Simulation to Real World through Learning Deep Inverse Dynamics Model". arxiv.org. Retrieved 28 March 2020.

- ↑ Papernot, Nicolas; Abadi, Martín; Erlingsson, Úlfar; Goodfellow, Ian; Talwar, Kunal. "Semi-supervised Knowledge Transfer for Deep Learning from Private Training Data". arxiv.org. Retrieved 28 March 2020.

- ↑ Wu, Yuhuai; Burda, Yuri; Salakhutdinov, Ruslan; Grosse, Roger. "On the Quantitative Analysis of Decoder-Based Generative Models". arxiv.org. Retrieved 28 March 2020.

- ↑ Statt, Nick (November 15, 2016). "Microsoft is partnering with Elon Musk's OpenAI to protect humanity's best interests". The Verge. Retrieved March 2, 2018.

- ↑ Metz, Cade. "The Next Big Front in the Battle of the Clouds Is AI Chips. And Microsoft Just Scored a Win". WIRED. Retrieved March 2, 2018.

According to Altman and Harry Shum, head of Microsoft new AI and research group, OpenAI's use of Azure is part of a larger partnership between the two companies. In the future, Altman and Shum tell WIRED, the two companies may also collaborate on research. "We're exploring a couple of specific projects," Altman says. "I'm assuming something will happen there." That too will require some serious hardware.

- ↑ "universe". GitHub. Retrieved March 1, 2018.

- ↑ John Mannes (December 5, 2016). "OpenAI's Universe is the fun parent every artificial intelligence deserves". TechCrunch. Retrieved March 2, 2018.

- ↑ "Elon Musk's Lab Wants to Teach Computers to Use Apps Just Like Humans Do". WIRED. Retrieved March 2, 2018.

- ↑ "OpenAI Universe". Hacker News. Retrieved May 5, 2018.

- ↑ "AI Alignment". Paul Christiano. May 13, 2017. Retrieved May 6, 2018.

- ↑ "Team Update". OpenAI Blog. March 22, 2017. Retrieved May 6, 2018.

- ↑ "Open Philanthropy Project donations made (filtered to cause areas matching AI safety)". Retrieved July 27, 2017.

- ↑ "OpenAI — General Support". Open Philanthropy Project. December 15, 2017. Retrieved May 6, 2018.

- ↑ "Pinboard on Twitter". Twitter. Retrieved May 8, 2018.

What the actual fuck… “Open Philanthropy” dude gives a $30M grant to his roommate / future brother-in-law. Trumpy!

- ↑ "OpenAI makes humanity less safe". Compass Rose. April 13, 2017. Retrieved May 6, 2018.

- ↑ "OpenAI makes humanity less safe". LessWrong. Retrieved May 6, 2018.

- ↑ "OpenAI donations received". Retrieved May 6, 2018.

- ↑ Naik, Vipul. "I'm having a hard time understanding the rationale...". Retrieved May 8, 2018.

- ↑ "Evolution Strategies as a Scalable Alternative to Reinforcement Learning". openai.com. Retrieved 5 April 2020.

- ↑ "The messy, secretive reality behind OpenAI's bid to save the world". technologyreview.com. Retrieved 28 February 2020.

- ↑ Simoneaux, Brent; Stegman, Casey. "Open Source Stories: The People Behind OpenAI". Retrieved May 5, 2018. In the HTML source, last-publish-date is shown as Tue, 25 Apr 2017 04:00:00 GMT as of 2018-05-05.

- ↑ "Profile of the people behind OpenAI • r/OpenAI". reddit. April 7, 2017. Retrieved May 5, 2018.

- ↑ "The People Behind OpenAI". Hacker News. July 23, 2017. Retrieved May 5, 2018.

- ↑ "Unsupervised Sentiment Neuron". openai.com. Retrieved 5 April 2020.

- ↑ John Mannes (April 7, 2017). "OpenAI sets benchmark for sentiment analysis using an efficient mLSTM". TechCrunch. Retrieved March 2, 2018.

- ↑ John Mannes (April 7, 2017). "OpenAI sets benchmark for sentiment analysis using an efficient mLSTM". TechCrunch. Retrieved March 2, 2018.

- ↑ "OpenAI Just Beat Google DeepMind at Atari With an Algorithm From the 80s". singularityhub.com. Retrieved 29 June 2019.

- ↑ "Roboschool". openai.com. Retrieved 5 April 2020.

- ↑ "Robots that Learn". openai.com. Retrieved 5 April 2020.

- ↑ "OpenAI Baselines: DQN". OpenAI Blog. November 28, 2017. Retrieved May 5, 2018.

- ↑ "OpenAI/baselines". GitHub. Retrieved May 5, 2018.

- ↑ "[1706.03741] Deep reinforcement learning from human preferences". Retrieved March 2, 2018.

- ↑ gwern (June 3, 2017). "June 2017 news - Gwern.net". Retrieved March 2, 2018.

- ↑ "Two Giants of AI Team Up to Head Off the Robot Apocalypse". WIRED. Retrieved March 2, 2018.

A new paper from the two organizations on a machine learning system that uses pointers from humans to learn a new task, rather than figuring out its own—potentially unpredictable—approach, follows through on that. Amodei says the project shows it's possible to do practical work right now on making machine learning systems less able to produce nasty surprises.

- ↑ "Faster Physics in Python". openai.com. Retrieved 5 April 2020.

- ↑ "Learning from Human Preferences". OpenAI.com. Retrieved 29 June 2019.

- ↑ "Better Exploration with Parameter Noise". openai.com. Retrieved 5 April 2020.

- ↑ Jordan Crook (August 12, 2017). "OpenAI bot remains undefeated against world's greatest Dota 2 players". TechCrunch. Retrieved March 2, 2018.

- ↑ "Did Elon Musk's AI champ destroy humans at video games? It's complicated". The Verge. August 14, 2017. Retrieved March 2, 2018.

- ↑ "Elon Musk's $1 billion AI startup made a surprise appearance at a $24 million video game tournament — and crushed a pro gamer". Business Insider. August 11, 2017. Retrieved March 3, 2018.

- ↑ Cade Metz (August 13, 2017). "Teaching A.I. Systems to Behave Themselves". The New York Times. Retrieved May 5, 2018.

- ↑ "OpenAI Baselines: ACKTR & A2C". openai.com. Retrieved 5 April 2020.

- ↑ "[1709.04326] Learning with Opponent-Learning Awareness". Retrieved March 2, 2018.

- ↑ gwern (August 16, 2017). "September 2017 news - Gwern.net". Retrieved March 2, 2018.

- ↑ "AI Sumo Wrestlers Could Make Future Robots More Nimble". WIRED. Retrieved March 3, 2018.

- ↑ Appolonia, Alexandra; Gmoser, Justin (October 20, 2017). "Elon Musk's artificial intelligence company created virtual robots that can sumo wrestle and play soccer". Business Insider. Retrieved March 3, 2018.

- ↑ Cade Metz (November 6, 2017). "A.I. Researchers Leave Elon Musk Lab to Begin Robotics Start-Up". The New York Times. Retrieved May 5, 2018.

- ↑ "Block-Sparse GPU Kernels". openai.com. Retrieved 5 April 2020.

- ↑ Vincent, James (December 1, 2017). "Artificial intelligence isn't as clever as we think, but that doesn't stop it being a threat". The Verge. Retrieved March 2, 2018.

- ↑ "[1802.07228] The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation". Retrieved February 24, 2018.

- ↑ "Preparing for Malicious Uses of AI". OpenAI Blog. February 21, 2018. Retrieved February 24, 2018.

- ↑ Malicious AI Report. "The Malicious Use of Artificial Intelligence". Malicious AI Report. Retrieved February 24, 2018.

- ↑ 96.0 96.1 "Elon Musk leaves board of AI safety group to avoid conflict of interest with Tesla". The Verge. February 21, 2018. Retrieved March 2, 2018.

- ↑ Simonite, Tom. "Why Artificial Intelligence Researchers Should Be More Paranoid". WIRED. Retrieved March 2, 2018.

- ↑ "OpenAI Supporters". OpenAI Blog. February 21, 2018. Retrieved March 1, 2018.

- ↑ "Ingredients for Robotics Research". openai.com. Retrieved 5 April 2020.

- ↑ "OpenAI Hackathon". OpenAI Blog. February 24, 2018. Retrieved March 1, 2018.

- ↑ "Report from the OpenAI Hackathon". OpenAI Blog. March 15, 2018. Retrieved May 5, 2018.

- ↑ "OpenAI Retro Contest". OpenAI. Retrieved May 5, 2018.

- ↑ "Retro Contest". OpenAI Blog. April 13, 2018. Retrieved May 5, 2018.

- ↑ "OpenAI/universe". GitHub. Retrieved May 5, 2018.

- ↑ "OpenAI Charter". OpenAI Blog. April 9, 2018. Retrieved May 5, 2018.

- ↑ wunan (April 9, 2018). "OpenAI charter". LessWrong. Retrieved May 5, 2018.

- ↑ "[D] OpenAI Charter • r/MachineLearning". reddit. Retrieved May 5, 2018.

- ↑ "OpenAI Charter". Hacker News. Retrieved May 5, 2018.

- ↑ Tristan Greene (April 10, 2018). "The AI company Elon Musk co-founded intends to create machines with real intelligence". The Next Web. Retrieved May 5, 2018.

- ↑ Cade Metz (April 19, 2018). "A.I. Researchers Are Making More Than $1 Million, Even at a Nonprofit". The New York Times. Retrieved May 5, 2018.

- ↑ ""A.I. Researchers Are Making More Than $1 Million, Even at a Nonprofit [OpenAI]" • r/reinforcementlearning". reddit. Retrieved May 5, 2018.

- ↑ "gwern comments on A.I. Researchers Are Making More Than $1M, Even at a Nonprofit". Hacker News. Retrieved May 5, 2018.

- ↑ "[1805.00899] AI safety via debate". Retrieved May 5, 2018.

- ↑ Irving, Geoffrey; Amodei, Dario (May 3, 2018). "AI Safety via Debate". OpenAI Blog. Retrieved May 5, 2018.

- ↑ "AI and Compute". openai.com. Retrieved 5 April 2020.

- ↑ "Improving Language Understanding with Unsupervised Learning". openai.com. Retrieved 5 April 2020.

- ↑ Gershgorn, Dave. "OpenAI built gaming bots that can work as a team with inhuman precision". qz.com. Retrieved 14 June 2019.

- ↑ Knight, Will. "A team of AI algorithms just crushed humans in a complex computer game". technologyreview.com. Retrieved 14 June 2019.

- ↑ "OpenAI's bot can now defeat skilled Dota 2 teams". venturebeat.com. Retrieved 14 June 2019.

- ↑ Papadopoulos, Loukia. "Bill Gates Praises Elon Musk-Founded OpenAI's Latest Dota 2 Win as "Huge Milestone" in Field". interestingengineering.com. Retrieved 14 June 2019.

- ↑ Vincent, James. "Elon Musk, DeepMind founders, and others sign pledge to not develop lethal AI weapon systems". theverge.com. Retrieved 1 June 2019.

- ↑ Locklear, Mallory. "DeepMind, Elon Musk and others pledge not to make autonomous AI weapons". engadget.com. Retrieved 1 June 2019.

- ↑ Quach, Katyanna. "Elon Musk, his arch nemesis DeepMind swear off AI weapons". theregister.co.uk. Retrieved 1 June 2019.

- ↑ "OpenAI's 'state-of-the-art' system gives robots humanlike dexterity". venturebeat.com. Retrieved 14 June 2019.

- ↑ Coldewey, Devin. "OpenAI's robotic hand doesn't need humans to teach it human behaviors". techcrunch.com. Retrieved 14 June 2019.

- ↑ Whitwam, Ryan. "OpenAI Bots Crush the Best Human Dota 2 Players in the World". extremetech.com. Retrieved 15 June 2019.

- ↑ Quach, Katyanna. "OpenAI bots thrash team of Dota 2 semi-pros, set eyes on mega-tourney". theregister.co.uk. Retrieved 15 June 2019.

- ↑ Savov, Vlad. "The OpenAI Dota 2 bots just defeated a team of former pros". theverge.com. Retrieved 15 June 2019.

- ↑ Rigg, Jamie. "'Dota 2' veterans steamrolled by AI team in exhibition match". engadget.com. Retrieved 15 June 2019.

- ↑ Chu, Timothy; Cohen, Michael B.; Pachocki, Jakub W.; Peng, Richard. "Constant Arboricity Spectral Sparsifiers". arxiv.org. Retrieved 26 March 2020.

- ↑ "Reinforcement Learning with Prediction-Based Rewards". openai.com. Retrieved 5 April 2020.

- ↑ "Spinning Up in Deep RL". OpenAI.com. Retrieved 15 June 2019.

- ↑ Ramesh, Prasad. "OpenAI launches Spinning Up, a learning resource for potential deep learning practitioners". hub.packtpub.com. Retrieved 15 June 2019.

- ↑ Johnson, Khari. "OpenAI launches reinforcement learning training to prepare for artificial general intelligence". flipboard.com. Retrieved 15 June 2019.

- ↑ "OpenAI Founder: Short-Term AGI Is a Serious Possibility". syncedreview.com. Retrieved 15 June 2019.

- ↑ Rodriguez, Jesus. "What's New in Deep Learning Research: OpenAI and DeepMind Join Forces to Achieve Superhuman Performance in Reinforcement Learning". towardsdatascience.com. Retrieved 29 June 2019.

- ↑ "How AI Training Scales". openai.com. Retrieved 4 April 2020.

- ↑ "OpenAI teaches AI teamwork by playing hide-and-seek". venturebeat.com. Retrieved 24 February 2020.

- ↑ "OpenAI's CoinRun tests the adaptability of reinforcement learning agents". venturebeat.com. Retrieved 24 February 2020.

- ↑ "An AI helped us write this article". vox.com. Retrieved 28 June 2019.

- ↑ Lowe, Ryan. "OpenAI's GPT-2: the model, the hype, and the controversy". towardsdatascience.com. Retrieved 10 July 2019.

- ↑ 142.0 142.1 "The Hacker Learns to Trust". medium.com. Retrieved 5 May 2020.

- ↑ Irving, Geoffrey; Askell, Amanda. "AI Safety Needs Social Scientists". doi:10.23915/distill.00014.

- ↑ "AI Safety Needs Social Scientists". openai.com. Retrieved 5 April 2020.

- ↑ "Neural MMO: A Massively Multiagent Game Environment". openai.com. Retrieved 5 April 2020.

- ↑ "Introducing Activation Atlases". openai.com. Retrieved 5 April 2020.

- ↑ Johnson, Khari. "OpenAI launches new company for funding safe artificial general intelligence". venturebeat.com. Retrieved 15 June 2019.

- ↑ Trazzi, Michaël. "Considerateness in OpenAI LP Debate". medium.com. Retrieved 15 June 2019.

- ↑ "Implicit Generation and Generalization Methods for Energy-Based Models". openai.com. Retrieved 5 April 2020.

- ↑ "Sam Altman's leap of faith". techcrunch.com. Retrieved 24 February 2020.

- ↑ "Y Combinator president Sam Altman is stepping down amid a series of changes at the accelerator". techcrunch.com. Retrieved 24 February 2020.

- ↑ Alford, Anthony. "OpenAI Introduces Sparse Transformers for Deep Learning of Longer Sequences". infoq.com. Retrieved 15 June 2019.

- ↑ "OpenAI Sparse Transformer Improves Predictable Sequence Length by 30x". medium.com. Retrieved 15 June 2019.

- ↑ "Generative Modeling with Sparse Transformers". OpenAI.com. Retrieved 15 June 2019.

- ↑ "MuseNet". OpenAI.com. Retrieved 15 June 2019.

- ↑ "OpenAI Robotics Symposium 2019". OpenAI.com. Retrieved 14 June 2019.

- ↑ "A poetry-writing AI has just been unveiled. It's ... pretty good.". vox.com. Retrieved 11 July 2019.

- ↑ Vincent, James. "OpenAI's new multitalented AI writes, translates, and slanders". theverge.com. Retrieved 11 July 2019.

- ↑ "Microsoft Invests In and Partners with OpenAI to Support Us Building Beneficial AGI". OpenAI. July 22, 2019. Retrieved July 26, 2019.

- ↑ "OpenAI forms exclusive computing partnership with Microsoft to build new Azure AI supercomputing technologies". Microsoft. July 22, 2019. Retrieved July 26, 2019.

- ↑ Chan, Rosalie (July 22, 2019). "Microsoft is investing $1 billion in OpenAI, the Elon Musk-founded company that's trying to build human-like artificial intelligence". Business Insider. Retrieved July 26, 2019.

- ↑ Sawhney, Mohanbir (July 24, 2019). "The Real Reasons Microsoft Invested In OpenAI". Forbes. Retrieved July 26, 2019.

- ↑ "OpenAI releases curtailed version of GPT-2 language model". venturebeat.com. Retrieved 24 February 2020.

- ↑ "OpenAI Just Released an Even Scarier Fake News-Writing Algorithm". interestingengineering.com. Retrieved 24 February 2020.

- ↑ "OPENAI JUST RELEASED A NEW VERSION OF ITS FAKE NEWS-WRITING AI". futurism.com. Retrieved 24 February 2020.

- ↑ "Emergent Tool Use from Multi-Agent Interaction". openai.com. Retrieved 4 April 2020.

- ↑ "Emergent Tool Use From Multi-Agent Autocurricula". arxiv.org. Retrieved 4 April 2020.

- ↑ "Solving Rubik's Cube with a Robot Hand". arxiv.org. Retrieved 4 April 2020.

- ↑ "Solving Rubik's Cube with a Robot Hand". openai.com. Retrieved 4 April 2020.

- ↑ "GPT-2: 1.5B Release". openai.com. Retrieved 5 April 2020.

- ↑ "Safety Gym". openai.com. Retrieved 5 April 2020.

- ↑ "Procgen Benchmark". openai.com. Retrieved 2 March 2020.

- ↑ "OpenAI's Procgen Benchmark prevents AI model overfitting". venturebeat.com. Retrieved 2 March 2020.

- ↑ "GENERALIZATION IN REINFORCEMENT LEARNING – EXPLORATION VS EXPLOITATION". analyticsindiamag.com. Retrieved 2 March 2020.

- ↑ Nakkiran, Preetum; Kaplun, Gal; Bansal, Yamini; Yang, Tristan; Barak, Boaz; Sutskever, Ilya. "Deep Double Descent: Where Bigger Models and More Data Hurt". arxiv.org. Retrieved 5 April 2020.

- ↑ "Deep Double Descent". OpenAI. December 5, 2019. Retrieved May 23, 2020.

- ↑ Hubinger, Evan (December 5, 2019). "Understanding "Deep Double Descent"". LessWrong. Retrieved 24 May 2020.

- ↑ Hubinger, Evan (December 18, 2019). "Inductive biases stick around". Retrieved 24 May 2020.

- ↑ "OpenAI sets PyTorch as its new standard deep learning framework". jaxenter.com. Retrieved 23 February 2020.

- ↑ "OpenAI goes all-in on Facebook's Pytorch machine learning framework". venturebeat.com. Retrieved 23 February 2020.

- ↑ "Writeup: Progress on AI Safety via Debate". lesswrong.com. Retrieved 16 May 2020.

- ↑ Hao, Karen. "The messy, secretive reality behind OpenAI's bid to save the world. The AI moonshot was founded in the spirit of transparency. This is the inside story of how competitive pressure eroded that idealism.". Technology Review.

- ↑ Holmes, Aaron. "Elon Musk just criticized the artificial intelligence company he helped found — and said his confidence in the safety of its AI is 'not high'". businessinsider.com. Retrieved 29 February 2020.

- ↑ "Elon Musk". twitter.com. Retrieved 29 February 2020.

- ↑ "GPT-3 on GitHub". OpenAI. Retrieved July 19, 2020.

- ↑ "Language Models are Few-Shot Learners". May 28, 2020. Retrieved July 19, 2020.

- ↑ "Nick Cammarata on Twitter: GPT-3 as therapist". July 14, 2020. Retrieved July 19, 2020.

- ↑ Gwern (June 19, 2020). "GPT-3 Creative Fiction". Retrieved July 19, 2020.

- ↑ Walton, Nick (July 14, 2020). "AI Dungeon: Dragon Model Upgrade. You can now play AI Dungeon with one of the most powerful AI models in the world.". Retrieved July 19, 2020.

- ↑ Shameem, Sharif (July 13, 2020). "Sharif Shameem on Twitter: With GPT-3, I built a layout generator where you just describe any layout you want, and it generates the JSX code for you.". Twitter. Retrieved July 19, 2020.

- ↑ Lacker, Kevin (July 6, 2020). "Giving GPT-3 a Turing Test". Retrieved July 19, 2020.

- ↑ Woolf, Max (July 18, 2020). "Tempering Expectations for GPT-3 and OpenAI's API". Retrieved July 19, 2020.

- ↑ Asparouhov, Delian (July 17, 2020). "Quick thoughts on GPT3". Retrieved July 19, 2020.