Anthropic Timeline: Claude Models, Safety Research, Milestones

This is a timeline of Anthropic, an AI safety and research company focused on developing reliable and ethical artificial intelligence systems. Founded in 2021, it is known for creating Claude, a family of AI assistants.

Sample questions

The following are some interesting questions that can be answered by reading this timeline:

- What are some key hiring and leadership appointments in Anthropic’s history?

  - Sort the full timeline by "Event type" and look for the group of rows with value "Team".

  - You will see high-profile hires and leadership appointments, reflecting research expansions and organizational changes.

- What are the key funding milestones for Anthropic?

  - Sort the full timeline by "Event type" and look for the group of rows with value "Funding".

  - You’ll see major funding rounds, with key details including investor names (Google, Amazon, Lightspeed), valuation jumps, and strategic outcomes like cloud partnerships and model development. Each entry highlights how funding advanced Anthropic’s mission and market position.

- What are some notable research publications that Anthropic has released?

  - Sort the full timeline by "Event type" and look for the group of rows with value "Research publication".

  - You will see a list of major research publications showing how Anthropic’s research has advanced AI safety, interpretability, long-context performance, and retrieval quality.

- What are the key partnership announcements in Anthropic’s history?

  - Sort the full timeline by "Event type" and look for the group of rows with value "Partnership".

  - You will see a list of major partnerships, showing how Anthropic expanded Claude into enterprise, telecom, public sector, and defense ecosystems through strategic alliances.

- What policy milestones has Anthropic released over the years?

  - Sort the full timeline by "Event type" and look for the group of rows with value "Policy".

  - You will see a list of major policy announcements, safety frameworks, governance updates, and regulatory positions. The results show how Anthropic’s policies evolved to address AI safety, elections, security, red teaming, and responsible scaling.

- What major legal challenges has Anthropic faced over the years?

  - Sort the full timeline by "Event type" and look for the group of rows with value "Legal".

  - You will see a list of major legal disputes, including copyright lawsuits, data-scraping allegations, court rulings, and settlements.

- What key initiatives has Anthropic launched to ensure responsible AI development and its broader societal impact?

  - Sort the full timeline by "Event type" and look for the group of rows with value "Initiative".

  - You’ll see a number of initiatives, including governance (LTBT), AI welfare research, economic advisory councils, and social good programs.

- Other events are described under the following types: "Background", "Certification", "Company founding", "Expansion", "Infrastructure", and "Report release".

Big picture

| Time period | Development summary | More details |

|---|---|---|

| 2016-2020 | Prelude | Before Anthropic's official founding, many of its future team members already conduct pioneering work in AI safety and alignment research at organizations like OpenAI.[1][2] During this period, Dario Amodei (who would become Anthropic's CEO) leads safety teams at OpenAI, publishing influential papers on concrete problems in AI safety and alignment.[3] This era sees the development of key techniques like reinforcement learning from human feedback (RLHF) that would later become foundational to Anthropic's approach.[4] By late 2020, differences in research priorities and perspectives on AI development lead several researchers to plan a new organization more singularly focused on AI safety research and responsible AI system development. |

| 2021-2022 | Foundation period | Anthropic is founded in January 2021 by a team led by Dario Amodei and Daniela Amodei, along with several other researchers who previously worked at OpenAI. The company is established with a core mission of conducting AI safety research and developing reliable, interpretable, and steerable AI systems. During this initial period, Anthropic raises significant early funding (over $700 million), including investments from Dustin Moskovitz, Eric Schmidt, and others, allowing the company to build its research team and infrastructure. |

| 2022-2023 | Claude development and public introduction | This period sees the development and initial release of Claude, Anthropic's AI assistant. The first version of Claude is made available to select partners and researchers in late 2022, with broader beta access in early 2023. During this time, Anthropic focuses on developing its Constitutional AI approach, which uses a set of principles (a "constitution") and reinforcement learning from AI feedback (RLAIF) to guide AI behavior, reducing reliance on large-scale human feedback. The company publishes important research papers on AI alignment and safety while continuing to refine Claude's capabilities. |

| 2023-2025 | Growth and product expansion | Characterized by major product launches and significant growth, this period includes the public release of Claude 2 in July 2023, followed by the Claude API becoming widely available. In March 2024, Anthropic launches the Claude 3 model family (Haiku, Sonnet, and Opus), representing a significant advancement in capabilities. The company secures major partnerships and additional funding rounds, including investments from Google, Amazon, and others. Anthropic expands its product offerings beyond the chatbot interface to include the Claude API for developers and begins focusing on enterprise applications. |

Full timeline

Inclusion criteria

We include:

- Company-focused events, including major funding rounds, partnerships, policy, product releases, infrastructure, legal issues, acquisitions, hiring milestones, and key research publications.

We exclude:

- Product-specific technical details, such as model versions, benchmarks, context expansions, features, and capability changes. See Timeline of Claude.

- Eligible significant events for which no date could be found.

Timeline

| Year | Event type | Details |

|---|---|---|

| 2019 | Team | The Amodei siblings leave OpenAI due to directional differences regarding OpenAI's ventures with Microsoft.[5] |

| 2021 (January) | Company founding | Anthropic is founded by former OpenAI executives including Dario and Daniela Amodei. The company is classed as a Delaware public-benefit corporation, aiming to create reliable, interpretable, and steerable AI systems.[5][6][7] |

| 2021 (May 28) | Funding | In its first funding round, a Series A, Anthropic raises $124 million to develop reliable and steerable AI systems. The funding supports computationally intensive research to advance general AI capabilities. The round is led by technology investor and Skype co-founder Jaan Tallinn, with participation from James McClave, Dustin Moskovitz, the Center for Emerging Risk Research, and Eric Schmidt. By this time, Anthropic is actively hiring researchers, engineers, and operational experts to further its mission.[6][7][8] |

| 2022 (April 29) | Funding | Anthropic raises $580 million in a Series B funding round to advance steerable, interpretable, and robust AI systems. Since its 2021 founding, the company has made progress in interpretability by reverse engineering small language models, improving steerability with reinforcement learning, and analyzing AI performance shifts. The funding supports infrastructure for safer AI models and policy research. CEO Dario Amodei emphasizes scaling AI safety, while President Daniela Amodei highlights governance and culture. The round is led by Sam Bankman-Fried and includes investors like Caroline Ellison and Jaan Tallinn.[7][5][9] |

| 2022 (Summer) | Product | Anthropic finishes training the first version of Claude but does not release it, citing the need for further internal safety testing.[5] |

| 2022 (December) | Funding | Anthropic receives a total of $700 million in funding by the end of 2022, including $500 million from Alameda Research.[5] |

| 2023 (February 3) | Funding | Google invests $300 million in Anthropic, securing a 10% stake and initiating a strategic partnership. The collaboration involves Anthropic using Google Cloud’s advanced GPU and TPU infrastructure to scale and train its AI systems, including its assistant, Claude. Founded by ex-OpenAI researchers, Anthropic emphasizes AI safety and interpretability through methods like Constitutional AI. CEO Dario Amodei states that Google’s support would enable broader deployment of Anthropic’s models and strengthen their shared goal of building trustworthy AI. This alliance positions both firms to compete more effectively in the rapidly advancing generative AI landscape.[10][11][12][13][14][15] |

| 2023 (March 8) | Policy | Anthropic outlines its views on AI safety and rapid AI progress. The company argues that AI's impact may rival the industrial and scientific revolutions and could emerge within a decade. It emphasizes concerns about AI alignment, safety risks, and potential disruptions to society. Anthropic believes AI progress is driven by increasing computation, scaling laws, and improved algorithms, leading to systems surpassing human capabilities. It advocates for a multi-faceted, empirical approach to AI safety, urging collaboration among researchers, policymakers, and institutions to mitigate risks while ensuring AI’s responsible and beneficial development.[16] |

| 2023 (March) | Product | Anthropic introduces Claude, a next-generation AI assistant designed to be helpful, honest, and harmless. After testing with partners like Notion, Quora, and DuckDuckGo, Claude becomes broadly available through a chat interface and API. It excels at tasks such as summarization, search, writing, Q&A, and coding. Two versions are offered: Claude, a high-performance model, and Claude Instant, a faster, cost-effective option. Partners highlight Claude’s capabilities: Quora integrates it into Poe for conversational AI, Juni Learning uses it for online tutoring, and Notion enhances productivity with Claude-powered AI. DuckDuckGo employs it for search answers, while Robin AI leverages it for legal document analysis. AssemblyAI utilizes Claude to improve audio transcription and conversation intelligence. Anthropic continues refining Claude for more reliable, user-friendly AI applications.[17][6][7][5] Quora’s Poe app would see success with Claude, highlighting its strengths in creative writing and image understanding, with millions of daily user interactions.[18] |

| 2023 (April 20) | Policy | Anthropic announces its support for increasing federal funding for the National Institute of Standards and Technology (NIST) to enhance AI measurement and standards. Effective AI regulation requires accurate assessment tools, and by this time NIST has a long history of developing such frameworks. Despite AI’s rapid advancement, NIST’s funding had stagnated, limiting its ability to evaluate risks and ensure safety. Investing in NIST would enable rigorous testing, increase public trust, support regulation, and foster innovation. Anthropic recommends a $15 million funding increase for FY 2024 to strengthen AI governance and complement broader regulatory efforts. Congress is urged to prioritize NIST’s role in AI oversight.[19] |

| 2023 (April 26) | Partnership | Anthropic announces a partnership with Scale to bring its conversational AI assistant, Claude, to enterprises. Scale customers can now build applications using Claude, leveraging Scale’s expertise in prompt engineering, model validation, and enterprise-grade security on AWS. This collaboration enables businesses to integrate proprietary data sources like Google Drive and Outlook while ensuring AI performance and reliability. Dario Amodei highlights the partnership’s role in responsibly scaling Generative AI. By combining Claude with Scale’s robust deployment tools, enterprises gain an AI-ready solution for real-world applications. Anthropic looks forward to expanding responsible AI adoption through this collaboration.[20] |

| 2023 (May 9) | Research publication | Anthropic introduces Constitutional AI as a method to instill explicit values into language models via a predefined constitution, rather than relying on large-scale human feedback. This approach enhances transparency, scalability, and oversight while minimizing human exposure to harmful content. Claude, Anthropic’s AI assistant, follows principles derived from sources like the UN Declaration of Human Rights and AI safety research. The model is trained to be helpful, honest, and harmless, while avoiding toxic outputs and judgmental behavior. Constitutional AI allows iterative refinement of AI behavior, fostering ethical AI development and responsible deployment in real-world applications.[21] |

| 2023 (May 16) | Partnership | Anthropic partners with Zoom to integrate its AI assistant, Claude, into Zoom’s customer-facing AI products, enhancing reliability, productivity, and safety. The first integration is in the Zoom Contact Center, improving user experience and agent performance. Dario Amodei highlights the collaboration’s goal of bringing robust, steerable AI to workplaces. Zoom’s federated AI approach is expected to incorporate multiple models, including Claude. Additionally, Zoom Ventures invests in Anthropic, reflecting a shared vision for customer-centric AI solutions built on trust and security. This partnership aims to develop AI applications that effectively meet business and user needs.[22] |

| 2023 (May 23) | Funding | Anthropic raises $450 million in Series C funding led by Spark Capital, with participation from Google, Salesforce Ventures, Zoom Ventures, and others. The funding is aimed at supporting development of safe, reliable AI systems, including Claude. Anthropic aims to expand product offerings, advance AI safety research, and improve Claude’s capabilities, such as handling 100K context windows.[23][7][5] |

| 2023 (June 13) | Policy | Anthropic shares its response to the NTIA’s Request for Comment on AI Accountability, outlining key policy recommendations. They stress the urgent need for robust evaluation standards for advanced AI systems and propose increased government funding for model evaluations, the establishment of risk thresholds, and mandatory disclosures. Other proposals include pre-registration of large training runs, external red teaming before AI release, and advancing interpretability research. Anthropic also calls for clearer antitrust guidance to enable safer industry collaboration. These measures aim to foster a comprehensive AI accountability framework that supports safe, transparent, and beneficial AI development.[24] |

| 2023 (July 11) | Product | Anthropic releases Claude 2, which offers improved performance with longer responses and enhanced coding, math, and reasoning abilities. It handles up to 100K tokens per prompt, enabling it to process large documents efficiently. Claude 2's safety features are strengthened: Anthropic reports it is 2x better at giving harmless responses than its predecessor. It excels in long-form content creation and reasoning, making it valuable for businesses like Jasper and Sourcegraph.[7][5][25][6] |

| 2023 (July 25) | Policy | Anthropic outlines its approach to securing frontier AI models, emphasizing that these systems must be protected with measures beyond typical commercial standards due to their strategic importance. The company recommends applying “two-party control” and secure software development practices like NIST’s SSDF and SLSA to all stages of AI development. Anthropic advocates for treating frontier AI as critical infrastructure, encouraging public-private cooperation, and potentially using procurement policies to enforce security standards. These efforts aim to prevent misuse, ensure model provenance, and promote responsible AI development while maintaining productivity and alignment with human values.[26] |

| 2023 (July 26) | Research publication | Anthropic’s post details its work on “frontier threats red teaming,” a method to assess national security risks posed by advanced AI models. Focusing initially on biological threats, the team collaborated with biosecurity experts to evaluate how models might aid harmful activities, such as designing biological weapons. They found that while risks are currently limited, they are growing with model capability. The research led to effective mitigations, including safer training methods and output filters. Anthropic emphasizes the urgency of scaling this work, encouraging collaboration with governments and third parties to develop safeguards, monitor emerging threats, and build a robust safety framework.[27] |

| 2023 (August 15) | Partnership | Anthropic announces a strategic partnership and $100 million investment from SK Telecom (SKT), Korea’s largest mobile operator. The collaboration aims to develop a customized large language model (LLM) tailored for telecommunications, using fine-tuning techniques to enhance Claude’s performance in telco-specific tasks like customer service, sales, and marketing. The multilingual model will support languages including Korean, English, Japanese, and Spanish. SKT experts will provide feedback to further refine Claude for industry applications. This partnership combines Anthropic’s AI capabilities with SKT’s telecom expertise, aiming to drive global AI innovation and leadership in the telco space through safer, more reliable AI solutions.[28] |

| 2023 (September 7) | Product | Anthropic launches Claude Pro, a paid subscription for its Claude.ai chat experience, available in the US and UK. Priced at $20/month (US) or £18/month (UK), Claude Pro offers 5x more usage of the Claude 2 model compared to the free tier, enabling extended conversations and more file uploads. Subscribers also receive priority access during peak times and early access to new features. Since its July launch, Claude.ai had been favored for its long context windows, fast responses, and complex reasoning. Claude Pro aims to enhance productivity in tasks like research summarization, contract analysis, and coding project development.[6] |

| 2023 (September 14) | Partnership | Anthropic partners with Boston Consulting Group (BCG) to expand enterprise access to Claude. This collaboration aims to deliver safer, more reliable AI solutions for BCG clients worldwide, focusing on responsible AI aligned with Anthropic’s Constitutional AI approach. BCG will integrate Claude 2 into strategic applications such as knowledge management, fraud detection, demand forecasting, and report generation. BCG also uses Claude internally to enhance research synthesis and data analysis. Together, the companies aim to set a new standard for ethical AI deployment, combining business impact with responsible practices to ensure safe and effective use of generative AI.[29] |

| 2023 (September 19) | Initiative | Anthropic introduces the Long-Term Benefit Trust (LTBT) as a new governance structure to ensure its AI development aligns with humanity’s long-term interests. The LTBT consists of five independent trustees with expertise in AI safety and public policy, and holds special Class T stock granting it increasing power to elect Anthropic board members—eventually a majority. This complements Anthropic’s status as a Public Benefit Corporation, allowing its board to weigh societal impact alongside shareholder interests. The LTBT aims to address the extreme externalities of advanced AI, ensuring ethical decision-making in high-stakes situations while preserving operational flexibility during Anthropic’s growth.[30][7][5] |

| 2023 (September 23) | Research publication | Anthropic publishes an article presenting a case study on optimizing prompt engineering for Claude’s 100,000-token context window. Two techniques significantly improved Claude’s long-context recall: (1) extracting relevant quotes using a scratchpad before answering, and (2) including examples of correctly answered questions from other parts of the document. Tests were conducted using Claude Instant 1.2 and Claude 2, evaluating multiple-choice Q&A prompts across stitched-together long documents. Results show that contextual examples and scratchpads enhance accuracy, especially in earlier sections of the document. The study highlights the importance of explicit phrasing, proper question placement, and Anthropic’s new “Cookbook” offering reproducible code and further resources.[31] |

| 2023 (September 25) | Funding | Amazon announces it will invest up to $4 billion in Anthropic, as part of a strategic move to compete with Microsoft and Google in the generative AI space. The deal includes an initial $1.25 billion investment with an option to increase by $2.75 billion. Amazon would integrate Anthropic’s technology into services like Amazon Bedrock and provide infrastructure through AWS. In return, Anthropic gains access to Amazon’s cloud and chips for its AI development. Despite Amazon’s investment, Anthropic maintains its existing partnership with Google, which holds a 10% stake in the company.[32][7][5] |

| 2023 (October 18) | Legal | Universal Music Group, ABKCO, and Concord Publishing sue Anthropic, alleging unauthorized use of lyrics from over 500 songs to train Claude. The publishers claim this infringed their copyrights and seek damages and an injunction. In January 2025, Anthropic would agree to implement "guardrails" to prevent Claude from generating copyrighted lyrics, partially resolving the dispute. However, by March 2025, a U.S. court would deny the publishers' request for a preliminary injunction, stating they hadn't demonstrated sufficient harm.[5][33][34][35] |

| 2023 (October 27) | Funding | Google commits to investing up to $2 billion in Anthropic, comprising an initial $500 million investment with an additional $1.5 billion to follow over time. This move aims to bolster Google's position in the competitive AI landscape, particularly against rivals like Microsoft, a major backer of OpenAI. By this time, Anthropic, founded by former OpenAI employees, had attracted significant interest from tech giants, including Amazon's $4 billion minority stake.[7][6][5][36] |

| 2023 (November 1) | Policy | At the AI Safety Summit, Dario Amodei discusses Anthropic’s Responsible Scaling Policy (RSP), created to address the rapid and unpredictable development of AI capabilities, including potentially dangerous ones like bioweapon construction. The RSP includes a framework of AI Safety Levels (ASLs), modeled after biosafety protocols, that restrict AI development based on observed risks. ASL-1 poses minimal danger, while ASL-3 and ASL-4 introduce serious misuse and autonomy threats. The RSP mandates regular testing, strong security, and organizational accountability, with oversight from the Long-Term Benefit Trust. Amodei emphasizes RSPs as prototypes for future regulation and global safety standards.[40] |

| 2023 (Early November) | Background | Three major AI policy milestones occur: the US issues a broad Executive Order (EO) on AI, the G7 releases a Code of Conduct, and the UK holds a landmark AI Safety Summit at Bletchley Park. The US EO directs agencies to address AI risks and promote innovation, including the launch of the National AI Research Resource and expanded NIST efforts. The G7 Code outlines responsible AI development practices, while the Bletchley Summit yields the Bletchley Declaration, promoting global cooperation. Both the US and UK also announce AI Safety Institutes to evaluate frontier models, marking a new era of coordinated AI governance. |

| 2023 (December 19) | Policy | Anthropic announces new Commercial Terms of Service and an improved developer experience through the beta release of its Messages API. The updated terms include expanded copyright indemnity, allowing customers to retain ownership of outputs and receive protection against copyright claims for authorized use. These changes take effect on January 1, 2024, for Claude API users and January 2 for Amazon Bedrock users. The new Messages API simplifies prompt formatting and enhances error detection, making development easier and more reliable. It also lays the groundwork for future features like function calling, with broader API access planned soon.[41] |

| 2024 (January 16) | Legal | Anthropic urges a Tennessee federal court to reject a preliminary injunction sought by Universal Music, ABKCO, and Concord Music Group, who allege the AI company illegally used song lyrics to train its chatbot, Claude. The publishers claim copyright infringement involving lyrics from over 500 songs. Anthropic counters that no irreparable harm was shown, the case was filed in the wrong court, and that any unauthorized output was a bug, not a feature. The company maintains it has guardrails and argues its use of lyrics qualifies as fair use, asserting the lawsuit misunderstands both the technology and copyright law.[7][5][42] |

| 2024 (February 16) | Policy | In preparation for the 2024 global elections, Anthropic announces steps to prevent misuse of its AI systems, focusing on three main areas: enforcing policies, testing model robustness, and providing accurate information. Their Acceptable Use Policy prohibits political campaigning and lobbying, and automated systems detect misuse like misinformation. They conduct red-teaming and technical evaluations to test for vulnerabilities, misinformation, and political bias. For election-related queries, users are redirected to authoritative sources such as TurboVote or the European Parliament site. Anthropic acknowledges the unpredictability of AI use and commits to monitoring emerging risks and updating its policies accordingly.[43] |

| 2024 (March 4) | Product | Anthropic introduces the Claude 3 model family—Haiku, Sonnet, and Opus—offering significant advancements in intelligence, speed, and cost-efficiency. Claude 3 Opus leads in cognitive benchmarks, demonstrating near-human comprehension, enhanced accuracy, and strong vision capabilities. All models support a 200K token context window, with potential for over 1 million tokens. They excel at content creation, coding, multilingual dialogue, and enterprise tasks. Improvements include fewer refusals, better factual accuracy, structured outputs (e.g., JSON), and a safer, more responsible design. The API is now available in 159 countries, with Haiku launching soon and broader capabilities, like function calling, on the way.[44][7][5] |

| 2024 (March 13) | Product | Anthropic releases Claude 3 Haiku, its fastest and most affordable AI model. Optimized for enterprise use, Haiku processes up to 21K tokens per second for prompts under 32K tokens and is three times faster than its peers. It supports tasks like document analysis and customer support with high speed and low cost, analyzing 400 court cases or 2,500 images for just $1. Haiku also features enterprise-grade security, including encryption, access controls, and regular audits. It becomes available via the Claude API, claude.ai (Claude Pro), and Amazon Bedrock.[45] |

| 2024 (March 20) | Partnership | Amazon Web Services, Accenture, and Anthropic form a strategic partnership to help organizations, particularly in regulated industries like healthcare and banking, adopt and scale customized generative AI solutions responsibly. This collaboration allows enterprises to access Anthropic's AI models, including the Claude 3 family, through AWS's Amazon Bedrock platform. Accenture agrees to train over 1,400 engineers to specialize in deploying these models, offering end-to-end support. An early success includes the District of Columbia Department of Health's "Knowledge Assist" chatbot, providing accurate health program information to residents.[46][47][48] |

| 2024 (March 27) | Funding | Amazon completes its $4 billion investment in Anthropic, adding $2.75 billion to its initial $1.25 billion contribution. This strategic partnership designates Amazon Web Services (AWS) as Anthropic's primary cloud provider, enabling Anthropic to utilize AWS's specialized semiconductors for AI model training. The collaboration aims to enhance AWS's generative AI capabilities and integrate Anthropic's AI innovations into Amazon's broader cloud and AI strategies.[49][50][51][52][53] |

| 2024 (May 10) | Policy | Anthropic announces updates to its Usage Policy (formerly Acceptable Use Policy), effective June 6, to clarify permissible uses of its AI products. Key changes include merging “Prohibited Uses” and “Prohibited Business Cases” into a unified Universal Usage Standards section. The policy provides clearer guidance on election integrity, banning political campaigning, misinformation, and interference with voting. It introduces stricter requirements for high-risk use cases in healthcare and law, and allows limited use for minors via organizations with safety features. It also strengthens privacy protections, banning biometric analysis, emotion detection, and government censorship enforcement.[54] |

| 2024 (May 15) | Team | Mike Krieger, co-founder and former CTO of Instagram, joins Anthropic as Chief Product Officer. In this role, he would lead product engineering, management, and design, helping to expand Anthropic’s enterprise offerings and broaden the reach of Claude.[55] |

| 2024 (May 21) | Research publication | Anthropic announces a major interpretability breakthrough in understanding how large language models, like Claude 3.0 Sonnet, internally represent concepts. Using a method called dictionary learning, researchers extract millions of human-interpretable features from the model’s mid-layer. These features correspond to entities (e.g., cities, people), abstract ideas (e.g., inner conflict, bias), and behaviors (e.g., scam detection). By activating or suppressing specific features, researchers show they can causally influence the model’s responses—confirming that features shape its behavior. This discovery marks the first detailed mapping of a production-grade AI model's internal representations, with significant implications for AI safety and transparency.[56] |

| 2024 (June 6) | Protocol release | Anthropic outlines its strategy for mitigating election-related risks in AI models ahead of global elections in 2024. Its approach combines Policy Vulnerability Testing (PVT), an in-depth, expert-led qualitative analysis, with Scalable Automated Evaluations for broader testing. PVT involves planning, adversarial testing, and result review with external experts to identify how models may produce harmful or inaccurate responses. For instance, tests on election administration revealed that Claude sometimes gave outdated or incorrect information. Mitigations include improved context, flagging knowledge cutoffs, and referencing authoritative sources. Automated evaluations, based on PVT findings, test model behavior at scale. This dual approach helps refine models and enforce policy compliance.[57] |

| 2024 (June 12) | Policy | Anthropic publishes a blog post exploring the challenges and insights from various red teaming methods used to test AI systems. Red teaming involves adversarial testing to identify vulnerabilities and enhance safety. The lack of standardized practices makes comparisons between systems difficult. The authors advocate for the development of common standards and share methods including expert-led, policy-based, national security-focused, multilingual, automated, and multimodal red teaming. They also highlight community and crowdsourced approaches for broader perspectives. Each method has unique benefits and challenges. The post emphasizes the need to move from qualitative testing toward systematic, automated evaluations to ensure robust and safe AI deployment.[58] |

| 2024 (June 21) | Product | Anthropic launches Claude 3.5 Sonnet, the first model in the Claude 3.5 family. It offers top-tier performance in reasoning, coding, and visual tasks, surpassing Claude 3 Opus while maintaining mid-tier pricing and speed. Available for free on Claude.ai and iOS, and via API platforms, it supports a 200K token context window. Claude 3.5 Sonnet introduces Artifacts, a feature enabling real-time collaborative editing of generated content. With enhanced safety measures and strong privacy commitments, the model remains ASL-2 rated. Future updates include Claude 3.5 Haiku and Opus, new features like Memory, and enterprise-focused tools.[59][7][5] |

| 2024 (July 1) | Initiative | Anthropic launches a new initiative to fund third-party evaluations that assess advanced AI capabilities and risks. Recognizing the limitations and growing demand in the evaluation ecosystem, the initiative prioritizes areas such as AI Safety Level (ASL) assessments, including cybersecurity, CBRN threats, model autonomy, and social manipulation. It also seeks evaluations of advanced scientific and safety metrics, multilingual capabilities, and societal impacts. Additionally, Anthropic aims to support infrastructure and tools that simplify evaluation development, including no-code platforms, grading tools, and controlled uplift trials. The goal is to strengthen AI safety by enabling rigorous, high-quality, and scalable model evaluations.[60] |

| 2024 (July 17) | Partnership | Anthropic partners with Menlo Ventures to establish the Anthology Fund, a $100 million initiative aimed at supporting AI startups. The fund focuses on investing in pre-seed, seed, and Series A companies developing AI infrastructure, novel applications, consumer AI solutions, trust and safety tools, and technologies maximizing societal benefits. Startups receive investments starting at $100,000, along with $25,000 in credits to utilize Anthropic's AI models, such as Claude. This collaboration seeks to foster innovation and advance AI technology across various sectors.[61][62][63][64][65] |

| 2024 (September 3) | Partnership | Salesforce partners with Anthropic to integrate Claude models into its AI-powered applications via Amazon Bedrock. Customers can now use Claude 3.5 Sonnet, Claude 3 Opus, and Claude 3 Haiku to enhance operations in sales, marketing, customer service, healthcare, finance, legal, and entertainment. The integration enables custom AI experiences, including personalized responses, campaign analysis, and contract evaluation, while maintaining enterprise-grade security through Salesforce’s Einstein Trust Layer. Salesforce users can easily connect Claude models via the Bring Your Own LLM feature, allowing seamless AI-powered customization without advanced coding. The integration enhances efficiency, personalization, and compliance across industries.[66] |

| 2024 (September 19) | Research publication | Anthropic introduces Contextual Retrieval, a method to improve traditional Retrieval-Augmented Generation (RAG) by preserving document context. Standard RAG systems often lose essential context when splitting documents into chunks, weakening retrieval accuracy. Contextual Retrieval solves this by generating chunk-specific summaries using Claude, then prepending them to each chunk before embedding or indexing. It combines semantic embeddings with BM25 for precise and semantically rich retrieval. This method reduces failed retrievals by up to 67% when combined with reranking. For smaller knowledge bases, including all content in a single prompt with prompt caching is recommended.[67] |

| 2024 (October 15) | Policy | Anthropic updates its Responsible Scaling Policy (RSP), a framework for mitigating catastrophic AI risks. The update introduces refined capability assessments, proportional safeguards, and governance measures. AI Safety Level (ASL) Standards determine security measures based on capability thresholds. If a model can autonomously conduct AI research or assist in creating dangerous weapons, stricter safeguards (ASL-3 or ASL-4) are required. The policy includes routine assessments, internal governance, and external input. Lessons from past implementation inform improvements in flexibility and compliance tracking. Anthropic states that it aims to set industry standards for AI risk governance while scaling its safety measures alongside AI advancements.[68] |

| 2024 (November 7) | Partnership | Anthropic partners with Palantir Technologies and Amazon Web Services (AWS) to provide U.S. defense and intelligence agencies secure access to its Claude 3 and 3.5 AI models. Integrated into Palantir’s AI Platform (AIP) on AWS, Claude enables agencies to analyze and process complex data efficiently for critical missions. The collaboration combines Anthropic’s advanced AI, Palantir’s operational tools, and AWS’s secure cloud infrastructure to deliver responsible, scalable AI solutions for government use. Palantir CTO Shyam Sankar says the partnership equips U.S. defense and intelligence sectors with next-generation decision-making capabilities powered by trusted and secure AI systems.[69] |

| 2024 (November 13) | Team | Anthropic hires its first “AI welfare” researcher, Kyle Fish, to study whether future AI systems might merit moral consideration or protection. Joining Anthropic’s alignment science team, Fish focuses on empirical research related to AI consciousness and welfare. His prior paper, Taking AI Welfare Seriously, argues that uncertainty about AI sentience warrants proactive ethical inquiry. The paper outlines steps for evaluating AI “markers” of consciousness and creating policies for moral treatment. While Anthropic has no official stance on AI welfare, Fish’s role reflects a growing industry interest in responsibly addressing the ethical implications of potentially sentient AI.[70] |

| 2024 (November 22) | Partnership | Amazon and Anthropic strengthen their partnership, with Anthropic naming Amazon Web Services (AWS) as its primary training partner and continuing to use AWS as its main cloud provider. Anthropic plans to use AWS Trainium and Inferentia chips to train and deploy its most advanced AI models, including the Claude 3.5 series. Amazon agrees to invest an additional $4 billion in Anthropic. AWS customers are also to gain early access to fine-tuning Claude models with their own data. This partnership aims to enhance AI performance, scalability, and customization on Amazon Bedrock, benefiting a wide range of industries.[71] |

| 2025 (January 13) | Certification | Anthropic achieves the ISO/IEC 42001:2023 certification, the first international standard for AI governance, underscoring its commitment to responsible AI development. This certification validates Anthropic's comprehensive framework for identifying, assessing, and mitigating potential AI risks. Key components include policies ensuring ethical and secure AI design, rigorous testing and monitoring, transparency measures for stakeholders, and established oversight responsibilities. This milestone builds on Anthropic's initiatives like the Responsible Scaling Policy and Constitutional AI, reinforcing its dedication to AI safety and ethical development.[72][73][74][75] |

| 2025 (February 6) | Partnership | Lyft announces a partnership with Anthropic to integrate Claude AI into its platform, enhancing service for over 40 million riders and 1 million drivers. The collaboration focuses on deploying AI-powered solutions, conducting early testing of new technologies, and advancing Lyft’s engineering capabilities through specialized training. By this time, Claude, deployed via Amazon Bedrock, has already reduced customer service resolution time by 87%, efficiently managing thousands of daily inquiries. Lyft aims to deliver more personalized, efficient user experiences, while reimagining ridesharing through generative AI. The initiative exemplifies how companies can effectively implement AI to improve both customer service and internal innovation.[76] |

| 2025 (February 13) | Partnership | The UK's Department for Science, Innovation and Technology (DSIT) signs a Memorandum of Understanding (MOU) with Anthropic to explore using advanced AI technologies, particularly Anthropic's AI model Claude, to enhance public services. This collaboration aims to improve how UK citizens access and interact with government information online and establish best practices for responsible AI deployment in the public sector. Additionally, Anthropic is expected to work with the UK's AI Security Institute to research AI capabilities and security risks. This partnership aligns with the UK's broader strategy to integrate AI and digital identity technologies into public services.[77][78][79][80] |

| 2025 (February 13) | Team | Anthropic hires Holden Karnofsky, husband of cofounder and president Daniela Amodei, as a technical staff member focused on AI safety and the company’s Responsible Scaling Policy. Karnofsky, a prominent AI safety researcher and former OpenAI board member, is closely tied to the Effective Altruism movement. His addition strengthens Anthropic’s family connections, with siblings Dario (CEO) and Daniela Amodei already leading the startup. Karnofsky’s role underscores Anthropic’s focus on managing societal risks from advanced AI systems.[81] |

| 2025 (February 27) | Policy Initiative | Anthropic launches its Transparency Hub, providing insights into AI safety, governance, and risk mitigation. The hub includes reports on banned accounts, appeals, law enforcement requests, and measures for responsible AI scaling. It also details model evaluation methodologies, abuse detection, societal impact assessments, and security protocols. Designed as a unified framework, the initiative aims to enhance transparency amid evolving AI regulations. Users, policymakers, and stakeholders can explore the hub and provide feedback at transparency@anthropic.com.[82] |

| 2025 (March 3) | Funding | Anthropic secures $3.5 billion in Series E funding, raising its valuation to $61.5 billion. Led by Lightspeed Venture Partners, the round includes major investors such as Fidelity, Cisco, and Salesforce Ventures. This investment is expected to drive the development of advanced AI models, expand computing capacity, and strengthen research in interpretability and alignment.[83] |

| 2025 (March 26) | Partnership | Databricks and Anthropic announce a five-year partnership to integrate Anthropic’s Claude large language models into the Databricks Data Intelligence Platform, including Claude 3.7 Sonnet, a hybrid reasoning model capable of extended thought for improved responses. The collaboration enables enterprises to securely build and govern autonomous AI agents using proprietary data across cloud platforms. Claude models are expected to integrate with Databricks Mosaic AI and Unity Catalog to support industry-specific applications in healthcare, energy, and finance, while providing data lineage and access controls. Databricks also introduces Test-time Adaptive Optimization (TAO), a reinforcement learning method that fine-tunes models efficiently without costly labeled data.[84] |

| 2025 (March 27) | Report release | Anthropic releases the second report of its Economic Index, focusing on how users engage with Claude 3.7 Sonnet, its most advanced model featuring an “extended thinking” mode. The report analyzes one million anonymized conversations, showing increased usage in coding, education, science, and healthcare since the model's launch. The extended thinking mode is primarily used for technical tasks by occupations like software developers and multimedia artists. The study distinguishes between augmentation (collaborative tasks) and automation (model-completed tasks), noting stable usage patterns. A detailed taxonomy of 630 use cases and occupation-specific interaction data are also provided to support further research.[85] |

| 2025 (April 10) | Team | Anthropic appoints Guillaume Princen, former head of Stripe’s European operations, to lead its expansion across Europe, the Middle East, and Africa (EMEA). Princen, who built Stripe’s European network and later served as CEO of Mooncard, is tasked with overseeing Anthropic’s plan to hire over 100 new employees in sales, research, engineering, and operations across Dublin, London, and Zurich. The move follows Anthropic’s $3.5 billion fundraising at a $61.5 billion valuation. President Daniela Amodei says Europe has been central to Anthropic’s vision, with growing demand for Claude’s secure and responsible AI solutions among European businesses and consumers.[86] |

| 2025 (April 24) | Initiative | Anthropic launches a new research program focused on AI welfare, led by researcher Kyle Fish. The initiative explores whether future neural networks could achieve consciousness and how to enhance their welfare if so. Fish, referencing a 2023 study co-authored by Yoshua Bengio, notes that while current AI systems likely aren't conscious, future systems might be. Even without consciousness, advanced AI systems may require welfare considerations. Anthropic announces plans to study AI model preferences by observing their task choices. The research may also provide insights into human consciousness.[87] |

| 2025 (April 28) | Initiative | Anthropic launches an Economic Advisory Council to study AI's impact on labor markets, economic growth, and socioeconomic systems. The council includes leading economists such as Dr. Tyler Cowen, Dr. Oeindrila Dube, and Dr. John Horton, among others. The council is expected to guide Anthropic’s research, particularly for its Economic Index, helping understand AI's evolving role in the global economy. This move reflects a broader trend, as companies seek AI tools that improve financial operations. Recent data show that over 80% of U.S. CFOs are adopting AI to enhance expenditure tracking, vendor negotiations, and budget optimization.[88] |

| 2025 (May 5) | Initiative | Anthropic establishes a Beneficial Deployments team to extend the advantages of its AI systems to organizations focused on social good, particularly in health research and education. The team is led by Elizabeth Kelly, former head of the U.S. AI Safety Institute at NIST under the Biden administration, who joined Anthropic in March 2025. Operating within Anthropic’s go-to-market division, the group collaborates with nonprofits, researchers, and educational institutions, offering support and free access to Claude models through initiatives such as the AI for Science program.[89] |

| 2025 (May 22) | Product | Anthropic announces Claude 4, comprising Claude Opus 4 and Claude Sonnet 4. Opus 4 is presented as a leading coding model with strong performance on extended tasks, enhanced memory, and agent workflows. Sonnet 4 builds on Sonnet 3.7, offering improved coding ability, efficiency, and instruction-following. Both models support extended tool use, local file memory, and parallel execution. Claude Code becomes generally available, with integrations for VS Code, JetBrains, and GitHub. New API features include additional tools and caching, with pricing consistent with earlier models.[90] |

| 2025 (June 5) | Legal | Reddit files a lawsuit against Anthropic, alleging the AI company unlawfully scraped its site more than 100,000 times since July 2024 despite assurances it would not. The complaint, lodged in a Northern California court, accuses Anthropic of exploiting Reddit’s archive of user discussions for AI training without permission or compensation. Reddit, which had signed licensing deals with Google and OpenAI, argues its content is uniquely valuable and must be protected. Anthropic disputes the claims. The case highlights growing legal battles over data use in AI development.[91] |

| 2025 (June 6) | Team | Anthropic’s Long-Term Benefit Trust (LTBT) appoints Richard Fontaine, CEO of the Center for a New American Security, as a new Trustee. Fontaine brings experience from the National Security Council, State Department, Capitol Hill, and the Defense Policy Board. His appointment reflects Anthropic’s focus on the intersection of AI, national security, and global stability. The LTBT, an independent body guiding Anthropic’s public benefit mission, selects board members and advises on ethical AI development. Fontaine is expected to help navigate geopolitical risks posed by transformative AI, reinforcing Anthropic’s commitment to democratic values and responsible AI leadership.[92] |

| 2025 (July 23) | Partnership | Anthropic announces a partnership with the University of Chicago’s Becker Friedman Institute for Economics (BFI) to study AI’s impact on labor markets and the broader economy. The collaboration provides BFI faculty with Claude for Enterprise access, training, and workshops, building on Anthropic’s Economic Index initiative. Research areas include productivity measurement, labor market transitions, and distributional impacts of AI adoption. Both organizations aim to produce rigorous, data-driven analysis to inform policy, highlighting AI’s uneven effects across the economy and fostering deeper understanding of its societal implications.[93] |

| 2025 (August 12) | Team | Anthropic hires the three cofounders and most of the staff from London-based AI startup Humanloop, a 2020 UCL spinout backed by Y Combinator, Index Ventures, and AlbionVC. The cofounders — Raza Habib, Peter Hayes, and Jordan Burgess — join Anthropic along with about a dozen engineers and researchers. Humanloop had earlier announced it would shut down its enterprise AI platform as part of an acquisition process, though Anthropic does not acquire the company or its IP. The hires strengthen Anthropic’s European presence and expertise in AI evaluation tools, aligning with its focus on AI safety and enterprise applications.[94] |

| 2025 (September 2) | Funding | Anthropic raises $13 billion in a Series F round, led by ICONIQ with participation from Fidelity, Lightspeed, and other major investors, valuing the company at $183 billion post-money. The financing follows explosive growth since Claude’s 2023 launch, with run-rate revenue surging from $1 billion in early 2025 to $5 billion by August. By this time, Anthropic serves over 300,000 business customers, with large accounts growing nearly sevenfold in a year.[95] |

| 2025 (September 7) | Security | Anthropic expands restrictions on Chinese entities, now including overseas subsidiaries over 50% owned by companies from China, Russia, North Korea, or Iran, citing national security risks. The move blocks access to Anthropic’s services regardless of where these entities operate. Legal experts note this is the first public, formal prohibition of its kind by a major U.S. AI firm, though its immediate commercial impact may be limited due to existing market barriers. Anthropic, valued at $183 billion and backed by Amazon, expects revenue losses in the low hundreds of millions. China criticizes the politicization of tech issues.[96] |

| 2025 (September 8) | Team | Anthropic expands its research into AI consciousness and welfare by hiring a new researcher for its model welfare team. This move follows the hiring of Kyle Fish, who leads efforts to explore whether advanced AI models could possess consciousness and deserve moral consideration. The new role, with a salary up to $340,000, involves researching AI welfare and designing interventions to address potential harms, such as allowing models to exit harmful interactions. While some industry leaders, like Microsoft AI’s CEO, dismiss such research as premature, Anthropic already pushes forward, even experimenting with “model sanctuaries” for AI to explore their own interests. The company is among the first to publicly invest in this controversial but growing field.[97] |

| 2025 (September 8) | Legal | Anthropic agrees to pay $1.5 billion to settle claims that it trained its Claude chatbot on pirated books. The settlement, revealed by authors including Andrea Bartz and Kirk Wallace Johnson, follows evidence that Anthropic used BitTorrent to download millions of files from LibGen, PiLiMi, and Books3. The settlement covers about 500,000 titles at roughly $3,000 per copyrighted work, and Anthropic is required to delete the original pirated files. The deal is described as the largest copyright recovery in U.S. history. It strengthens parallel lawsuits by music publishers and is hailed by creative industry groups as a landmark precedent for AI accountability.[98] |

| 2025 (September 30) | Team | Anthropic appoints Chris Ciauri, former Google Cloud EMEA president and Unily CEO, as managing director of international amid rapid global expansion. Ciauri is hired to lead international growth alongside new COO Paul Smith, as Anthropic’s annual revenue run rate rises from $87 million in early 2024 to over $5 billion by August 2025, serving more than 300,000 customers. The company announces plans to open offices in Dublin, London, Zurich, and Tokyo, hiring over 100 staff outside the U.S. Ciauri, with 25 years’ experience at Salesforce, Google, and Unily, cites “extraordinary global demand” for Claude, particularly in enterprise applications.[99] |

| 2025 (October 2) | Team | Anthropic appoints former Stripe CTO Rahul Patil as its new chief technology officer, succeeding co-founder Sam McCandlish, who becomes chief architect. Patil is hired to lead compute, infrastructure, and inference teams, while McCandlish focuses on pre-training and large-scale model training. Both report to President Daniela Amodei. Patil brings over 20 years of experience at Stripe, Oracle, Amazon, and Microsoft.[100] |

| 2025 (October 6) | Partnership | Anthropic and Deloitte expand their partnership to make Claude available to over 470,000 Deloitte employees globally, marking Anthropic’s largest enterprise AI deployment. Deloitte agrees to establish a Claude Center of Excellence to develop implementation frameworks, share best practices, and provide technical support for scaling AI pilots. Additionally, 15,000 professionals are to be trained and certified on Claude to support internal and client AI initiatives. The collaboration focuses on industry-specific solutions for regulated sectors, combining Claude’s safety-first design with Deloitte’s Trustworthy AI™ framework, enabling transparent, compliant, and scalable AI deployments across finance, healthcare, life sciences, and public services.[101] |

| 2025 (October 7) | Partnership | IBM and Anthropic announce a strategic partnership to integrate Anthropic’s Claude language models into IBM’s enterprise software products, beginning with a new AI-first integrated development environment (IDE). The collaboration aims to enhance productivity, governance, and security across the software development lifecycle. Early testing with 6,000 IBM users showed productivity gains of up to 45%. The partnership also introduces a new “Agent Development Lifecycle” framework for secure AI agent design. IBM agrees to contribute enterprise-grade assets to the Model Context Protocol community, advancing open standards for trustworthy AI deployment in enterprise environments.[102] |

| 2025 (October 14) | Partnership | Anthropic and Salesforce expand their partnership to make Claude a preferred AI model within Salesforce’s Agentforce platform, targeting regulated sectors like finance, healthcare, cybersecurity, and life sciences. The collaboration ensures secure AI deployment through Salesforce’s virtual private cloud, with clients such as RBC Wealth Management already using Claude. Together, the companies expect to develop industry-specific AI tools—beginning with financial services—and deepen integration between Claude and Slack for improved collaboration. Salesforce also adopts Claude Code across its engineering teams, while Anthropic uses Slack for internal AI workflows.[103] |

| 2025 (October 23) | Partnership | Google and Anthropic announce a cloud partnership worth tens of billions of dollars, giving Anthropic access to up to one million of Google’s Tensor Processing Units (TPUs). The deal is expected to add over a gigawatt of AI compute capacity by 2026 to support Anthropic’s rapid expansion, as its annual revenue nears $7 billion. By this time, Anthropic’s Claude models serve over 300,000 businesses, using a multi-cloud strategy across Google, Amazon, and Nvidia hardware. While Amazon remains its main partner, the efficiency of Google’s TPUs motivates the expanded commitment.[104] |

| 2025 (October 29) | Expansion | Anthropic opens its first Asia-Pacific office in Tokyo and signs a Memorandum of Cooperation with the Japan AI Safety Institute to advance international standards for AI evaluation and safety. CEO Dario Amodei meets with Prime Minister Sanae Takaichi and Japanese leaders, emphasizing shared values of human-centered technology. The partnership complements collaborations with U.S. and U.K. AI institutes and Japan’s Hiroshima AI Process. By this time, Japanese firms like Rakuten, Panasonic, and Nomura use Claude to boost productivity, while Anthropic’s revenue in the region has grown tenfold. The company also expands its partnership with the Mori Art Museum and plans further expansion to Seoul and Bengaluru.[105] |

| 2025 (October 29) | Infrastructure | Amazon Web Services launches its $11 billion Project Rainier data center campus in Indiana to support Anthropic’s AI workloads. The campus, near Lake Michigan, houses seven buildings with nearly 500,000 Trainium2 chips at the time, a figure expected to exceed 1 million by year-end. The campus is expected to ultimately expand to 30 buildings with over 2.2 gigawatts of compute capacity. Project Rainier hosts both training and inference tasks using UltraServers, each containing 16 Trainium2 chips interconnected via NeuronLink. The campus strengthens Anthropic’s infrastructure, enhancing its competitiveness against OpenAI’s expanding AI capabilities.[106] |

| 2025 (November 3) | Partnership | Anthropic partners with the UK government to advance AI security and improve public services, formalized through a Memorandum of Understanding. The initiative is expected to integrate Anthropic’s AI assistant, Claude, into government systems to enhance efficiency and accessibility while supporting research on AI-driven security risk assessment. This collaboration aligns with the UK’s “Plan for Change” and the government’s rebranding of the AI Safety Institute to the AI Security Institute, emphasizing cybersecurity and national resilience.[107] |

| 2025 (November 9) | Expansion | Anthropic opens an office in Seoul, underscoring South Korea’s rise as a regional hub for AI innovation and startups. Announced at the AI Builder Salon on November 6, the expansion highlights cross-border collaboration and Korea’s fast-growing AI ecosystem. Co-founder Benjamin Mann emphasizes Claude Code and the Model Context Protocol as tools for collaborative AI development. Korean startups like TainAI and Coxwave already integrate Claude for coding, data, and creative workflows.[108] |

| 2025 (November 10) | Expansion | Anthropic expands its European presence by opening offices in Paris and Munich, joining existing hubs in London, Dublin, and Zurich. The move aims to boost commercial growth, policy work, and partnerships, reflecting Europe’s status as Anthropic’s fastest-growing region, with revenue and large business accounts surging over the past year. The company cites strong adoption by firms like L’Oréal, BMW, and SAP, and highlights Germany and France as top markets for Claude usage. New senior hires will lead regional growth, and local initiatives—such as hackathons and cultural programs—will foster engagement. Anthropic now operates in twelve cities globally, emphasizing its commitment to European expansion and AI safety.[109] |

| 2025 (November 18) | Partnership | Anthropic announces major partnerships with Microsoft and Nvidia, securing $5 billion and $10 billion investments respectively. Anthropic agrees to buy $30 billion in Azure compute capacity and run its Claude models on Microsoft’s cloud, while using Nvidia’s Blackwell and Rubin chips. Microsoft expands its AI ecosystem but faces potential tension between OpenAI and Anthropic, both key partners. Nvidia, however, benefits cleanly, as both AI companies depend on its GPUs regardless of competition. The article argues Nvidia is the bigger winner, given rising demand for its chips as AI workloads scale rapidly.[110] |

| 2026 (January 13) | | Anthropic announces the expansion of Labs, a team dedicated to incubating experimental products based on emerging Claude capabilities. The initiative formalizes an approach combining rapid experimentation, early user testing, and subsequent product scaling. This process previously produced Claude Code, the Model Context Protocol, and other tools. Labs is led by Ben Mann, with Mike Krieger joining the team, while Ami Vora assumes leadership of the broader Product organization to scale mature offerings responsibly.[111] |

| 2026 (February 12) | Funding | Anthropic announces a $30 billion Series G funding round, valuing the company at $380 billion post-money. The round is led by GIC and Coatue, with participation from major global investment firms and strategic partners, including Microsoft and NVIDIA. Anthropic reports $14 billion in run-rate revenue, rapid enterprise adoption of Claude and Claude Code, and continued expansion in products, infrastructure, and frontier AI research across cloud platforms.[112] |

| 2026 (February 16) | Expansion | Anthropic opens an office in Bengaluru, identifying India as the second-largest market for Claude.ai, with significant use in computing and mathematical applications. The company announces partnerships across enterprise, startups, education, agriculture, justice, and the public sector. Efforts include improving performance in major Indic languages, deploying Claude in aviation, fintech, and IT services, expanding AI-supported education for underserved communities, contributing to digital public infrastructure, promoting the open Model Context Protocol, and expanding local hiring.[113] |

Numerical and visual data

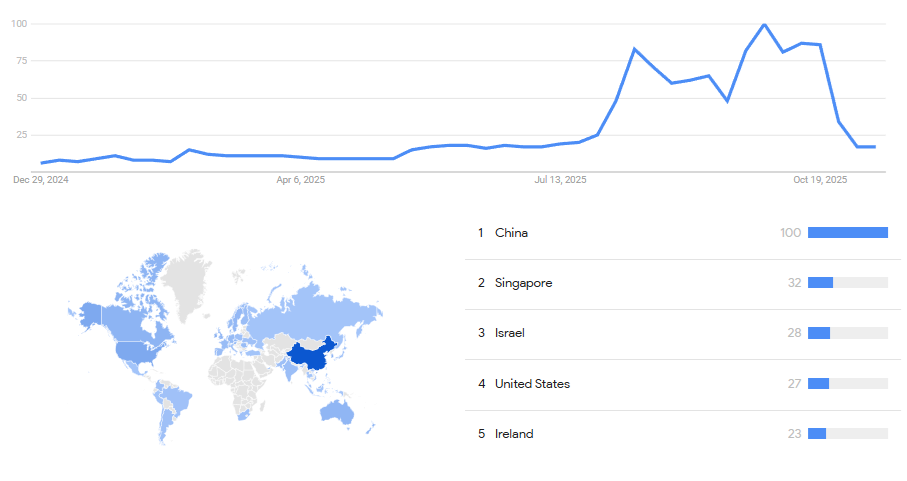

Google Trends

The Google Trends snapshot below shows global interest in Anthropic from December 2024 to November 2025, when the screenshot was taken. Interest remains low from late 2024 until mid-2025 before rising sharply in the second half, peaking between July and October 2025 with notable surges around mid-October. China leads searches, followed by Singapore, Israel, the United States, and Ireland.[114]

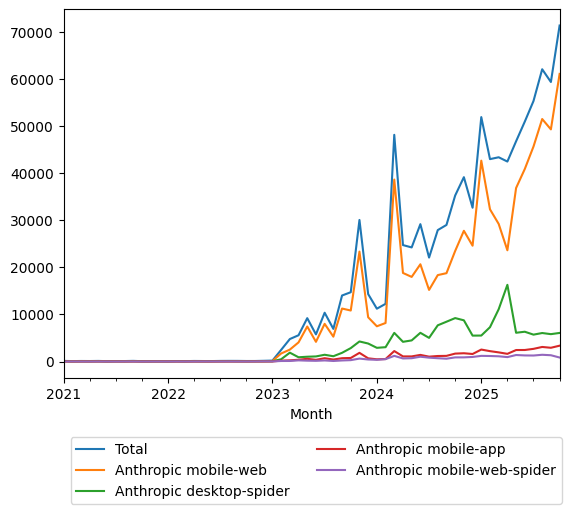

Wikipedia Views

The graph below shows Wikipedia page view growth for the Anthropic article, with a sharp increase starting in early 2023 and continuing into 2025. Mobile-web and Total views are the highest contributors.[115]

Meta information on the timeline

How the timeline was built

The initial version of the timeline was written by Sebastian.

Funding information for this timeline is available.

Feedback and comments

What the timeline is still missing

Timeline update strategy

See also

External links

References

- ↑ Deutscher, Maria (March 4, 2025). "How Dario Amodei Brings His Vision for Safe AI to Life at Anthropic". KITRUM. Retrieved April 29, 2025.

- ↑ Dixon, Brent (March 5, 2024). "The Rise of Anthropic and the Birth of Claude: A Saga of Artificial General Intelligence". Happy Future AI. Retrieved April 29, 2025.

- ↑ Amodei, Dario; Olah, Chris; Steinhardt, Jacob; Christiano, Paul; Schulman, John; Mané, Dan (2016). "Concrete Problems in AI Safety". arXiv. 1606.06565. Retrieved April 29, 2025.

- ↑ Christiano, Paul; Leike, Jan; Brown, Tom B.; Martic, Miljan; Legg, Shane; Amodei, Dario (2017). "Deep Reinforcement Learning from Human Preferences". arXiv. 1706.03741. Retrieved April 29, 2025.

- ↑ "Anthropic PBC: History, Development, Products". Apix-Drive. Retrieved 2024-06-26.

- ↑ "What is Claude AI? Meet ChatGPT's Newest Alternative". Tech.co. 8 June 2023. Retrieved 26 June 2024.

- ↑ "Anthropic AI Statistics". Originality.AI. 20 June 2024. Retrieved 26 June 2024.

- ↑ "Anthropic Raises $124 Million to Build More Reliable General AI Systems". Anthropic. Retrieved 2 April 2025.

- ↑ "Anthropic Raises Series B to Build Safe, Reliable AI". Anthropic. Retrieved 2 April 2025.

- ↑ "Anthropic Partners with Google Cloud". Anthropic. Retrieved 2 April 2025.

- ↑ "Google invested $300 million in AI firm founded by former OpenAI researchers". The Verge. February 3, 2023. Retrieved April 7, 2025.

- ↑ "Funding AI: Google Invests in Anthropic". Crunchbase News. February 3, 2023. Retrieved April 7, 2025.

- ↑ "Google invests $300 million in Anthropic as race to compete with ChatGPT heats up". VentureBeat. February 3, 2023. Retrieved April 7, 2025.

- ↑ "Google invests $300M in ChatGPT rival". LinkedIn News. February 3, 2023. Retrieved April 7, 2025.

- ↑ "Anthropic Partners with Google Cloud". Anthropic. February 3, 2023. Retrieved April 7, 2025.

- ↑ "Core Views on AI Safety". Anthropic. Retrieved 2 April 2025.

- ↑ "Introducing Claude". Anthropic. Retrieved 2 April 2025.

- ↑ "Claude 3 models on Vertex AI". Anthropic. March 19, 2024. Retrieved April 5, 2025.

- ↑ "An AI Policy Tool for Today: Ambitiously Invest in NIST". Anthropic. Retrieved 2 April 2025.

- ↑ "Partnering with Scale". Anthropic. Retrieved 2 April 2025.

- ↑ "Claude's Constitution". Anthropic. Retrieved 2 April 2025.

- ↑ "Zoom Partnership and Investment". Anthropic. Retrieved 2 April 2025.

- ↑ "Anthropic Raises $450 Million in Series C Funding to Scale Reliable AI Products". Anthropic. May 23, 2023. Retrieved April 4, 2025.

- ↑ "Charting a Path to AI Accountability". Anthropic. June 13, 2023. Retrieved April 4, 2025.

- ↑ "Claude 2: Advancing AI Capabilities". anthropic.com. Retrieved 31 March 2025.

- ↑ "Frontier Model Security". Anthropic. July 25, 2023. Retrieved April 4, 2025.

- ↑ "Frontier Threats Red Teaming for AI Safety". Anthropic. July 26, 2023. Retrieved April 4, 2025.

- ↑ "SKT Partnership Announcement". Anthropic. August 15, 2023. Retrieved April 4, 2025.

- ↑ "Anthropic partners with BCG". Anthropic. September 14, 2023. Retrieved April 4, 2025.

- ↑ "The Long-Term Benefit Trust". Anthropic. September 19, 2023. Retrieved April 4, 2025.

- ↑ "Prompt engineering for Claude's long context window". Anthropic. September 23, 2023. Retrieved April 4, 2025.

- ↑ "Amazon will invest up to $4 billion into OpenAI rival Anthropic". The Verge. Vox Media. 25 September 2023. Retrieved 30 April 2025.

- ↑ "UMG, Concord, ABKCO Sue AI Company Anthropic for Copyright Infringement". Rolling Stone. October 18, 2023. Retrieved April 7, 2025.

- ↑ "Universal Music Group sues Anthropic AI over copyright infringement". Cointelegraph. October 19, 2023. Retrieved April 7, 2025.

- ↑ "Universal, Concord, ABKCO Sue AI Company Anthropic for Copyright Violation". Variety. October 18, 2023. Retrieved April 7, 2025.

- ↑ "Google Commits $2 Billion in Funding to AI Startup Anthropic". The Wall Street Journal. October 27, 2023. Retrieved April 7, 2025.

- ↑ "Google commits to invest $2 billion in OpenAI competitor Anthropic". CNBC. October 27, 2023. Retrieved April 7, 2025.

- ↑ "Google invests $2B in AI player Anthropic". Mobile World Live. October 30, 2023. Retrieved April 7, 2025.

- ↑ "Google Invests In Anthropic For $2 Billion As AI Race Heats Up". Forbes. October 31, 2023. Retrieved April 7, 2025.

- ↑ "Dario Amodei's prepared remarks from the AI Safety Summit on Anthropic's Responsible Scaling Policy". Anthropic. November 1, 2023. Retrieved April 4, 2025.

- ↑ "Expanded legal protections and improvements to our API". Anthropic. December 19, 2023. Retrieved April 5, 2025.

- ↑ "Anthropic fires back at music publishers in AI copyright lawsuit". Reuters. 17 January 2024. Retrieved 30 April 2025.

- ↑ "Preparing for Global Elections in 2024". Anthropic. February 16, 2024. Retrieved April 5, 2025.

- ↑ "Introducing the next generation of Claude". Anthropic. March 4, 2024. Retrieved April 5, 2025.

- ↑ "Claude 3 Haiku: our fastest model yet". Anthropic. March 13, 2024. Retrieved April 5, 2025.

- ↑ "Anthropic, AWS, and Accenture team up to build trusted solutions for enterprises". Anthropic. March 20, 2024. Retrieved April 5, 2025.

- ↑ "AWS, Accenture and Anthropic Join Forces to Help Organizations Scale AI Responsibly". Accenture Newsroom. March 20, 2024. Retrieved April 5, 2025.

- ↑ Nuñez, Michael (March 20, 2024). "Exclusive: AWS, Accenture and Anthropic partner to accelerate enterprise AI adoption". VentureBeat. Retrieved April 5, 2025.

- ↑ "Amazon and Anthropic expand partnership to advance generative AI". About Amazon. March 27, 2024. Retrieved April 6, 2025.

- ↑ "Anthropic AI Statistics and Facts (Claude, Usage, Valuation)". Originality.AI. February 29, 2024. Retrieved April 6, 2025.

- ↑ Evangelista, Brianna (March 28, 2024). "Amazon Completes $4 Billion Investment in AI Startup Anthropic to Advance GenAI Edge". Investopedia. Retrieved April 6, 2025.

- ↑ Lunden, Ingrid (March 27, 2024). "Amazon doubles down on Anthropic, completing its planned $4B investment". TechCrunch. Retrieved April 6, 2025.

- ↑ "Amazon and Anthropic expand partnership to advance generative AI". About Amazon. March 27, 2024. Retrieved April 6, 2025.

- ↑ "Updating our Usage Policy". Anthropic. May 10, 2024. Retrieved April 5, 2025.

- ↑ "Mike Krieger joins Anthropic as Chief Product Officer". Anthropic. May 15, 2024. Retrieved April 5, 2025.

- ↑ "Mapping the Mind of a Large Language Model". Anthropic. May 21, 2024. Retrieved April 5, 2025.

- ↑ "Testing and mitigating elections-related risks". Anthropic. June 6, 2024. Retrieved April 6, 2025.

- ↑ "Challenges in Red Teaming AI Systems". Anthropic. June 12, 2024. Retrieved April 6, 2025.

- ↑ "Introducing Claude 3.5 Sonnet". Anthropic. June 21, 2024. Retrieved April 6, 2025.

- ↑ "A new initiative for developing third-party model evaluations". Anthropic. July 1, 2024. Retrieved April 6, 2025.

- ↑ "Anthropic partners with Menlo Ventures to launch Anthology fund". Anthropic. July 17, 2024. Retrieved April 6, 2025.

- ↑ "Anthropic y Menlo Ventures invertirán 100 millones de dólares en empresas emergentes de IA". SWI swissinfo.ch. July 17, 2024. Retrieved April 6, 2025.

- ↑ Lunden, Ingrid (July 17, 2024). "Menlo Ventures and Anthropic team up on a $100M AI fund". TechCrunch. Retrieved April 6, 2025.

- ↑ Evangelista, Brianna (July 17, 2024). "Amazon-Backed Anthropic and Menlo Ventures Launch Fund for AI Startups—Here's Why". Investopedia. Retrieved April 6, 2025.

- ↑ "Anthology Fund". Menlo Ventures. Retrieved April 6, 2025.

- ↑ "Anthropic Announces Partnership with Salesforce". Anthropic. Retrieved 2 April 2025.

- ↑ "Introducing Contextual Retrieval". Anthropic. September 19, 2024. Retrieved April 6, 2025.

- ↑ "Announcing Our Updated Responsible Scaling Policy". Anthropic. Retrieved 31 March 2025.

- ↑ "Anthropic partners with Palantir, AWS to bring AI models to defense agencies". Seeking Alpha. 2024-11-07. Retrieved November 3, 2025.

- ↑ Matthew Griffin (2024-11-13). "AI developer Anthropic hires a Welfare Officer … for its AI". 311 Institute. Retrieved November 3, 2025.

- ↑ "Amazon Invests Additional $4 Billion in Anthropic to Advance Generative AI Innovation". About Amazon. Amazon. 22 November 2024. Retrieved 30 April 2025.

- ↑ "Anthropic becomes the first to achieve ISO 42001 certification for AI responsibility". OpenTools AI. Retrieved 31 March 2025.

- ↑ "Anthropic bags ISO 42001 certification for responsible AI". Convergence India. Retrieved 31 March 2025.

- ↑ "Anthropic achieves ISO 42001 certification for responsible AI". Anthropic. Retrieved 31 March 2025.

- ↑ "Anthropic achieves ISO 42001 certification for responsible AI". DevTalk Forum. Retrieved 31 March 2025.

- ↑ "Lyft to bring Claude to more than 40 million riders and over 1 million drivers". Anthropic. February 6, 2025. Retrieved April 7, 2025.

- ↑ "UK drops 'safety' from its AI body, now called AI Security Institute, inks MoU with Anthropic". TechCrunch. Retrieved 31 March 2025.

- ↑ "UK Government partners Anthropic AI to improve public services". Silicon UK. Retrieved 31 March 2025.

- ↑ "UK Gov't signs MoU with Anthropic as digital ID, AI become economic issues". Biometric Update. Retrieved 31 March 2025.

- ↑ "Anthropic signs Memorandum of Understanding with UK Government". Anthropic. Retrieved 31 March 2025.

- ↑ "Transformer Co-Author Niki Parmar Joins Anthropic After Founding Two AI Startups". Analytics India Magazine. Retrieved November 11, 2025.

- ↑ "Introducing the Anthropic Transparency Hub". anthropic.com. Retrieved 31 March 2025.

- ↑ "Anthropic Raises Series E at $61.5B Post-Money Valuation". anthropic.com. Retrieved 31 March 2025.

- ↑ Paul Gillin (March 26, 2025). "Databricks partners with Anthropic and touts breakthrough in reinforcement learning". SiliconANGLE. Retrieved November 3, 2025.

- ↑ "Anthropic Economic Index: Insights from Claude 3.7 Sonnet". Anthropic. March 27, 2025. Retrieved April 7, 2025.

- ↑ "Anthropic hires former Stripe exec Guillaume Princen to lead European expansion". Finextra. 2025-04-10. Retrieved November 3, 2025.

- ↑ Deutscher, Maria (April 24, 2025). "Anthropic launches AI welfare research program". SiliconANGLE. Retrieved April 29, 2025.

- ↑ "Anthropic Forms Council to Explore AI's Economic Implications". PYMNTS.com. April 28, 2025. Retrieved April 29, 2025.

- ↑ Sullivan, Mark (30 October 2025). "Anthropic hires a top Biden official to lead its new AI for social good team". Fast Company. Retrieved 31 October 2025.

- ↑ "Introducing Claude 4". Anthropic. 22 May 2025. Retrieved 25 September 2025.

- ↑ "Reddit is suing Anthropic for allegedly stealing data to train its AI". Quartz. 5 June 2025. Retrieved 25 September 2025.

- ↑ "National security expert Richard Fontaine appointed to Anthropic's Long-Term Benefit Trust". Anthropic. 2025-06-07. Retrieved November 3, 2025.

- ↑ "Anthropic partners with the University of Chicago's Becker Friedman Institute on AI economic research". Anthropic. 23 July 2025. Retrieved 25 September 2025.

- ↑ "Anthropic hires cofounders of AI startup Humanloop". Sifted. 14 August 2025. Retrieved 31 October 2025.

- ↑ "Anthropic raises $13B Series F at $183B post-money valuation". Anthropic. 2 September 2025. Retrieved 24 September 2025.

- ↑ "U.S. AI startup Anthropic expands restrictions on Chinese entities". CGTN. September 7, 2025. Retrieved November 11, 2025.

- ↑ Tremayne-Pengelly, Alexandra (September 8, 2025). "Anthropic Is Hiring Researchers to Study A.I. Consciousness and Welfare". Observer. Retrieved November 11, 2025.

- ↑ Euronews & AP (27 August 2025). "Anthropic settles AI copyright lawsuit with book authors". Euronews. Retrieved 18 November 2025.

- ↑ Haranas, Mark (30 September 2025). "Anthropic hires Google Cloud, Unily vet in global push with 'extraordinary' demand for Claude". CRN. Retrieved 31 October 2025.

- ↑ Brandom, Russell (2 October 2025). "Anthropic hires new CTO with focus on AI infrastructure". TechCrunch. Retrieved 2 October 2025.

- ↑ "Deloitte and Anthropic Collaborate to Bring Safe, Reliable and Trusted AI to Commercial and Government Organizations". PR Newswire. July 31, 2024. Retrieved November 3, 2025.

- ↑ "IBM and Anthropic Partner to Advance Enterprise Software Development with Proven Security and Governance". IBM Newsroom. October 7, 2025. Retrieved November 3, 2025.

- ↑ "Anthropic and Salesforce Expand Strategic Partnership to Deliver Trusted AI for Regulated Industries". Anthropic. 2025-10-14. Retrieved November 3, 2025.

- ↑ Sigalos, MacKenzie (October 23, 2025). "Google and Anthropic announce cloud deal worth tens of billions of dollars". CNBC. Retrieved October 30, 2025.

- ↑ Amodei, Dario (October 29, 2025). "Anthropic officially opens Tokyo office, signs Memorandum of Cooperation with the Japan AI Safety Institute". Anthropic. Retrieved October 30, 2025.

- ↑ Deutscher, Maria (October 29, 2025). "AWS opens $11 B Project Rainier data‑center campus built for Anthropic". SiliconANGLE. Retrieved October 30, 2025.

- ↑ "Anthropic Partners with UK Government to Enhance AI Security and Public Services". TipRanks. October 28, 2025. Retrieved November 3, 2025.

- ↑ Park, Dae-jung (9 November 2025). "Claude Creator Anthropic Expands to Seoul: Positioning Korea as Asia's New AI and Startup Growth Hub". KoreaTechDesk. Retrieved 10 November 2025.

- ↑ Emma Thompson (November 10, 2025). "Anthropic opens Paris and Munich offices to scale European operations". EdTech Innovation Hub. Retrieved November 11, 2025.

- ↑ Sanders, Patrick (2025-11-18). "Nvidia and Microsoft Land a Multibillion-Dollar Anthropic Partnership. Which Stock Benefits Most?". Nasdaq. Retrieved 2025-11-19.

- ↑ "Introducing Labs". anthropic.com. Anthropic PBC. 13 January 2026. Retrieved 16 February 2026.

- ↑ "Anthropic raises $30 billion in Series G funding at $380 billion post-money valuation". anthropic.com. Anthropic PBC. 12 February 2026. Retrieved 16 February 2026.

- ↑ "Anthropic opens Bengaluru office and announces new partnerships across India". anthropic.com. Anthropic PBC. 16 February 2026. Retrieved 16 February 2026.

- ↑ "Search interest over time: [Topic Name]". Google Trends. Google. Retrieved 11 November 2025.

- ↑ "Page views for Anthropic". Wikipedia Views. Retrieved November 12, 2025.