Timeline of OpenAI

{{focused coverage period|end-date = December 2024}}
This is a '''timeline of {{w|OpenAI}}''', an {{w|artificial intelligence}} research organization based in the United States. It comprises both a non-profit entity called OpenAI Incorporated and a for-profit subsidiary called OpenAI Limited Partnership. OpenAI's stated goals are to conduct AI research and to advance friendly AI, promoting its development and positive impact.

== Sample questions ==

The following are some interesting questions that can be answered by reading this timeline:

* What are some significant events that preceded the creation of OpenAI?
** Sort the full timeline by "Event type" and look for the group of rows with value "Prelude".
** You will see some events involving key people like {{w|Elon Musk}} and {{w|Sam Altman}} that would eventually lead to the creation of OpenAI.
* What papers and posts has OpenAI published on its research?
** Sort the full timeline by "Event type" and look for the group of rows with value "Research".
** You will see mostly papers submitted to {{w|ArXiv}} by OpenAI-affiliated researchers, as well as blog posts.
* What toolkits, implementations, algorithms, systems, and other software has OpenAI released?
** Sort the full timeline by "Event type" and look for the group of rows with value "Product release".
** You will see a variety of releases, some of them open-sourced.
** You will also see some discoveries and other significant results obtained by OpenAI.
* What product updates are mentioned in the timeline?
** Sort the full timeline by "Event type" and look for the group of rows with value "Product update".
* Which notable team members have joined OpenAI?
** Sort the full timeline by "Event type" and look for the group of rows with value "Team".
** You will see the names of people who joined and their roles.
* What partnerships has OpenAI formed with other organizations?
** Sort the full timeline by "Event type" and look for the group of rows with value "Partnership".
** You will see collaborations with organizations like {{w|DeepMind}} and {{w|Microsoft}}.
* What significant funding have donors granted to OpenAI?
** Sort the full timeline by "Event type" and look for the group of rows with value "Donation".
** You will see names like the {{w|Open Philanthropy Project}} and {{w|Nvidia}}, among others.
* What notable events has OpenAI hosted?
** Sort the full timeline by "Event type" and look for the group of rows with value "Event hosting".
* What other publications has OpenAI produced?
** Sort the full timeline by "Event type" and look for the group of rows with value "Publication".
** You will see a number of publications that do not describe scientific research but serve other purposes, including recommendations and contributions.
* What notable publications have third parties written about OpenAI?
** Sort the full timeline by "Event type" and look for the group of rows with value "Coverage".
* Other events are described under the following types: "Achievement", "Advocacy", "Background", "Collaboration", "Commitment", "Competition", "Congressional hearing", "Education", "Financial", "Integration", "Interview", "Notable comment", "Open sourcing", "Product withdrawal", "Reaction", "Recruitment", "Software adoption", and "Testing".
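Outside the wiki, the same "sort by Event type" lookup can be reproduced on a saved copy of this page's wikitext. The Python sketch below is a minimal illustration, assuming each data row of the full timeline sits on a single line with its five cells separated by "||", as in the timeline table on this page. The helper names parse_timeline_rows and group_by_event_type, and the shortened sample rows, are hypothetical and not part of any existing tool.

```python
from collections import defaultdict

COLUMNS = ("year", "date", "topic", "event_type", "details")  # header order of the full timeline


def parse_timeline_rows(lines):
    """Turn one-line wikitable data rows into dicts keyed by COLUMNS."""
    rows = []
    for raw in lines:
        line = raw.strip()
        # Data rows start with "| "; "{|", "|-", "|}" and "!" header lines are skipped.
        if not line.startswith("| "):
            continue
        cells = [cell.strip() for cell in line[1:].split("||")]
        # Keep only rows with exactly the five expected cells.
        if len(cells) == len(COLUMNS):
            rows.append(dict(zip(COLUMNS, cells)))
    return rows


def group_by_event_type(rows):
    """Mimic sorting the table by "Event type": collect rows per event type."""
    groups = defaultdict(list)
    for row in sorted(rows, key=lambda r: r["event_type"]):
        groups[row["event_type"]].append(row)
    return dict(groups)


sample_lines = [  # hypothetical, shortened rows in the table's format
    "|-",
    "| 2015 || December 11 || OpenAI's mission statement || OpenAI launch || OpenAI is introduced.",
    "|-",
    "| 2016 || January || Ilya Sutskever || Team || Ilya Sutskever joins OpenAI as Research Director.",
    "|-",
    "| 2016 || April 27 || Reinforcement learning || Product release || OpenAI releases OpenAI Gym.",
]

groups = group_by_event_type(parse_timeline_rows(sample_lines))
for event_type, event_rows in groups.items():
    print(event_type, [row["year"] for row in event_rows])
```

Run as is, it prints one line per event type in alphabetical order, ending with Team ['2016']. Because Python's sort is stable, rows within a group keep their original chronological order, much like the sorted wiki table. A row whose text contains an extra "||" would be skipped by this simple parser.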

== Big picture ==
{| class="wikitable"
! Time period (approximately) !! Development summary !! More details
| + | |- | ||

| + | | 2015–2017 || Early years || OpenAI is established as a {{w|nonprofit organization}} with the mission to ensure that {{w|artificial general intelligence}} (AGI) benefits all of humanity. Its co-founders include {{w|Elon Musk}}, {{w|Sam Altman}}, Greg Brockman, Ilya Sutskever, John Schulman, and Wojciech Zaremba. During this period, the organization focuses on foundational artificial intelligence research, publishing influential papers and open-sourcing tools like OpenAI Gym<ref name="Gym">{{cite web |title=OpenAI Gym Beta |url=https://openai.com/index/openai-gym-beta/ |website=openai.com |accessdate=15 December 2024}}</ref>, designed for {{w|reinforcement learning}}. | ||

| + | |- | ||

| + | | 2018–2019 || Growth and expansion || OpenAI broadens its research scope and achieves significant breakthroughs in natural language processing and reinforcement learning. This period sees the introduction of Generative Pre-trained Transformers (GPTs), which are capable of tasks such as text generation and question answering. In 2019, OpenAI transitions to a "capped-profit" model (OpenAI LP) to attract funding, securing a $1 billion investment from {{w|Microsoft}}.<ref>{{cite web |title=Elon Musk Wanted an OpenAI for Profit |url=https://openai.com/index/elon-musk-wanted-an-openai-for-profit/ |website=openai.com |accessdate=15 December 2024}}</ref> This partnership provides access to {{w|Microsoft Azure}} cloud platform for AI training. Other notable developments include the cautious release of GPT-2, due to concerns about potential misuse of its text generation capabilities. | ||

| + | |- | ||

| + | | 2020–2021 || Launch of {{w|GPT-3}} and commercialization || In June 2020, OpenAI releases GPT-3, its most advanced language model at the time, which gains attention for its ability to generate coherent and human-like text. OpenAI introduces an API, enabling developers to integrate GPT-3 into various applications. The organization focuses on ethical AI development and forms partnerships to embed GPT-3 capabilities into tools like {{w|Microsoft Teams}} and {{w|Power Apps}}. In 2021, OpenAI introduces Codex, a specialized model designed to translate natural language into programming code, which powers tools like {{w|GitHub Copilot}}. | ||

| + | |- | ||

| + | | 2022 || Launch of ChatGPT and further advancements || OpenAI launches ChatGPT, based on a fine-tuned version of GPT-3.5, in late 2022.<ref>{{cite web |title=GPT-3.5 vs. GPT-4: Biggest Differences to Consider |url=https://www.techtarget.com/searchenterpriseai/tip/GPT-35-vs-GPT-4-Biggest-differences-to-consider |website=techtarget.com |accessdate=15 December 2024}}</ref> The tool revolutionizes conversational AI by offering practical and accessible applications for both individual and professional users. ChatGPT’s widespread adoption supports the launch of OpenAI’s subscription service, {{w|ChatGPT Plus}}. | ||

| + | |- | ||

| + | | 2023 || GPT-4 and multimodal AI || OpenAI introduces {{w|GPT-4}} in March 2023, marking a significant advancement with its ability to process both text and image inputs.<ref>{{cite web |title=GPT-4 |url=https://openai.com/index/gpt-4/ |website=openai.com |accessdate=15 December 2024}}</ref> The model powers applications like ChatGPT (Pro version), offering enhanced reasoning and problem-solving capabilities. Other key developments include the release of DALL·E 3, an advanced image generation model integrated into ChatGPT, featuring capabilities like inpainting and prompt editing.<ref>{{cite web |title=DALL·E 3 is Now Available in ChatGPT Plus and Enterprise |url=https://openai.com/index/dall-e-3-is-now-available-in-chatgpt-plus-and-enterprise/ |website=openai.com |accessdate=15 December 2024}}</ref> OpenAI’s ongoing emphasis on safety and alignment results in improved measures to mitigate harmful outputs. | ||

| + | |- | ||

| + | | 2024–present || Continued innovation and accessibility || OpenAI focuses on expanding the accessibility of its tools, introducing custom GPTs that enable users to create personalized AI assistants. Voice interaction capabilities are added, enhancing usability for diverse applications. OpenAI strengthens partnerships with educational and governmental institutions to promote AI literacy and responsible AI deployment. The organization continues to prioritize AGI safety, collaborating with other entities to ensure secure advancements in the field of artificial intelligence. | ||

|}

===Summary by year===

{| class="wikitable"
! Year !! Development summary
| + | |- | ||

| + | | 2015 || A group of influential individuals including Sam Altman, Greg Brockman, Reid Hoffman, Jessica Livingston, Peter Thiel, Elon Musk, Amazon Web Services (AWS), Infosys, and YC Research join forces to establish OpenAI. With a commitment of more than $1 billion, the organization expresses a strong dedication to advancing the field of AI for the betterment of humanity. They announce their intention to foster open collaboration by making their work accessible to the public and actively engaging with other institutions and researchers.<ref name="The History of OpenAI"/> | ||

| + | |- | ||

| + | | 2016 || OpenAI breaks from the norm by offering corporate-level salaries instead of the typical nonprofit-level salaries. They also release OpenAI Gym, a platform dedicated to reinforcement learning research. Later in December, they introduced Universe, a platform that facilitates the measurement and training of AI's general intelligence across various games, websites, and applications.<ref name="The History of OpenAI"/> | ||

| + | |- | ||

| + | | 2017 || A significant portion of OpenAI's expenditure is allocated to cloud computing, amounting to $7.9 million. On the other hand, DeepMind's expenses for that particular year soar to $442 million, representing a notable difference.<ref name="The History of OpenAI"/> | ||

| + | |- | ||

| + | | 2018 || OpenAI undergoes a shift in focus towards more extensive research and development in AI. They introduce Generative Pre-trained Transformers (GPTs). These neural networks, inspired by the human brain, are trained on large amounts of human-generated text and could perform tasks like generating and answering questions. In the same year, Elon Musk resigns from his board seat at OpenAI, citing a potential conflict of interest with his role as CEO of Tesla, which is developing AI for self-driving cars.<ref name="The History of OpenAI"/> | ||

|-
| 2019 || OpenAI moves from a purely non-profit structure to a "capped-profit" model, under which investors' returns are capped at 100 times their investment. This allows OpenAI LP to attract investment from venture funds and offer employees equity in the company. OpenAI forms a partnership with Microsoft, which invests $1 billion in the company. OpenAI also announces plans to license its technologies for commercial use. However, some researchers would criticize the shift to a for-profit status, raising concerns about the company's commitment to democratizing AI.<ref>{{cite web |last1=Romero |first1=Alberto |title=OpenAI Sold its Soul for $1 Billion |url=https://onezero.medium.com/openai-sold-its-soul-for-1-billion-cf35ff9e8cd4 |website=OneZero |access-date=1 June 2023 |language=en |date=13 June 2022}}</ref>
| + | |- | ||

| + | | 2020 || OpenAI introduces GPT-3, a language model trained on extensive internet datasets. While its main function is to provide answers in natural language, it can also generate coherent text spontaneously and perform language translation. OpenAI also announces their plans to develop a commercial product centered around an API called "the API," which is closely connected to GPT-3.<ref name="The History of OpenAI"/> | ||

| + | |- | ||

| + | | 2021 || OpenAI introduces DALL-E, an advanced deep-learning model that has the ability to generate digital images by interpreting natural language descriptions.<ref name="The History of OpenAI">{{cite web |last1=O'Neill |first1=Sarah |title=The History of OpenAI |url=https://www.lxahub.com/stories/the-history-of-openai |website=www.lxahub.com |access-date=31 May 2023 |language=en}}</ref> | ||

| + | |- | ||

| + | | 2022 || OpenAI introduces ChatGPT<ref>{{cite web |last1=Bastian |first1=Matthias |title=OpenAI lost $540 million in 2022 developing ChatGPT and GPT-4 - Report |url=https://the-decoder.com/openai-lost-540-million-in-2022-developing-chatgpt-and-gpt-4-report/ |website=THE DECODER |access-date=31 May 2023 |date=5 May 2023}}</ref> which soon would become the fastest-growing app of all time.<ref>{{cite web |title=What is ChatGPT and why does it matter? Here's what you need to know |url=https://www.zdnet.com/article/what-is-chatgpt-and-why-does-it-matter-heres-everything-you-need-to-know/ |website=ZDNET |access-date=22 June 2023 |language=en}}</ref> | ||

| + | |- | ||

| + | | 2023 || OpenAI releases GPT-4 to ChatGPT Plus, marking a major AI advancement. The organization faces internal turmoil with CEO Sam Altman's brief dismissal. OpenAI transitions to a for-profit model to attract investment, particularly from Microsoft, while continuing its pursuit of artificial general intelligence (AGI). | ||

| + | |- | ||

| + | | 2024 || OpenAI continues to advance AI technologies, including further developments of GPT-4. The organization focuses on ethical AI deployment, emphasizing safety and transparency. Collaborations, particularly with Microsoft, strengthen its resources for progressing toward artificial general intelligence (AGI). OpenAI faces ongoing discussions about governance and AI's societal impact. | ||

| + | |- | ||

|} | |} | ||
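The 100x cap mentioned in the 2019 row can be made concrete with a little arithmetic. The Python sketch below is a simplification, assuming the cap is a plain multiple of the amount invested; the function name split_capped_return and the dollar figures are hypothetical, and OpenAI LP's actual terms may differ in detail.

```python
def split_capped_return(investment, gross_return, cap_multiple=100):
    """Split a hypothetical gross return into the part an investor may keep
    under a simple capped-profit rule and the part above the cap."""
    if investment < 0 or gross_return < 0:
        raise ValueError("amounts must be non-negative")
    cap = cap_multiple * investment  # maximum total payout to the investor
    to_investor = min(gross_return, cap)
    above_cap = gross_return - to_investor  # amount the investor does not receive
    return to_investor, above_cap


investor_share, excess = split_capped_return(10_000_000, 1_500_000_000)
print(f"investor: ${investor_share:,}; above the cap: ${excess:,}")
```

With a hypothetical $10 million investment and $1.5 billion in gross returns, the investor's payout stops at $1 billion and the remaining $500 million falls above the cap; when returns stay below 100x, the investor receives everything.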

==Full timeline==

=== Inclusion criteria ===

The following events are selected for inclusion in the timeline:

* Most blog posts by OpenAI, many describing important advancements in its research.
* Product releases, including models and software in general.
* Partnerships.

We do ''not'' include:

* Comprehensive information on staff arrivals and departures at OpenAI.
* Many of OpenAI's research papers, which are not individually listed on the full timeline but can be found on the talk page as additional entries.

=== Timeline ===

{| class="sortable wikitable"
! Year !! Month and date !! Domain/key topic/caption !! Event type !! Details
| + | |- | ||

| + | | 2014 || {{dts|October 22}}–24 || AI's existential threat || Prelude || During an interview at the AeroAstro Centennial Symposium, {{W|Elon Musk}}, who would later become co-chair of OpenAI, calls artificial intelligence humanity's "biggest existential threat".<ref>{{cite web |url=https://www.theguardian.com/technology/2014/oct/27/elon-musk-artificial-intelligence-ai-biggest-existential-threat |author=Samuel Gibbs |date=October 27, 2014 |title=Elon Musk: artificial intelligence is our biggest existential threat |publisher=[[w:The Guardian|The Guardian]] |accessdate=July 25, 2017}}</ref><ref>{{cite web |url=http://webcast.amps.ms.mit.edu/fall2014/AeroAstro/index-Fri-PM.html |title=AeroAstro Centennial Webcast |accessdate=July 25, 2017 |quote=The high point of the MIT Aeronautics and Astronautics Department's 2014 Centennial celebration is the October 22-24 Centennial Symposium}}</ref> | ||

| + | |- | ||

| + | | 2015 || {{dts|February 25}} || Superhuman AI threat || Prelude || {{w|Sam Altman}}, president of [[w:Y Combinator (company)|Y Combinator]] who would later become a co-chair of OpenAI, publishes a blog post in which he writes that the development of superhuman AI is "probably the greatest threat to the continued existence of humanity".<ref>{{cite web |url=http://blog.samaltman.com/machine-intelligence-part-1 |title=Machine intelligence, part 1 |publisher=Sam Altman |accessdate=July 27, 2017}}</ref> | ||

| + | |- | ||

| + | | 2015 || {{dts|May 6}} || Greg Brockman leaves Stripe || Prelude || Greg Brockman, who would become CTO of OpenAI, announces in a blog post that he is leaving his role as CTO of [[wikipedia:Stripe (company)|Stripe]]. In the post, in the section "What comes next" he writes "I haven't decided exactly what I'll be building (feel free to ping if you want to chat)".<ref>{{cite web |url=https://blog.gregbrockman.com/leaving-stripe |title=Leaving Stripe |first=Greg |last=Brockman |publisher=Greg Brockman on Svbtle |date=May 6, 2015 |accessdate=May 6, 2018}}</ref><ref>{{cite web |url=http://www.businessinsider.com/stripes-cto-greg-brockman-is-leaving-the-company-2015-5 |date=May 6, 2015 |first=Biz |last=Carson |title=One of the first employees of $3.5 billion startup Stripe is leaving to form his own company |publisher=Business Insider |accessdate=May 6, 2018}}</ref> | ||

| + | |- | ||

| + | | 2015 || {{dts|June 4}} || Altman's AI safety concern || Prelude || At {{w|Airbnb}}'s Open Air 2015 conference, {{w|Sam Altman}}, president of [[w:Y Combinator (company)|Y Combinator]] who would later become a co-chair of OpenAI, states his concern for advanced artificial intelligence and shares that he recently invested in a company doing AI safety research.<ref>{{cite web |url=http://www.businessinsider.com/sam-altman-y-combinator-talks-mega-bubble-nuclear-power-and-more-2015-6 |author=Matt Weinberger |date=June 4, 2015 |title=Head of Silicon Valley's most important startup farm says we're in a 'mega bubble' that won't last |publisher=Business Insider |accessdate=July 27, 2017}}</ref> | ||

| + | |- | ||

| + | | 2015 || {{dts|July}} (approximate) || AI research dinner || Prelude || {{W|Sam Altman}} sets up a dinner in {{W|Menlo Park, California}} to talk about starting an organization to do AI research. Attendees include Greg Brockman, Dario Amodei, Chris Olah, Paul Christiano, {{W|Ilya Sutskever}}, and {{W|Elon Musk}}.<ref name="path-to-OpenAI">{{cite web |url=https://blog.gregbrockman.com/my-path-to-OpenAI |title=My path to OpenAI |date=May 3, 2016 |publisher=Greg Brockman on Svbtle |archive-url = https://web.archive.org/web/20240228183857/https://blog.gregbrockman.com/my-path-to-OpenAI|archive-date = February 28, 2024}}</ref> | ||

| + | |- | ||

| + | | 2015 || Late year || Musk's $1 billion proposal || Funding || In their foundational phase, OpenAI co-founders Greg Brockman and Sam Altman initially aim to raise $100 million to launch its initiatives focused on developing artificial general intelligence (AGI). Recognizing the ambitious scope of the project, Elon Musk suggests a significantly larger funding goal of $1 billion to ensure the project’s viability. He expresses willingness to cover any funding shortfall.<ref name="index">{{cite web |title=OpenAI and Elon Musk |url=https://openai.com/index/openai-elon-musk/ |website=OpenAI |accessdate=29 September 2024}}</ref> | ||

| + | |- | ||

| + | | 2015 || {{dts|December 11}} || OpenAI's mission statement || OpenAI launch || {{w|OpenAI}} is introduced as a non-profit artificial intelligence research organization dedicated to advancing digital intelligence for the benefit of humanity, without the constraints of financial returns. OpenAI expresses aim to ensure that AI acts as an extension of individual human will and is broadly accessible. The organization recognizes the potential risks and benefits of achieving human-level AI.<ref>{{cite web |title=Introducing OpenAI |url=https://openai.com/index/introducing-openai/ |website=OpenAI |accessdate=29 September 2024}}</ref> | ||

| + | |- | ||

| + | | 2015 || {{dts|December}} || {{w|Wikipedia}} || Coverage || The article "{{w|OpenAI}}" is created on {{w|Wikipedia}}.<ref>{{cite web |title=OpenAI: Revision history |url=https://en.wikipedia.org/w/index.php?title=OpenAI&dir=prev&action=history |website=wikipedia.org |accessdate=6 April 2020}}</ref> | ||

| + | |- | ||

| + | | 2015 || {{dts|December}} || || Team || OpenAI announces {{w|Y Combinator}} founding partner {{w|Jessica Livingston}} as one of its financial backers.<ref>{{cite web |url=https://www.forbes.com/sites/theopriestley/2015/12/11/elon-musk-and-peter-thiel-launch-OpenAI-a-non-profit-artificial-intelligence-research-company/ |title=Elon Musk And Peter Thiel Launch OpenAI, A Non-Profit Artificial Intelligence Research Company |first1=Theo |last1=Priestly |date=December 11, 2015 |publisher=''{{w|Forbes}}'' |access-date=8 July 2019 }}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|January}} || {{W|Ilya Sutskever}} || Team || {{W|Ilya Sutskever}} joins OpenAI as Research Director.<ref>{{cite web |url=https://aiwatch.issarice.com/?person=Ilya+Sutskever |date=April 8, 2018 |title=Ilya Sutskever |publisher=AI Watch |accessdate=May 6, 2018}}</ref><ref name="orgwatch.issarice.com">{{cite web |title=Information for OpenAI |url=https://orgwatch.issarice.com/?organization=OpenAI |website=orgwatch.issarice.com |accessdate=5 May 2020}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|January 9}} || AMA || Education || The OpenAI research team does an AMA ("ask me anything") on r/MachineLearning, the subreddit dedicated to machine learning.<ref>{{cite web |url=https://www.reddit.com/r/MachineLearning/comments/404r9m/ama_the_OpenAI_research_team/ |publisher=reddit |title=AMA: the OpenAI Research Team • r/MachineLearning |accessdate=May 5, 2018}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|February 25}} || {{w|Deep learning}}, {{w|neural networks}} || Research || OpenAI introduces weight normalization as a technique that improves the training of deep {{w|neural network}}s by decoupling the length and direction of weight vectors. It enhances optimization and speeds up convergence without introducing dependencies between examples in a minibatch. This method is effective for recurrent models and noise-sensitive applications, providing a significant speed-up similar to batch normalization but with lower computational overhead. Applications in {{w|image recognition}}, {{w|generative model}}ing, and deep {{w|reinforcement learning}} demonstrate the effectiveness of weight normalization.<ref>{{cite web |last1=Salimans |first1=Tim |last2=Kingma |first2=Diederik P. |title=Weight Normalization: A Simple Reparameterization to Accelerate Training of Deep Neural Networks |url=https://arxiv.org/abs/1602.07868 |website=arxiv.org |accessdate=27 March 2020}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|March 31}} || {{W|Ian Goodfellow}} || Team || A blog post from this day announces that {{W|Ian Goodfellow}} has joined OpenAI.<ref>{{cite web |url=https://blog.OpenAI.com/team-plus-plus/ |publisher=OpenAI Blog |title=Team++ |date=March 22, 2017 |first=Greg |last=Brockman |accessdate=May 6, 2018}}</ref> Previously, Goodfellow worked as Senior Research Scientist at {{w|Google}}.<ref>{{cite web |title=Ian Goodfellow |url=https://www.linkedin.com/in/ian-goodfellow-b7187213/ |website=linkedin.com |accessdate=24 April 2020}}</ref><ref name="orgwatch.issarice.com"/> | ||

| + | |- | ||

| + | | 2016 || {{Dts|April 26}} || {{w|Robotics}} || Team || {{w|Pieter Abbeel}} joins OpenAI.<ref>{{cite web |url=https://blog.OpenAI.com/welcome-pieter-and-shivon/ |publisher=OpenAI Blog |title=Welcome, Pieter and Shivon! |date=March 20, 2017 |first=Ilya |last=Sutskever |accessdate=May 6, 2018}}</ref><ref name="orgwatch.issarice.com"/> His work focuses on robot learning, reinforcement learning, and unsupervised learning. A cutting-edge researcher, Abbeel robots would learn various tasks, including locomotion and vision-based robotic manipulation.<ref>{{cite web |title=Pieter Abbeel AI Speaker |url=https://www.aurumbureau.com/speaker/pieter-abbeel/ |website=Aurum Speakers Bureau |access-date=13 June 2023}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|April 27}} || {{w|Reinforcement learning}} || Product release || OpenAI releases OpenAI Gym, a toolkit for reinforcement learning (RL) algorithms. It offers various environments for developing and comparing RL algorithms, with compatibility across different [[w:Software framework|frameworks]]. RL enables agents to learn {{w|decision-making}} and {{w|motor control}} in complex environments. OpenAI Gym addresses the need for diverse {{w|benchmark}}s and standardized environments in RL research. The toolkit encourages feedback and collaboration to enhance its capabilities.<ref>{{cite web |url=https://blog.OpenAI.com/OpenAI-gym-beta/ |publisher=OpenAI Blog |title=OpenAI Gym Beta |date=March 20, 2017 |accessdate=March 2, 2018}}</ref><ref>{{cite web |url=https://www.wired.com/2016/04/OpenAI-elon-musk-sam-altman-plan-to-set-artificial-intelligence-free/ |title=Inside OpenAI, Elon Musk's Wild Plan to Set Artificial Intelligence Free |date=April 27, 2016 |publisher=[[wikipedia:WIRED|WIRED]] |accessdate=March 2, 2018 |quote=This morning, OpenAI will release its first batch of AI software, a toolkit for building artificially intelligent systems by way of a technology called "reinforcement learning"}}</ref><ref>{{cite web |url=http://www.businessinsider.com/OpenAI-has-launched-a-gym-where-developers-can-train-their-computers-2016-4?op=1 |first=Sam |last=Shead |date=April 28, 2016 |title=Elon Musk's $1 billion AI company launches a 'gym' where developers train their computers |publisher=Business Insider |accessdate=March 3, 2018}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|May 25}} || {{w|Natural language processing}} || Research || OpenAI-affiliated researchers publish a paper introducing an extension of adversarial training and virtual adversarial training for text classification tasks. [[w:Adversarial machine learning|Adversarial training]] is a regularization technique for {{w|supervised learning}}, while virtual adversarial training extends it to [[w:Weak supervision|semi-supervised learning]]. However, these methods require perturbing multiple entries of the input vector, which is not suitable for sparse high-dimensional inputs like one-hot word representations in text. In this paper, the authors propose applying perturbations to word embeddings in a {{w|recurrent neural network}} (RNN) instead of the original input. This text-specific approach achieves [[w:State of the art|state-of-the-art]] results on multiple benchmark tasks for both [[w:Weak supervision|semi-supervised]] and purely {{w|supervised learning}}. The authors provide visualizations and analysis demonstrating the improved quality of the learned word embeddings and the reduced overfitting during training.<ref>{{cite journal |last1=Miyato |first1=Takeru |last2=Dai |first2=Andrew M. |last3=Goodfellow |first3=Ian |title=Adversarial Training Methods for Semi-Supervised Text Classification |date=2016 |doi=10.48550/arXiv.1605.07725}}</ref> | ||

| + | |- | ||

| + | | 2016 || June 16 || {{w|Generative model}}s || Research || OpenAI publishes post introducing the concept of generative models, which are a type of {{w|unsupervised learning}} technique in {{w|machine learning}}. The post emphasizes the importance and potential of generative models in understanding and replicating complex {{w|data set}}s, and it showcases recent advancements in this field. Generative models aim to understand and replicate the patterns and features present in a given dataset. The post discusses the use of generative models in generating images, particularly with the example of the DCGAN network. It explains the training process of generative models, including the use of {{w|Generative Adversarial Network}}s (GANs) and other approaches. The post highlights three popular types of generative models: Generative Adversarial Networks (GAN), Variational Autoencoders (VAEs), and {{w|autoregressive model}}s. Each of these approaches has its own strengths and limitations. The post also mentions recent advancements in generative models, including improvements to GANs, VAEs, and the introduction of InfoGAN. The last part briefly mentions two projects related to generative models in the context of reinforcement learning. One project focuses on curiosity-driven exploration using [[w:Bayesian inference|Bayesian]] {{w|neural network}}s. The other project explores generative models in reinforcement learning for training agents.<ref>{{cite web |title=Generative models |url=https://openai.com/research/generative-models |website=openai.com |access-date=7 June 2023}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|June 21}} || {{w|AI safety}} || Research || OpenAI-affiliated researchers publish a paper addressing the potential impact of accidents in {{w|machine learning}} systems. They outline five practical research problems related to accident risk, categorized based on the origin of the problem. These categories include having the wrong objective function, an objective function that is too expensive to evaluate frequently, and undesirable behavior during the learning process. The authors review existing work in these areas and propose research directions relevant to cutting-edge AI systems. They also discuss how to approach the safety of future AI applications effectively.<ref>{{cite web |url=https://arxiv.org/abs/1606.06565 |title=[1606.06565] Concrete Problems in AI Safety |date=June 21, 2016 |accessdate=July 25, 2017}}</ref><ref>{{cite web|url = https://www.openphilanthropy.org/blog/concrete-problems-ai-safety|title = Concrete Problems in AI Safety|last = Karnofsky|first = Holden|date = June 23, 2016|accessdate = April 18, 2020}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{Dts|July}} || Dario Amodei joins OpenAI || Team || Dario Amodei joins OpenAI<ref>{{cite web |url=https://www.crunchbase.com/person/dario-amodei |title=Dario Amodei - Research Scientist @ OpenAI |publisher=Crunchbase |accessdate=May 6, 2018}}</ref>, working on the Team Lead for AI Safety.<ref name="Dario Amodeiy"/><ref name="orgwatch.issarice.com"/> | ||

| + | |- | ||

| + | | 2016 || {{dts|July 28}} || Security and adversarial AI, {{w|automated programming}}, {{w|cybersecurity}}, {{w|multi-agent system}}s, {{w|simulation}} || Recruitment || OpenAI publishes post calling for applicants to work in significant problems in AI that have a meaningful impact. They list several problem areas that they believe are crucial for advancing AI and its implications for society. The first problem area mentioned is detecting covert breakthrough AI systems being used by organizations for potentially malicious purposes. OpenAI emphasizes the need to develop methods to identify such undisclosed AI breakthroughs, which could be achieved through various means like monitoring news, {{w|financial market}}s, and {{w|online game}}s. Another area of interest is building an agent capable of winning online [[w:Competitive programming|programming competitions]]. OpenAI recognizes the power of a program that can generate other programs, and they see the development of such an agent as highly valuable. Additionally, OpenAI highlights the significance of cyber-security defense. They stress the need for AI techniques to defend against sophisticated hackers who may exploit AI methods to break into computer systems. Lastly, OpenAI expresses interest in constructing a complex simulation with multiple long-lived agents. Their aim is to create a large-scale simulation where agents can interact, learn over an extended period, develop language, and achieve diverse goals.<ref>{{cite web |title=Special Projects |url=https://openai.com/blog/special-projects/ |website=openai.com |accessdate=5 April 2020}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|August 15}} || AI Research || Donation || American multinational technology company {{W|Nvidia}} announces that it has donated the first {{W|Nvidia DGX-1}} (a supercomputer) to OpenAI, which plans to use the supercomputer to train its AI on a corpus of conversations from {{W|Reddit}}. The DGX-1 supercomputer is expected to enable OpenAI to explore new problems and achieve higher levels of performance in AI research.<ref>{{cite web |url=https://blogs.nvidia.com/blog/2016/08/15/first-ai-supercomputer-OpenAI-elon-musk-deep-learning/ |title=NVIDIA Brings DGX-1 AI Supercomputer in a Box to OpenAI |publisher=The Official NVIDIA Blog |date=August 15, 2016 |accessdate=May 5, 2018}}</ref><ref>{{cite web |url=http://fortune.com/2016/08/15/elon-musk-artificial-intelligence-OpenAI-nvidia-supercomputer/ |title=Nvidia Just Gave A Supercomputer to Elon Musk-backed Artificial Intelligence Group |publisher=Fortune |first=Jonathan |last=Vanian |date=August 15, 2016 |accessdate=May 5, 2018}}</ref><ref>{{cite web |url=https://futurism.com/elon-musks-OpenAI-is-using-reddit-to-teach-an-artificial-intelligence-how-to-speak/ |date=August 17, 2016 |title=Elon Musk's OpenAI is Using Reddit to Teach An Artificial Intelligence How to Speak |first=Cecille |last=De Jesus |publisher=Futurism |accessdate=May 5, 2018}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|August 29}} || Infrastructure || Research || OpenAI publishes an article on the infrastructure needed for {{w|deep learning}}. The article explains that research typically begins with small {{w|ad-hoc}} experiments that must be run quickly, so deep learning infrastructure must be flexible and let users analyze their models effectively. As a model is scaled up, experiment management becomes critical. The article illustrates this with an example of improving {{w|Generative Adversarial Network}} training, from a prototype on the [[w:MNIST database|MNIST]] and {{w|CIFAR-10}} datasets to a larger model on the ImageNet dataset. It also covers the software and hardware used for deep learning, such as [[w:Python (programming language)|Python]], {{w|TensorFlow}}, and high-end GPUs, and emphasizes the importance of simple, usable infrastructure management tools.<ref>{{cite web |title=Infrastructure for Deep Learning |url=https://openai.com/blog/infrastructure-for-deep-learning/ |website=openai.com |accessdate=28 March 2020}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|October 11}} || {{w|Robotics}} || Research || OpenAI-affiliated researchers publish a paper addressing the challenge of transferring control policies from {{w|simulation}} to the real world. The authors propose a method that leverages the similarity in state sequences between simulation and reality. Instead of directly executing simulation-based controls on a robot, they predict the expected next states using a deep inverse dynamics model and determine suitable real-world actions. They also introduce a data collection approach to improve the model's performance. Experimental results demonstrate the effectiveness of their approach compared to existing methods for addressing simulation-to-real-world discrepancies.<ref>{{cite web |last1=Christiano |first1=Paul |last2=Shah |first2=Zain |last3=Mordatch |first3=Igor |last4=Schneider |first4=Jonas |last5=Blackwell |first5=Trevor |last6=Tobin |first6=Joshua |last7=Abbeel |first7=Pieter |last8=Zaremba |first8=Wojciech |title=Transfer from Simulation to Real World through Learning Deep Inverse Dynamics Model |url=https://arxiv.org/abs/1610.03518 |website=arxiv.org |accessdate=28 March 2020}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|October 18}} || Safety || Research || OpenAI-affiliated researchers publish a paper presenting a method called Private Aggregation of Teacher Ensembles (PATE) to address the privacy concerns that arise when machine learning applications are trained on sensitive data. The approach trains multiple models, referred to as "teachers", on disjoint subsets of the sensitive data. The teachers are not published. Instead, they are used to train a "student" model, which learns to predict outputs through noisy voting among the teachers and has no direct access to any individual teacher or its data. The student's privacy guarantees are stated formally in terms of {{w|differential privacy}} and hold even when an adversary can inspect the student's internal workings. The method applies to any model, including non-convex models such as [[w:Deep learning|deep neural networks]] (DNNs), and achieves state-of-the-art privacy/utility trade-offs on the MNIST and Street View House Numbers (SVHN) datasets. The approach combines an improved privacy analysis with semi-supervised learning.<ref>{{cite web |last1=Papernot |first1=Nicolas |last2=Abadi |first2=Martín |last3=Erlingsson |first3=Úlfar |last4=Goodfellow |first4=Ian |last5=Talwar |first5=Kunal |title=Semi-supervised Knowledge Transfer for Deep Learning from Private Training Data |url=https://arxiv.org/abs/1610.05755 |website=arxiv.org |accessdate=28 March 2020}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|November 14}} || Generative models || Research || OpenAI-affiliated researchers publish a paper on the challenges of quantitatively analyzing decoder-based {{w|generative model}}s, which have made remarkable progress in generating realistic samples of various modalities, including images. These models rely on a decoder network, a deep neural network that defines a generative distribution. Evaluating such models is difficult because their log-likelihoods are intractable to compute exactly. The authors propose using annealed importance sampling (AIS) to estimate log-likelihoods, validate its accuracy using bidirectional Monte Carlo, and release the evaluation code. Using this technique, they examine the performance of decoder-based models, the effectiveness of existing log-likelihood estimators, the degree of overfitting, and how well the models capture important modes of the data distribution.<ref>{{cite web |last1=Wu |first1=Yuhuai |last2=Burda |first2=Yuri |last3=Salakhutdinov |first3=Ruslan |last4=Grosse |first4=Roger |title=On the Quantitative Analysis of Decoder-Based Generative Models |url=https://arxiv.org/abs/1611.04273 |website=arxiv.org |accessdate=28 March 2020}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|November 15}} || {{w|Cloud computing}} || Partnership || Microsoft's artificial intelligence research division partners with OpenAI. Through this collaboration, OpenAI gains access to Microsoft's virtual machine technology for AI training and simulation, while Microsoft benefits from having cutting-edge research run on its [[w:Microsoft Azure|Azure]] cloud platform. Microsoft sees the partnership as an opportunity to advance machine intelligence research on Azure and to attract other AI companies to the platform. The collaboration aligns with Microsoft's goal of competing with Google and Facebook in AI and strengthening its position as a central player in the industry.<ref>{{cite web |url=https://www.theverge.com/2016/11/15/13639904/microsoft-OpenAI-ai-partnership-elon-musk-sam-altman |date=November 15, 2016 |publisher=The Verge |first=Nick |last=Statt |title=Microsoft is partnering with Elon Musk's OpenAI to protect humanity's best interests |accessdate=March 2, 2018}}</ref><ref>{{cite web |url=https://www.wired.com/2016/11/next-battles-clouds-ai-chips/ |title=The Next Big Front in the Battle of the Clouds Is AI Chips. And Microsoft Just Scored a Win |publisher=[[wikipedia:WIRED|WIRED]] |first=Cade |last=Metz |accessdate=March 2, 2018 |quote=According to Altman and Harry Shum, head of Microsoft new AI and research group, OpenAI's use of Azure is part of a larger partnership between the two companies. In the future, Altman and Shum tell WIRED, the two companies may also collaborate on research. "We're exploring a couple of specific projects," Altman says. "I'm assuming something will happen there." That too will require some serious hardware.}}</ref> | ||

| + | |- | ||

| + | | 2016 || {{dts|December 5}} || Reinforcement learning || Product release || OpenAI releases Universe, a software platform for training and measuring AI agents across video games, applications, and websites. The goal is to accelerate the development of general intelligence that can excel at many tasks. Universe provides a wide range of environments, including {{w|Atari 2600}} games, flash games, web browsers, and [[w:Computer-aided design|CAD software]]. Using reinforcement learning, which optimizes behavior through rewards, agents in Universe can learn tasks such as playing games and browsing the web. The platform's breadth and real-world applicability make it useful for benchmarking AI performance, with the potential to advance AI capabilities beyond those of assistants like Siri or Google Assistant.<ref>{{cite web |url=https://github.com/OpenAI/universe |accessdate=March 1, 2018 |publisher=GitHub |title=universe}}</ref><ref>{{cite web |url=https://techcrunch.com/2016/12/05/OpenAIs-universe-is-the-fun-parent-every-artificial-intelligence-deserves/ |date=December 5, 2016 |publisher=TechCrunch |title=OpenAI's Universe is the fun parent every artificial intelligence deserves |author=John Mannes |accessdate=March 2, 2018}}</ref><ref>{{cite web |url=https://www.wired.com/2016/12/OpenAIs-universe-computers-learn-use-apps-like-humans/ |title=Elon Musk's Lab Wants to Teach Computers to Use Apps Just Like Humans Do |publisher=[[wikipedia:WIRED|WIRED]] |accessdate=March 2, 2018}}</ref><ref>{{cite web |url=https://news.ycombinator.com/item?id=13103742 |title=OpenAI Universe |website=Hacker News |accessdate=May 5, 2018}}</ref> | ||

| + | |- | ||

| + | | 2017 || Early year || Recognition of resource needs || Funding || OpenAI realizes that building {{w|artificial general intelligence}} would require significantly more resources than previously anticipated. The organization begins evaluating the vast computational power needed for AGI development, acknowledging that billions of dollars in annual funding would be necessary.<ref name="index"/> | ||

| + | |- | ||

| + | | 2017 || {{dts|January}} || Paul Christiano joins OpenAI || Team || Paul Christiano joins OpenAI to work on AI alignment.<ref>{{cite web |url=https://paulfchristiano.com/ai/ |title=AI Alignment |date=May 13, 2017 |publisher=Paul Christiano |accessdate=May 6, 2018}}</ref> He had previously interned at OpenAI in 2016.<ref>{{cite web |url=https://blog.openai.com/team-update/ |publisher=OpenAI Blog |title=Team Update |date=March 22, 2017 |accessdate=May 6, 2018}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|March}} || AI governance, philanthropy || Donation || The Open Philanthropy Project awards a grant of $30 million to {{w|OpenAI}} for general support.<ref name="donations-portal-open-phil-ai-risk">{{cite web |url=https://donations.vipulnaik.com/donor.php?donor=Open+Philanthropy+Project&cause_area_filter=AI+safety |title=Open Philanthropy Project donations made (filtered to cause areas matching AI safety) |accessdate=July 27, 2017}}</ref> The grant initiates a partnership between Open Philanthropy Project and OpenAI, in which {{W|Holden Karnofsky}} (executive director of Open Philanthropy Project) joins OpenAI's board of directors to oversee OpenAI's safety and governance work.<ref>{{cite web |url=https://www.openphilanthropy.org/focus/global-catastrophic-risks/potential-risks-advanced-artificial-intelligence/OpenAI-general-support |publisher=Open Philanthropy Project |title=OpenAI — General Support |date=December 15, 2017 |accessdate=May 6, 2018}}</ref> The grant is criticized by {{W|Maciej Cegłowski}}<ref>{{cite web |url=https://twitter.com/Pinboard/status/848009582492360704 |title=Pinboard on Twitter |publisher=Twitter |accessdate=May 8, 2018 |quote=What the actual fuck… “Open Philanthropy” dude gives a $30M grant to his roommate / future brother-in-law. 
Trumpy!}}</ref> and Benjamin Hoffman (who would write the blog post "OpenAI makes humanity less safe")<ref>{{cite web |url=http://benjaminrosshoffman.com/OpenAI-makes-humanity-less-safe/ |title=OpenAI makes humanity less safe |date=April 13, 2017 |publisher=Compass Rose |accessdate=May 6, 2018}}</ref><ref>{{cite web |url=https://www.lesswrong.com/posts/Nqn2tkAHbejXTDKuW/OpenAI-makes-humanity-less-safe |title=OpenAI makes humanity less safe |accessdate=May 6, 2018 |publisher=[[wikipedia:LessWrong|LessWrong]]}}</ref><ref>{{cite web |url=https://donations.vipulnaik.com/donee.php?donee=OpenAI |title=OpenAI donations received |accessdate=May 6, 2018}}</ref> among others.<ref>{{cite web |url=https://www.facebook.com/vipulnaik.r/posts/10211478311489366 |title=I'm having a hard time understanding the rationale... |accessdate=May 8, 2018 |first=Vipul |last=Naik}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|March 24}} || {{w|Reinforcement learning}} || Research || OpenAI publishes a blog post presenting evolution strategies (ES) as a viable alternative to {{w|reinforcement learning}} (RL) techniques. The post reports that ES, a well-known optimization technique, performs on par with RL on modern RL benchmarks such as {{w|Atari}} and MuJoCo while avoiding several of RL's difficulties. ES is simpler to implement, does not require {{w|backpropagation}}, scales well in a distributed setting, handles sparse rewards effectively, and has fewer hyperparameters. The authors compare this finding to earlier cases where old ideas achieved significant results, such as the success of {{w|convolutional neural network}}s (CNNs) in image recognition and the combination of Q-learning with CNNs to play Atari games. Their ES implementation proves efficient, training a 3D MuJoCo humanoid walker in just 10 minutes on a [[w:Computer cluster|computing cluster]]. The post gives a brief overview of conventional RL, compares it with ES, discusses the tradeoffs between the two approaches, and presents experimental results supporting the effectiveness of ES.<ref>{{cite web |title=Evolution Strategies as a Scalable Alternative to Reinforcement Learning |url=https://openai.com/blog/evolution-strategies/ |website=openai.com |accessdate=5 April 2020}}</ref><ref>{{cite web |last1=Juliani |first1=Arthur |title=Reinforcement Learning or Evolutionary Strategies? Nature has a solution: Both. |url=https://medium.com/beyond-intelligence/reinforcement-learning-or-evolutionary-strategies-nature-has-a-solution-both-8bc80db539b3 |website=Beyond Intelligence |access-date=25 June 2023 |language=en |date=29 May 2017}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|March}} || {{w|Artificial general intelligence}} || Reorganization || Greg Brockman and a few other core members of OpenAI begin drafting an internal document to lay out a path to {{w|artificial general intelligence}}. As the team studies trends within the field, they realize staying a nonprofit is financially untenable.<ref name="technologyreview.comñ">{{cite web |title=The messy, secretive reality behind OpenAI’s bid to save the world |url=https://www.technologyreview.com/s/615181/ai-OpenAI-moonshot-elon-musk-sam-altman-greg-brockman-messy-secretive-reality/ |website=technologyreview.com |accessdate=28 February 2020}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|April}} || OpenAI history || Coverage || An article by Brent Simoneaux and Casey Stegman is published, offering insights into the early days of OpenAI and the people who shaped the organization. The article opens by dispelling the notion that OpenAI's office is filled with futuristic gadgets and experiments, describing instead a typical tech startup environment with desks, laptops, and bean bag chairs, plus a small robot tucked away in a side room. OpenAI cofounders Greg Brockman and Ilya Sutskever were inspired to establish the organization after a 2015 dinner conversation with tech entrepreneur Sam Altman and Elon Musk, at which they discussed building safe and beneficial AI and decided to create a nonprofit organization.<ref>{{cite web |url=https://www.redhat.com/en/open-source-stories/ai-revolutionaries/people-behind-OpenAI |title=Open Source Stories: The People Behind OpenAI |accessdate=May 5, 2018 |first1=Brent |last1=Simoneaux |first2=Casey |last2=Stegman}} In the HTML source, last-publish-date is shown as Tue, 25 Apr 2017 04:00:00 GMT as of 2018-05-05.</ref><ref>{{cite web |url=https://www.reddit.com/r/OpenAI/comments/63xr4p/profile_of_the_people_behind_OpenAI/ |publisher=reddit |title=Profile of the people behind OpenAI • r/OpenAI |date=April 7, 2017 |accessdate=May 5, 2018}}</ref><ref>{{cite web |url=https://news.ycombinator.com/item?id=14832524 |title=The People Behind OpenAI |website=Hacker News |accessdate=May 5, 2018 |date=July 23, 2017}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|April 6}} || {{w|Sentiment analysis}} || Product release || OpenAI unveils an unsupervised system that performs {{w|sentiment analysis}} with high accuracy, despite being trained only to predict the next character in the text of Amazon reviews.<ref>{{cite web |title=Unsupervised Sentiment Neuron |url=https://openai.com/blog/unsupervised-sentiment-neuron/ |website=openai.com |accessdate=5 April 2020}}</ref><ref>{{cite web |url=https://techcrunch.com/2017/04/07/OpenAI-sets-benchmark-for-sentiment-analysis-using-an-efficient-mlstm/ |date=April 7, 2017 |publisher=TechCrunch |title=OpenAI sets benchmark for sentiment analysis using an efficient mLSTM |author=John Mannes |accessdate=March 2, 2018}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|April 6}} || {{w|Generative model}}s || Research || OpenAI-affiliated researchers publish a paper exploring the capabilities of byte-level recurrent language models. When trained at scale, these models learn disentangled features that represent high-level concepts. Notably, the researchers find a single unit within the model that effectively performs {{w|sentiment analysis}}. The representations, learned without supervision, achieve state-of-the-art results on the binary subset of the Stanford Sentiment Treebank dataset. The approach is also highly data-efficient: with only a handful of labeled examples, it matches the performance of strong baselines trained on full datasets. Additionally, the researchers show that the sentiment unit influences the generative process, so that fixing its value produces samples with positive or negative sentiment.<ref>{{cite journal |last1=Radford |first1=Alec |last2=Jozefowicz |first2=Rafal |last3=Sutskever |first3=Ilya |title=Learning to Generate Reviews and Discovering Sentiment |date=2017 |doi=10.48550/arXiv.1704.01444}}</ref><ref>{{cite web |url=https://techcrunch.com/2017/04/07/openai-sets-benchmark-for-sentiment-analysis-using-an-efficient-mlstm/ |date=April 7, 2017 |publisher=TechCrunch |title=OpenAI sets benchmark for sentiment analysis using an efficient mLSTM |author=John Mannes |accessdate=March 2, 2018}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|April 6}} || OpenAI's Evolution Strategies || Research || OpenAI revisits an older field called “{{w|neuroevolution}}” and a subset of its algorithms called “evolution strategies”, which are aimed at solving optimization problems. After one hour of training on an Atari challenge, the algorithm reaches a level of mastery that took a reinforcement learning system published by DeepMind in 2016 a whole day to learn. On a walking problem, the system takes 10 minutes, compared with 10 hours for DeepMind's approach.<ref>{{cite web |title=OpenAI Just Beat Google DeepMind at Atari With an Algorithm From the 80s |url=https://singularityhub.com/2017/04/06/OpenAI-just-beat-the-hell-out-of-deepmind-with-an-algorithm-from-the-80s/ |website=singularityhub.com |accessdate=29 June 2019}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|May 15}} || OpenAI releases Roboschool || Product release || OpenAI releases Roboschool, {{w|open-source software}} for [[w:Robotics simulator|robot simulation]] that is integrated with OpenAI Gym. It provides a range of environments for controlling robots in simulation, including modified versions of existing MuJoCo environments as well as new, more challenging tasks. Roboschool uses the Bullet Physics Engine and offers free alternatives to the MuJoCo environments, removing the need for a paid MuJoCo license. The software supports training multiple agents together in the same environment, allowing multiplayer interaction and learning. It also introduces interactive control environments in which robots must navigate toward a moving flag, adding complexity to locomotion problems. Trained policies are provided for these environments. Overall, Roboschool offers a platform for robotics research, {{w|simulation}}, and control policy development within the OpenAI Gym framework.<ref>{{cite web |title=Roboschool |url=https://openai.com/blog/roboschool/ |website=openai.com |accessdate=5 April 2020}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|May 24}} || OpenAI releases Baselines || Product release || OpenAI releases Baselines, a collection of high-quality implementations of {{w|reinforcement learning}} {{w|algorithm}}s. The implementations serve as reliable reference points that researchers can use to replicate results, improve on them, and explore new ideas in reinforcement learning. The DQN implementation and its variants in OpenAI Baselines reach performance levels comparable to those reported in the published papers. The implementations are intended as a foundation for incorporating novel approaches and as a way to compare new methods against established ones.<ref>{{cite web |url=https://blog.OpenAI.com/OpenAI-baselines-dqn/ |publisher=OpenAI Blog |title=OpenAI Baselines: DQN |date=November 28, 2017 |accessdate=May 5, 2018}}</ref><ref>{{cite web |url=https://github.com/OpenAI/baselines |publisher=GitHub |title=OpenAI/baselines |accessdate=May 5, 2018}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|June 12}} || Deep RL from human preferences || Research || OpenAI-affiliated researchers present a study on deep {{w|reinforcement learning}} (RL) systems. They propose a way to communicate complex goals to RL systems using human preferences between pairs of trajectory segments. Their approach solves complex RL tasks, such as [[w:Atari 2600|Atari]] games and simulated robot locomotion, without access to a reward function. It requires human feedback on less than one percent of the agent's interactions with the environment, which greatly reduces the cost of human oversight. The authors also show the approach's flexibility by training complex novel behaviors with about an hour of human time. This work goes beyond previous work on learning from human feedback by tackling considerably more complex behaviors and environments.<ref>{{cite web |url=https://arxiv.org/abs/1706.03741 |title=[1706.03741] Deep reinforcement learning from human preferences |accessdate=March 2, 2018}}</ref><ref>{{cite web |url=https://www.gwern.net/newsletter/2017/06 |author=gwern |date=June 3, 2017 |title=June 2017 news - Gwern.net |accessdate=March 2, 2018}}</ref><ref>{{cite web |url=https://www.wired.com/story/two-giants-of-ai-team-up-to-head-off-the-robot-apocalypse/ |title=Two Giants of AI Team Up to Head Off the Robot Apocalypse |publisher=[[wikipedia:WIRED|WIRED]] |accessdate=March 2, 2018 |quote=A new paper from the two organizations on a machine learning system that uses pointers from humans to learn a new task, rather than figuring out its own—potentially unpredictable—approach, follows through on that. Amodei says the project shows it's possible to do practical work right now on making machine learning systems less able to produce nasty surprises.}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|June 28}} || OpenAI releases mujoco-py || Open sourcing || OpenAI open-sources mujoco-py, a Python library for robotic simulation based on the MuJoCo engine. It offers parallel simulations, GPU-accelerated rendering, texture randomization, and VR interaction. The new version provides significant performance improvements, allowing for faster trajectory optimization and {{w|reinforcement learning}}. Beginners can use the MjSim class, while advanced users have access to lower-level interfaces.<ref>{{cite web |title=Faster Physics in Python |url=https://openai.com/blog/faster-robot-simulation-in-python/ |website=openai.com |accessdate=5 April 2020}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|June}} || OpenAI-DeepMind safety partnership || Partnership || OpenAI partners with {{w|DeepMind}}’s safety team to develop an algorithm that can infer what humans want by being told which of two proposed behaviors is better. The algorithm uses small amounts of human feedback to solve modern {{w|reinforcement learning}} environments.<ref>{{cite web |title=Learning from Human Preferences |url=https://OpenAI.com/blog/deep-reinforcement-learning-from-human-preferences/ |website=OpenAI.com |accessdate=29 June 2019}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|July 27}} || OpenAI introduces parameter noise || Research || OpenAI publishes a blog post discussing the benefits of adding adaptive noise to the parameters of reinforcement learning algorithms, specifically in the context of exploration. The technique, called parameter noise, enhances the efficiency of exploration by injecting randomness directly into the parameters of the agent's neural network policy. Unlike traditional action space noise, parameter noise ensures that the agent's exploration is consistent across different time steps. The authors demonstrate that parameter noise can significantly improve the performance of reinforcement learning algorithms, leading to higher scores and more effective exploration. They address challenges related to the sensitivity of network layers, changes in sensitivity over time, and determining the appropriate noise scale. The article also provides baseline code and benchmarks for various algorithms, showcasing the benefits of parameter noise in different tasks.<ref>{{cite web |title=Better Exploration with Parameter Noise |url=https://openai.com/blog/better-exploration-with-parameter-noise/ |website=openai.com |accessdate=5 April 2020}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|August 12}} || {{w|Reinforcement learning}} || Achievement || OpenAI's Dota 2 bot, trained through self-play, defeats top professional players in one-on-one matches at The International, a major eSports event, and remains undefeated against the world's best Dota 2 players. Although 1v1 matches are less complex than professional games, OpenAI is reportedly working on a bot capable of playing larger 5v5 matches. Elon Musk, who watches the event, would express concerns about unregulated AI, emphasizing its potential dangers.<ref>{{cite web |url=https://techcrunch.com/2017/08/12/OpenAI-bot-remains-undefeated-against-worlds-greatest-dota-2-players/ |date=August 12, 2017 |publisher=TechCrunch |title=OpenAI bot remains undefeated against world's greatest Dota 2 players |author=Jordan Crook |accessdate=March 2, 2018}}</ref><ref>{{cite web |url=https://www.theverge.com/2017/8/14/16143392/dota-ai-OpenAI-bot-win-elon-musk |date=August 14, 2017 |publisher=The Verge |title=Did Elon Musk's AI champ destroy humans at video games? It's complicated |accessdate=March 2, 2018}}</ref><ref>{{cite web |url=http://www.businessinsider.com/the-international-dota-2-OpenAI-bot-beats-dendi-2017-8 |date=August 11, 2017 |title=Elon Musk's $1 billion AI startup made a surprise appearance at a $24 million video game tournament — and crushed a pro gamer |publisher=Business Insider |accessdate=March 3, 2018}}</ref><ref>{{cite web |title=Dota 2 |url=https://openai.com/research/dota-2 |website=openai.com |access-date=15 March 2023}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|August 13}} || NYT highlights OpenAI's AI safety work || Coverage || ''{{W|The New York Times}}'' publishes a story covering the AI safety work (by Dario Amodei, Geoffrey Irving, and Paul Christiano) at OpenAI.<ref>{{cite web |url=https://www.nytimes.com/2017/08/13/technology/artificial-intelligence-safety-training.html |date=August 13, 2017 |publisher=[[wikipedia:The New York Times|The New York Times]] |title=Teaching A.I. Systems to Behave Themselves |author=Cade Metz |accessdate=May 5, 2018}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|August 18}} || OpenAI releases ACKTR and A2C || Product release || OpenAI releases two new Baselines implementations: ACKTR and A2C. A2C is a synchronous, deterministic variant of Asynchronous Advantage Actor Critic (A3C) that gives equal performance and is efficient for single-GPU and CPU-based implementations. ACKTR (Actor Critic using Kronecker-Factored Trust Region) is more sample-efficient than TRPO and A2C. It achieves this by following the natural gradient direction, while requiring only slightly more computation than A2C per update: it is just 10-25% more computationally expensive than standard gradient updates. OpenAI also publishes benchmarks comparing ACKTR with A2C, PPO, and ACER on various tasks, showing ACKTR's competitive performance.<ref>{{cite web |title=OpenAI Baselines: ACKTR & A2C |url=https://openai.com/blog/baselines-acktr-a2c/ |website=openai.com |accessdate=5 April 2020}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{Dts|September 13}} || OpenAI introduces LOLA for multi-agent RL || Research || OpenAI publishes a paper introducing "Learning with Opponent-Learning Awareness" (LOLA), a new method for training agents in multi-agent settings. The method accounts for how an agent's policy affects the learning of the other agents in the environment. The paper shows that LOLA leads to the emergence of cooperation in the iterated prisoner's dilemma, whereas naive independent learning does not. The LOLA update rule can be computed efficiently using an extension of the policy gradient estimator, making it suitable for model-free RL. The method is also applied to a grid world task with an embedded social dilemma, using recurrent policies and opponent modeling.<ref>{{cite web |url=https://arxiv.org/abs/1709.04326 |title=[1709.04326] Learning with Opponent-Learning Awareness |accessdate=March 2, 2018}}</ref><ref>{{cite web |url=https://www.gwern.net/newsletter/2017/09 |author=gwern |date=August 16, 2017 |title=September 2017 news - Gwern.net |accessdate=March 2, 2018}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|October 11}} || OpenAI unveils RoboSumo || Product release || OpenAI announces RoboSumo, a simple {{w|sumo}}-wrestling video game developed to make artificial intelligence (AI) software more capable. In the game, robots controlled by machine learning algorithms compete against each other. Through {{w|trial and error}}, the AI agents learn to play and develop strategies to outmaneuver their opponents. The project aims to push machine learning beyond widely used techniques that rely on labeled example data, focusing instead on reinforcement learning, in which software learns through trial and error to achieve specific goals. OpenAI believes that competition among AI agents can foster more complex problem-solving and enable faster progress. The researchers also test the approach in other games and scenarios, such as spider-like robots and soccer penalty shootouts.<ref>{{cite web |url=https://www.wired.com/story/ai-sumo-wrestlers-could-make-future-robots-more-nimble/ |title=AI Sumo Wrestlers Could Make Future Robots More Nimble |publisher=[[wikipedia:WIRED|WIRED]] |accessdate=March 3, 2018}}</ref><ref>{{cite web |url=http://www.businessinsider.com/elon-musk-OpenAI-virtual-robots-learn-sumo-wrestle-soccer-sports-ai-tech-science-2017-10 |first1=Alexandra |last1=Appolonia |first2=Justin |last2=Gmoser |date=October 20, 2017 |title=Elon Musk's artificial intelligence company created virtual robots that can sumo wrestle and play soccer |publisher=Business Insider |accessdate=March 3, 2018}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{Dts|November 6}} || OpenAI researchers form Embodied Intelligence || Team || ''{{W|The New York Times}}'' reports that Pieter Abbeel (a researcher at OpenAI) and three other researchers from Berkeley and OpenAI have left to start their own company, Embodied Intelligence.<ref>{{cite web |url=https://www.nytimes.com/2017/11/06/technology/artificial-intelligence-start-up.html |date=November 6, 2017 |publisher=[[wikipedia:The New York Times|The New York Times]] |title=A.I. Researchers Leave Elon Musk Lab to Begin Robotics Start-Up |author=Cade Metz |accessdate=May 5, 2018}}</ref> | ||

| + | |- | ||

| + | | 2017 || {{dts|December 6}} || {{w|Neural network}}s || Product release || OpenAI releases highly optimized GPU kernels for neural network architectures with block-sparse weights. Depending on the chosen sparsity, these kernels can run significantly faster than cuBLAS or cuSPARSE. They enable the training of neural networks with a large number of hidden units, with computational cost proportional to the number of non-zero weights. The release opens up opportunities for training large, efficient, high-performing neural networks, with potential applications in fields such as text analysis and image generation.<ref>{{cite web |title=Block-Sparse GPU Kernels |url=https://openai.com/blog/block-sparse-gpu-kernels/ |website=openai.com |accessdate=5 April 2020}}</ref> | ||

| + | |- | ||

| + | | 2017 || Late year || Transition to for-profit structure || || Discussions between OpenAI's leadership and Elon Musk lead to the decision to establish a for-profit entity to attract the necessary funding. Musk expresses a desire for majority equity and control over the organization, emphasizing the urgency of building a competitor to major players like {{w|Google}} and {{w|DeepMind}}.<ref name="index"/> | ||

| + | |- | ||

| + | | 2018 || Early February || Proposal to merge with Tesla || Notable comment || Elon Musk suggests in an email that OpenAI should merge with [[w:Tesla, Inc.|Tesla]], referring to it as a "cash cow" that could provide financial support for AGI development. He believes Tesla can serve as a viable competitor to Google, although he acknowledges the challenges of this strategy.<ref name="index"/> | ||

| + | |- | ||

| + | | 2018 || {{dts|February 20}} || AI ethics, security || Publication || OpenAI co-authors a paper forecasting the potential misuse of AI technology by malicious actors and proposing ways to prevent and mitigate these threats. The report makes high-level recommendations for companies, research organizations, individual practitioners, and governments to ensure a safer world, including acknowledging AI's dual-use nature, learning from {{w|cybersecurity}} practices, and involving a broader cross-section of society in discussions. The paper highlights concrete scenarios where AI can be maliciously used, such as {{w|cybercriminal}}s using {{w|neural network}}s to create {{w|computer virus}}es with automatic exploit generation capabilities and {{w|rogue state}}s using AI-augmented surveillance systems to pre-emptively arrest people who fit a predictive risk profile.<ref>{{cite web |url=https://arxiv.org/abs/1802.07228 |title=[1802.07228] The Malicious Use of Artificial Intelligence: Forecasting, Prevention, and Mitigation |accessdate=February 24, 2018}}</ref><ref>{{cite web |url=https://blog.OpenAI.com/preparing-for-malicious-uses-of-ai/ |publisher=OpenAI Blog |title=Preparing for Malicious Uses of AI |date=February 21, 2018 |accessdate=February 24, 2018}}</ref><ref>{{cite web |url=https://maliciousaireport.com/ |author=Malicious AI Report |publisher=Malicious AI Report |title=The Malicious Use of Artificial Intelligence |accessdate=February 24, 2018}}</ref><ref name="musk-leaves" /><ref>{{cite web |url=https://www.wired.com/story/why-artificial-intelligence-researchers-should-be-more-paranoid/ |title=Why Artificial Intelligence Researchers Should Be More Paranoid |first=Tom |last=Simonite |publisher=[[wikipedia:WIRED|WIRED]] |accessdate=March 2, 2018}}</ref> | ||

| + | |- | ||

| + | | 2018 || {{dts|February 20}} || Donors/advisors || Team || OpenAI announces changes in donors and advisors. New donors are: {{W|Jed McCaleb}}, {{W|Gabe Newell}}, {{W|Michael Seibel}}, {{W|Jaan Tallinn}}, and {{W|Ashton Eaton}} and {{W|Brianne Theisen-Eaton}}. {{W|Reid Hoffman}} is "significantly increasing his contribution". Pieter Abbeel (previously at OpenAI), {{W|Julia Galef}}, and Maran Nelson become advisors. {{W|Elon Musk}} departs the board but remains as a donor and advisor.<ref>{{cite web |url=https://blog.OpenAI.com/OpenAI-supporters/ |publisher=OpenAI Blog |title=OpenAI Supporters |date=February 21, 2018 |accessdate=March 1, 2018}}</ref><ref name="musk-leaves">{{cite web |url=https://www.theverge.com/2018/2/21/17036214/elon-musk-OpenAI-ai-safety-leaves-board |date=February 21, 2018 |publisher=The Verge |title=Elon Musk leaves board of AI safety group to avoid conflict of interest with Tesla |accessdate=March 2, 2018}}</ref> | ||

| + | |- | ||

| + | | 2018 || {{dts|February 26}} || Robotics || Product release || OpenAI announces a research release that includes eight simulated robotics environments and a reinforcement learning algorithm called Hindsight Experience Replay (HER). The environments are more challenging than existing ones and involve realistic tasks. HER allows learning from failure by substituting achieved goals for the original ones, enabling agents to learn how to achieve arbitrary goals. The release also includes requests for further research to improve HER and reinforcement learning. The goal-based environments require some changes to the Gym API and can be used with existing reinforcement learning algorithms. Overall, this release provides new opportunities for robotics research and advancements in reinforcement learning.<ref>{{cite web |title=Ingredients for Robotics Research |url=https://openai.com/blog/ingredients-for-robotics-research/ |website=openai.com |accessdate=5 April 2020}}</ref> | ||

| + | |- | ||

| + | | 2018 || {{dts|March 3}} || {{w|Hackathon}} || Event hosting || OpenAI hosts its first hackathon. Applicants include high schoolers, industry practitioners, engineers, university researchers, and others, with interests ranging from healthcare to {{w|AGI}}.<ref>{{cite web |url=https://blog.OpenAI.com/hackathon/ |publisher=OpenAI Blog |title=OpenAI Hackathon |date=February 24, 2018 |accessdate=March 1, 2018}}</ref><ref>{{cite web |url=https://blog.OpenAI.com/hackathon-follow-up/ |publisher=OpenAI Blog |title=Report from the OpenAI Hackathon |date=March 15, 2018 |accessdate=May 5, 2018}}</ref> | ||

| + | |- | ||

| + | | 2018 || {{Dts|April 5}}{{snd}}June 5 || {{w|Reinforcement learning}} || Event hosting || The OpenAI Retro Contest takes place.<ref>{{cite web |url=https://contest.OpenAI.com/ |title=OpenAI Retro Contest |publisher=OpenAI |accessdate=May 5, 2018}}</ref><ref>{{cite web |url=https://blog.OpenAI.com/retro-contest/ |publisher=OpenAI Blog |title=Retro Contest |date=April 13, 2018 |accessdate=May 5, 2018}}</ref> It is a transfer-learning competition in which participants use reinforcement learning to train agents that play levels of the ''{{w|Sonic the Hedgehog}}'' games; the agents are then evaluated on levels they did not see during training. Participants interact with and train their agents through Gym Retro (the gym-retro package), a platform for running classic video games within OpenAI Gym. The goal is to develop agents that generalize from previous experience and adapt to new game dynamics rather than memorizing specific levels.<ref>{{cite web |title=[OpenAI Retro Contest] Getting Started |url=https://medium.com/@dnk8n/openai-retro-contest-getting-started-62a9e5cc3801 |website=medium.com |access-date=31 May 2023}}</ref> | ||

| + | |- | ||

| + | | 2018 || {{dts|April 9}} || AI Ethics, AI Governance, AI Safety || Commitment || OpenAI releases a charter stating that if a value-aligned, safety-conscious project comes close to building artificial general intelligence before OpenAI does, the organization commits to stop competing with and start assisting that project. The charter also states that OpenAI expects safety and security concerns to reduce its traditional publishing in the future.<ref>{{cite web |url=https://blog.OpenAI.com/OpenAI-charter/ |publisher=OpenAI Blog |title=OpenAI Charter |date=April 9, 2018 |accessdate=May 5, 2018}}</ref><ref>{{cite web |url=https://www.lesswrong.com/posts/e5mFQGMc7JpechJak/OpenAI-charter |title=OpenAI charter |accessdate=May 5, 2018 |date=April 9, 2018 |author=wunan |publisher=[[wikipedia:LessWrong|LessWrong]]}}</ref><ref>{{cite web |url=https://www.reddit.com/r/MachineLearning/comments/8azk2n/d_OpenAI_charter/ |publisher=reddit |title=[D] OpenAI Charter • r/MachineLearning |accessdate=May 5, 2018}}</ref><ref>{{cite web |url=https://news.ycombinator.com/item?id=16794194 |title=OpenAI Charter |website=Hacker News |accessdate=May 5, 2018}}</ref><ref>{{cite web |url=https://thenextweb.com/artificial-intelligence/2018/04/10/the-ai-company-elon-musk-co-founded-is-trying-to-create-sentient-machines/ |title=The AI company Elon Musk co-founded intends to create machines with real intelligence |publisher=The Next Web |date=April 10, 2018 |author=Tristan Greene |accessdate=May 5, 2018}}</ref> | ||

| + | |- | ||

| + | | 2018 || {{Dts|April 19}} || Team salary || Financial || ''{{W|The New York Times}}'' publishes a story detailing the salaries of researchers at OpenAI, using information from OpenAI's 2016 {{W|Form 990}}. The salaries include $1.9 million paid to {{W|Ilya Sutskever}} and $800,000 paid to {{W|Ian Goodfellow}} (hired in March of that year).<ref>{{cite web |url=https://www.nytimes.com/2018/04/19/technology/artificial-intelligence-salaries-OpenAI.html |date=April 19, 2018 |publisher=[[wikipedia:The New York Times|The New York Times]] |title=A.I. Researchers Are Making More Than $1 Million, Even at a Nonprofit |author=Cade Metz |accessdate=May 5, 2018}}</ref><ref>{{cite web |url=https://www.reddit.com/r/reinforcementlearning/comments/8di9yt/ai_researchers_are_making_more_than_1_million/dxnc76j/ |publisher=reddit |title="A.I. Researchers Are Making More Than $1 Million, Even at a Nonprofit [OpenAI]" • r/reinforcementlearning |accessdate=May 5, 2018}}</ref><ref>{{cite web |url=https://news.ycombinator.com/item?id=16880447 |title=gwern comments on A.I. Researchers Are Making More Than $1M, Even at a Nonprofit |website=Hacker News |accessdate=May 5, 2018}}</ref> | ||

| + | |- | ||

| + | | 2018 || {{Dts|May 2}} || AI training, AI goal learning, self-play || Research || A paper by Geoffrey Irving, Paul Christiano, and Dario Amodei explores an approach to training AI systems to learn complex human goals and preferences. Methods that rely on direct human judgment may fail when tasks are too complicated for a human to judge directly. To address this, the authors propose training agents through self-play on a [[w:Zero-sum game|zero-sum debate game]], in which two agents take turns making statements and a human judge decides which agent gave the more true and useful information. In an initial experiment on the [[w:MNIST database|MNIST dataset]], agents compete to convince a sparse classifier, and the debate significantly improves the classifier's accuracy. The authors also discuss theoretical and practical considerations of the debate model and suggest future experiments to further explore its properties.<ref>{{cite web |url=https://arxiv.org/abs/1805.00899 |title=[1805.00899] AI safety via debate |accessdate=May 5, 2018}}</ref><ref>{{cite web |url=https://blog.OpenAI.com/debate/ |publisher=OpenAI Blog |title=AI Safety via Debate |date=May 3, 2018 |first1=Geoffrey |last1=Irving |first2=Dario |last2=Amodei |accessdate=May 5, 2018}}</ref> | ||

| + | |- | ||

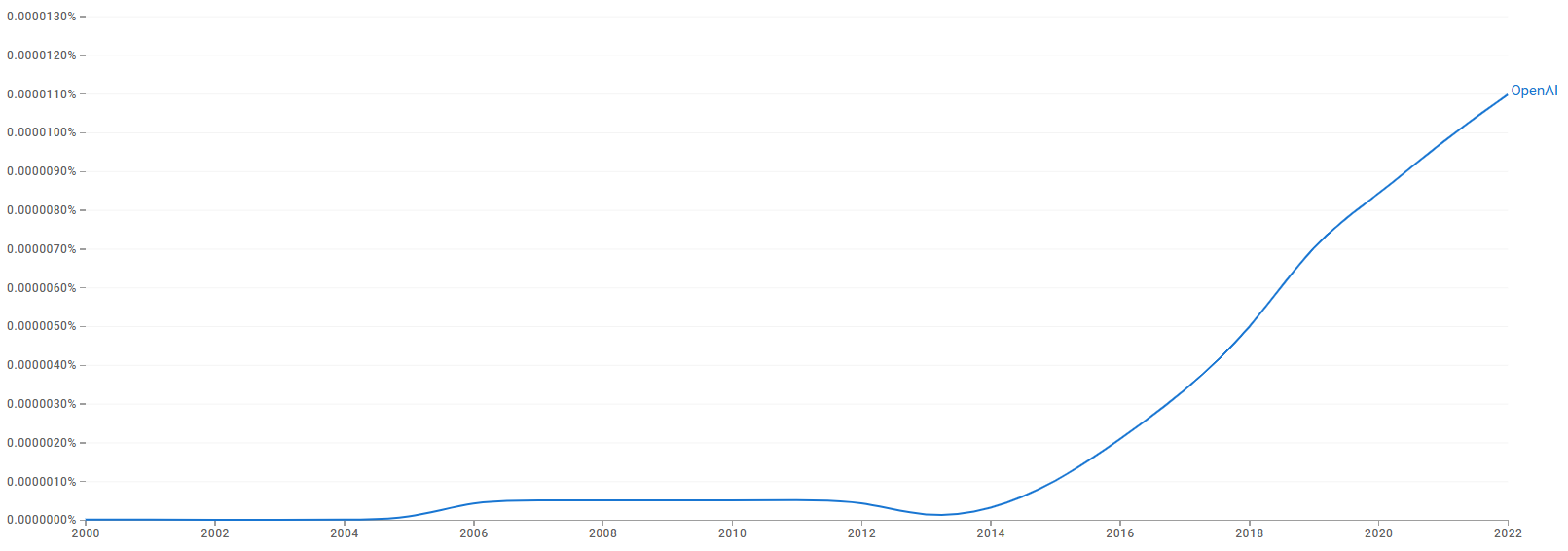

| + | | 2018 || {{dts|May 16}} || {{w|Computation}} || Research || OpenAI releases an analysis showing that the amount of compute used in the largest AI training runs has been increasing exponentially since 2012, with a 3.4-month doubling time, far faster than {{w|Moore's Law}}, which had a two-year doubling period. The authors argue that this growth in compute plays a crucial role in advancing AI capabilities and expect the trend to continue, driven by hardware advances and algorithmic innovation, although cost and chip efficiency will eventually limit it. They stress the importance of addressing the implications of this trend, including AI safety and the malicious use of AI. They also note that modest amounts of compute have led to significant AI breakthroughs, so massive compute is not always a requirement for important results.<ref>{{cite web |title=AI and Compute |url=https://openai.com/blog/ai-and-compute/ |website=openai.com |accessdate=5 April 2020}}</ref> | ||

| + | |- | ||

| + | | 2018 || {{dts|June 11}} || {{w|Unsupervised learning}} || Research || OpenAI announces that it has obtained state-of-the-art results on a suite of diverse language tasks with a scalable, task-agnostic system that combines transformers with unsupervised pre-training.<ref>{{cite web |title=Improving Language Understanding with Unsupervised Learning |url=https://openai.com/blog/language-unsupervised/ |website=openai.com |accessdate=5 April 2020}}</ref> | ||

| + | |- | ||